Never Lose Data Again

BeeGFS Buddy Mirroring automatically replicates data, handles storage server failures transparently for running applications, and provides automatic self-healing.

Efficient Persistent Access

Create multiple storage pools and assign projects to them by performance requirement, so each project gets exactly what it needs.

Scale On Demand

Simply add more disks to increase capacity. With BeeOND, you can also create on-demand shared parallel filesystems on a per-job basis.

Simple and Scalable on any Hardware

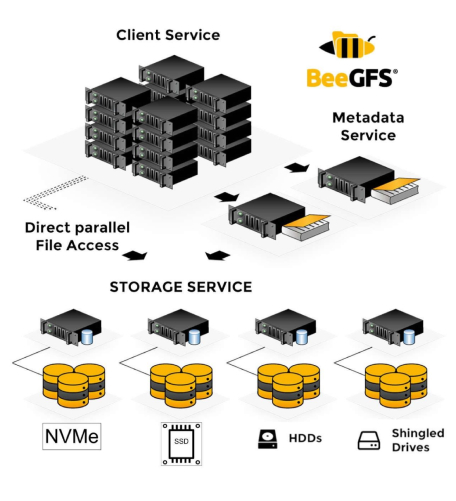

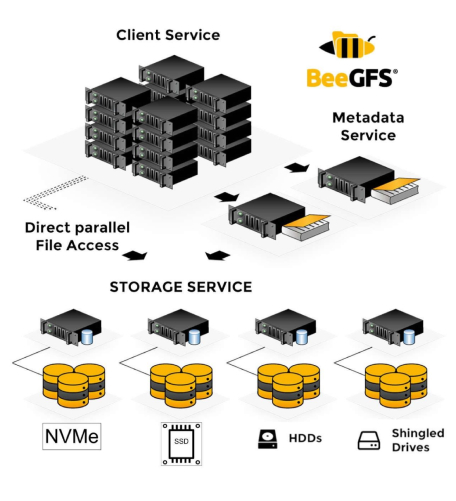

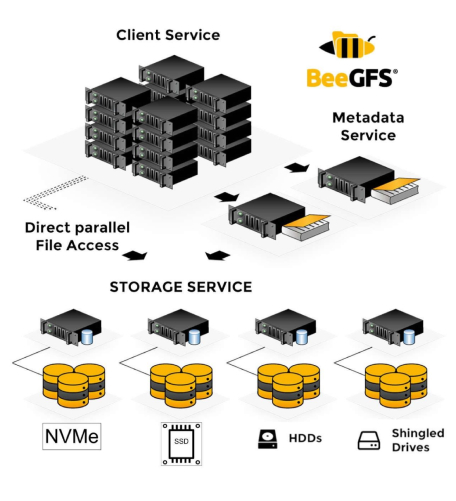

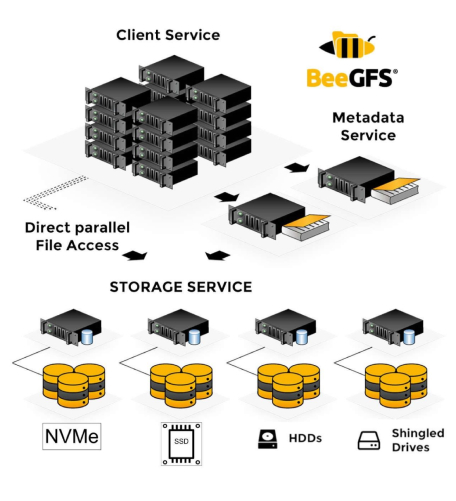

BeeGFS Architecture

Each Exxact BeeGFS Storage Cluster Includes

1x 1U Management Node

• 96GB Memory

• 960GB SSD

• 24TB HDD

1x 1U Metadata Node

• 96GB Memory

• 960GB SSD

• 48TB HDD

1x 1U Networking

• 24 Ports

• Optional 25GbE/40GbE/50GbE

• Optional EDR IB/100GbE

1 PB 16U Storage Cluster

1.5 PB 24U Storage Cluster

2 PB 32U Storage Cluster

See How it Scales

Specs: 2x Intel Xeon X5660, 48 GB RAM | 4x Intel 510 Series SSD (RAID 0) | Ext4, QDR InfiniBand | Scientific Linux 6.3 | Kernel 2.6.32-279 and FhGFS 2012.10-beta1

Performed on Fraunhofer Seislab: 25 nodes | 3-tier memory: 1 TB RAM, 20 TB SSD, 120 TB HDD

*Single-node local performance without BeeGFS: 1,332 MB/s (write) and 1,317 MB/s (read).