ARM Efficiency

NVIDIA Grace Hopper Superchip packs 72 ARM cores to deliver leading per-thread performance and higher energy efficiency than traditional x86 CPUs.

Fewer Bottlenecks

The NVIDIA NVLink-C2C interconnect is the heart of the superchip. It provides 900GB/s of bidirectional bandwidth between Grace and Hopper, which increases performance by minimizing data-transfer latency between the CPU and GPU.

Scalability

The NVIDIA NVLink Switch System scales the DGX GH200 by connecting 256 NVIDIA Grace Hopper Superchips into a seamless, high-bandwidth system with a 1:1 CPU-to-GPU ratio.
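
As a rough illustration of what a 1:1 CPU-to-GPU ratio means at this scale, the sketch below totals CPU and GPU memory across 256 superchips. It takes per-superchip capacities from the GH200 specifications and assumes every superchip uses the same GPU memory option. The helper `aggregate_memory_gb` is hypothetical, and the totals are simple sums, not a statement of any shipping system's usable memory.

```python
# Illustrative arithmetic only: aggregate memory for an NVLink Switch System
# of GH200 superchips with a 1:1 CPU-to-GPU ratio. Capacities come from the
# GH200 specifications; the choice of GPU memory option is an assumption.

SUPERCHIPS = 256                          # superchips connected by the switch system
LPDDR5X_GB = 480                          # maximum Grace CPU memory per superchip
HBM_OPTIONS_GB = {"HBM3": 96, "HBM3e": 144}


def aggregate_memory_gb(superchips: int, cpu_gb: int, gpu_gb: int) -> dict:
    """Return CPU/GPU counts and memory totals (in GB) for a uniform system."""
    per_chip = cpu_gb + gpu_gb
    return {
        "cpus": superchips,               # one Grace CPU per superchip
        "gpus": superchips,               # one Hopper GPU per superchip (1:1)
        "per_superchip_gb": per_chip,
        "total_gb": superchips * per_chip,
    }


for name, hbm_gb in HBM_OPTIONS_GB.items():
    totals = aggregate_memory_gb(SUPERCHIPS, LPDDR5X_GB, hbm_gb)
    print(f"{name}: {totals['per_superchip_gb']} GB per superchip, "
          f"{totals['total_gb']:,} GB across {totals['gpus']} GPUs "
          f"and {totals['cpus']} CPUs")
```

Summing capacities this way ignores how memory is partitioned or reserved in practice. It only shows how the per-superchip figures scale linearly with superchip count.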

Fuel Discovery with NVIDIA Grace Hopper Superchip Platforms

NVIDIA Grace Hopper 1U Server

Liquid-Cooled NVIDIA Grace Hopper 1U Server

Dual-Node NVIDIA Grace Hopper 1U Server

Power Your Infrastructure with NVIDIA Grace CPU Platforms

Dual-Node NVIDIA Grace CPU Server (Liquid-Cooled)

Quad-GPU Single NVIDIA Grace CPU Server

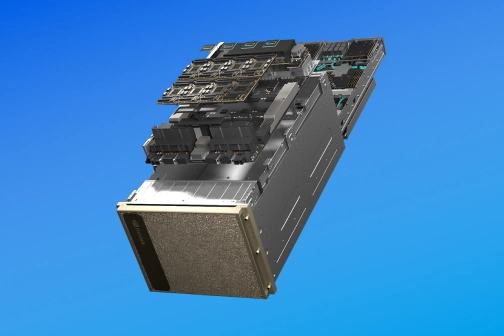

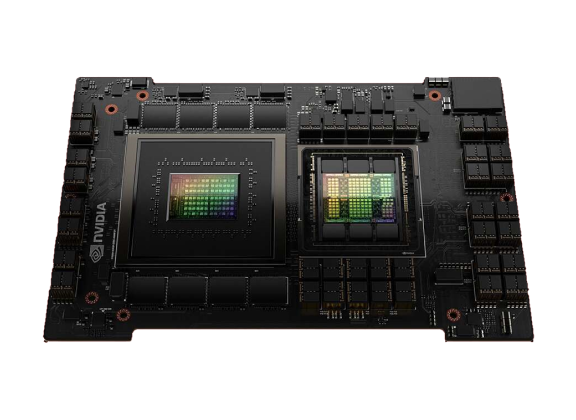

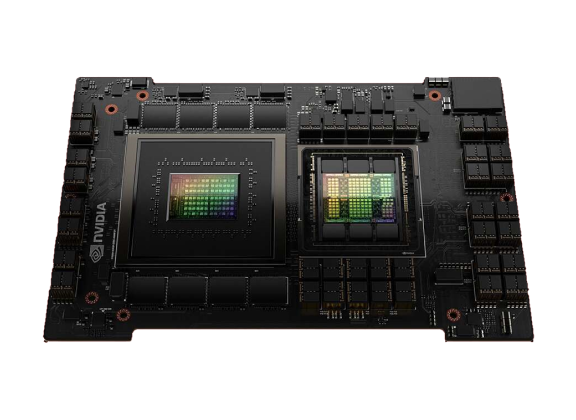

Supercharge with a Superchip

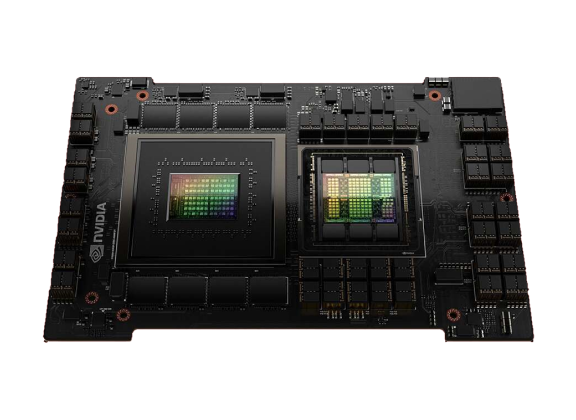

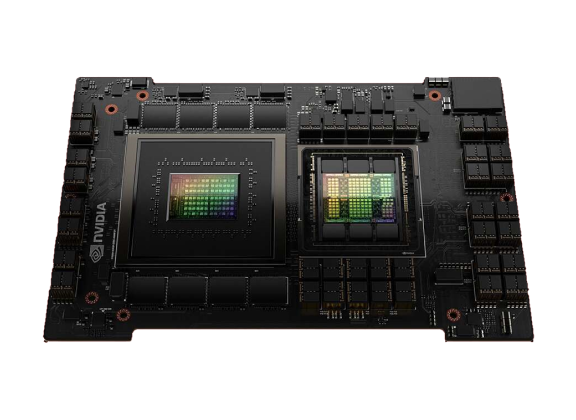

Connecting Two Groundbreaking Architectures

NVIDIA Grace CPU, the brain of the superchip, is NVIDIA's first data center CPU. It is built with 72 ARM Neoverse V2 CPU cores and up to 480GB of LPDDR5X memory. Grace delivers 53% more memory bandwidth than traditional DDR5 memory at one-eighth the power per GB/s, for optimal energy efficiency and bandwidth.
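
To make the efficiency claim concrete, assume memory power equals bandwidth times power per GB/s. Under that simplifying assumption, 53% more bandwidth at one-eighth the power per GB/s works out to roughly a fifth of the DDR5 baseline's memory power when both run at full bandwidth. The sketch normalizes the baseline to 1. The helper `relative_memory_power` is hypothetical, and the result is arithmetic, not a measurement.

```python
# Illustrative arithmetic: relative memory power of LPDDR5X vs. a DDR5
# baseline, assuming power = bandwidth * (power per GB/s) and that both
# memory systems run at their full bandwidth. Baseline normalized to 1.

def relative_memory_power(bandwidth_gain: float, power_per_gbps_ratio: float) -> float:
    """Return Power(new) / Power(baseline) under the linear power model."""
    relative_bandwidth = 1.0 + bandwidth_gain        # e.g. 1.53x the baseline
    return relative_bandwidth * power_per_gbps_ratio  # scale by cost per GB/s


ratio = relative_memory_power(bandwidth_gain=0.53, power_per_gbps_ratio=1 / 8)
print(f"LPDDR5X memory power vs. DDR5 baseline at full bandwidth: {ratio:.1%}")
```

Real memory power also includes idle and refresh components that do not scale with bandwidth. Treat the result as an upper-level intuition, not a system power figure.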

The NVIDIA Hopper GPU features the groundbreaking Transformer Engine, which supports mixed FP8 and FP16 precision formats. With mixed precision, Hopper intelligently manages accuracy while delivering dramatic gains in AI performance: 9x faster training and 30x faster inference than the previous generation.
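
Mixed-precision engines have to manage FP8's narrow range. The sketch below is a simplified, pure-Python model of per-tensor FP8 scaling:

1. The tensor is scaled so its largest magnitude lands at the top of the FP8 E4M3 range.
2. It is rounded to E4M3.
3. It is scaled back.

The model assumes round-to-nearest-even, saturation at 448, and subnormal support. The helper names `to_e4m3` and `fp8_roundtrip` are hypothetical. This is not the Transformer Engine API, which adds further machinery such as tracking an amax history across steps.

```python
import math

# Simplified model of FP8 E4M3 (4 exponent bits, 3 mantissa bits).
# Assumptions: round to nearest even, saturate at the largest finite value
# (448), subnormals down to 2**-9. Not the Transformer Engine API.
E4M3_MAX = 448.0
E4M3_MIN_EXP = -6        # exponent of the smallest normal value, 2**-6
MANTISSA_BITS = 3


def to_e4m3(x: float) -> float:
    """Round a float to the nearest representable E4M3 value (saturating)."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = abs(x)
    if mag >= E4M3_MAX:
        return sign * E4M3_MAX
    _, e = math.frexp(mag)                   # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)           # subnormals share the 2**-6 binade spacing
    step = 2.0 ** (exp - MANTISSA_BITS)      # gap between adjacent E4M3 values
    # Python's round() breaks ties to even, matching round-to-nearest-even.
    return sign * min(round(mag / step) * step, E4M3_MAX)


def fp8_roundtrip(values: list[float]) -> tuple[float, list[float]]:
    """Scale so max |v| maps to E4M3_MAX, quantize, then dequantize.

    Returns (scale, dequantized values)."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    quantized = [to_e4m3(v * scale) for v in values]
    return scale, [q / scale for q in quantized]


activations = [0.003, -0.41, 1.7, 12.5]
scale, restored = fp8_roundtrip(activations)
for original, approx in zip(activations, restored):
    print(f"{original:>8} -> {approx:.5f}")
```

Without the scale factor, a value like 0.003 would fall into E4M3's subnormal range and lose most of its precision. Scaling first keeps each value's relative error within about 1/16 for this input.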

The memory-coherent, high-bandwidth, low-latency NVLink-C2C interconnect is the heart of the Grace Hopper Superchip. It enables up to 900 GB/s of total bandwidth, 7x faster than a traditional PCIe Gen5 x16 link. Address Translation Services (ATS) let Grace and Hopper share a single per-process page table, so CPU and GPU threads can access all system-allocated memory. This minimizes latency and provides a scalable, distributed caching system that improves I/O performance.
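
Here is a back-of-the-envelope check of the 7x figure. A PCIe Gen5 x16 link signals at 32 GT/s per lane in each direction with 128b/130b encoding. That gives about 126 GB/s across both directions before protocol overhead, compared with 900 GB/s total for NVLink-C2C. The 96 GB working set in the transfer-time estimate is a hypothetical value. The helper `pcie_bidirectional_gbps` is illustrative, and real PCIe throughput is somewhat lower than this raw figure.

```python
# Back-of-the-envelope comparison of NVLink-C2C with a PCIe Gen5 x16 link.
# PCIe Gen5: 32 GT/s per lane per direction, 128b/130b encoding. Protocol
# overheads (packet headers, flow control) are ignored, so real PCIe
# throughput is lower than this estimate.

PCIE5_GT_PER_S = 32.0            # billions of transfers per second per lane
PCIE_LANES = 16
ENCODING = 128 / 130             # 128b/130b line-code efficiency
NVLINK_C2C_BIDIR_GBPS = 900.0    # total bandwidth, both directions


def pcie_bidirectional_gbps(gt_per_s: float, lanes: int, efficiency: float) -> float:
    """Raw bidirectional PCIe bandwidth in GB/s (one bit per lane per transfer)."""
    per_direction = gt_per_s * lanes * efficiency / 8   # bits -> bytes
    return 2 * per_direction


pcie = pcie_bidirectional_gbps(PCIE5_GT_PER_S, PCIE_LANES, ENCODING)
print(f"PCIe Gen5 x16: {pcie:.0f} GB/s bidirectional")
print(f"NVLink-C2C advantage: {NVLINK_C2C_BIDIR_GBPS / pcie:.1f}x")

# Hypothetical 96 GB working set moved in one direction, which gets half
# of each link's total bidirectional bandwidth.
working_set_gb = 96
print(f"PCIe Gen5 x16 transfer: {working_set_gb / (pcie / 2):.2f} s")
print(f"NVLink-C2C transfer:    {working_set_gb / (NVLINK_C2C_BIDIR_GBPS / 2):.2f} s")
```

Coherent access over NVLink-C2C with ATS means the GPU often does not need to copy a whole working set up front. The transfer-time comparison is a worst-case bulk-copy view, not a model of coherent access.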

NVIDIA GH200 Specifications

| Feature | GH200 Grace Hopper |
|---|---|
| CPU | 72-core NVIDIA Grace CPU |
| GPU | NVIDIA H100 Tensor Core GPU |
| CPU Memory | Up to 480GB LPDDR5X with ECC |
| GPU Memory | 96GB HBM3 or 144GB HBM3e |
| Fast-Access Memory | Up to 624GB (480GB LPDDR5X + 144GB HBM3e) |
| NVLink-C2C Bandwidth | 900GB/s bidirectional, memory-coherent |
| Superchip TDP | Programmable from 450W to 1000W |
| Thermal Solution | Air- or liquid-cooled |

Interested?