Exxact TensorEX 4U 2x AMD EPYC processor - Deep Learning & AI server - TS4-173535991-DPN

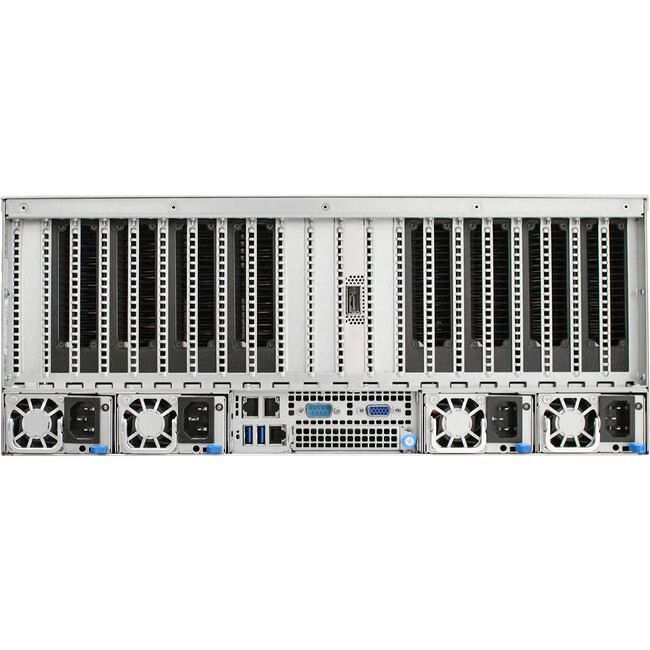

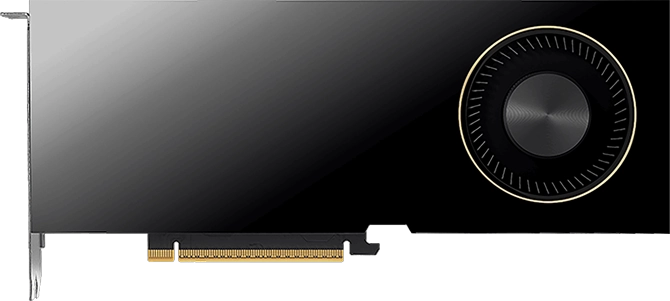

The TensorEX TS4-173535991-DPN is a 4U rack-mountable Deep Learning & AI server supporting 2x AMD EPYC 7002/7003 Series processors, a maximum of 4 TB of DDR4 memory, and up to 8x double-wide GPUs.

An EMLI Environment for Every Developer

Conda EMLI

For developers who want pre-installed deep learning frameworks and their dependencies in separate Python environments installed natively on the system.

Container EMLI

For developers who want pre-installed frameworks utilizing the latest NGC containers, GPU drivers, and libraries in ready-to-deploy DL environments with the flexibility of containerization.

DIY EMLI

For experienced developers who want a minimalist install to set up their own private deep learning repositories or custom builds of deep learning frameworks.

More Cores, More Cache, More Performance

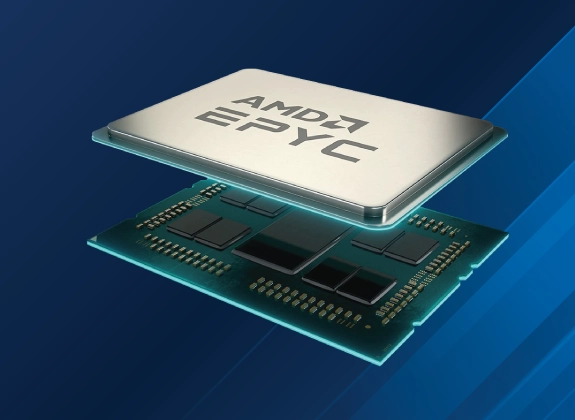

AMD EPYC Processors Ignite EPYC Performance

Data centers that require the best performance, security, and scalability gravitate to AMD EPYC™. AMD EPYC™ processors are built to handle large scientific and engineering datasets, which makes them well suited to compute-intensive modeling and advanced analysis techniques. AMD EPYC™ enables fast time-to-results for HPC.

- Exceptional performance per watt and per core

- 3D V-Cache™ delivers breakthrough on-die memory with up to 768 MB of L3 cache per processor (available only on EPYC 7003X-series processors)