Neural Networks for Natural Language Processing (NLP)

Wouldn’t it be cool if a computer could understand the actual human sentiment behind sarcastic texts, the kind that can sometimes stump even actual humans?

Or what if a computer could understand a human language so well that it could estimate the probability of encountering any sentence that you give it?

Or maybe it could generate completely fake code snippets of the Linux kernel that look so authentic that they are just as intimidating as the actual source code (well, unless you are a kernel programmer yourself)?

What if computers could immaculately translate English into French, or between more than 100 languages from all over the world?

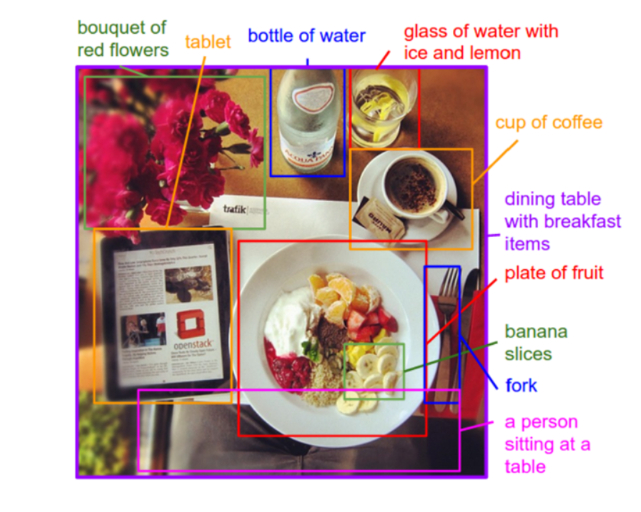

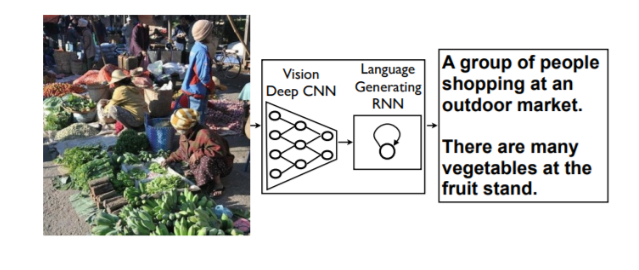

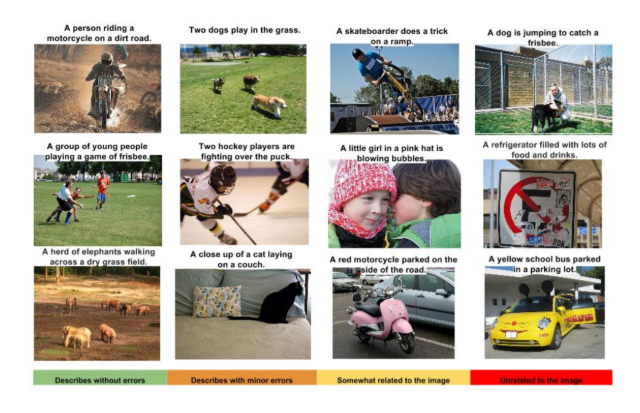

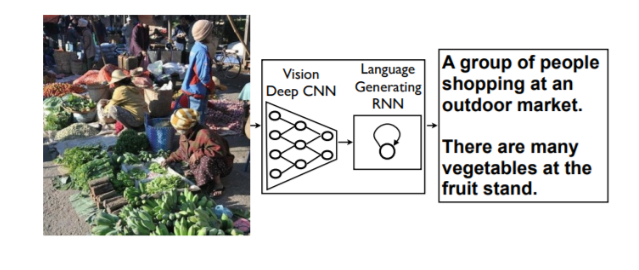

Or “see” an image and describe the items found in the photo?

Source: https://arxiv.org/pdf/1412.2306v2.pdf

Well, if you had been looking for a cool computer program that could do these things a decade ago, you would have been out of luck. In fact, go back to the year 2012 or earlier, and you will find all of these only in science fiction.

But fast forward to today, and these things are not just possible but embedded in our day-to-day lives. Social media companies actively use sentiment analysis to identify and curb abusive behavior and speech on their platforms, Google Translate can translate between more than 100 languages, and chatbots in live customer service chat software are on the rise.

Natural Language Processing (NLP)

All of the above tasks fall under the Natural Language Processing (NLP) domain. The main driver behind this science-fiction-turned-reality phenomenon is the advancement of Deep Learning techniques, specifically the Recurrent Neural Network (RNN) and Convolutional Neural Network (CNN) architectures.

Let’s look at a few of the Natural Language Processing tasks and understand how Deep Learning can help humans with them:

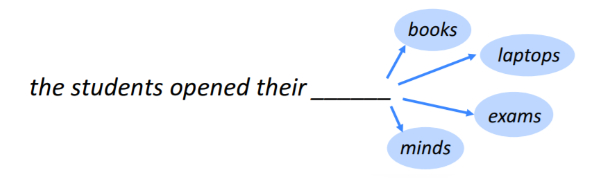

Language Modeling

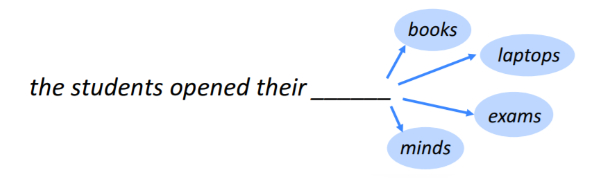

Language models aim to represent the history of observed text succinctly in order to predict the next word. Simply put, language modeling is the task of predicting which word comes next in a sequence.

Source: Stanford

Notice that a language model needs to use the words that it has encountered so far in the sequence, in order to make a prediction. The longer the word sequences that the model can use during a prediction, the better it will be at this task.

For instance:

If the model uses just the last 2 words (“opened their”) to make a prediction, far more next words are plausible than if it uses the last 3 words (“students opened their”). Hence, the prediction will be less accurate in the former case.
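To make this concrete, here is a rough sketch that counts which words follow a 2-word context versus a 3-word context. The corpus is a handful of invented sentences and the helper names (`next_word_counts`, `probabilities`) are made up for this illustration. It is a plain counting model, not a neural network.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
corpus = [
    "the students opened their books",
    "the students opened their laptops",
    "the students opened their books",
    "the shops opened their doors",
    "the birds opened their wings",
]

def next_word_counts(sentences, context_size):
    """Map each context (a tuple of `context_size` words) to a Counter of next words."""
    table = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            table[context][words[i]] += 1
    return table

def probabilities(counter):
    """Turn raw follower counts into relative frequencies."""
    total = sum(counter.values())
    return {word: count / total for word, count in counter.items()}

two_word = next_word_counts(corpus, 2)[("opened", "their")]
three_word = next_word_counts(corpus, 3)[("students", "opened", "their")]

print("after 'opened their':         ", probabilities(two_word))
print("after 'students opened their':", probabilities(three_word))
```

In this toy corpus the longer context leaves fewer candidate words, so the most frequent one gets a larger share of the probability.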

So, a good model will ‘remember’ what it has read so far.

And this is something that Recurrent Neural Networks are pretty good at. In particular, the more advanced variants called GRUs (Gated Recurrent Units) and LSTMs (Long Short-Term Memory networks) give quite remarkable results by having ‘memory’ units built into them.
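The ‘remembering’ idea can be sketched with a plain (vanilla) recurrent step in pure Python. This is only an illustration under loud assumptions: the weights are random and untrained, the vocabulary and sizes are invented, and GRUs and LSTMs add gating on top of this basic recurrence that is not shown here.

```python
import math
import random

random.seed(0)
HIDDEN = 4
VOCAB = ["the", "students", "shops", "opened", "their"]  # invented toy vocabulary

def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

# Untrained, random weights: input-to-hidden and hidden-to-hidden.
W_xh = rand_matrix(HIDDEN, len(VOCAB))
W_hh = rand_matrix(HIDDEN, HIDDEN)

def one_hot(word):
    return [1.0 if w == word else 0.0 for w in VOCAB]

def rnn_step(x, h_prev):
    """h_t = tanh(W_xh x_t + W_hh h_{t-1}): the new state mixes the input with the old state."""
    return [
        math.tanh(
            sum(W_xh[i][j] * x[j] for j in range(len(x)))
            + sum(W_hh[i][k] * h_prev[k] for k in range(HIDDEN))
        )
        for i in range(HIDDEN)
    ]

def encode(words):
    """Read the words left to right, carrying the hidden state along."""
    h = [0.0] * HIDDEN
    for word in words:
        h = rnn_step(one_hot(word), h)
    return h

h_students = encode("the students opened their".split())
h_shops = encode("the shops opened their".split())
print(h_students)
print(h_shops)
```

Both inputs end with “opened their”, yet the final hidden states differ because the state still carries a trace of the earlier word.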

One interesting side-effect of language modeling is getting a generative model that we can use to generate all kinds of sequences.

Generating Shakespearean text/Linux source code(!)/Jazz music(!!)/Super Mario levels(!!!)

All those viral Twitter posts that go something like:

“I made an AI read all of and then made it write a -like story of its own. Here is the first page..”

They are all possible using RNNs!

Let’s say you wanted to generate a Shakespeare-like story. You would train an RNN on a dataset of Shakespeare’s existing works and let it learn how to model them. This way it learns to predict the next word in a Shakespeare text (just to be clear, it won’t be 100% accurate, but it will be pretty satisfying). We can then make it predict the most likely next word, feed that word back in as input, and repeat on and on and on…

Words continue to get added until we get a brand new “Shakespearean” masterpiece!
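The generation loop itself is simple, and can be sketched as below. To keep the sketch runnable without any training, a bigram count table stands in for the RNN as the next-word predictor. That swap is an assumption of this example, and the function names are made up. Only the loop (predict, append, repeat) mirrors what is described above.

```python
from collections import Counter, defaultdict

# Opening of Sonnet 18, lowercased; a tiny stand-in for a real training set.
training_text = (
    "shall i compare thee to a summer's day "
    "thou art more lovely and more temperate"
)

def train_bigram_predictor(text):
    """Return a function that maps a word to its most frequent follower."""
    followers = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1

    def predict_next(word):
        if word not in followers:
            return None  # this word was never seen with a follower
        return followers[word].most_common(1)[0][0]

    return predict_next

def generate(predict_next, seed_word, max_words):
    """Greedy generation: append the most likely next word, then repeat."""
    words = [seed_word]
    while len(words) < max_words:
        next_word = predict_next(words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)

predict_next = train_bigram_predictor(training_text)
print(generate(predict_next, "thou", max_words=6))
```

A trained RNN would slot in where `predict_next` is, conditioning on everything generated so far rather than on just the last word.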

What’s more?

You can model not just text but any kind of sequence using similar RNNs. For example:

- Use the source code found in the Linux repo on GitHub to train an RNN and generate fake functions that look like Linux source code!

- Preprocess the Super Mario levels into text files, train the model on them, and create your own never-before-seen Super Mario levels!

Note that the above examples use character-level RNNs. Here, instead of predicting the next word in the sequence, the model predicts the next character. This allows the RNN to learn arbitrary types of sequences like Super Mario levels!
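As a rough sketch of what character-level training data looks like, the snippet below turns a small text grid into (current character, next character) pairs. The level layout and its symbols are invented for this example and do not follow any real Super Mario level format.

```python
# Hypothetical level encoded as text: each character is one tile.
level_rows = [
    "----------",
    "---?--B---",
    "--------E-",
    "XXXXXXXXXX",
]
text = "\n".join(level_rows)

# The vocabulary is just the set of distinct characters, newline included.
chars = sorted(set(text))
char_to_id = {c: i for i, c in enumerate(chars)}
id_to_char = {i: c for c, i in char_to_id.items()}

# Each training example asks the model to predict the next character.
ids = [char_to_id[c] for c in text]
pairs = list(zip(ids[:-1], ids[1:]))

print("vocabulary:", chars)
print("number of training pairs:", len(pairs))
print("first pair:", repr(id_to_char[pairs[0][0]]), "->", repr(id_to_char[pairs[0][1]]))
```

Because the model only ever sees characters, the same preprocessing works for source code, sheet music in text form, or anything else that can be written out as a string.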

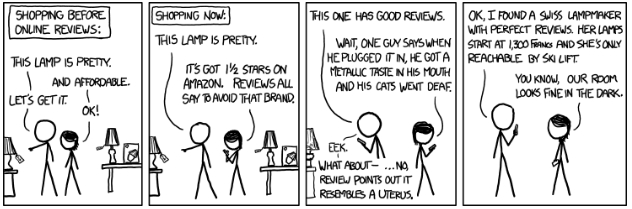

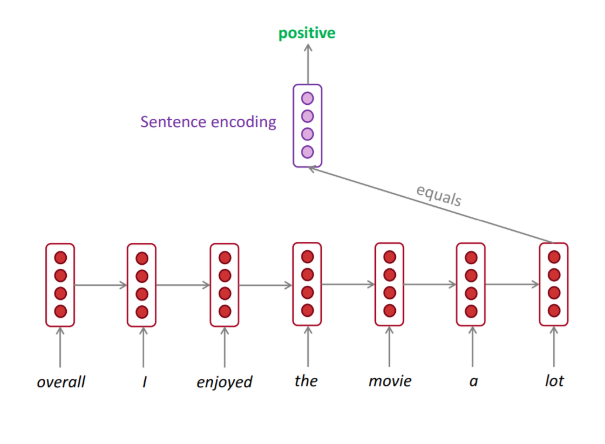

Sentiment Analysis

Human communication isn’t just words and meanings. It is much more complex as it involves emotions. Choice of words, writing style and sentence structure play a huge part in determining the sentiment behind a written message.

Source: https://xkcd.com/1036/

Earlier approaches to sentiment analysis were based on tokenizing the written sentences and trying to figure out the sentiment based on rules of grammar. Needless to say, they were not too effective.
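To see why such approaches struggle, here is a minimal sketch of a rule-based scorer. The word lists and the single negation rule are invented for illustration and are far cruder than any real system was, but the failure mode on sarcasm is the same.

```python
import re

# Tiny hand-written lexicons (hypothetical, for illustration only).
POSITIVE = {"love", "great", "good", "wonderful"}
NEGATIVE = {"hate", "bad", "terrible", "awful"}
NEGATORS = {"not", "never", "no"}

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def rule_based_sentiment(text):
    """Sum word polarities, flipping the sign of the next sentiment word after a negator."""
    score = 0
    negate = False
    for token in tokenize(text):
        if token in NEGATORS:
            negate = True
            continue
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
            negate = False
    return score

print(rule_based_sentiment("I love this phone"))
print(rule_based_sentiment("I do not love this phone"))
# Sarcasm: the words are positive, the meaning is not.
print(rule_based_sentiment("Oh great, another delay. I just love waiting."))
```

The first two sentences come out with the expected sign, but the sarcastic one scores as positive because the rules only see the words, not the intent.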

But today, RNN-based analysis systems are deployed by giant social media companies to identify and flag inappropriate content on their platforms. They work alongside human moderators and help them remove toxic content.

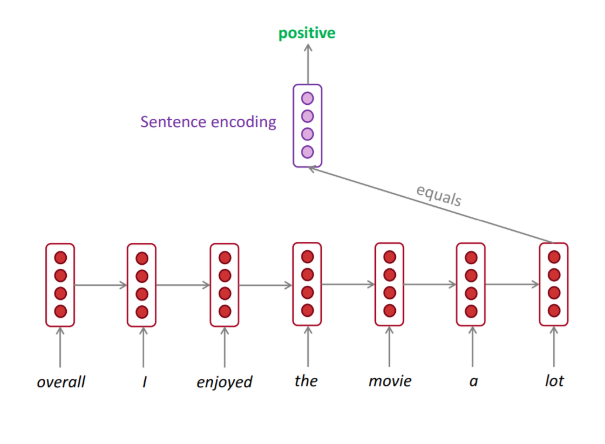

Source: Stanford

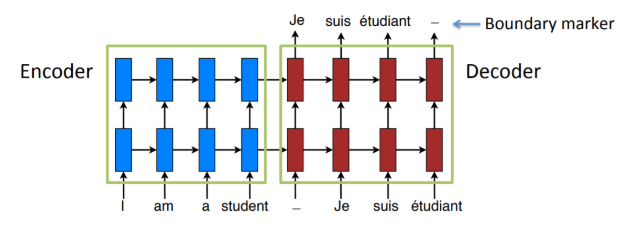

Language Translation

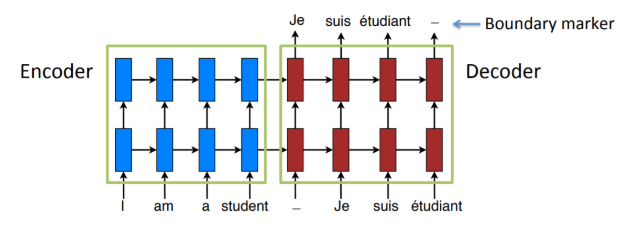

Over the past 3 years, the technology behind machine translation has been totally rewritten. Using a technology called sequence-to-sequence learning, programmers can now build some of the best translation systems in the world.

These sequence-to-sequence architectures are based on Recurrent Neural Networks.

Source: Stanford

Let’s say we want to translate between English and French. Here, one RNN (called the encoder) takes the English sentence and converts it into a feature vector, which a second RNN (called the decoder) takes as input to produce the corresponding French translation.
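The data flow of an encoder and a decoder can be sketched in pure Python as below. Everything here is an assumption made for illustration: the vocabularies are tiny and invented, the weights are random and untrained (so the output is gibberish, not a translation), and real systems use trained GRU or LSTM layers. The point is only the shape of the computation: sentence in, one vector in the middle, tokens out one at a time.

```python
import math
import random

random.seed(1)
HIDDEN = 5
EN_VOCAB = ["i", "like", "cats", "dogs"]
FR_VOCAB = ["<sos>", "<eos>", "j'aime", "les", "chats", "chiens"]

def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def rnn_step(W_in, W_hh, x, h):
    return [math.tanh(a + b) for a, b in zip(matvec(W_in, x), matvec(W_hh, h))]

# Separate (untrained) weights for the encoder and the decoder.
enc_W_in, enc_W_hh = rand_matrix(HIDDEN, len(EN_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
dec_W_in, dec_W_hh = rand_matrix(HIDDEN, len(FR_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
dec_W_out = rand_matrix(len(FR_VOCAB), HIDDEN)

def encode(sentence):
    """Encoder: fold the whole source sentence into one feature vector."""
    h = [0.0] * HIDDEN
    for word in sentence.split():
        x = one_hot(EN_VOCAB.index(word), len(EN_VOCAB))
        h = rnn_step(enc_W_in, enc_W_hh, x, h)
    return h

def decode(context, max_len=6):
    """Decoder: start from the encoder's vector and emit one token per step."""
    h, token, output = context, FR_VOCAB.index("<sos>"), []
    for _ in range(max_len):
        h = rnn_step(dec_W_in, dec_W_hh, one_hot(token, len(FR_VOCAB)), h)
        scores = matvec(dec_W_out, h)
        token = scores.index(max(scores))  # greedy choice of the next token
        if FR_VOCAB[token] == "<eos>":
            break
        output.append(FR_VOCAB[token])
    return output

context = encode("i like cats")
print("feature vector:", context)
print("decoder output (untrained):", decode(context))
```

Training would adjust all five weight matrices together so that the decoder's output matches the reference French sentence.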

These sequence-to-sequence architectures are useful not just in language translation. They are also used to build AI chatbots! The idea here is pretty straightforward:

Instead of training the encoder and decoder on 2 languages, we train them on the 2 sides of a conversation. The encoder might read the users’ request messages while the decoder produces the Support Team’s corresponding replies. The system thus learns to “translate” between the users’ requests and the Support Team’s answers.
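Preparing such training data could look roughly like this. The chat log, the speaker labels, and the `build_training_pairs` helper are all hypothetical; the sketch only shows how a conversation becomes (encoder input, decoder target) pairs.

```python
# Hypothetical support-chat log as (speaker, message) turns.
chat_log = [
    ("user", "My order has not arrived yet."),
    ("support", "Sorry about that! Could you share your order number?"),
    ("user", "Sure, it is 12345."),
    ("support", "Thanks, I have asked the courier for an update."),
    ("support", "Is there anything else I can help with?"),
]

def build_training_pairs(log):
    """Pair each user message with the support reply that directly follows it."""
    pairs = []
    for (speaker, message), (next_speaker, reply) in zip(log, log[1:]):
        if speaker == "user" and next_speaker == "support":
            pairs.append((message, reply))
    return pairs

training_pairs = build_training_pairs(chat_log)
for request, reply in training_pairs:
    print("encoder input: ", request)
    print("decoder target:", reply)
```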

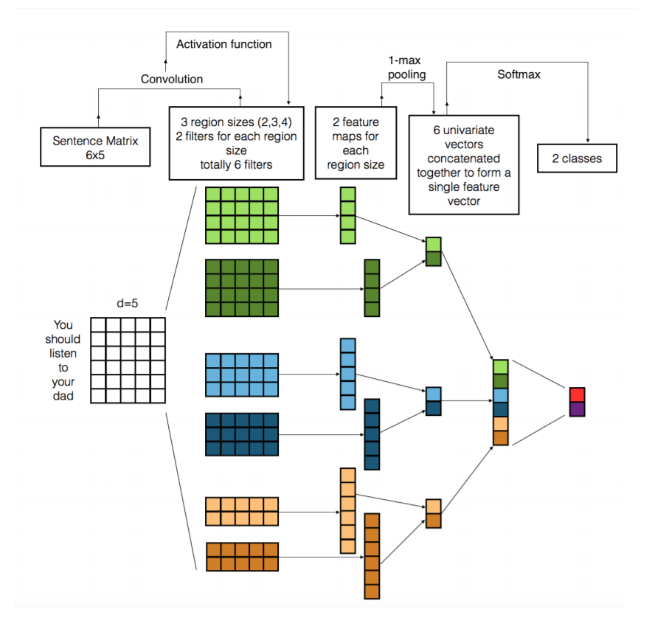

Using CNNs for NLP tasks

Traditionally, we think of a convolutional neural network (CNN) as a neural network specialized for processing a grid of values such as an image, and of a recurrent neural network (RNN) as one specialized for processing a sequence of values.

But more recently we’ve also started to apply CNNs to problems in Natural Language Processing and gotten some interesting results.

Classification Tasks

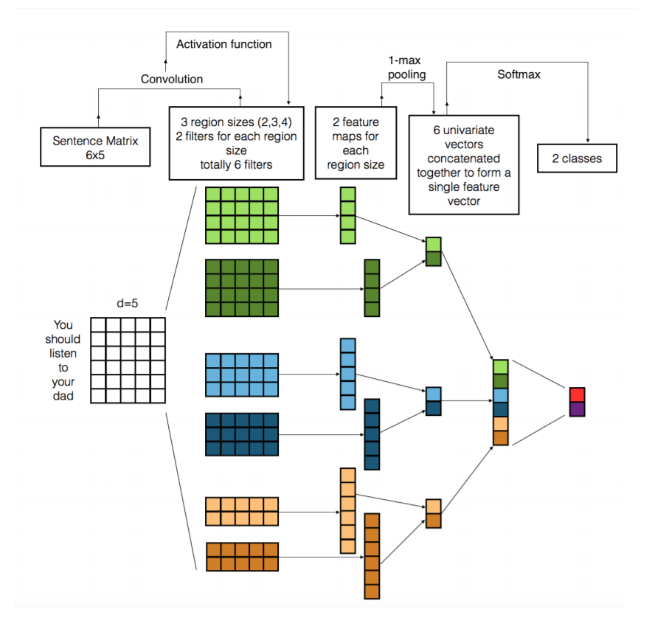

Since CNNs, unlike RNNs, can output only fixed-size vectors, their natural fit seems to be classification tasks such as Sentiment Analysis, Spam Detection, or Topic Categorization.

In computer vision tasks, the filters used in CNNs slide over patches of an image whereas in NLP tasks, the filters slide over the sentence matrices, a few words at a time.

The 1st layer shows 6 filters: 2 pass over 2 words at a time, another 2 filters pass over 3 words at a time and the last 2 filters pass over 4 words at a time.

Source: https://arxiv.org/pdf/1703.03091.pdf

The learned filters of the first layer capture features that are quite like n-grams but represented in a more compact way.

A big argument for CNNs is that they are very fast. Convolutions are a central part of computer graphics and are implemented at the hardware level on GPUs.
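Here is a small sketch of filters sliding over a sentence matrix, using the same layout as above: 6 filters, 2 each spanning 2, 3, and 4 words. The embeddings and filter weights are random stand-ins and the ReLU plus max-pooling choices are assumptions of this sketch, not details confirmed by the figure's source.

```python
import random

random.seed(0)
EMBED_DIM = 5  # each word is a row of 5 numbers (random stand-ins for embeddings)

def random_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def convolve(sentence_matrix, filt):
    """Slide a filter spanning len(filt) words down the sentence, one word at a time."""
    width = len(filt)
    feature_map = []
    for start in range(len(sentence_matrix) - width + 1):
        window = sentence_matrix[start:start + width]
        total = sum(
            w * x
            for filter_row, word_row in zip(filt, window)
            for w, x in zip(filter_row, word_row)
        )
        feature_map.append(max(0.0, total))  # ReLU activation
    return feature_map

# Two filters each for windows of 2, 3, and 4 words.
filters = [random_matrix(width, EMBED_DIM) for width in (2, 2, 3, 3, 4, 4)]

def sentence_features(sentence_matrix):
    """Max-pool each feature map to one number, giving a fixed-size vector."""
    return [max(convolve(sentence_matrix, f)) for f in filters]

short_sentence = random_matrix(7, EMBED_DIM)   # 7 words
long_sentence = random_matrix(12, EMBED_DIM)   # 12 words

print([len(convolve(short_sentence, f)) for f in filters])
print(len(sentence_features(short_sentence)), len(sentence_features(long_sentence)))
```

Each filter looks at a few adjacent words at once, much like an n-gram, and after pooling a 7-word and a 12-word sentence both end up as a 6-number vector that a classifier can consume. Sentences need at least 4 words here so the widest filter fits.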

You should check out this paper to get a summary of the various ways CNNs have been used for NLP tasks.

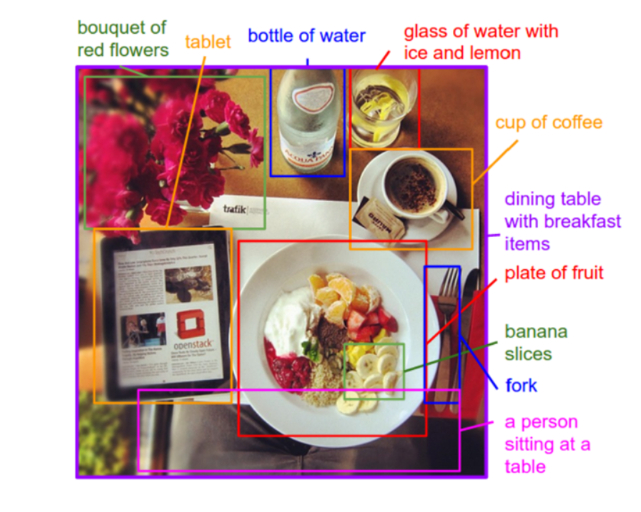

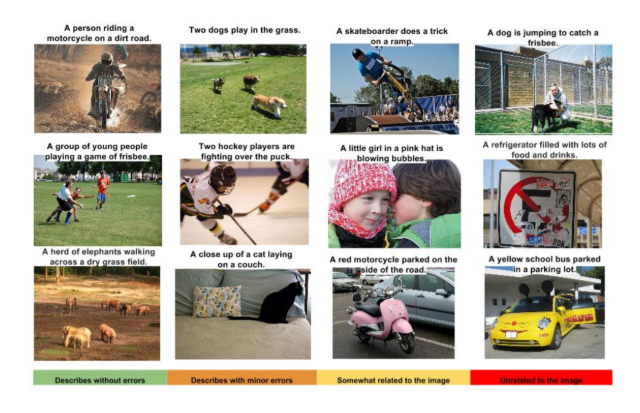

Generating Image Descriptions

Remember how in the sequence-to-sequence models, the encoder creates a vector representation of the input data and the decoder takes that vector to generate a new sequence?

Now, what if we replaced the RNN in that encoder with a CNN?

This would give us a model that can take in an image and generate a sequence based on that image!

We can then pass an image to our model and have it output a sentence that describes the image.

Automatically describing the content of an image is a fundamental problem in artificial intelligence that connects computer vision and natural language processing. Being able to automatically describe the content of an image using properly formed English sentences is a very challenging task, but it could have great impact. For instance, it can help visually impaired people better understand the content of images on the web.

Here are two important papers that address this problem:

- Show and Tell: A Neural Image Caption Generator by a Google team

- Deep Visual-Semantic Alignments for Generating Image Descriptions by Andrej Karpathy and Li Fei-Fei

All of the advancements that we talked about in this article are very recent. None of these were possible 6 years ago. This just shows how quickly the field of Deep Learning is accelerating.

It sure is an exciting time to be in this field. So get in on the action right now and go train some neural networks of your own!
