.jpg?format=webp)

Introduction

All AI models are built on data collected from a wide range of sources, including the vast repositories of the internet. The real challenge is not just gathering this raw information, but extracting its value. Thanks to Data labeling and neural network architectures, significant progress in turning unstructured data into intelligent models.

Data is gold. As AI models parse all kinds of data from numerous sources, a model fed with more descriptive data, will perform better. We’ll talk about what metadata is and how it data about data increases AI efficiency.

What is Metadata?

Metadata is "data about data" or descriptive information that characterizes, categorizes, and organizes data. It provides context and structure to raw information, enabling AI systems to process and interpret data more effectively.

Metadata provides essential context to data, making it more meaningful and usable for AI systems. Tools like DataHub and Apache Atlas help organizations manage metadata at scale, streamlining search and control throughout the data stack. Here are the key metadata types that enhance AI model training:

- Tags: Categorize datasets for quick discovery

- Lineage: Track data origins and transformations

- Business Glossary: Standardize definitions across teams

Consider a CSV file that lists potential leads. Without metadata, it's just a collection of numbers and text strings. With metadata, however, it becomes a structured resource that can tell you when each lead was captured, their source (social media, referral, website), their engagement level, and demographic information. We can feed this information to a model that helps evaluate priority for outreach and personalize communication strategies.

How Does Metadata Help AI?

Metadata provides the structural framework needed for systematic AI development. By organizing essential information about datasets, algorithms, and training parameters, metadata significantly improves model training efficiency by:

- Eliminate redundant experiments: tracking which parameter combinations have already been tested

- Accelerate model tuning: through systematic hyperparameter tracking and optimization

- Streamline data ingestion: with standardized schemas and preprocessing pipelines

- Enable experiment reproducibility: by recording exact training conditions and environments

- Facilitate model comparison: through consistent performance metrics and evaluation criteria

- Support automated feature selection: by tracking feature importance across experiments

These expanded points more clearly convey the specific ways metadata creates efficiency gains in AI development, aligning with the article's overall message about metadata's importance in structured AI development.

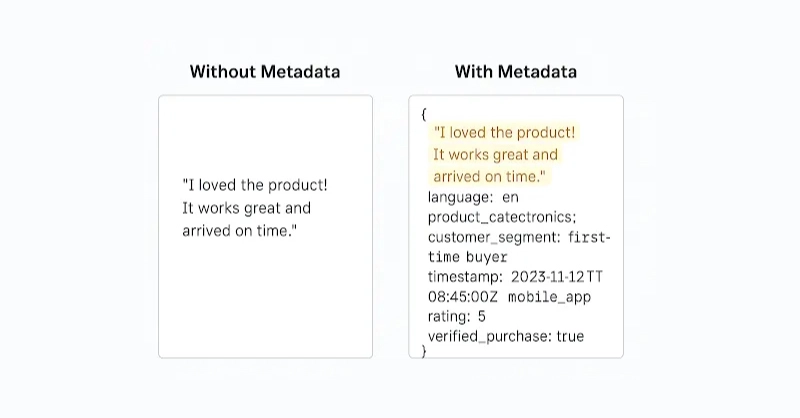

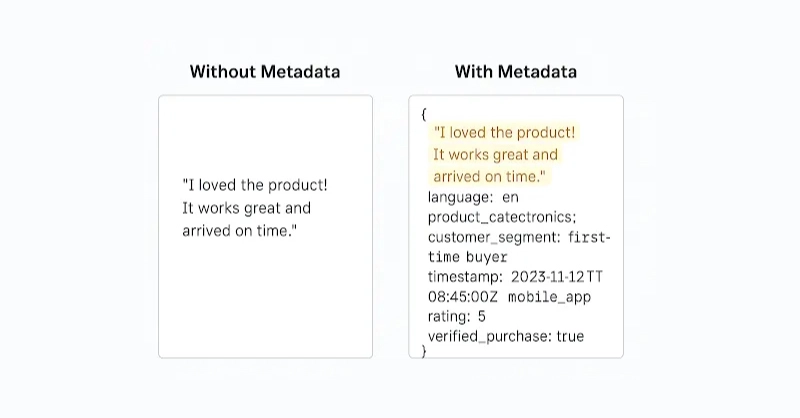

A data science team is training a sentiment analysis model on diverse text strings, but faces significant challenges without metadata.

Without Metadata

The model that only sees raw text, lacks context and limits its pattern recognition and generalization. Critical missing information includes:

- Customer demographics and purchase history

- Language identification and regional context

- Temporal information (when the review occurred)

With Metadata

Enriching the same dataset with metadata provides context that contributes to better efficiency, improved accuracy, and more robust, fair models. The enhanced model can:

- Interpret sentiment nuances across multiple languages and regions

- Segment insights by customer type or product category

- Leverage timestamps to identify seasonal patterns and evolving customer behaviors

Key Benefits of Metadata for AI Model Training

AI engineers have discovered that metadata is essential, not just helpful, for creating faster, smarter, and more reliable AI systems. When properly utilized, metadata illuminates the entire training pipeline, transforming AI development from experimentation into strategic, data-driven engineering. Here are the core benefits:

1. Improved Model Training

By tracking feature configurations, data versions, label quality, and preprocessing logic, metadata provides crucial context that streamlines learning. Models converge faster and achieve higher accuracy by leveraging consistent, well-defined input signals rather than repeatedly learning fundamental patterns.

2. Smarter Resource Allocation

Metadata reveals dataset structure and usage patterns, helping teams identify redundant entries, duplicate records, and low-impact features. This allows models to skip unnecessary data, reducing training time while maintaining performance—optimizing GPU utilization and lowering computation costs.

3. Better Data Quality Management

Using data lineage, schema evolution, and source tags, teams can quickly spot anomalies and outdated records, ensuring cleaner training data with reduced noise and bias.

4. Faster Debugging and Experiment Tracking

By documenting hyperparameters, data versions, architecture changes, and performance metrics, metadata creates a reproducible record of each training run. When issues arise or breakthroughs occur, you'll know exactly why—significantly reducing debugging time and enabling more confident iteration.

Proving Metadata for AI on a Small Scale

Now that we’ve covered how metadata is important in training AI models, let’s walk through an example that shows the difference of having metadata in your training dataset on your computer. We’ll be doing this through a Docker container:

We created a Dockerfile and ai_metadata_demo.py as below:

Dockerfile

FROM python:3.9-slim WORKDIR /app COPY ai_metadata_demo.py RUN pip install --no-cache-dir pandas numpy scikit-learn CMD ["python", "ai_metadata_demo.py"]

Python script: ai_metadata_demo.py

import pandas as pd

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

# Sample dataset of product reviews

raw_reviews = [

"I loved the product! It works great and arrived on time.",

"Terrible service, the product broke after a day.",

"Excellent quality and fast delivery.",

"The item was okay, but packaging was poor.",

"Worst experience ever. Totally disappointed.",

"Really satisfied with the product and the support.",

"Not what I expected, will not buy again.",

"Great value for money!",

]

# Corresponding labels (1 for positive, 0 for negative)

labels = [1, 0, 1, 0, 0, 1, 0, 1]

# Additional metadata for each review

metadata_features = [

{"verified": 1, "length": len(raw_reviews[0]), "rating": 5},

{"verified": 0, "length": len(raw_reviews[1]), "rating": 1},

{"verified": 1, "length": len(raw_reviews[2]), "rating": 5},

{"verified": 1, "length": len(raw_reviews[3]), "rating": 3},

{"verified": 0, "length": len(raw_reviews[4]), "rating": 1},

{"verified": 1, "length": len(raw_reviews[5]), "rating": 4},

{"verified": 0, "length": len(raw_reviews[6]), "rating": 2},

{"verified": 1, "length": len(raw_reviews[7]), "rating": 5},

]

# Convert metadata to DataFrame

metadata_df = pd.DataFrame(metadata_features)

# Vectorize raw text

vectorizer = CountVectorizer()

X_text = vectorizer.fit_transform(raw_reviews)

# Model WITHOUT metadata

X_train_no_meta, X_test_no_meta, y_train, y_test = train_test_split(X_text, labels, test_size=0.25, random_state=42)

model_no_meta = LogisticRegression()

model_no_meta.fit(X_train_no_meta, y_train)

preds_no_meta = model_no_meta.predict(X_test_no_meta)

acc_no_meta = accuracy_score(y_test, preds_no_meta)

# Model WITH metadata

X_text_array = X_text.toarray()

X_combined = np.hstack((X_text_array, metadata_df.values))

X_train_meta, X_test_meta, _, _ = train_test_split(X_combined, labels, test_size=0.25, random_state=42)

model_meta = LogisticRegression()

model_meta.fit(X_train_meta, y_train)

preds_meta = model_meta.predict(X_test_meta)

acc_meta = accuracy_score(y_test, preds_meta)

# Display results

print(f"Accuracy WITHOUT metadata: {acc_no_meta:.2f}")

print(f"Accuracy WITH metadata: {acc_meta:.2f}")Run the Docker Command

docker build -t metadata-demo . docker run metadata-demo

From the code above, we trained a simple logistic regression model on a small sentiment analysis dataset, once using only raw text, and once with additional metadata. Although this isn’t a deep neural network, the demo tells us that adding relevant metadata significantly improves model accuracy. With metadata like verification status, review length, and product rating, the model gains better context, allowing it to make more informed predictions:

- Accuracy WITHOUT metadata: 0.50

- Accuracy WITH metadata: 1.00

FAQs About AI Metadata

What is the difference between data and metadata in AI systems?

Data refers to the actual content being analyzed - like text, images, or numerical values. Metadata is "data about data" - information that describes, categorizes and provides context for the primary data. For example, in a customer review dataset, the review text is data while the timestamp, user ID, and product category would be metadata.

How does metadata improve AI model accuracy?

Metadata improves AI model accuracy by providing crucial context that helps models better interpret patterns in the data. It enables models to segment and categorize information more effectively, recognize situational variables, and make more nuanced predictions based on additional contextual clues beyond the raw data itself.

Can adding metadata help reduce bias in AI models?

Yes, metadata can help reduce bias by providing transparency about data sources, collection methods, and demographic representation. With proper metadata, teams can identify and address imbalances in training data, track data lineage to understand potential sources of bias, and implement more effective fairness testing across different data segments.

What tools can help manage metadata at scale for AI projects?

Several tools can help manage metadata at scale, including DataHub and Apache Atlas as mentioned earlier. Other options include MLflow for experiment tracking, DVC (Data Version Control) for dataset versioning, Great Expectations for data validation, and cloud-native services like AWS Glue Data Catalog or Google DataCatalog for enterprise-scale metadata management.

How does metadata help with AI model troubleshooting and debugging?

Metadata creates a traceable history of model development by documenting training parameters, data versions, and performance metrics. When models underperform, this information helps pinpoint whether issues stem from data quality problems, suboptimal hyperparameters, or implementation errors. This comprehensive context dramatically reduces debugging time and enables more systematic problem-solving.

Is it possible to add metadata to an existing AI dataset retroactively?

Yes, it's possible to add metadata retroactively to existing datasets, though it may require additional effort. This process often involves data enrichment through external sources, manual annotation, or automated inference techniques. While not ideal compared to capturing metadata during initial data collection, retroactive enrichment can still significantly improve model performance.

How does metadata impact training time and computational resources?

Metadata can both reduce and optimize resource usage. It helps identify redundant or low-value data that can be excluded from training, enables more efficient feature selection, and supports better hyperparameter initialization. These improvements lead to faster convergence during training, more efficient use of computational resources, and ultimately lower costs for AI development.

Effectively Utilizing Metadata for AI Models

Metadata is quickly becoming a critical enabler of efficient, scalable AI systems. While model architectures and compute power often take the spotlight, it's metadata that brings structure, clarity, and control to the training process. As we've explored in this article:

- What metadata is and why it matters for AI

- How different types of metadata (tags, lineage, glossary) provide essential context

- How metadata improves training efficiency, accuracy, and resource use

- A working demo showing real performance gains from using metadata

Hopefully at this point you understand that not utilizing metadata to its fullest potential is a big mistake to make. Visit our blog to read Common Machine Learning and Deep Learning Mistakes and Limitations to Avoid. As AI continues to evolve, those enriched data will be better equipped to build smarter, faster, and more responsible models, shifting from reactive development to intentional, well-informed AI engineering.

.png)

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now.jpg?format=webp)

Meta Data: How Data about Your Data is Optimal for AI

Introduction

All AI models are built on data collected from a wide range of sources, including the vast repositories of the internet. The real challenge is not just gathering this raw information, but extracting its value. Thanks to Data labeling and neural network architectures, significant progress in turning unstructured data into intelligent models.

Data is gold. As AI models parse all kinds of data from numerous sources, a model fed with more descriptive data, will perform better. We’ll talk about what metadata is and how it data about data increases AI efficiency.

What is Metadata?

Metadata is "data about data" or descriptive information that characterizes, categorizes, and organizes data. It provides context and structure to raw information, enabling AI systems to process and interpret data more effectively.

Metadata provides essential context to data, making it more meaningful and usable for AI systems. Tools like DataHub and Apache Atlas help organizations manage metadata at scale, streamlining search and control throughout the data stack. Here are the key metadata types that enhance AI model training:

- Tags: Categorize datasets for quick discovery

- Lineage: Track data origins and transformations

- Business Glossary: Standardize definitions across teams

Consider a CSV file that lists potential leads. Without metadata, it's just a collection of numbers and text strings. With metadata, however, it becomes a structured resource that can tell you when each lead was captured, their source (social media, referral, website), their engagement level, and demographic information. We can feed this information to a model that helps evaluate priority for outreach and personalize communication strategies.

How Does Metadata Help AI?

Metadata provides the structural framework needed for systematic AI development. By organizing essential information about datasets, algorithms, and training parameters, metadata significantly improves model training efficiency by:

- Eliminate redundant experiments: tracking which parameter combinations have already been tested

- Accelerate model tuning: through systematic hyperparameter tracking and optimization

- Streamline data ingestion: with standardized schemas and preprocessing pipelines

- Enable experiment reproducibility: by recording exact training conditions and environments

- Facilitate model comparison: through consistent performance metrics and evaluation criteria

- Support automated feature selection: by tracking feature importance across experiments

These expanded points more clearly convey the specific ways metadata creates efficiency gains in AI development, aligning with the article's overall message about metadata's importance in structured AI development.

A data science team is training a sentiment analysis model on diverse text strings, but faces significant challenges without metadata.

Without Metadata

The model that only sees raw text, lacks context and limits its pattern recognition and generalization. Critical missing information includes:

- Customer demographics and purchase history

- Language identification and regional context

- Temporal information (when the review occurred)

With Metadata

Enriching the same dataset with metadata provides context that contributes to better efficiency, improved accuracy, and more robust, fair models. The enhanced model can:

- Interpret sentiment nuances across multiple languages and regions

- Segment insights by customer type or product category

- Leverage timestamps to identify seasonal patterns and evolving customer behaviors

Key Benefits of Metadata for AI Model Training

AI engineers have discovered that metadata is essential, not just helpful, for creating faster, smarter, and more reliable AI systems. When properly utilized, metadata illuminates the entire training pipeline, transforming AI development from experimentation into strategic, data-driven engineering. Here are the core benefits:

1. Improved Model Training

By tracking feature configurations, data versions, label quality, and preprocessing logic, metadata provides crucial context that streamlines learning. Models converge faster and achieve higher accuracy by leveraging consistent, well-defined input signals rather than repeatedly learning fundamental patterns.

2. Smarter Resource Allocation

Metadata reveals dataset structure and usage patterns, helping teams identify redundant entries, duplicate records, and low-impact features. This allows models to skip unnecessary data, reducing training time while maintaining performance—optimizing GPU utilization and lowering computation costs.

3. Better Data Quality Management

Using data lineage, schema evolution, and source tags, teams can quickly spot anomalies and outdated records, ensuring cleaner training data with reduced noise and bias.

4. Faster Debugging and Experiment Tracking

By documenting hyperparameters, data versions, architecture changes, and performance metrics, metadata creates a reproducible record of each training run. When issues arise or breakthroughs occur, you'll know exactly why—significantly reducing debugging time and enabling more confident iteration.

Proving Metadata for AI on a Small Scale

Now that we’ve covered how metadata is important in training AI models, let’s walk through an example that shows the difference of having metadata in your training dataset on your computer. We’ll be doing this through a Docker container:

We created a Dockerfile and ai_metadata_demo.py as below:

Dockerfile

FROM python:3.9-slim WORKDIR /app COPY ai_metadata_demo.py RUN pip install --no-cache-dir pandas numpy scikit-learn CMD ["python", "ai_metadata_demo.py"]

Python script: ai_metadata_demo.py

import pandas as pd

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

# Sample dataset of product reviews

raw_reviews = [

"I loved the product! It works great and arrived on time.",

"Terrible service, the product broke after a day.",

"Excellent quality and fast delivery.",

"The item was okay, but packaging was poor.",

"Worst experience ever. Totally disappointed.",

"Really satisfied with the product and the support.",

"Not what I expected, will not buy again.",

"Great value for money!",

]

# Corresponding labels (1 for positive, 0 for negative)

labels = [1, 0, 1, 0, 0, 1, 0, 1]

# Additional metadata for each review

metadata_features = [

{"verified": 1, "length": len(raw_reviews[0]), "rating": 5},

{"verified": 0, "length": len(raw_reviews[1]), "rating": 1},

{"verified": 1, "length": len(raw_reviews[2]), "rating": 5},

{"verified": 1, "length": len(raw_reviews[3]), "rating": 3},

{"verified": 0, "length": len(raw_reviews[4]), "rating": 1},

{"verified": 1, "length": len(raw_reviews[5]), "rating": 4},

{"verified": 0, "length": len(raw_reviews[6]), "rating": 2},

{"verified": 1, "length": len(raw_reviews[7]), "rating": 5},

]

# Convert metadata to DataFrame

metadata_df = pd.DataFrame(metadata_features)

# Vectorize raw text

vectorizer = CountVectorizer()

X_text = vectorizer.fit_transform(raw_reviews)

# Model WITHOUT metadata

X_train_no_meta, X_test_no_meta, y_train, y_test = train_test_split(X_text, labels, test_size=0.25, random_state=42)

model_no_meta = LogisticRegression()

model_no_meta.fit(X_train_no_meta, y_train)

preds_no_meta = model_no_meta.predict(X_test_no_meta)

acc_no_meta = accuracy_score(y_test, preds_no_meta)

# Model WITH metadata

X_text_array = X_text.toarray()

X_combined = np.hstack((X_text_array, metadata_df.values))

X_train_meta, X_test_meta, _, _ = train_test_split(X_combined, labels, test_size=0.25, random_state=42)

model_meta = LogisticRegression()

model_meta.fit(X_train_meta, y_train)

preds_meta = model_meta.predict(X_test_meta)

acc_meta = accuracy_score(y_test, preds_meta)

# Display results

print(f"Accuracy WITHOUT metadata: {acc_no_meta:.2f}")

print(f"Accuracy WITH metadata: {acc_meta:.2f}")Run the Docker Command

docker build -t metadata-demo . docker run metadata-demo

From the code above, we trained a simple logistic regression model on a small sentiment analysis dataset, once using only raw text, and once with additional metadata. Although this isn’t a deep neural network, the demo tells us that adding relevant metadata significantly improves model accuracy. With metadata like verification status, review length, and product rating, the model gains better context, allowing it to make more informed predictions:

- Accuracy WITHOUT metadata: 0.50

- Accuracy WITH metadata: 1.00

FAQs About AI Metadata

What is the difference between data and metadata in AI systems?

Data refers to the actual content being analyzed - like text, images, or numerical values. Metadata is "data about data" - information that describes, categorizes and provides context for the primary data. For example, in a customer review dataset, the review text is data while the timestamp, user ID, and product category would be metadata.

How does metadata improve AI model accuracy?

Metadata improves AI model accuracy by providing crucial context that helps models better interpret patterns in the data. It enables models to segment and categorize information more effectively, recognize situational variables, and make more nuanced predictions based on additional contextual clues beyond the raw data itself.

Can adding metadata help reduce bias in AI models?

Yes, metadata can help reduce bias by providing transparency about data sources, collection methods, and demographic representation. With proper metadata, teams can identify and address imbalances in training data, track data lineage to understand potential sources of bias, and implement more effective fairness testing across different data segments.

What tools can help manage metadata at scale for AI projects?

Several tools can help manage metadata at scale, including DataHub and Apache Atlas as mentioned earlier. Other options include MLflow for experiment tracking, DVC (Data Version Control) for dataset versioning, Great Expectations for data validation, and cloud-native services like AWS Glue Data Catalog or Google DataCatalog for enterprise-scale metadata management.

How does metadata help with AI model troubleshooting and debugging?

Metadata creates a traceable history of model development by documenting training parameters, data versions, and performance metrics. When models underperform, this information helps pinpoint whether issues stem from data quality problems, suboptimal hyperparameters, or implementation errors. This comprehensive context dramatically reduces debugging time and enables more systematic problem-solving.

Is it possible to add metadata to an existing AI dataset retroactively?

Yes, it's possible to add metadata retroactively to existing datasets, though it may require additional effort. This process often involves data enrichment through external sources, manual annotation, or automated inference techniques. While not ideal compared to capturing metadata during initial data collection, retroactive enrichment can still significantly improve model performance.

How does metadata impact training time and computational resources?

Metadata can both reduce and optimize resource usage. It helps identify redundant or low-value data that can be excluded from training, enables more efficient feature selection, and supports better hyperparameter initialization. These improvements lead to faster convergence during training, more efficient use of computational resources, and ultimately lower costs for AI development.

Effectively Utilizing Metadata for AI Models

Metadata is quickly becoming a critical enabler of efficient, scalable AI systems. While model architectures and compute power often take the spotlight, it's metadata that brings structure, clarity, and control to the training process. As we've explored in this article:

- What metadata is and why it matters for AI

- How different types of metadata (tags, lineage, glossary) provide essential context

- How metadata improves training efficiency, accuracy, and resource use

- A working demo showing real performance gains from using metadata

Hopefully at this point you understand that not utilizing metadata to its fullest potential is a big mistake to make. Visit our blog to read Common Machine Learning and Deep Learning Mistakes and Limitations to Avoid. As AI continues to evolve, those enriched data will be better equipped to build smarter, faster, and more responsible models, shifting from reactive development to intentional, well-informed AI engineering.

.png)

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now