How LoRA Makes AI Fine-Tuning Faster, Cheaper, and More Practical

Introduction

Access to extreme-performance hardware is not always feasible. You may not have the hardware needed to fully fine-tune a foundation LLM like DeepSeek R1 or Llama 3. Or your organization may have trained a model on its main computing infrastructure, where running fine-tuning jobs would interrupt new projects.

LoRA, or Low-Rank Adaptation, is a practical method for fine-tuning large language models without needing to retrain all of their parameters. Instead of relying on large clusters or high-end GPUs, LoRA makes model customization accessible to teams with modest hardware like your Exxact GPU workstation or an Exxact GPU server.

This guide explains how LoRA works, what makes it practical, and how it fits into real-world AI development—especially when paired with hardware designed to support efficient training workflows.

LoRA Fine-Tuning and How LoRA Works

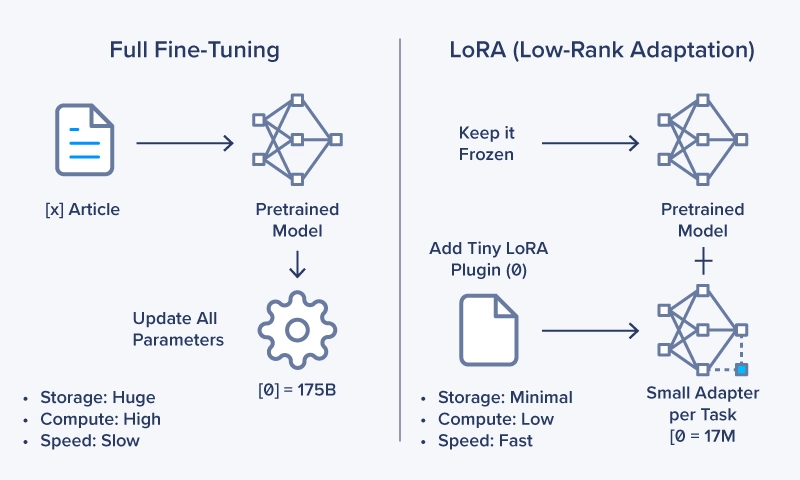

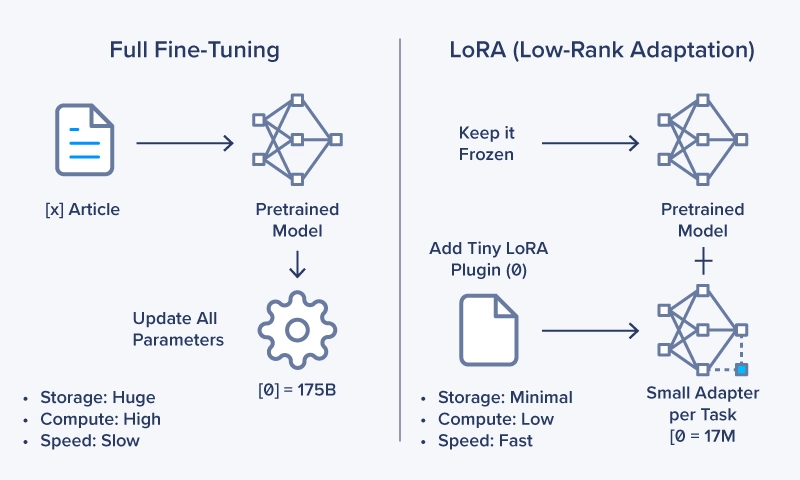

LoRA (Low-Rank Adaptation) is a technique that fine-tunes large language models efficiently by updating only a small number of parameters instead of retraining the entire model.

Model customization is now accessible to teams without full-scale compute infrastructure. Practitioners, organizations, or departments can fine-tune foundation models for their specific use cases without requiring large-scale computing resources.

LoRA works by freezing the original model weights and training only a small set of additional low-rank matrices. This approach keeps the base model intact while adapting it to new data or tasks.

- It reduces trainable parameters to a small fraction of the full model, commonly 1–2% or less depending on the adapter rank and which layers are adapted.

- It lowers GPU memory requirements because most of the model remains unchanged during training.

- It shortens fine-tuning time since you only optimize lightweight components.

For comparison, instead of updating all 100 billion parameters of a large model, LoRA might train only a few million to a few hundred million, depending on the adapter rank and which layers it targets. This leads to faster, more efficient training, usually with little loss in output quality.
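
The mechanism described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the names `W`, `A`, `B`, `alpha`, and `r` follow common LoRA notation and are assumptions, not something defined in this guide, and the toy sizes are chosen only for readability. The frozen weight `W` is never modified; only the small matrices `A` and `B` would be trained.

```python
import random

def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def lora_forward(W, A, B, x, alpha, r):
    """Frozen base output W @ x plus the scaled low-rank update B @ (A @ x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

def lora_param_counts(d_out, d_in, r):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)

random.seed(0)
d_out, d_in, r, alpha = 6, 8, 2, 4  # toy sizes, for illustration only
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable, r x d_in
B = [[0.0] * r for _ in range(d_out)]                                  # trainable, d_out x r, starts at zero
x = [random.gauss(0, 1) for _ in range(d_in)]

# With B at zero the adapter contributes nothing, so training starts from the base model.
assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)

# One 4096 x 4096 projection with a rank-8 adapter (hypothetical sizes).
full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For that single matrix, the adapter holds well under 1% of the parameters that full fine-tuning would update. The overall fraction for a whole model depends on the rank and on how many matrices receive adapters.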

How LoRA Helps Your AI Workloads

LoRA directly benefits teams that need to customize models for specific use cases without the overhead of traditional fine-tuning. It provides practical advantages that go beyond what prompt engineering or full fine-tuning can offer.

- Lower Compute Requirements: VRAM usage drops because the majority of parameters in the base model stay frozen, so no gradients or optimizer states are stored for them. Fine-tuning can then run in roughly an eighth or even a sixteenth of the memory that full fine-tuning requires. Teams can fine-tune large models without scaling out to expensive clusters or tying up their main computing infrastructure.

- Faster Fine-Tuning Cycles: Training runs complete faster since gradients and optimizer updates are computed for far fewer parameters. This allows teams to run more experiments in the same amount of time and update models more frequently.

- Easier Model Customization: You can create multiple lightweight and easy-to-store LoRA adapters tailored for different tasks, including domain-specific assistants and tuned customer support chatbots.

- Lower Total Cost of Ownership: Training fewer parameters reduces GPU hours and electricity usage. Smaller training jobs lower operational costs and make budgeting more predictable. Reduced compute demands help teams avoid overprovisioning hardware.

- Scalable and Maintainable Workflow: LoRA adapters are modular and easy to version control. Teams can maintain multiple task-specific adapters without retraining base models. Workflows stay cleaner because each adapter targets a single task or dataset.
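
To give a rough sense of why adapters are described as lightweight and easy to store, the sketch below estimates the size of one adapter. The model shape (32 layers, hidden size 4096, adapters on two square projections per layer, rank 8) is a hypothetical 7B-class configuration chosen for illustration, and the function names are made up for this example.

```python
def lora_adapter_params(num_layers, hidden_size, rank, matrices_per_layer):
    """Trainable parameters when `matrices_per_layer` square (hidden x hidden)
    projections in every layer each receive a rank-`rank` adapter."""
    per_matrix = rank * (hidden_size + hidden_size)  # A is r x d, B is d x r
    return num_layers * matrices_per_layer * per_matrix

def size_mib(num_params, bytes_per_param=2):
    """Storage in MiB, assuming 16-bit (2-byte) adapter weights by default."""
    return num_params * bytes_per_param / 2**20

# Hypothetical 7B-class shape; real models differ in layer count and projection sizes.
params = lora_adapter_params(num_layers=32, hidden_size=4096, rank=8, matrices_per_layer=2)
print(params, size_mib(params))  # 4194304 8.0
```

Under these assumptions an adapter is about 8 MiB, compared with roughly 14 GB for 7 billion base-model weights stored at 16 bits. That gap is what makes it practical to keep one adapter per task under version control.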

Fine-Tuning GPU Requirements

The following table provides general VRAM estimates for fine-tuning large language models (LLMs) with full fine-tuning, LoRA, and QLoRA, using a 32-billion-parameter (32B) model as the benchmark:

| Fine-Tuning Method | VRAM per 1B Parameters (Approx.) | 32B Model Total VRAM (Approx.) | Example GPUs |
|---|---|---|---|
| Full Fine-Tuning | ~16 GB | ~512 GB | 4x H200 NVL (141GB) or 8x RTX PRO 6000 (96GB) |
| LoRA (FP16/BF16) | ~2 GB (for model) + overhead | ~64 GB | 2x NVIDIA RTX 5090 (32GB) or 1x RTX PRO 6000 (96GB) |
| QLoRA (4-bit) | ~0.5 GB (for model) + overhead | ~16 GB | 1x NVIDIA RTX 5080 (16GB) or 1x RTX PRO 4500 (24GB) |
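
The totals in the table follow directly from the per-billion-parameter figures. The short sketch below reproduces that arithmetic so it can be rerun for other model sizes. The dictionary keys and function name are illustrative, and the results exclude the "+ overhead" noted in the table.

```python
# Per-billion-parameter estimates copied from the table (GB, before overhead).
VRAM_GB_PER_1B = {"full": 16.0, "lora": 2.0, "qlora": 0.5}

def estimate_vram_gb(params_billion, method):
    """Scale the per-1B estimate linearly with model size."""
    return params_billion * VRAM_GB_PER_1B[method]

for method in VRAM_GB_PER_1B:
    print(method, estimate_vram_gb(32, method))
# full 512.0
# lora 64.0
# qlora 16.0
```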

Key factors affecting GPU requirements:

- Model Size: The primary factor. Larger models inherently require more VRAM.

- Precision: Each step down in precision roughly halves the memory needed for model weights: FP32 uses 4 bytes per parameter, BF16/FP16 uses 2, and INT8/FP8 uses 1. QLoRA goes further and quantizes the frozen base model to 4-bit, drastically reducing memory usage at the expense of numerical precision. Depending on the model and task, this may or may not hurt output quality.

- Optimizers: The AdamW optimizer is common but stores two additional state values for every trainable parameter, which increases VRAM usage. Because LoRA trains so few parameters, switching to a simpler optimizer like SGD saves only a small amount of VRAM.

- Gradient Checkpointing: This optimization technique reduces VRAM usage by recomputing some activations during backpropagation instead of storing them, though it slightly increases training time.

- Batch Size and Sequence Length: Larger batch sizes and longer sequence lengths (prompt contexts) demand more VRAM and longer computations.
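
Two of the factors above, precision and optimizer state, can be put into rough numbers. The sketch below is a back-of-the-envelope estimate under stated assumptions: 1 GB is taken as 10^9 bytes, AdamW states are assumed to be stored in FP32, and activations, gradients, and framework overhead are ignored. The 20-million-parameter adapter is a hypothetical example.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1, "fp8": 1, "4bit": 0.5}

def weights_gb(params_billion, precision):
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]

def adamw_state_gb(trainable_params_billion, state_bytes=4):
    """AdamW keeps two extra values (first and second moment) per trainable
    parameter; they are assumed here to be stored in FP32 (4 bytes each)."""
    return trainable_params_billion * 2 * state_bytes

# Weights of a 7B model at different precisions.
for precision in ("fp32", "bf16", "int8", "4bit"):
    print(precision, weights_gb(7, precision))

# Optimizer state: all 7B parameters trainable vs. a hypothetical 20M-parameter adapter.
print(adamw_state_gb(7))     # full fine-tuning
print(adamw_state_gb(0.02))  # LoRA adapter only
```

Under these assumptions the optimizer state shrinks from tens of gigabytes to a fraction of one, which is why the choice of optimizer matters much less once the base model is frozen.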

Practical GPU Recommendations

- Up to 7B parameters (using QLoRA): GPUs with 8GB or 12GB VRAM may work, though they require very small batch sizes and longer training times. Larger VRAM provides more flexibility for experimentation for both LoRA and QLoRA.

- Up to 32B–70B parameters: Exxact GPU Workstations with multi-GPU setups provide ample VRAM. With 2x NVIDIA RTX PRO 6000 Blackwell GPUs (96GB VRAM each, 192GB total), you can effectively load and LoRA-tune models up to 70B parameters.

- For larger models (70B+ parameters): Professional-grade multi-GPU deployments are typically needed. This is where newer GPUs shine: an Exxact GPU Workstation or Server with 4x NVIDIA RTX PRO 6000 Blackwell GPUs delivers 384GB of VRAM for models up to 140B parameters. Larger models can scale to 8x GPUs in a single 4U node.

By using techniques like LoRA and QLoRA, fine-tuning large models that once required expensive multi-GPU data center setups is now feasible on affordable single-GPU, multi-GPU, or single-node configurations.
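
As a quick sanity check on the multi-GPU recommendations above, the sketch below compares the LoRA weight footprint with the combined VRAM of each configuration. It assumes the figure of roughly 2 GB per billion parameters for LoRA at 16-bit precision, and it treats VRAM as a single pool, which in practice requires sharding the model across GPUs. The function name is illustrative.

```python
def vram_headroom(params_billion, gb_per_1b, num_gpus, vram_per_gpu_gb):
    """Return (required_gb, total_gb, fraction of total VRAM left over)."""
    required = params_billion * gb_per_1b
    total = num_gpus * vram_per_gpu_gb
    return required, total, (total - required) / total

# 70B model on 2x 96GB GPUs, and 140B model on 4x 96GB GPUs.
for params_b, gpus in ((70, 2), (140, 4)):
    required, total, spare = vram_headroom(params_b, 2.0, gpus, 96)
    print(f"{params_b}B on {gpus}x96GB: {required:.0f} of {total} GB, {spare:.0%} spare")
# 70B on 2x96GB: 140 of 192 GB, 27% spare
# 140B on 4x96GB: 280 of 384 GB, 27% spare
```

Both configurations leave about a quarter of the total VRAM for adapters, activations, and optimizer state. How far that stretches depends on batch size and sequence length.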

Frequently Asked Questions about LoRA

What is LoRA and why is it important?

LoRA (Low-Rank Adaptation) fine-tunes large language models by updating only a small fraction of parameters, dramatically reducing compute requirements, memory use, and training time. It makes model customization accessible without hyperscaler-level hardware or massive infrastructure costs.

How does LoRA reduce fine-tuning costs?

Traditional fine-tuning updates billions of parameters, requiring significant GPU memory and expense. LoRA freezes the original model and trains only small low-rank matrices, using far less VRAM and fewer GPU hours—directly lowering total cost of ownership.

How does LoRA improve enterprise AI workflows?

LoRA enables rapid experimentation by creating lightweight adapters for different tasks. You can swap adapters without retraining the base model, resulting in faster iteration, version-controlled deployment, and minimal inference overhead.
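
One common way to keep inference overhead low, which this guide does not describe, is to fold a trained adapter back into the base weights before deployment. The sketch below shows that the merged matrix gives the same output as the base plus adapter path. The notation (`W`, `A`, `B`, `alpha`, `r`) and the tiny hand-picked values are assumptions for illustration.

```python
import math

def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*Y))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in X]

def merge_adapter(W, A, B, alpha, r):
    """Return W + (alpha / r) * (B @ A) as a new matrix; W itself is left untouched."""
    scale = alpha / r
    BA = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, BA)]

W = [[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]]  # frozen base weight, 2 x 3
A = [[0.5, -0.5, 1.0]]                   # adapter, r x 3 with r = 1
B = [[2.0], [-1.0]]                      # adapter, 2 x r
x = [1.0, 2.0, 3.0]
alpha, r = 2, 1

# Unmerged path: base output plus the scaled adapter output.
unmerged = [b + (alpha / r) * u for b, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]
# Merged path: a single matrix multiply, the same cost as the original model.
merged = matvec(merge_adapter(W, A, B, alpha, r), x)

assert all(math.isclose(u, m) for u, m in zip(unmerged, merged))
print(merged)  # [15.0, -6.0]
```

Keeping adapters unmerged makes swapping between tasks easier, while merging removes the extra matrix multiplies at inference time. Which to use depends on how often the deployed task changes.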

Is LoRA compatible with my existing GPUs?

Yes. LoRA works efficiently on mid-range and workstation-grade GPUs, not just cutting-edge clusters. Exxact's AI workstations and servers are optimized for LoRA workloads, offering the right balance of GPU memory, compute density, and thermal stability.

Why use LoRA instead of full fine-tuning or prompt engineering?

Prompt engineering is fast but limited. Full fine-tuning is powerful but expensive. LoRA delivers near-full fine-tuning quality at a fraction of the compute cost, with reusable lightweight adapters—making it ideal for enterprises seeking customized AI without overspending.

Conclusion

LoRA provides a practical way to fine-tune large language models without the heavy compute demands of traditional methods. It lowers training costs, shortens iteration cycles, and gives teams the ability to build domain-specific AI with predictable hardware requirements.

When paired with Exxact hardware, LoRA becomes even more accessible. Exxact systems offer the GPU performance, stability, and flexibility needed to run LoRA efficiently, whether teams are tuning a 7B model on a workstation or supporting larger workloads on multi-GPU servers.

For organizations looking to customize AI without overspending, LoRA delivers a direct path forward. With the right hardware foundation, you can build faster, deploy smarter, and scale your AI projects with confidence.

