Introduction

With pre-trained models now widely available, companies no longer need to build models from scratch. Whether it’s image recognition, natural language processing, or recommendation systems, there’s likely a pre-trained model already available for your use case.

Neural networks learn by adjusting their weights through backpropagation and gradient descent. During training, the model calculates its prediction error, computes gradients to see how each weight contributed to that error, and updates the weights to reduce it. The challenge with a pre-trained model is adapting it to your specific needs without discarding what it has already learned. That’s where freezing layers in transfer learning comes in.
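As a rough illustration of that loop, here is a minimal sketch of gradient descent on a single linear neuron in plain Python. The function name `training_step`, the learning rate, and the toy data point are assumptions chosen for the example, not part of any library.

```python
# One gradient-descent step for a single linear neuron: prediction = w * x + b.
def training_step(w, b, x, y_true, lr=0.1):
    y_pred = w * x + b           # forward pass
    error = y_pred - y_true      # prediction error
    loss = error ** 2            # squared-error loss
    grad_w = 2 * error * x       # how much w contributed to the loss
    grad_b = 2 * error           # how much b contributed to the loss
    # Move each weight a small step against its gradient.
    return w - lr * grad_w, b - lr * grad_b, loss


w, b = 0.0, 0.0
losses = []
for _ in range(20):
    w, b, loss = training_step(w, b, x=1.0, y_true=2.0)
    losses.append(loss)

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.8f}")
```

Each step shrinks the error, so the recorded loss falls from one iteration to the next. Freezing a layer amounts to skipping the update line for that layer's weights.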

What is Freezing Layers in Deep Learning and Transfer Learning?

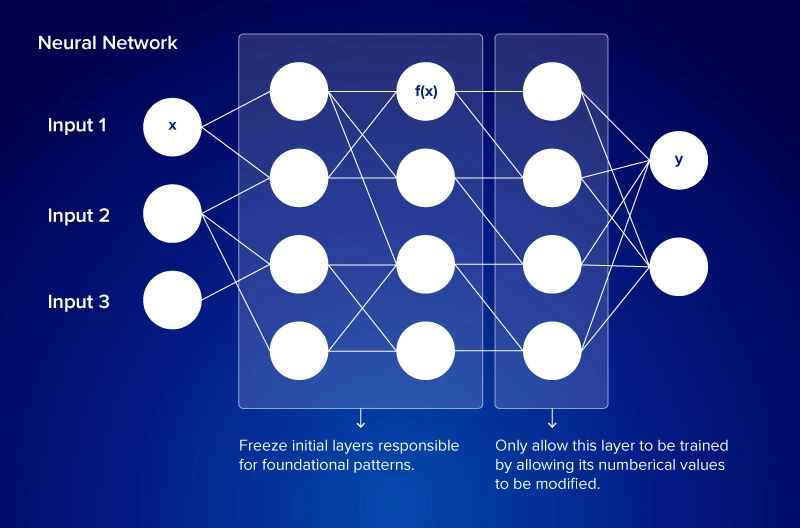

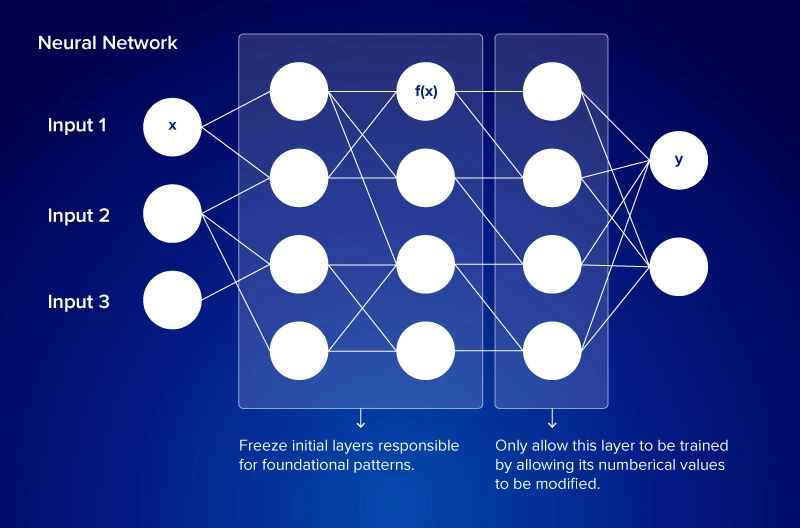

Freezing a layer means preventing its weights from being updated during training, most commonly during transfer learning. Freezing layers lets you retain the useful features learned by the original model while fine-tuning the parts that need to adapt to the new use case.

Instead of training a model from the ground up, use transfer learning with frozen layers. Transfer learning is a process where a model pre-trained on one task is repurposed and fine-tuned for a related but different task. Some layers of the pre-trained model are frozen while the remaining parameters are fine-tuned, which cuts training time, reduces data requirements, and often improves accuracy when task-specific data is limited.

When a layer is frozen, data still flows through it in the forward pass, but in the backward pass the model skips weight updates for that layer:

- Its weights stay fixed.

- No weight gradients are calculated for it, although gradients can still pass through it to reach trainable layers earlier in the network.

- It stops learning from new training data.
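The behavior described above can be sketched with a toy two-layer network in plain Python. Everything here is an assumption made for illustration: the scalar "layers" `w1` and `w2`, the `trainable` dictionary, and the toy data where the target is three times the input.

```python
def forward(w1, w2, x):
    h = w1 * x           # layer 1: frozen in this example
    return h, w2 * h     # layer 2: trainable


def train(w1, w2, data, trainable, lr=0.05, epochs=50):
    for _ in range(epochs):
        for x, y in data:
            h, y_pred = forward(w1, w2, x)   # data still flows through layer 1
            error = y_pred - y
            if trainable["w2"]:
                w2 -= lr * 2 * error * h     # gradient of the squared error w.r.t. w2
            if trainable["w1"]:
                # Skipped entirely when layer 1 is frozen: no gradient, no update.
                w1 -= lr * 2 * error * w2 * x
    return w1, w2


data = [(1.0, 3.0), (2.0, 6.0)]      # toy task: y = 3 * x
w1_start, w2_start = 1.5, 0.5        # pretend w1 came from pre-training
w1_end, w2_end = train(w1_start, w2_start, data,
                       trainable={"w1": False, "w2": True})

print(f"w1: {w1_start} -> {w1_end}   w2: {w2_start} -> {w2_end:.4f}")
```

The frozen weight ends exactly where it started, while the trainable weight moves until the product `w1 * w2` matches the target slope of 3.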

Which layers should you freeze and when?

When Should You Freeze Layers in Fine-Tuning?

You should freeze layers when you want to reduce training time, save compute resources, and reuse the general features of a model without having to train from scratch. This is especially effective if your dataset is small or your hardware resources are limited.

For example, an e-commerce company building a model to summarize customer reviews could fine-tune a pre-trained language model like LLaMA, BART, or GPT. By freezing the early layers (which capture universal language patterns) and only training the later layers (which adapt to review-specific language), the team saves time while maintaining accuracy.
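One way to express such a plan is a small helper that decides, from a parameter's name, whether it should keep training. The sketch below is plain Python, and the naming scheme (`embeddings.*`, `blocks.<index>.*`, `head.*`) is hypothetical; real models use their own parameter names, so the patterns would need adjusting.

```python
import re


def is_trainable(param_name, freeze_below):
    """Return True if the parameter should keep training.

    Assumes hypothetical names of the form 'embeddings.*',
    'blocks.<index>.*', and 'head.*'.
    """
    if param_name.startswith("embeddings."):
        return False                                # embeddings stay frozen
    match = re.match(r"blocks\.(\d+)\.", param_name)
    if match:
        return int(match.group(1)) >= freeze_below  # freeze the lower blocks only
    return True                                     # task head and anything else trains


names = [
    "embeddings.token.weight",
    "blocks.0.attn.weight",
    "blocks.5.mlp.weight",
    "blocks.11.attn.weight",
    "head.weight",
]
plan = {name: is_trainable(name, freeze_below=8) for name in names}
for name, flag in plan.items():
    print(f"{name:28s} trainable={flag}")
```

In PyTorch, a plan like this could be applied by looping over `model.named_parameters()` and setting each parameter's `requires_grad` attribute to the returned flag.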

Key advantages of freezing layers:

- Lower compute costs: fewer layers to update means reduced GPU and memory usage

- Faster training: shortens the backpropagation cycle

- Better generalization: keeps the model’s broad language knowledge intact

How to Freeze Layers in Deep Learning (Keras Code Example)

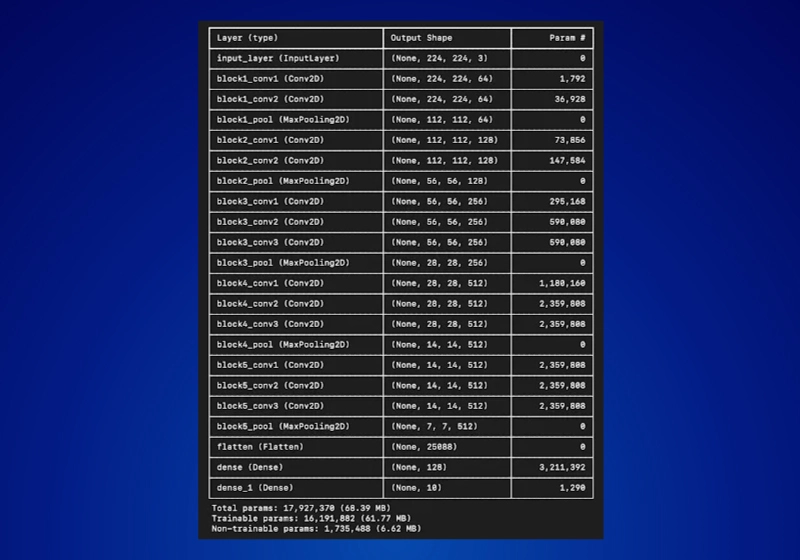

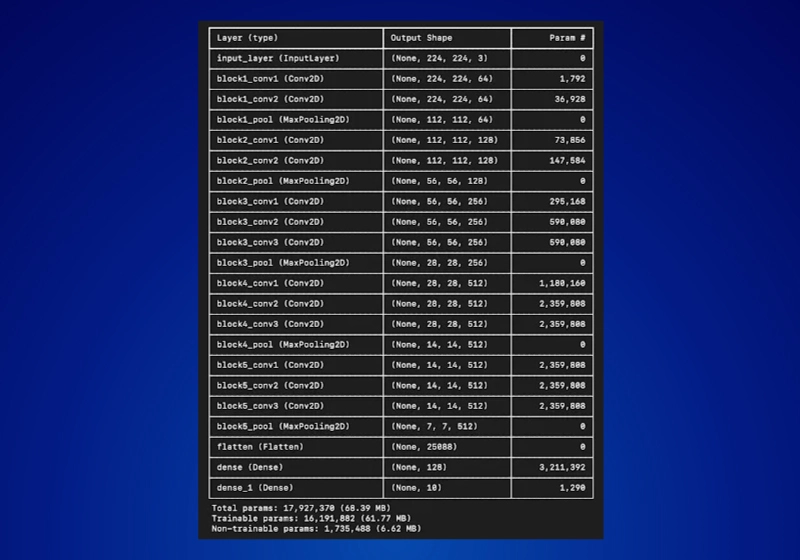

Freezing layers in deep learning is straightforward with Keras. Below is a complete example using VGG16, a CNN for image classification, along with a Docker setup so you can run it in a containerized environment for reproducibility.

Preface - Review What Each Layer Learns

Knowing what different layers in a neural network learn is crucial for deciding which layers to freeze during fine-tuning. In a convolutional neural network (CNN), commonly used for image classification, layers follow a hierarchical structure:

| Layer Type | What It Learns | Visual Example |
| --- | --- | --- |
| Early (Conv1) | Edges, gradients, simple blobs | Vertical/horizontal lines |
| Mid (Conv2-3) | Shapes, textures, corners | Circles, grids |
| Late (Dense) | Complex patterns, object parts | Eyes, faces, buildings |

This knowledge comes from two visualization techniques:

- Gradient ascent visualizations: Generate input images that strongly activate a neuron to understand what features it looks for.

- Activation heatmaps: Show where and how strongly an input activates each layer.

Once you understand this hierarchy, inspect the actual architecture of your model to decide which layers to freeze. Reviewing the structure helps identify which blocks capture low-level vs. high-level features, making selective freezing more effective. In PyTorch, the named_children() method lists each top-level block of a model; in Keras, model.summary() or iterating over model.layers gives the same overview.

    # model is assumed to be an existing torch.nn.Module
    for name, layer in model.named_children():
        print(name)

Step 1: Create Project Files

In your working directory, create three files:

- Dockerfile

- requirements.txt

- freeze_layers_example.py

Dockerfile

    # Use an official Python base image
    FROM python:3.10-slim

    # Set working directory
    WORKDIR /app

    # Install system dependencies
    # libgl1 replaces libgl1-mesa-glx, a transitional package dropped from newer Debian releases
    RUN apt-get update && apt-get install -y \
        build-essential \
        libgl1 \
        && rm -rf /var/lib/apt/lists/*

    # Copy requirements and install dependencies
    COPY requirements.txt ./
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy training script
    COPY freeze_layers_example.py ./

    # Run the script
    CMD ["python", "freeze_layers_example.py"]

requirements.txt

    tensorflow
    matplotlib

freeze_layers_example.py

    from tensorflow.keras.applications import VGG16
    from tensorflow.keras.models import Model
    from tensorflow.keras.layers import Dense, Flatten
    from tensorflow.keras import Input

    # Load pre-trained model without the top (classification) layers
    base_model = VGG16(weights='imagenet', include_top=False, input_tensor=Input(shape=(224, 224, 3)))

    # Freeze the first 10 layers (the slice also counts the input and pooling layers)
    for layer in base_model.layers[:10]:
        layer.trainable = False

    # Add custom layers: a small classifier head for 10 classes
    x = Flatten()(base_model.output)
    x = Dense(128, activation='relu')(x)
    output = Dense(10, activation='softmax')(x)

    model = Model(inputs=base_model.input, outputs=output)

    # Compile the model (categorical_crossentropy expects one-hot encoded labels)
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

    # Print model summary, including trainable vs. non-trainable parameter counts
    model.summary()

Step 2: Build and Run the Docker Container

Run the following commands in your terminal:

    docker build -t freezing-layers .
    docker run freezing-layers

This workflow freezes the first 10 layers of VGG16, adds custom dense layers, and prepares the model for fine-tuning.

The example above shows how to load a pre-trained model (VGG16), freeze selected layers, and prepare the rest for fine-tuning on a custom dataset. The script stops at model.summary(); training itself would follow with a call such as model.fit() on your own data. This simple workflow demonstrates a core principle of transfer learning: you don’t need to retrain the entire model to achieve strong results.

- Freeze specific layers: We froze the first 10 layers of the VGG16 base, which Keras lists as the input layer, the first two convolutional blocks, and the three convolutions of the third block. These capture general features like edges and textures. Setting layer.trainable = False preserves these features during training.

- Add custom layers: A flatten layer, dense hidden layer, and output classification layer were added. These remain trainable, adapting the model to the new dataset.

- Compile the model: Compiling after setting the trainable flags ensures the optimizer updates only the unfrozen layers during training. If you change which layers are frozen later, compile again so the change takes effect.
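To see what the slice `base_model.layers[:10]` actually covers, the sketch below rebuilds the layer list of the VGG16 convolutional base in plain Python and counts convolution parameters on each side of the cut. It assumes the standard VGG16 layout (3x3 convolutions with 64, 128, 256, 512, and 512 channels per block) and the layer order Keras reports for `include_top=False`. The helper names are made up for this example, and the input layer's exact name varies between Keras versions.

```python
# (layer name, output channels); None marks layers that hold no weights.
VGG16_BASE = [
    ("input", None),
    ("block1_conv1", 64), ("block1_conv2", 64), ("block1_pool", None),
    ("block2_conv1", 128), ("block2_conv2", 128), ("block2_pool", None),
    ("block3_conv1", 256), ("block3_conv2", 256), ("block3_conv3", 256),
    ("block3_pool", None),
    ("block4_conv1", 512), ("block4_conv2", 512), ("block4_conv3", 512),
    ("block4_pool", None),
    ("block5_conv1", 512), ("block5_conv2", 512), ("block5_conv3", 512),
    ("block5_pool", None),
]


def conv_params(in_channels, out_channels, kernel=3):
    # One kernel x kernel filter per input channel, plus one bias, per output channel.
    return (kernel * kernel * in_channels + 1) * out_channels


def summarize(layers, n_frozen, in_channels=3):
    frozen_convs = frozen_params = trainable_params = 0
    for index, (name, out_channels) in enumerate(layers):
        if out_channels is None:
            continue                      # input and pooling layers have no weights
        count = conv_params(in_channels, out_channels)
        if index < n_frozen:
            frozen_convs += 1
            frozen_params += count
        else:
            trainable_params += count
        in_channels = out_channels
    return frozen_convs, frozen_params, trainable_params


frozen_convs, frozen_params, trainable_params = summarize(VGG16_BASE, n_frozen=10)
print(f"frozen conv layers:  {frozen_convs}")
print(f"frozen parameters:   {frozen_params:,}")
print(f"trainable (base):    {trainable_params:,}")
```

Under these assumptions the first 10 layers contain 7 convolutional layers, and most of the base's parameters sit in the later, still-trainable blocks. Moving the cut deeper is what produces larger compute savings.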

Common Pitfalls of Freezing Layers

Freezing layers speeds up fine-tuning, but mistakes in how you apply it can hurt model accuracy. Here are the most common pitfalls to avoid:

- Freezing too many layers – Locking most of the network, especially higher layers, prevents adaptation to the new task. This often causes underfitting.

- Freezing too few layers on small datasets – Leaving most layers trainable with limited data leads to overfitting, where the model memorizes training examples but fails on new inputs.

- Choosing the wrong layers – Freezing task-specific layers reduces transferability. Always distinguish between general-purpose features (edges, shapes) and task-specific features (object parts, domain-specific patterns).

- Input mismatch with pre-trained models – Pre-trained models like VGG16 or ResNet expect specific preprocessing (e.g., normalization or preprocess_input). Skipping this causes poor feature alignment and weak results.
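To make the last pitfall concrete, here is a plain-Python sketch of the kind of transformation a VGG-style preprocessing step applies to one pixel. It assumes the behavior commonly documented for `tf.keras.applications.vgg16.preprocess_input` (RGB reordered to BGR, then per-channel ImageNet means subtracted, with no rescaling). Treat the constants as an assumption and confirm them against the Keras documentation before relying on them.

```python
# Assumed per-channel ImageNet means in BGR order (no division by 255).
IMAGENET_BGR_MEAN = (103.939, 116.779, 123.68)


def vgg_style_preprocess(rgb_pixel):
    r, g, b = rgb_pixel
    bgr = (b, g, r)                                   # reorder channels
    return tuple(float(c) - m for c, m in zip(bgr, IMAGENET_BGR_MEAN))


raw = (255, 0, 0)                                     # a pure red pixel
prepared = vgg_style_preprocess(raw)
naive = tuple(c / 255.0 for c in raw)                 # a common but mismatched scaling

print("expected by the model:", prepared)
print("naive 0-1 scaling:    ", naive)
```

The two results differ in both range and channel order, which is why features from the frozen layers degrade when the wrong preprocessing is used. In a real pipeline, call the library's own `preprocess_input` function for the model rather than a hand-written version.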

Best Practices of Freezing Layers

To get the most out of layer freezing while avoiding performance issues, follow these best practices:

- Start by freezing early layers – They capture general features (edges, textures) that transfer well across tasks.

- Gradually unfreeze if needed – If accuracy stalls, progressively unfreeze middle or higher layers to let the model adapt.

- Match preprocessing to the pre-trained model – Use the same normalization, tokenization, or image preprocessing applied during the model’s original training.

- Experiment with partial freezing – In CNNs, try freezing the early convolutional blocks while fine-tuning the later blocks and dense layers. In transformers, freeze the embeddings and lower blocks and fine-tune the higher blocks.

- Monitor validation performance – Watch for overfitting or underfitting as you adjust which layers are frozen.
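Gradual unfreezing can be planned ahead as a simple schedule. The sketch below, in plain Python, produces one list of trainable flags per training stage. The function name `unfreeze_schedule` and its parameters are illustrative assumptions, not a library API.

```python
def unfreeze_schedule(num_layers, initially_frozen, step, stages):
    """Return one list of trainable flags (one flag per layer) for each stage."""
    plans = []
    frozen = initially_frozen
    for _ in range(stages):
        # Layers with an index below the cut stay frozen; the rest train.
        plans.append([index >= frozen for index in range(num_layers)])
        frozen = max(0, frozen - step)    # unfreeze `step` more layers next stage
    return plans


plans = unfreeze_schedule(num_layers=6, initially_frozen=4, step=2, stages=3)
for stage, flags in enumerate(plans):
    print(f"stage {stage}: {flags}")
```

In Keras, each stage would correspond to setting `layer.trainable` from the flags, compiling the model again, and continuing training, typically with a lower learning rate so the newly unfrozen layers change slowly. Validation metrics between stages indicate whether further unfreezing is still helping.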

Conclusion & TLDR

Freezing layers is one of the most practical techniques in transfer learning. By reusing the powerful features learned by pre-trained models and fine-tuning only the layers that matter, you can save training time, reduce compute costs, and still achieve high accuracy.

Whether you’re working with CNNs for image classification or transformer models for language processing, the process for freezing layers is the same:

- Freeze layers that capture general patterns.

- Fine-tune layers that adapt to your specific task.

- Monitor results and adjust by unfreezing additional layers if needed.

Done right, freezing layers turns transfer learning into a powerful shortcut that accelerates model development while keeping accuracy strong.

