Introduction

Scientific discovery has shifted from slow, one-experiment-at-a-time methods to automated, large-scale workflows. Instead of testing compounds or running analyses manually, researchers can now run thousands of experiments in parallel.

High-throughput methods are accelerating progress in drug discovery, genomics, materials science, and more. Massive datasets demand efficient workflows to prevent bottlenecks and wasted resources.

Optimizing your high-throughput scientific workflow is critical for driving breakthroughs and maintaining a competitive edge. In this article, we’ll break down the core challenges of high-throughput research and outline strategies to maximize workflow efficiency.

What is High-Throughput Research?

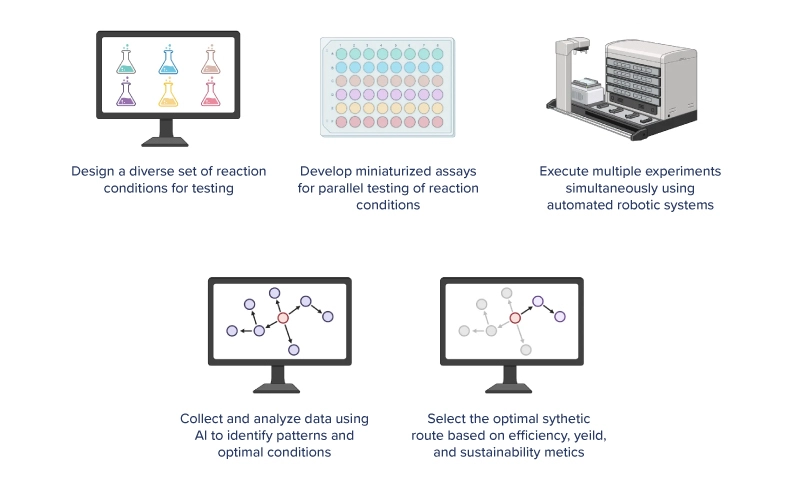

High-throughput research, or high-throughput screening (HTS), uses automation, parallelization, and miniaturization to run large-scale experiments and analyze data rapidly. Instead of processing one sample at a time, researchers can test thousands simultaneously, dramatically accelerating results.
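To make parallelization and miniaturization concrete: screening campaigns typically run on standard microplates with 96, 384, or 1536 wells. The sketch below is a hypothetical helper, not tied to any particular LIMS or liquid handler, that generates well IDs in row-major order and assigns a list of samples across as many plates as needed. It assumes every well holds a sample; real layouts usually reserve wells for positive and negative controls.

```python
import string

# Common microplate formats: total wells -> (rows, columns).
PLATE_FORMATS = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(i: int) -> str:
    """Row label for a 0-based row index: A..Z, then AA, AB, ... (1536-well plates run to AF)."""
    letters = string.ascii_uppercase
    if i < 26:
        return letters[i]
    return letters[i // 26 - 1] + letters[i % 26]


def well_ids(n_wells: int) -> list[str]:
    """All well IDs for a plate format in row-major order (A1, A2, ..., then B1, ...)."""
    rows, cols = PLATE_FORMATS[n_wells]
    return [f"{row_label(r)}{c + 1}" for r in range(rows) for c in range(cols)]


def assign_samples(samples, n_wells: int = 384):
    """Map samples to (plate number, well ID, sample), filling one plate before the next.

    Hypothetical helper: it does not reserve any wells for controls.
    """
    wells = well_ids(n_wells)
    layout = []
    for idx, sample in enumerate(samples):
        plate, well = divmod(idx, n_wells)
        layout.append((plate + 1, wells[well], sample))
    return layout
```

For example, assigning 1,000 compounds to 384-well plates spans three plates, and the last compound lands in well J16 of plate 3.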

Benefits of High-Throughput Research Workflows

- Faster Results, Lower Costs

  - Accelerated discovery: processing more samples in less time shortens research cycles

  - Cost efficiency: optimized workflows cut per-sample costs and reduce labor demands

- Greater Accuracy and Reproducibility

  - Consistent quality: standardized processes improve data reliability

  - Reproducibility: automated, documented workflows make results easier to replicate and validate

- More Time for Innovation

  - Focus on high-value tasks: researchers spend more time on experimental design and interpretation

  - Room for exploration: efficiency gains make it practical to test riskier, high-reward ideas

  - Faster time to market: accelerated discovery and innovation help teams reach the market first

Common Challenges in High-Throughput Research

- Managing Massive Data Volumes: Experiments often generate terabytes—or even petabytes—of data, creating pressure on storage, file transfer and sharing, and computing resources.

- Ensuring Accuracy and Reproducibility: The scale of high-throughput research increases the risk of errors, making consistency vital. Sample preparation, instrument calibration, and quality control protocols all need extra attention.

- Balancing Resources and Time: High-throughput pipelines require both advanced equipment and skilled personnel. Research teams often struggle with:

  - Coordinating complex, multi-step experiments

  - Managing consumables and reagents effectively

  - Training and aligning specialists across disciplines
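One widely used quality-control metric for screening assays is the Z'-factor, which compares the separation between the positive- and negative-control means to their variability: Z' = 1 − 3(σp + σn) / |μp − μn|. A common rule of thumb treats Z' ≥ 0.5 as an excellent assay. The sketch below computes Z' per plate and flags weak plates; the plate dictionary layout and the 0.5 cutoff are assumptions to adapt to your own assay.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor from positive- and negative-control readings on a single plate."""
    separation = abs(mean(positive) - mean(negative))
    if separation == 0:
        raise ValueError("control means are identical, so Z' is undefined")
    # Sample standard deviations of each control group.
    return 1 - 3 * (stdev(positive) + stdev(negative)) / separation


def flag_plates(plates, threshold=0.5):
    """Return IDs of plates whose Z' falls below the threshold.

    `plates` maps a plate ID to a (positive_controls, negative_controls) pair.
    """
    return [pid for pid, (pos, neg) in plates.items() if z_prime(pos, neg) < threshold]
```

Running a check like this automatically on every plate catches drifting instruments or bad reagent lots before their data reaches downstream analysis.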

HPC, GPUs, and AI in High-Throughput Research

High-Performance Computing (HPC) and GPUs provide the backbone that makes high-throughput research feasible at scale:

- Parallel processing – GPUs accelerate AI training and data analysis by handling thousands of calculations simultaneously

- Large-scale simulations – HPC clusters model complex phenomena like molecular interactions, advancing fields such as drug discovery and genomics

- Real-time insights – Streaming data can be analyzed on the fly, allowing experiments to adapt dynamically
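Analyzing streaming data "on the fly" usually means updating summary statistics one reading at a time instead of re-reading the whole dataset. A minimal sketch, assuming readings arrive as a plain sequence of floats, uses Welford's online algorithm for the running mean and variance and flags readings that sit far from the running mean. The `monitor` function, its `warmup` length, and the 3-SD limit are illustrative choices, not part of any specific instrument software.

```python
import math


class RunningStats:
    """Welford's online algorithm: update mean and variance one reading at a time."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def stdev(self) -> float:
        # Sample standard deviation; reported as 0.0 until two readings exist.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0


def monitor(stream, z_limit=3.0, warmup=10):
    """Yield (index, value) for readings more than z_limit SDs from the running mean."""
    stats = RunningStats()
    for i, x in enumerate(stream):
        # Test each reading against the statistics of the readings before it.
        if stats.n >= warmup and stats.stdev > 0:
            if abs(x - stats.mean) / stats.stdev > z_limit:
                yield i, x
        stats.update(x)
```

Because memory use stays constant regardless of stream length, the same pattern works for an instrument that runs for days; a flagged reading could trigger a pause, a re-measurement, or a change in the next batch of conditions.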

The practical impact can be substantial. For instance, GPU acceleration can make genomic sequence alignment up to 50× faster than CPU-only methods, enabling large-scale studies that were once impractical.
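Headline speedups like the 50× figure above apply to the accelerated step, not necessarily to the whole pipeline. Amdahl's law gives the end-to-end effect: if a fraction p of the runtime is sped up by a factor s, the overall speedup is 1 / ((1 − p) + p / s). A small helper makes this easy to check; the 80% runtime share in the usage note is a hypothetical value, not a measurement.

```python
def amdahl_speedup(accelerated_fraction: float, step_speedup: float) -> float:
    """Overall speedup when only part of a workload is accelerated (Amdahl's law)."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if step_speedup <= 0:
        raise ValueError("step_speedup must be positive")
    # Unaccelerated time stays as-is; accelerated time shrinks by step_speedup.
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / step_speedup)
```

For example, if alignment took 80% of a pipeline's runtime and ran 50× faster, `amdahl_speedup(0.8, 50)` gives roughly 4.6× overall, and no alignment speedup could push it past 5×. This is why I/O, preprocessing, and other CPU-bound stages often become the next bottleneck worth optimizing.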

GPU-accelerated platforms can speed up the entire research life cycle, supporting use cases such as AI, automation, and robotics.

AI in High-Throughput Scientific Research

Artificial Intelligence (AI) has become a cornerstone of high-throughput research, enabling faster, smarter, and more efficient workflows. By combining machine learning with advanced data analysis, AI changes both how data is processed and how decisions are made. On the data side, AI is well suited to:

- Detecting patterns and correlations in massive datasets

- Filtering noise to focus on relevant signals

- Generating insights that might be overlooked by human analysis
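AI models are usually benchmarked against a simpler statistical baseline for separating real signals from plate noise. One common classical choice (not an AI method) is robust z-score hit calling: each well is scored against the plate median, scaled by the median absolute deviation (MAD), so a few strong actives do not distort the baseline. The sketch below assumes readings are a dictionary of well ID to value; the 3.0 threshold is an illustrative default.

```python
from statistics import median


def robust_z_scores(values):
    """Robust z-scores using the median and the scaled median absolute deviation (MAD)."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        raise ValueError("MAD is zero, so robust z-scores are undefined")
    scale = 1.4826 * mad  # makes MAD comparable to a standard deviation for normal data
    return [(v - med) / scale for v in values]


def call_hits(readings, threshold=3.0):
    """Return well IDs whose robust z-score magnitude exceeds the threshold."""
    wells = list(readings)
    scores = robust_z_scores([readings[w] for w in wells])
    return [w for w, z in zip(wells, scores) if abs(z) > threshold]
```

Using the median and MAD instead of the mean and standard deviation is the key design choice: on a plate with a handful of very strong hits, the mean and standard deviation would be inflated by those hits and could mask weaker ones.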

AI algorithms turn raw data into actionable predictions, helping researchers:

- Prioritize experiments with the highest chance of success

- Optimize experimental conditions for better outcomes

- Make real-time adjustments during ongoing workflows
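Prioritizing experiments often comes down to ranking candidates by a model's prediction while leaving room for exploration. A minimal sketch, assuming some trained model (for example, an ensemble or a Gaussian process) already supplies a predicted activity and an uncertainty for each candidate, ranks them with an upper confidence bound (mean + kappa × std), one common acquisition rule in active learning. The `Prediction` class and `select_next_batch` function are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    candidate: str
    mean: float  # model's predicted activity (higher is better)
    std: float   # model's uncertainty for this candidate


def select_next_batch(predictions, batch_size, kappa=1.0):
    """Rank candidates by an upper confidence bound and return the top batch.

    kappa = 0 is purely greedy (exploit); larger kappa favors uncertain candidates (explore).
    """
    ranked = sorted(predictions, key=lambda p: p.mean + kappa * p.std, reverse=True)
    return [p.candidate for p in ranked[:batch_size]]
```

After each round of experiments, the new measurements would be added to the training data, the model refit, and the next batch selected, which is one way the "real-time adjustments" described above can be automated.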

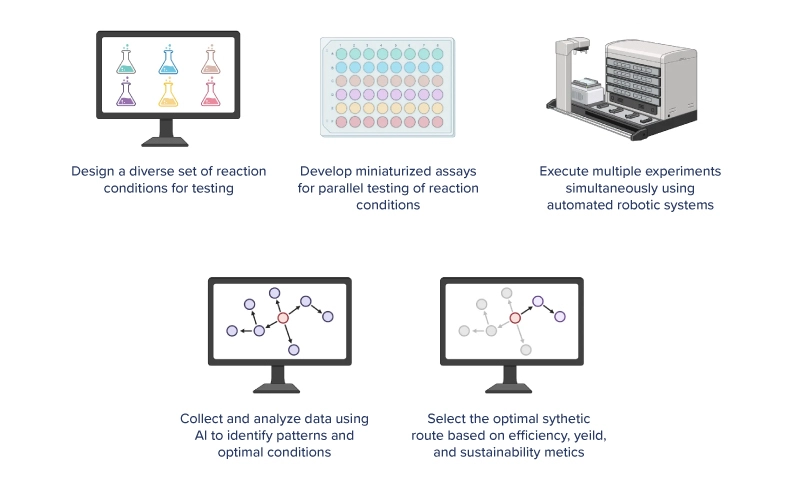

Automation in High-Throughput Workflows

Automation transforms high-throughput research by minimizing manual intervention while maximizing efficiency, accuracy, and reproducibility:

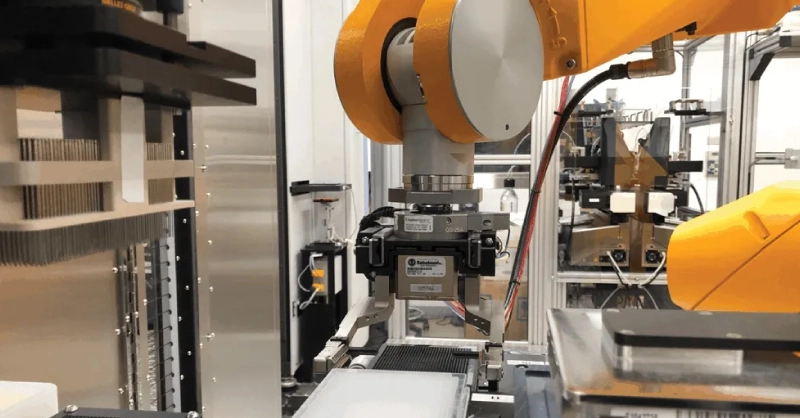

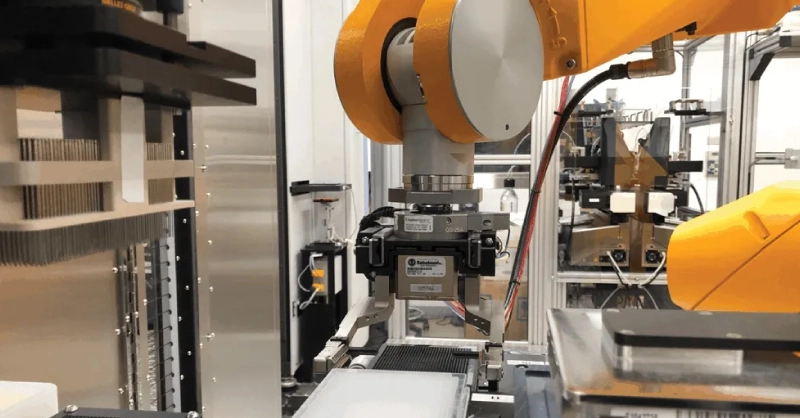

- Laboratory automation – Robotic systems handle physical tasks like sample preparation, liquid handling, and plate management, enabling thousands of daily experiments with minimal human intervention

- Data pipeline automation – Specialized software manages the complete data lifecycle, from collection and processing to analysis and interpretation

- Key benefits – Faster processing, improved consistency, reduced errors, and seamless scaling without proportional increases in cost or labor
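Data pipeline automation can be as simple as running named processing stages in a fixed order and logging what each one did, so every result can be traced and reproduced. The sketch below is a minimal, framework-free illustration; the three example stages (`drop_missing`, `normalize`, `keep_hits`) and the 0.5 cutoff are hypothetical placeholders for real instrument-specific steps.

```python
from typing import Callable, Iterable

Stage = Callable[[list], list]


def run_pipeline(data: list, stages: Iterable[tuple[str, Stage]]) -> tuple[list, list[str]]:
    """Run named stages in order, recording a provenance log for reproducibility."""
    log = []
    for name, stage in stages:
        before = len(data)
        data = stage(data)
        log.append(f"{name}: {before} -> {len(data)} records")
    return data, log


def drop_missing(records):
    """Remove failed reads, represented here as None."""
    return [r for r in records if r is not None]


def normalize(records):
    """Scale readings so the largest becomes 1.0."""
    top = max(records)
    return [r / top for r in records]


def keep_hits(records, cutoff=0.5):
    """Keep normalized readings at or above the cutoff."""
    return [r for r in records if r >= cutoff]
```

Usage: `run_pipeline(raw, [("drop_missing", drop_missing), ("normalize", normalize), ("keep_hits", keep_hits)])` returns the filtered data plus a log line per stage. Production pipelines typically add workflow managers, retries, and persistent storage, but the core idea of ordered, logged stages is the same.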

Real-world applications demonstrate automation's impact: drug discovery platforms test thousands of compound interactions daily, while genomics pipelines process sequence data from alignment through functional prediction without manual steps.

Frequently Asked Questions

How do GPUs specifically accelerate high-throughput research workflows?

GPUs accelerate high-throughput research through massive parallelism. Where a CPU has a relatively small number of powerful cores, a GPU has thousands of simpler cores that can work on many data elements at once, which suits simultaneous data processing, complex simulations, machine learning, and image analysis. For well-suited workloads, this can cut processing times from days to hours or even minutes, enabling faster iteration and broader experimental exploration.

What are the main challenges researchers face when implementing high-throughput methods?

The primary challenges in high-throughput implementation include infrastructure requirements, data management complexities, integration issues between systems, quality control across thousands of samples, and expertise gaps. Successful implementation requires thoughtful planning and cross-disciplinary collaboration to overcome these significant technical and organizational hurdles.

How does artificial intelligence enhance high-throughput screening processes?

AI improves high-throughput screening by enabling predictive modeling to identify promising candidates, automated image analysis, experimental design optimization, advanced pattern recognition in complex datasets, and adaptive experimentation. Together, these capabilities can reduce resource requirements while improving insight quality and experimental outcomes.

How can research teams balance cost considerations with the need for high-throughput capabilities?

Research teams can balance costs and capabilities through strategic approaches: staged implementation targeting critical bottlenecks, utilizing cloud computing for flexible resources, leveraging open-source tools, sharing equipment costs through collaborations, conducting ROI analysis to justify investments, and prioritizing automation for high-impact processes. An incremental approach allows teams to demonstrate value before expanding further.
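A first-pass ROI analysis can be a simple payback and return calculation comparing an upfront investment against the monthly savings it produces. The sketch below assumes savings and running costs are roughly constant per month and ignores financing, depreciation, and discounting; all figures in the usage note are hypothetical.

```python
import math


def payback_months(upfront_cost, monthly_savings, monthly_running_cost=0.0):
    """Months until cumulative net savings cover the upfront cost (inf if never)."""
    net = monthly_savings - monthly_running_cost
    if net <= 0:
        return math.inf
    return upfront_cost / net


def simple_roi(upfront_cost, monthly_savings, monthly_running_cost, months):
    """Return on investment over a horizon, as a fraction of the upfront cost."""
    gain = (monthly_savings - monthly_running_cost) * months
    return (gain - upfront_cost) / upfront_cost
```

For example, a hypothetical $120,000 system that saves $12,000 per month and costs $2,000 per month to run pays for itself in 12 months and returns 2.0 (200%) over three years. Running the same numbers for a cloud option, with no upfront cost but higher monthly fees, gives a like-for-like comparison for a staged rollout.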

Conclusion and Key Takeaways

Optimizing high-throughput screening and research workflows isn’t just about speed; it’s about generating reliable, verifiable results efficiently. With AI, automation, and HPC, researchers can:

- Process massive datasets quickly and accurately

- Explore broader experimental spaces

- Detect subtle patterns and correlations

- Make real-time, data-driven decisions

- Dedicate more time to innovation over routine tasks

Continual optimization is crucial for addressing complex challenges in drug development, climate modeling, and genomics. Modern research success depends on effectively harnessing high-throughput tools that enable your team to make transformative breakthroughs. Whatever your research looks like today, we are confident that HPC and GPU acceleration are, or soon will be, key tools for improving and speeding up your work. Contact Exxact today to configure the right computing solution for your most groundbreaking discoveries.

We're Here to Deliver the Tools to Power Your Research

With access to the highest-performing hardware, Exxact offers customizable platforms for AMBER, GROMACS, NAMD, and more. Every Exxact system is optimized for your deployment, budget, and desired performance so you can make an impact with your research!

Configure your Ideal GPU System for Life Science Research

How GPUs Can Accelerate High Throughput Screening Research
