Understanding PCIe Architecture to Maximize GPU Server Performance

GPUs have become the de facto solution for accelerating AI and HPC applications compared to traditional CPU-only servers. A wide range of applications can run on these systems, and the performance gains for applications that take advantage of GPUs are widely documented. New technologies and applications continue to be developed to harness increasingly powerful GPUs.

GPU-focused servers contain one or two CPUs and up to 10 PCIe GPUs, but how the system is architected affects the application speed and flexibility of the server. There are three ways to design a PCIe GPU server, each optimized for different workloads. The data flow between the CPUs and GPUs is a crucial factor when choosing a GPU server.

PCIe GPU Access Choices

Exxact GPU servers are designed for applications that require substantial compute, such as Molecular Dynamics, AI and Deep Learning, and various HPC workloads. While 1:1 CPU-to-GPU ratios are common in desktops, workstations, and servers, demanding compute workloads often require GPU servers designed for multi-GPU acceleration.

GPU servers are available in two general architectures:

- PCIe Based Servers: GPU servers with up to 8, or sometimes 10, PCIe slots available for GPUs.

- SXM/OAM Based Servers: GPU servers where GPUs are mounted and socketed on a carrier board and have only 1 PCIe connection to the CPU(s).

GPU servers also have options for dual CPU socket configurations. The two CPUs communicate over a high-speed interconnect: UPI on Intel servers and xGMI on AMD servers.

Distinct System Architectures - How PCIe is Connected

Delving further into PCIe Servers, there are 3 distinct system architectures designed for various workloads:

- Single Root

- Dual Root

- Direct Attached

Single Root Architecture - 1 CPU, 2 PCIe Switches

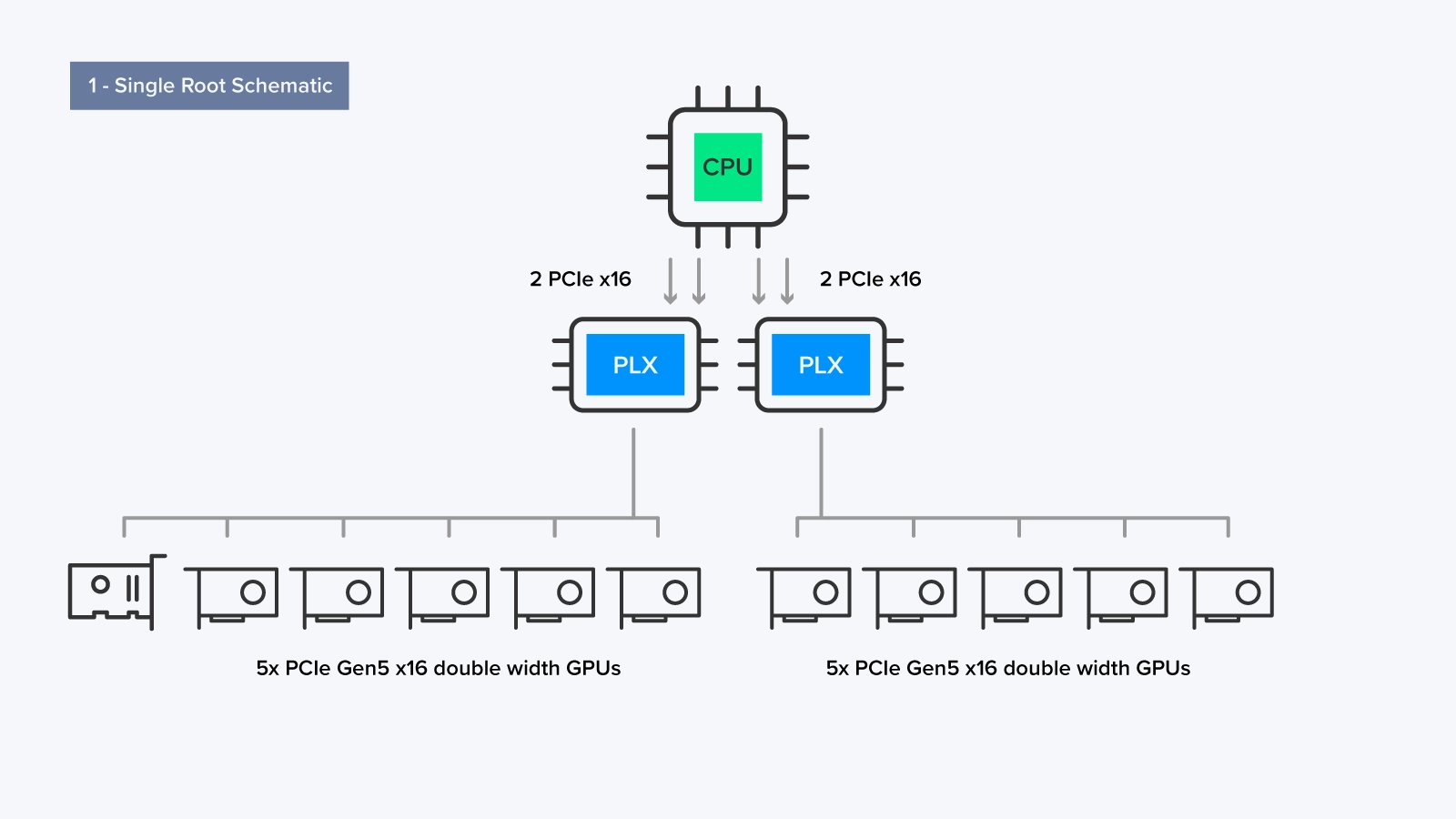

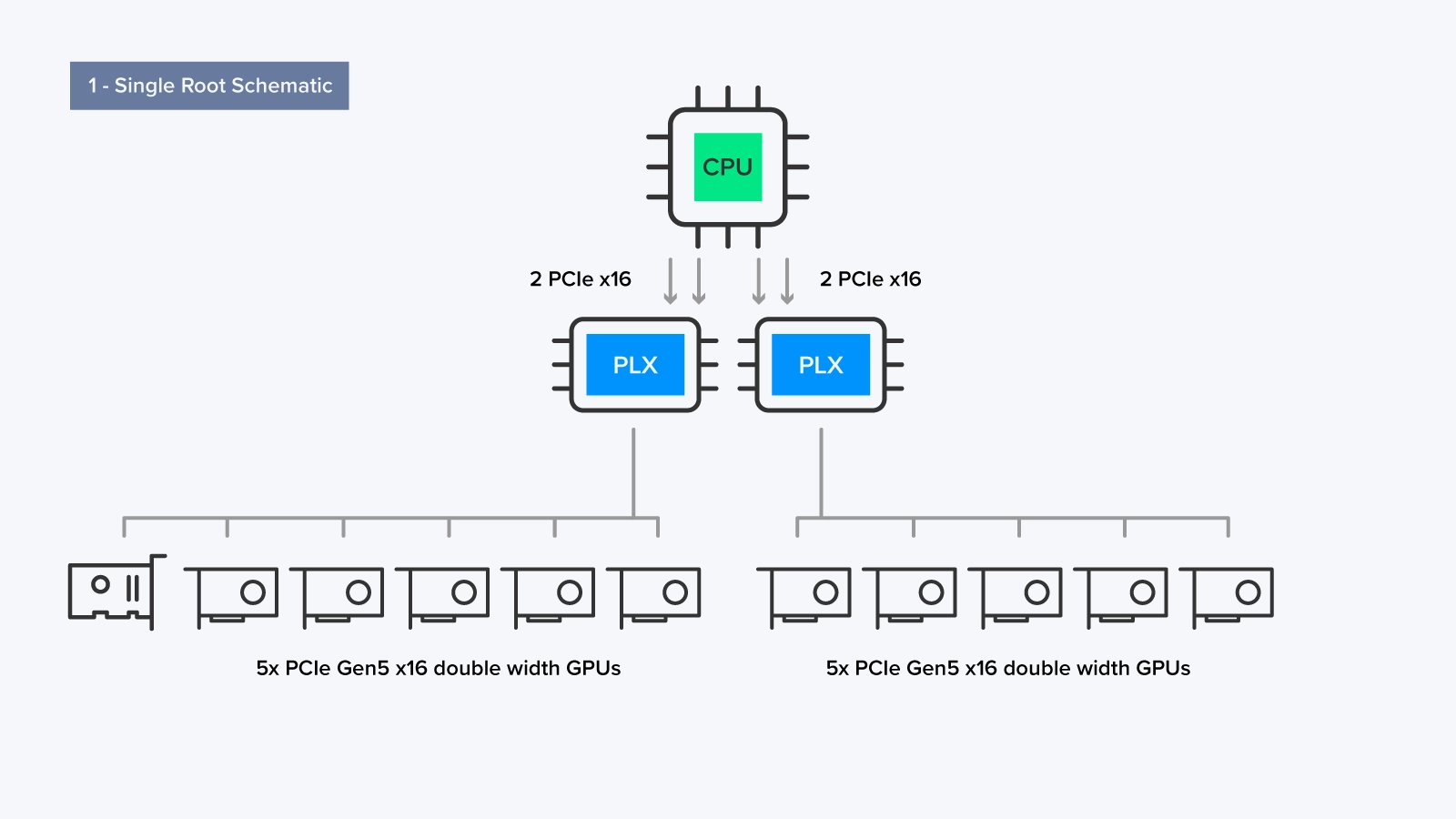

The single root architecture is ideal for applications that reside on a single GPU but require access to multiple GPUs. In a single root system, one CPU (even when two are installed) manages all communication with the GPUs.

As shown, the CPU that communicates with the GPUs does so through PCIe switches, commonly called PLX switches. Each PLX switch connects to the CPU via 2 PCIe x16 links and fans out to 4 or 5 GPUs, for a maximum of 10 GPUs in a single server.
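To get a feel for what sharing a switch uplink means, the figures above can be turned into an oversubscription ratio. The following is a minimal sketch, not a vendor formula: it assumes every GPU sits on a x16 link and reads the description above as two x16 uplinks (32 lanes) per switch. The function name is illustrative.

```python
LANES_PER_X16_LINK = 16


def oversubscription(gpus_per_switch: int, uplink_links: int = 2) -> float:
    """Ratio of downstream GPU lanes to upstream CPU lanes for one switch.

    Assumes every GPU and every uplink is a full x16 link.
    """
    if gpus_per_switch < 0 or uplink_links <= 0:
        raise ValueError("need a non-negative GPU count and at least one uplink")
    downstream_lanes = gpus_per_switch * LANES_PER_X16_LINK
    upstream_lanes = uplink_links * LANES_PER_X16_LINK
    return downstream_lanes / upstream_lanes


for gpus in (4, 5):
    print(f"{gpus} GPUs per switch -> {oversubscription(gpus):.2f}:1")
```

Under these assumptions, 4 GPUs per switch give a 2:1 ratio and 5 GPUs give 2.5:1. The ratio only matters when several GPUs behind the same switch move data to or from the CPU at the same time.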

With all GPUs attached to a single CPU, a Single Root Architecture is simpler, has lower latency, and is suitable for most applications. Single root systems are tailored for applications where most computation is GPU-centric and peer-to-peer communication is not critical. Application examples include AMBER Molecular Dynamics and other simulation workloads.

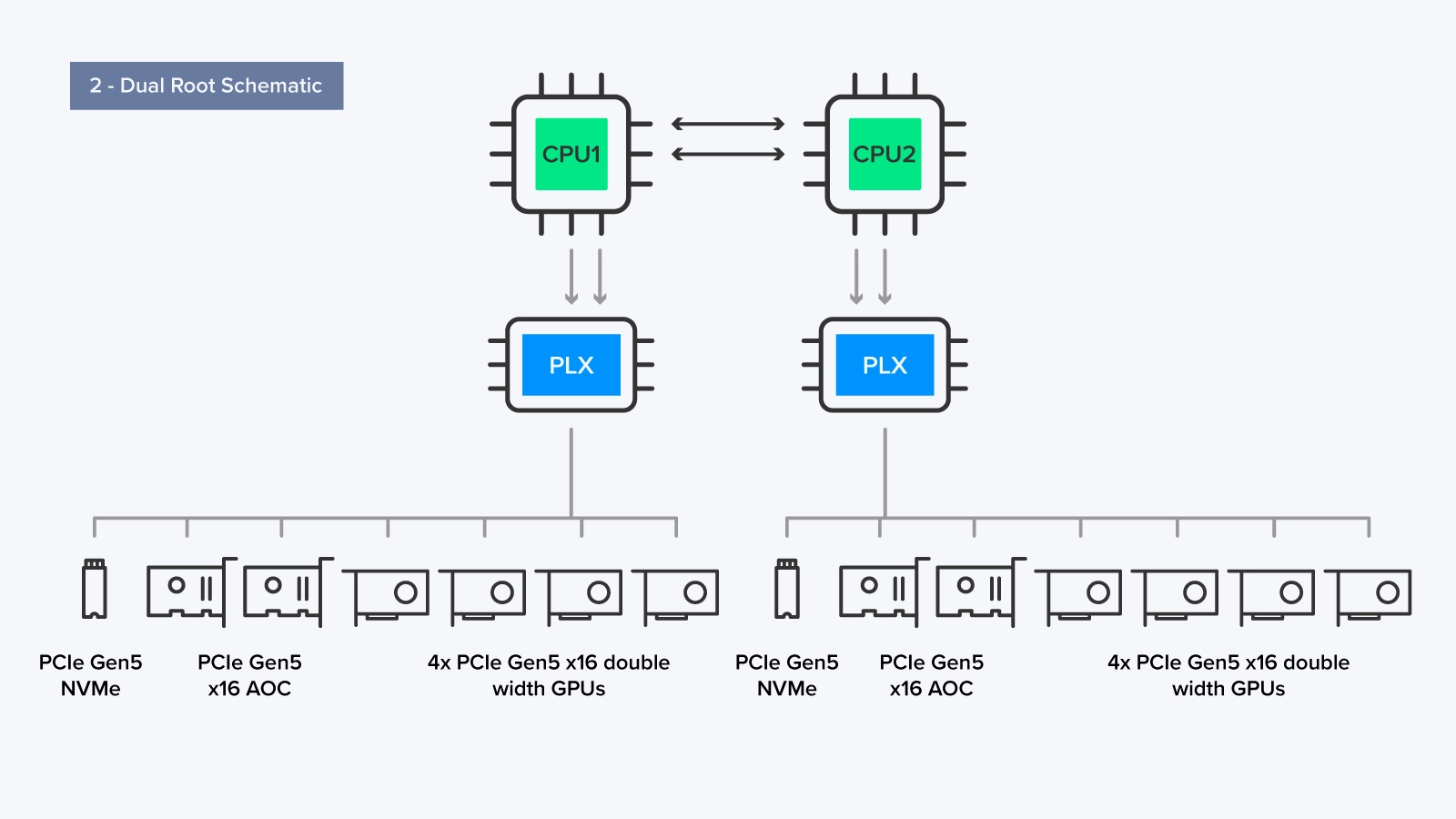

Dual Root Architecture - 2 CPUs, 2 PCIe Switches

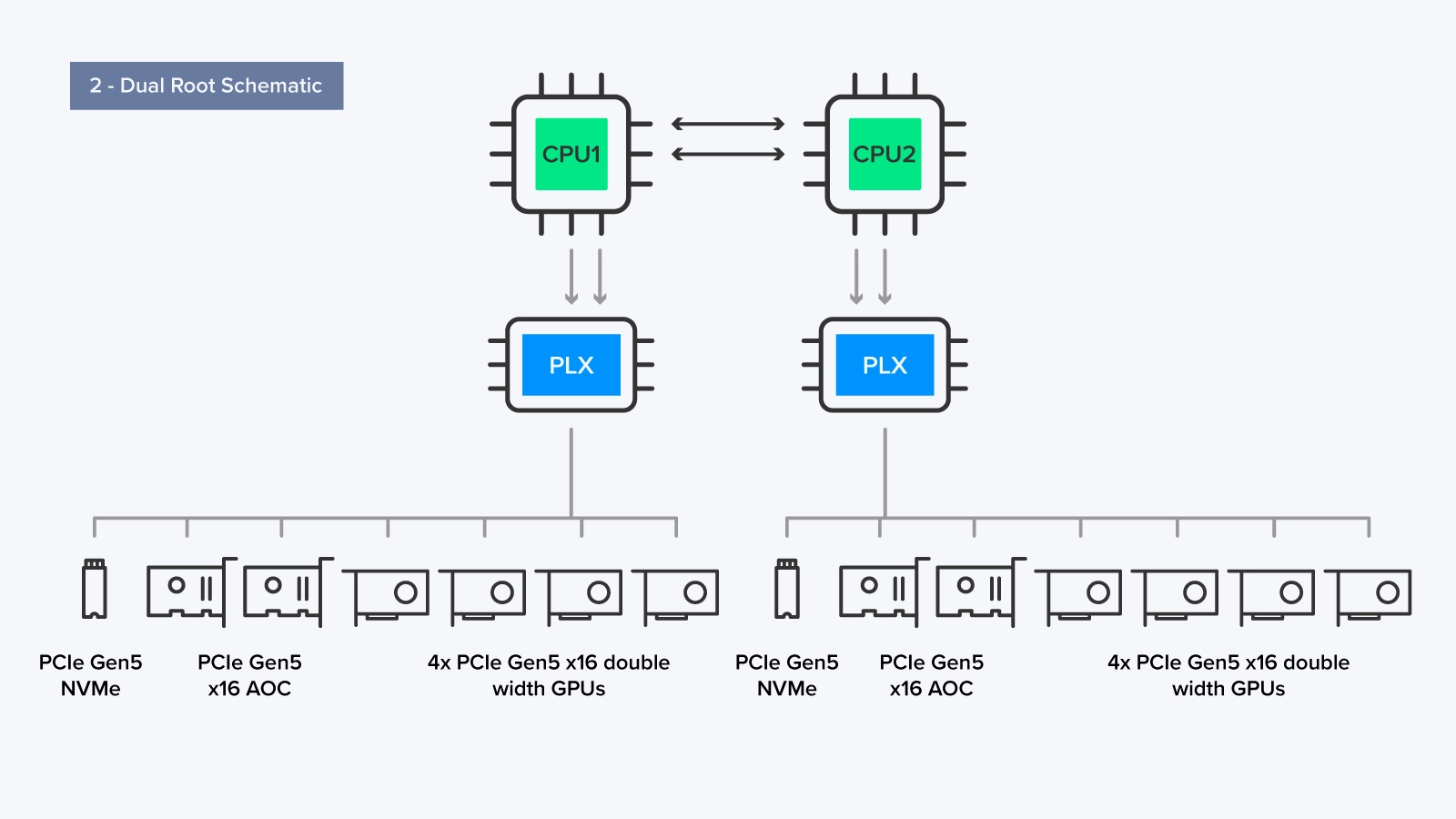

A dual root setup connects each CPU to several GPUs and add-on cards (AOCs) through its own PLX switch. The GPUs do not have to be split equally between the switches, because the workloads assigned to a system might not be easily distributable across two CPUs.

The CPUs communicate with each other via UPI/xGMI, so the mix of PCIe devices attached to each can be flexible (for example, one CPU for storage and networking and one CPU for compute). However, most server configurations allocate hardware equally.

In the configuration shown, each CPU is connected to 4 GPUs, 2 AOCs, and 4 NVMe storage drives through its dedicated PLX switch, a typical configuration for Omniverse workloads. AOCs can be additional storage, high-speed networking cards, and more.

A dual root server setup is advantageous for applications that require a balance between CPU and GPU processing power, especially when data sharing and communication between workloads are essential. Workloads can be assigned to each CPU, so both processors use their own computing capabilities and communicate with each other only when necessary. Application examples include deep learning training, high-performance computing (HPC), and workloads where efficient data sharing and communication between CPUs and GPUs are critical.
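The dual root layout described above can be modeled as a small data structure to show which GPU pairs stay behind one switch and which must cross the CPU interconnect. This is a simplified sketch under stated assumptions: the `GpuSlot` class, the `plx0`/`plx1` labels, and the path descriptions are hypothetical names chosen for illustration, and the model ignores AOCs and NVMe drives.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GpuSlot:
    """One GPU position in a hypothetical server topology."""
    name: str
    cpu: int               # index of the CPU socket that owns this PCIe root
    switch: Optional[str]  # label of the PLX switch, or None if there is none


def dual_root(gpus_per_cpu: int = 4) -> List[GpuSlot]:
    """Build a dual root layout: each CPU reaches its GPUs via its own switch."""
    return [
        GpuSlot(name=f"gpu{i}", cpu=i // gpus_per_cpu, switch=f"plx{i // gpus_per_cpu}")
        for i in range(2 * gpus_per_cpu)
    ]


def classify_path(a: GpuSlot, b: GpuSlot) -> str:
    """Describe the hops between two GPUs in this simplified model."""
    if a.cpu != b.cpu:
        return "cross-socket via UPI/xGMI"
    if a.switch is not None and a.switch == b.switch:
        return "same PLX switch"
    return "same CPU, different PCIe root ports"


gpus = dual_root()
print(classify_path(gpus[0], gpus[1]))  # both behind plx0
print(classify_path(gpus[0], gpus[4]))  # one GPU per socket
```

In this model, a job that keeps its GPUs on one socket never touches UPI/xGMI, which is the reason for assigning workloads per CPU.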

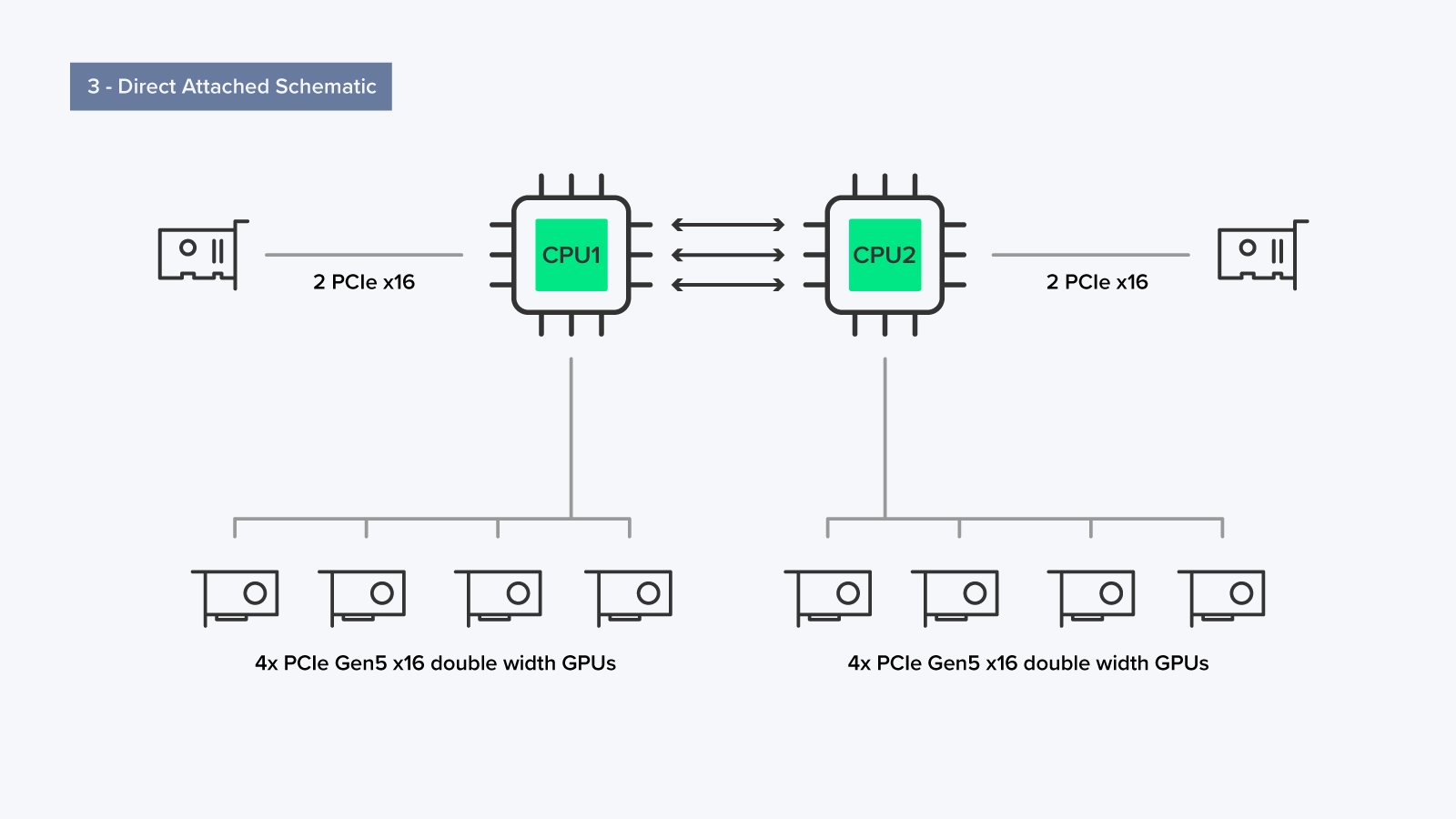

Direct Attached Option - 2 CPUs, No PCIe Switch

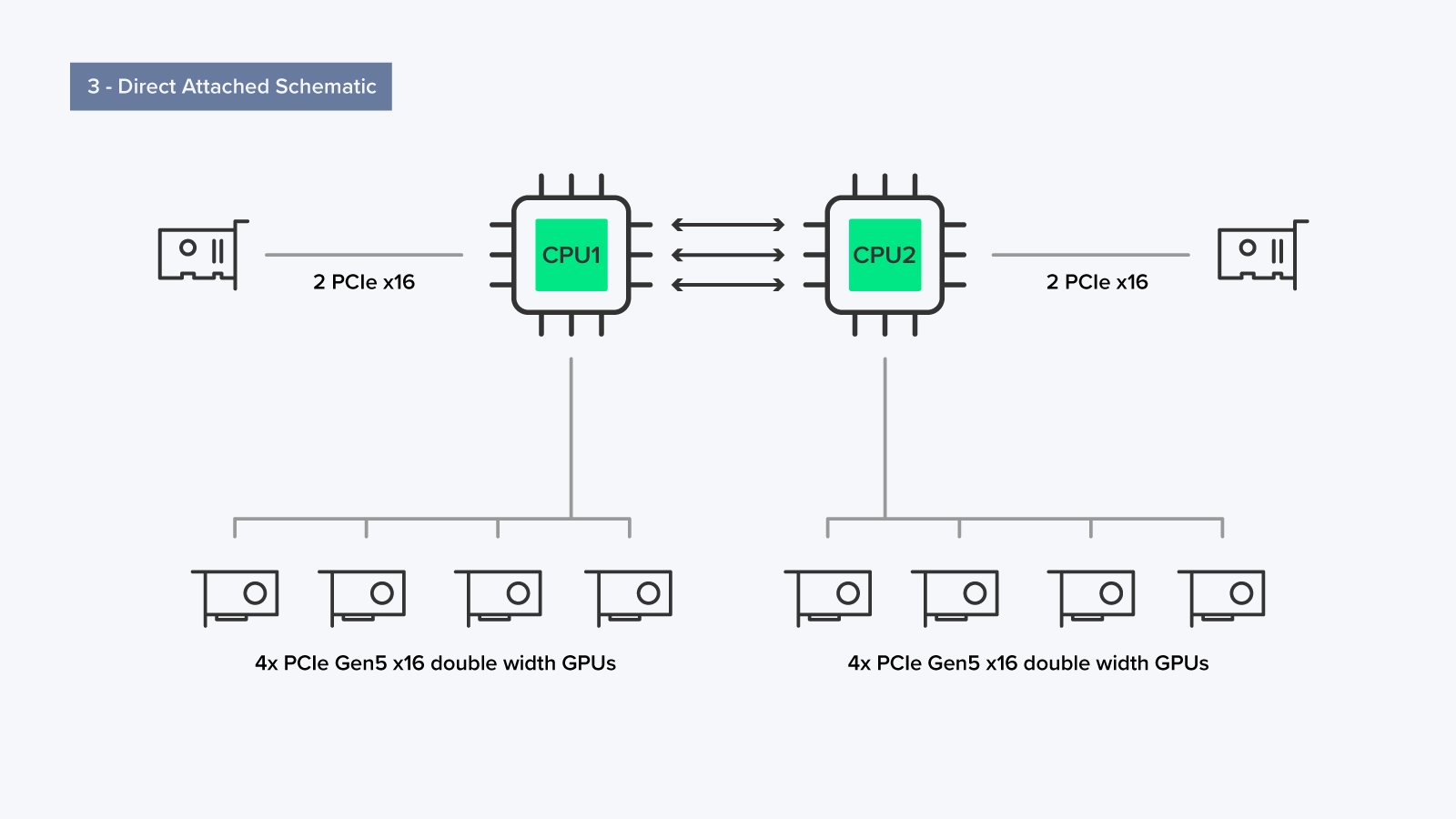

In a direct attached setup, each CPU has direct PCIe access to up to four GPUs, for a total of 8 GPUs in a server, without the use of a PLX switch.

The advantage of this configuration lies in the absence of a PLX switch. In Single Root and Dual Root systems, the PLX switch acts as a traffic controller for PCIe slots that are not traced directly to the CPU on the motherboard, consolidating and redistributing the data traffic from the PCIe devices. This reduces the number of CPU PCIe lanes used while letting the attached devices share the bandwidth of the uplink. The trade-off is that a PLX switch, as a middleman, adds latency to any data passing through it. A Direct Attached setup avoids that latency because each GPU connects directly to the CPU.

In an 8 GPU setup like this one, a total of 128 PCIe 5.0 lanes (8 GPUs at x16 each) are used exclusively for the GPUs. A direct attached setup is common for HPC (High-Performance Computing) workloads where multiple applications run concurrently or a single application is divided into multiple smaller jobs. Each CPU has equal access to GPUs, and each application running on a CPU has dedicated access to its own set of GPU resources, with reduced latency and complexity.
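The 128-lane figure can be checked with a few lines of arithmetic, along with the approximate bandwidth each GPU gets. This sketch assumes every GPU uses a full x16 link and uses the PCIe 5.0 signaling rate of 32 GT/s per lane with 128b/130b encoding. The result is a theoretical per-direction figure before protocol overhead, not a measured throughput.

```python
PCIE5_GT_PER_S = 32.0            # raw transfer rate per lane for PCIe 5.0
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def total_gpu_lanes(num_gpus: int, lanes_per_gpu: int = 16) -> int:
    """CPU PCIe lanes consumed when every GPU is attached directly."""
    return num_gpus * lanes_per_gpu


def link_bandwidth_gb_s(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    return lanes * PCIE5_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte


print(total_gpu_lanes(8))                  # lanes for 8 GPUs at x16
print(round(link_bandwidth_gb_s(16), 1))   # GB/s per x16 link, one direction
```

With these assumptions, 8 GPUs consume 128 lanes and each x16 link offers roughly 63 GB/s per direction.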

How to Choose a Server

Depending on the application workload, our engineering team at Exxact can optimize different GPU server configurations. Whether it's direct attached, single root, or dual root, applications perform best with the proper combination of CPUs, GPUs, and platform architecture.

- Single Root

  - General-Purpose Workloads: Single-root GPU servers are suitable for a wide range of general-purpose GPU-accelerated workloads, such as scientific simulations, data analysis, and machine learning training when you don't require high GPU-to-GPU communication.

  - Web Servers and Virtualization: These servers can also be used for web hosting and virtualization tasks where each virtual machine (VM) or container doesn't need direct GPU communication.

- Dual Root

  - High-Performance Computing (HPC): Dual-root GPU servers are often preferred for HPC workloads that demand high GPU-to-GPU communication, like simulations involving complex models or large-scale scientific computing.

  - Deep Learning and AI Training: When training deep learning models on multiple GPUs, dual-root servers can offer better performance by enabling faster data exchange between GPUs.

- Direct Attached

  - Graphics Rendering and Video Editing: Direct-attached GPU servers are well-suited for graphics-intensive applications like 3D rendering, video editing, and computer-aided design (CAD). These tasks benefit from direct GPU access without the need for inter-GPU communication.

  - Remote Desktop Virtualization: Direct-attached GPUs can be used in remote desktop virtualization scenarios where each user or session requires dedicated GPU resources for tasks like gaming or graphic design.
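The guidance in the list above can be condensed into a rough first-pass filter. This is a deliberately coarse sketch: the function name and its two yes/no questions are assumptions made for illustration. A real selection also depends on CPU, storage, and networking needs.

```python
def suggest_architecture(needs_gpu_to_gpu: bool, needs_dedicated_gpus: bool) -> str:
    """Map two workload traits to one of the three PCIe architectures.

    needs_gpu_to_gpu: the workload exchanges a lot of data between GPUs.
    needs_dedicated_gpus: each job, user, or session owns its GPUs outright.
    """
    if needs_gpu_to_gpu:
        return "Dual Root"
    if needs_dedicated_gpus:
        return "Direct Attached"
    return "Single Root"


# Multi-GPU deep learning training: heavy GPU-to-GPU traffic.
print(suggest_architecture(needs_gpu_to_gpu=True, needs_dedicated_gpus=False))
# Remote desktop sessions: one dedicated GPU per user.
print(suggest_architecture(needs_gpu_to_gpu=False, needs_dedicated_gpus=True))
# General-purpose simulation with little inter-GPU traffic.
print(suggest_architecture(needs_gpu_to_gpu=False, needs_dedicated_gpus=False))
```

The order of the checks is a design choice: inter-GPU communication is tested first because the list treats it as the deciding factor for dual root.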

Explore Exxact 4U Servers, available on a wide range of Intel and AMD platforms. Talk to our engineers to get recommendations on how you should configure your solution!

Single Root, Dual Root, Direct Attached - Accessing PCIe in HPC Servers
