NVIDIA MGX ConnectX-8 SuperNIC Switch - 8x GPU PCIe Switch with Networking

Introduction

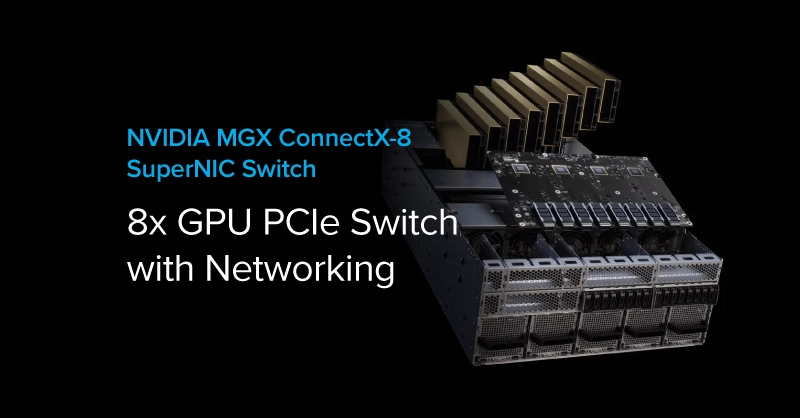

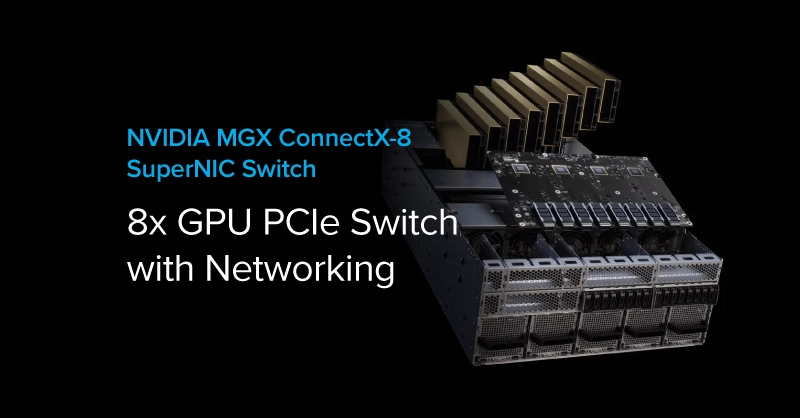

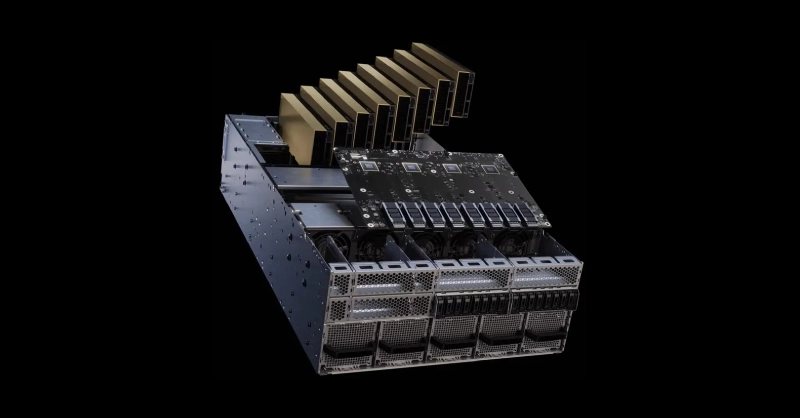

At Computex 2025, NVIDIA® showed off a notable piece of technology for traditional PCIe GPU server architectures. NVIDIA CEO Jensen Huang presented the NVIDIA RTX PRO™ Server Rack featuring what NVIDIA calls the NVIDIA ConnectX™-8 SuperNIC™ Switch. This is essentially a GPU backplane with dedicated PCIe lanes bundled with NVIDIA ConnectX-8 networking. Because the dedicated lanes, PCIe switching, and networking bypass the CPU entirely, they can dramatically improve GPU-to-GPU communication between nodes.

The NVIDIA ConnectX-8 SuperNIC Switch will be part of NVIDIA's MGX line of servers. Below, we outline which workloads can take advantage of the NVIDIA MGX ConnectX-8 SuperNIC Switch for PCIe GPUs; we are excited to see this technology expand the capabilities of PCIe GPU platforms.

What is the NVIDIA MGX ConnectX-8 SuperNIC Switch?

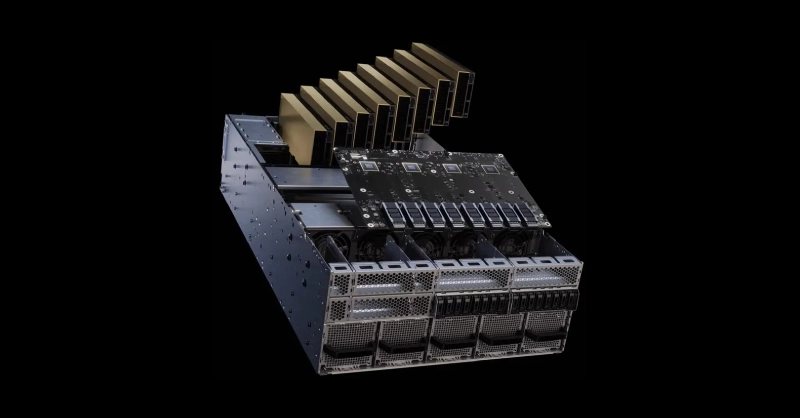

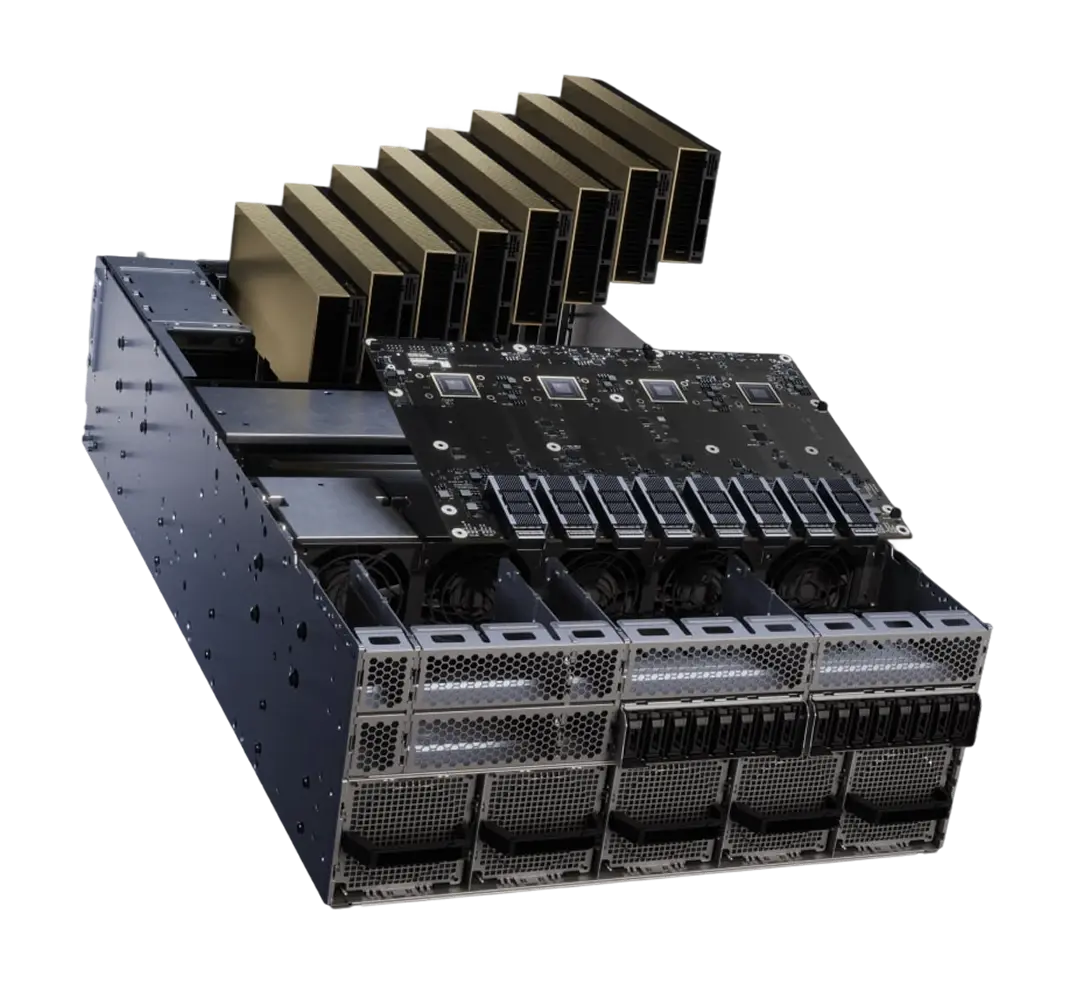

The NVIDIA MGX ConnectX-8 SuperNIC Switch is an 8-GPU backplane with four dedicated ConnectX-8 SuperNICs, each with an integrated 48-lane PCIe 6.0 switch. Each NVIDIA ConnectX-8 SuperNIC is capable of 800 Gb/s of bandwidth, for a total of 3200 Gb/s across the entire board.
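To make these bandwidth figures concrete, the short Python sketch below reproduces the arithmetic: four NICs at 800 Gb/s each give 3200 Gb/s, or 400 GB/s. The per-GPU figure assumes the two GPUs behind each NIC split its 800 Gb/s evenly; that even split is our simplifying assumption, not an NVIDIA specification, and the constant and function names are hypothetical.

```python
# Back-of-the-envelope bandwidth math for the ConnectX-8 SuperNIC Switch board.
# From the article: 4 ConnectX-8 NICs, 800 Gb/s each, 2 GPUs per NIC.
# Assumption: the 2 GPUs behind a NIC share its 800 Gb/s evenly.

NUM_NICS = 4
GBPS_PER_NIC = 800   # gigabits per second, per ConnectX-8
GPUS_PER_NIC = 2


def aggregate_gbps(num_nics: int, gbps_per_nic: float) -> float:
    """Total network bandwidth of the board in Gb/s."""
    return num_nics * gbps_per_nic


def gbps_to_gigabytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def per_gpu_gbps(gbps_per_nic: float, gpus_per_nic: int) -> float:
    """Network bandwidth per GPU, assuming an even split across GPUs sharing a NIC."""
    return gbps_per_nic / gpus_per_nic


if __name__ == "__main__":
    total = aggregate_gbps(NUM_NICS, GBPS_PER_NIC)
    print(f"Board total: {total:.0f} Gb/s ({gbps_to_gigabytes(total):.0f} GB/s)")
    print(f"Per GPU: {per_gpu_gbps(GBPS_PER_NIC, GPUS_PER_NIC):.0f} Gb/s")
```

Run as a script, this prints a board total of 3200 Gb/s (400 GB/s) and 400 Gb/s per GPU under the even-split assumption.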

Each NVIDIA ConnectX-8 NIC serves two GPUs, each at the full x16 slot width, and each NIC has its own networking transceiver. Even when the CPU platform does not support PCIe 6.0, PCIe 6.0-capable GPUs can still take advantage of the full 800 Gb/s network speed.
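Why does PCIe 6.0 matter for an 800 Gb/s NIC? A rough calculation helps. The sketch below estimates one-direction x16 throughput for PCIe 4.0, 5.0, and 6.0 from their per-lane transfer rates (16, 32, and 64 GT/s) and line-encoding efficiency. The 242/256 efficiency used for PCIe 6.0 FLIT mode is an approximation we assume here, and other protocol overheads are ignored, so real-world throughput will be somewhat lower.

```python
from fractions import Fraction

# Approximate one-direction x16 PCIe throughput per generation vs. one 800 Gb/s NIC port.
# Per-lane transfer rates (GT/s) and encoding efficiency:
#   PCIe 4.0 / 5.0: 128b/130b line encoding
#   PCIe 6.0: FLIT mode; 242/256 is an assumed approximation of payload efficiency
# Other protocol overheads (TLP headers, flow control) are ignored.

PCIE_GENERATIONS = {
    "PCIe 4.0": (16, Fraction(128, 130)),
    "PCIe 5.0": (32, Fraction(128, 130)),
    "PCIe 6.0": (64, Fraction(242, 256)),
}

NIC_GBPS = 800


def x16_gbps(gt_per_s: int, efficiency: Fraction, lanes: int = 16) -> float:
    """Approximate usable one-direction bandwidth in Gb/s for a link of `lanes` lanes."""
    return float(gt_per_s * lanes * efficiency)


if __name__ == "__main__":
    for gen, (rate, eff) in PCIE_GENERATIONS.items():
        bw = x16_gbps(rate, eff)
        verdict = "can" if bw >= NIC_GBPS else "cannot"
        print(f"{gen} x16 ~ {bw:.0f} Gb/s -> {verdict} feed an {NIC_GBPS} Gb/s port")
```

Under these assumptions, a PCIe 5.0 x16 link tops out around 504 Gb/s, below a single 800 Gb/s port, while PCIe 6.0 x16 reaches roughly 968 Gb/s and clears it with headroom.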

All GPUs and NVIDIA ConnectX-8 NICs are interconnected, creating a cohesive eight-GPU switching and networking fabric. The transceivers then connect to an external network switch, to which other NVIDIA MGX ConnectX-8 SuperNIC Switch platforms also connect, bridging multiple systems so they work in unison.
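The GPU-to-NIC pairing described above can be modeled as a simple data structure. This is a hypothetical sketch, not NVIDIA's topology description: it assumes GPUs are assigned to NICs in consecutive pairs, and it treats the 48-lane PCIe 6.0 switch figure as a per-NIC lane budget with an assumed x16 uplink to the host CPU (eight x16 GPUs alone would need 128 lanes). Under those assumptions, two x16 GPUs plus an x16 uplink fill exactly 48 lanes.

```python
from dataclasses import dataclass, field

# Hypothetical model of the board: 4 ConnectX-8 NICs, each serving 2 GPUs at x16.
# Assumptions (not from the source): consecutive GPU-to-NIC pairing,
# an x16 host uplink per NIC, and a 48-lane switch budget per NIC.
GPU_LANES = 16
HOST_UPLINK_LANES = 16  # assumed
SWITCH_LANES = 48       # assumed per-NIC budget


@dataclass
class NicSwitch:
    nic_id: int
    gpu_ids: list[int] = field(default_factory=list)

    def lanes_used(self) -> int:
        """PCIe lanes consumed by the attached GPUs plus the assumed host uplink."""
        return len(self.gpu_ids) * GPU_LANES + HOST_UPLINK_LANES


def build_board(num_nics: int = 4, gpus_per_nic: int = 2) -> list[NicSwitch]:
    """Assign GPUs in consecutive pairs: NIC 0 -> GPUs 0-1, NIC 1 -> GPUs 2-3, and so on."""
    return [
        NicSwitch(n, list(range(n * gpus_per_nic, (n + 1) * gpus_per_nic)))
        for n in range(num_nics)
    ]


def nic_for_gpu(board: list[NicSwitch], gpu_id: int) -> int:
    """Return the NIC a given GPU sits behind."""
    for sw in board:
        if gpu_id in sw.gpu_ids:
            return sw.nic_id
    raise ValueError(f"GPU {gpu_id} is not on this board")


if __name__ == "__main__":
    board = build_board()
    for sw in board:
        print(f"NIC {sw.nic_id}: GPUs {sw.gpu_ids}, {sw.lanes_used()}/{SWITCH_LANES} lanes")
```

Keeping the mapping explicit like this makes it easy to see which GPUs share a NIC, and therefore which pairs contend for the same 800 Gb/s link.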

Source: NVIDIA

Use Cases for the NVIDIA ConnectX-8 SuperNIC Switch Board

Eight-GPU PCIe servers are one of the most popular form factors for multi-GPU compute. Not every data center can deploy the NVIDIA SXM GPUs found in NVIDIA DGX and NVIDIA HGX systems, due to power constraints and cost. But like the enterprise HGX and DGX, these multi-GPU PCIe servers also power engineering simulation, life science research, and large AI models.

This new NVIDIA MGX platform is ideal for businesses that want to run HPC workloads on PCIe GPUs in an x86 platform. Upgrading is as simple as swapping a GPU on the backplane. Workloads that rely heavily on GPU-to-GPU communication will benefit from lower latency and higher-throughput data flow, which includes most HPC workloads:

- AI Deep Learning & Machine Learning

- Engineering Simulation (FEA, CFD)

- Climate and Weather Modeling

- Data Analytics (e.g., finance, medical, bioinformatics)

- Rendering large complex environments

- Performing computations on shared data across the network
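Distributed AI training illustrates why interconnect bandwidth matters for these workloads. A common way to estimate the communication cost of a ring all-reduce (used to synchronize gradients across GPUs) is t = 2(N - 1)/N x S/B, where S is the data each GPU contributes, N the number of GPUs, and B the per-GPU link bandwidth. The sketch below applies that textbook formula; the 10 GB gradient size, 16-GPU count, and per-GPU bandwidths are hypothetical inputs, and latency and compute/communication overlap are ignored.

```python
# Rough ring all-reduce time estimate using the bandwidth term
#   t ~= 2 * (N - 1) / N * S / B
# S: bytes each GPU contributes, N: number of GPUs, B: per-GPU bandwidth (bytes/s).
# Latency and compute/communication overlap are ignored; inputs below are hypothetical.


def ring_allreduce_seconds(size_bytes: float, num_gpus: int, bw_bytes_per_s: float) -> float:
    """Estimated ring all-reduce time in seconds (bandwidth term only)."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * size_bytes / bw_bytes_per_s


if __name__ == "__main__":
    GB = 1e9
    grads = 10 * GB  # hypothetical: 10 GB of gradients per step
    gpus = 16        # hypothetical: two 8-GPU servers
    for label, gbps in [("100 Gb/s per GPU", 100), ("400 Gb/s per GPU", 400)]:
        t = ring_allreduce_seconds(grads, gpus, gbps * 1e9 / 8)
        print(f"{label}: ~{t:.3f} s per all-reduce")
```

With these inputs, quadrupling per-GPU bandwidth from 100 to 400 Gb/s cuts the estimated all-reduce time from about 1.5 s to about 0.375 s per step.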

Workloads that may see little benefit are those that are not GPU-memory-bound or do not rely on fast networking: workloads that are less compute-intensive, fit on a single GPU, or are CPU-bound. Examples include file compression, database querying, and local data analytics.

TL;DR & Conclusion

The NVIDIA MGX ConnectX-8 SuperNIC Switch GPU board enables fast GPU-to-GPU and GPU-to-network communication, improving data flow and reducing bottlenecks by bypassing the CPU for data ingest. Because the board is built with PCIe 6.0 lanes, GPUs and NICs can run at higher speeds regardless of the CPU's PCIe generation, for increased performance and longevity.

The ConnectX-8 SuperNIC Switch has no official release date yet. If you have questions about configuring a GPU server, workstation, or entire data center computing infrastructure, talk to the Exxact team today.

Accelerate AI & HPC with NVIDIA MGX

Empower your hardware with a purpose-built HPC platform validated by NVIDIA. NVIDIA MGX is a reference design platform built to accelerate your HPC and AI research and to extract the best performance from NVIDIA hardware.

NVIDIA MGX ConnectX-8 SuperNIC Switch - 8x GPU PCIe Switch with Networking

Introduction

At Computex 2025, NVIDIA® showed off a very interesting piece of technology for traditional PCIe GPU server architectures. Jensen showed off the NVIDIA RTX PRO™ Server Rack featuring what they call the NVIDIA ConnectX™-8 SuperNIC™ Switch. This is essentially a GPU backplane with dedicated PCIe lanes bundled with NVIDIA ConnectX-8 networking. The dedicated lanes, PCIe switch, and networking bypass the CPU entirely and dramatically improve GPU-to-GPU communication between nodes.

The NVIDIA ConnectX-8 SuperNIC Switch will be part of NVIDIA’s MGX line of servers. We will showcase which workloads can take advantage of the NVIDIA MGX ConnectX-8 SuperNIC Switch for PCIe GPUs and are excited to see this technology increase the capabilities of PCIe GPU platforms.

What is the NVIDIA MGX ConnectX-8 SuperNIC Switch?

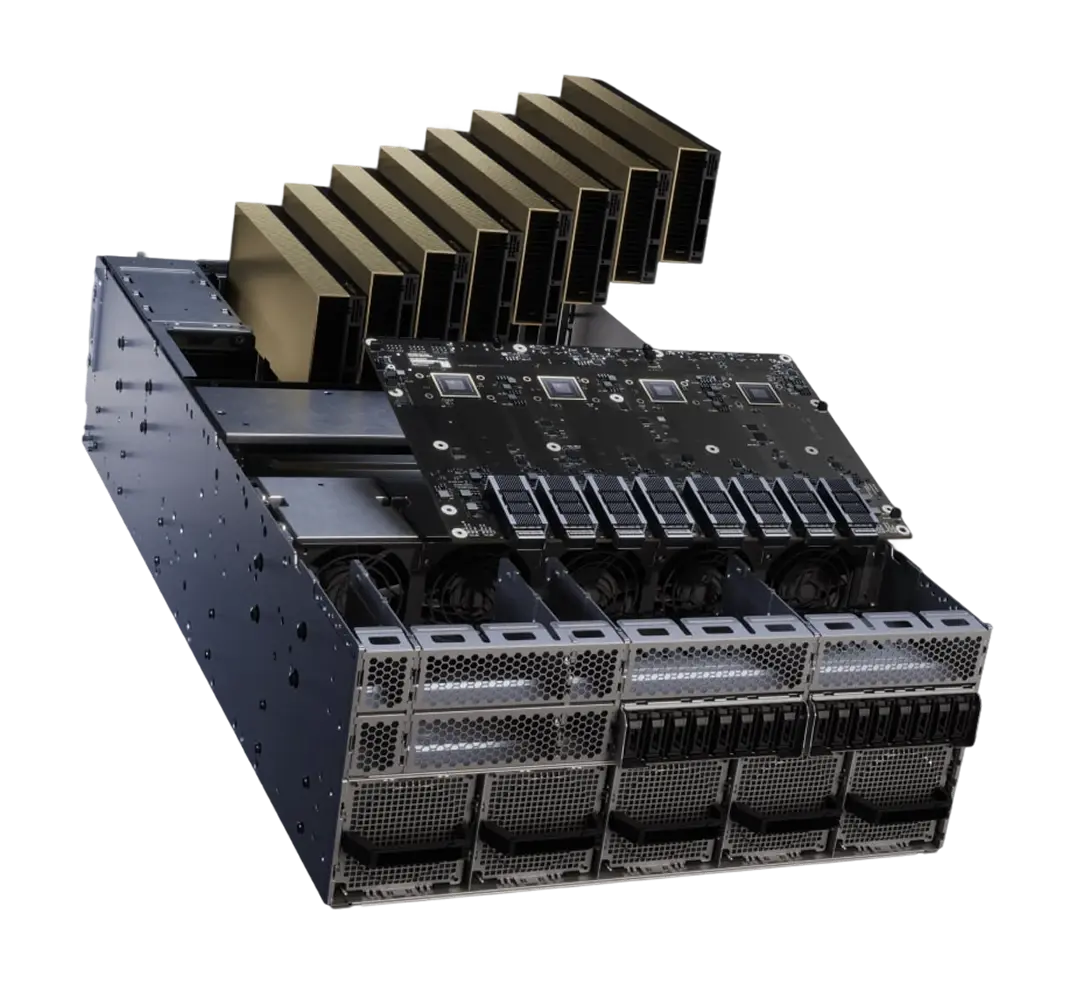

The NVIDIA MGX ConnectX-8 SuperNIC Switch is an 8-GPU backplane with four dedicated ConnectX-8 networking and a 48-lane PCIe 6.0 switch. Each NVIDIA ConnectX-8 NIC is capable of 800Gb/s bandwidth, a total of 3200 Gb/s of bandwidth across the entire SuperNIC.

Each NVIDIA ConnectX-8 NIC has two GPUs assigned at the full x16 slot width, with each having its own networking transceiver. Even when the CPU platform does not support PCIe 6.0, if the GPUs are PCIe 6.0 compatible, they can take advantage of the high 800Gb/s speed.

All GPUs and NVIDIA ConnectX-8 NICs are interconnected, creating a cohesive eight-GPU switch and networking fabric. Transceivers are then connected to another external networking switch, where other NVIDIA MGX ConnectX-8 SuperNIC Switch platforms will also connect to, bridging multiple systems together to work in unison.

Source: NVIDIA

Use Cases for the NVIDIA ConnectX-8 SuperNIC Switch Board

Eight PCIe GPU Servers are one of the most popular form factors for multi-GPU compute servers. Not every data center can deploy NVIDIA SXM GPUs found in the NVIDIA DGX or NVIDIA HGX due to power constraints and cost. But like the enterprise HGX and DGX, these multi-PCIe GPU servers also power engineering simulation, life science research, and large AI models.

This new NVIDIA MGX platform is ideal for businesses that want to power HPC workloads with PCIe GPUs on an x86 platform. Any upgrades can be slotted in as easily as swapping out a GPU on a backplane. Workloads that can take advantage of increased GPU-to-GPU intercommunication will benefit from the lower latency and higher throughput data flow, including most HPC workloads:

- AI Deep Learning & Machine Learning

- Engineering Simulation (FEA, CFD)

- Climate Weather Modeling

- Data Analytics (i.e. finance, medical, bioinformatics, etc.)

- Rendering large complex environments

- Performing computations on shared data in the network.

Workloads that may not be impacted are those that are not GPU memory bound or don’t rely on fast networking. These workloads are less technical, fit on a single GPU, or are CPU-bound. For example, file compression and database querying, and local data analytics.

TL;DR & Conclusion

The NVIDIA MGX ConnectX-8 SuperNIC Switch GPU Board enables fast GPU-to-GPU and GPU-to-networking communication for better data flow and fewer bottlenecks by bypassing the CPU for data ingest. Built with PCIe 6.0 lanes, GPUs and NICs can take advantage of higher speeds regardless of CPU PCIe architecture for increased performance and longevity.

The ConnextX-8 SuperNIC Switch has no official release date. If you have questions or configuring a GPU server, workstation, or whole data center computing infrastructure, talk to the Exxact team today.

Accelerate AI & HPC with NVIDIA MGX

Empower your hardware with a purpose-built HPC platform validated by NVIDIA. NVIDIA MGX is a reference design platform by NVIDIA built to propel and accelerate your research in HPC and AI, built to extract the best performance out of NVIDIA hardware.

Get a Quote Today