Privacy Preserving Deep Learning and the Problem with Data

Data has become a driving economic force in our society. That fact alone makes privacy-preserving deep learning important, and tools like PySyft and TF-Encrypted are becoming both more popular and more necessary in applications. The phrase "data is the new oil" is, for better or worse, now firmly rooted as an adage of modern business, and cheap, plentiful data is considered a cornerstone of the modern economy. As with any resource, reckless exploitation is not without consequences, especially when the technology is still relatively new.

Shoddy and deceptive data practices gave us the Facebook-Cambridge Analytica scandal. A breach at Equifax exposed the personal data of 147 million people, a flaw in Google+ leaked data on some 500,000 users, and a security vulnerability at Facebook (again) exposed the profiles of at least 50 million accounts. These breaches are only a few among many, and all of them occurred within the last few years.

In 2018, the European Union's General Data Protection Regulation (GDPR) came into effect, implementing rules surrounding the collection, consent, and use of people's private data by businesses. Perhaps unsurprisingly, Google was soon issued an (admittedly paltry) 50 million euro fine for GDPR failures. Privacy concerns are one reason deep learning practitioners working with sensitive data are wary of cloud services.

Don't Expect the Tech Companies to Maintain Privacy

Expecting the big 5 tech companies to just "do the right thing" with data and privacy is a bit like leaving a toddler alone with a pile of cookies and expecting them not to eat any. Leaders of tech giants, including Tim Cook of Apple and Satya Nadella of Microsoft, as well as Cisco, have called for privacy to be considered a human right. Despite nominally good intentions we'd sooner trust the toddler; you can't incentivize every wrong action and then expect institutions to behave morally on ethical fortitude alone. This makes it necessary to develop cryptographic tools that protect privacy in tandem with deep learning applications, making it as easy as possible for practitioners to respect privacy.

In the best-case scenario, it would become mathematically intractable for the wrong parties to recover the plaintext data or model parameters. As we'll get into below, protecting privacy always comes with some tradeoffs, and the practical level of privacy protection may depend on the trust relationship between the parties involved.

Privacy-preserving Machine Learning

In response to the challenges above, privacy-preserving machine learning and artificial intelligence have become an important research and development focus. The goals of this movement include, to paraphrase Dropout Labs, the company behind the privacy-preserving deep learning framework TF-Encrypted:

- Sensitive data can enable better AI, but shouldn't compromise privacy

- AI alignment depends on owner/stakeholder control of data

- Sharing data and models without compromising sensitive information enables new possibilities.

We can formulate ideas about what can be accomplished by combining machine learning and cryptography based on work already underway. A recent grant from The RAAIS Foundation is funding the following projects at OpenMined, the community developing PySyft.

- Encrypted language translation: language-to-language translation without exposing the original message content or its translation

- Federated learning data science platform: enabling distributed machine learning without giving up local control of data

- Encrypted linear regression for genetics data: enabling collaborative machine learning while maintaining data security.

Picking the Right Deep Learning Tools

If you're convinced that safeguarding privacy in deep learning is important, you'll next want to pick the right tools for the job. PySyft and TF-Encrypted are two community-driven projects incorporating privacy tools with the capabilities of PyTorch and TensorFlow. The aim of these projects is to reduce barriers to entry and pain points to the point where it is as easy to implement encrypted or privacy-preserving deep learning as it is to use standard PyTorch or Keras, for both you and your computation platform. Let's dive into the major differences below.

The Core Concepts in Privacy Preserving Deep Learning

In general, PySyft has roots in using homomorphic encryption (HE) as a means to protect privacy, while TF-Encrypted drew early inspiration from secure multi-party computation (MPC) as a core cryptographic primitive for ML privacy. Over time, both frameworks have grown to support additional privacy-preserving technologies, and because the field is a rapidly developing research area, both are constantly adding new features.

Federated Learning

Federated learning is among the most well-known (and widely adopted) methods for protecting privacy in machine learning. Federated learning sends the model to the data for training instead of the other way around. In this way, personal information can be used to improve machine learning services like predictive typing without collecting the data in a central repository.

If you use an Android or iOS phone, chances are your device is capable of participating in federated learning, keeping your local data local and sending an updated version of the global model (or training gradients for same) to be securely aggregated with millions of other model updates before being applied to the centrally controlled model. Both PySyft and TF-Encrypted have incorporated federated learning, and with either framework you can either mitigate private data leakage with a trusted aggregator, or use secure computation to keep the model updates encrypted until after they've been combined. The only obvious difference in how the different frameworks handle secure aggregation under the hood is that PySyft uses the SPDZ protocol while TF-Encrypted uses a slightly more specialized version of SPDZ called Pond.
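As a rough illustration of the idea of sending the model to the data, here is a minimal sketch of federated averaging in plain Python. It is not how PySyft or TF-Encrypted implement federation: the one-dimensional linear model, the synthetic client data, and the helper names (`local_update`, `federated_average`, `make_client_data`) are all assumptions made for this example, and the aggregator here is a trusted one that sees each update in the clear.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """Train a 1-D linear model y = w*x + b on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)

def federated_average(updates, sizes):
    """Average client models, weighting each by its number of local examples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)

def make_client_data(rng, n):
    # Hypothetical private data following y = 2x + 1; it never leaves the client.
    return [(x, 2.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(n))]

rng = random.Random(0)
clients = [make_client_data(rng, n) for n in (20, 30, 50)]
global_model = (0.0, 0.0)

for round_ in range(50):
    # Each client starts from the current global model and trains locally.
    updates = [local_update(global_model, data) for data in clients]
    # Only the updated parameters are sent back and combined.
    global_model = federated_average(updates, [len(d) for d in clients])

print(global_model)
```

The server only ever handles model parameters, never the `(x, y)` pairs. With secure aggregation, even the individual updates would stay hidden until after they are combined.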

Serving Encrypted Models with Data Privacy

Although we've mostly discussed data privacy, it's important to remember there are at least as many reasons a stakeholder would want to maintain model privacy. Model privacy can be motivated by the need to secure models that embody valuable IP, or by cases where a model inadvertently reveals something about its training data. Safeguarding model privacy can also give machine learning engineers and data scientists an incentive to develop models they otherwise wouldn't.

TF-Encrypted and PySyft are both built to serve encrypted models in a distributed way, and you can even combine the two in interesting ways. That being said, encrypting model parameters doesn't do anything to protect the model architecture. Deep learning sits at a confluence of open source culture, societal concerns, big business interests and privacy, and we are at the very beginning of collectively exploring what intellectual property in the context of AI will mean in the future.
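To give a feel for what serving an encrypted model involves, the sketch below computes a dot product between secret-shared weights and a secret-shared input using multiplication triples, the building block that SPDZ-style protocols rely on. This is a simplified illustration, not either framework's implementation: it assumes a trusted dealer for the triples (the hypothetical `beaver_triple` helper), works on small integers rather than fixed-point numbers, omits the authentication that SPDZ adds, and runs both parties in one process.

```python
import secrets

Q = 2**61 - 1  # prime modulus, an arbitrary choice for this sketch

def share(value):
    """Split an integer into two additive shares mod Q."""
    s0 = secrets.randbelow(Q)
    return [s0, (value - s0) % Q]

def reconstruct(shares):
    """Recombine shares; only done on values that are meant to be revealed."""
    return sum(shares) % Q

def beaver_triple():
    """Hypothetical trusted dealer: shares of random a, b and of c = a * b."""
    a, b = secrets.randbelow(Q), secrets.randbelow(Q)
    return share(a), share(b), share(a * b % Q)

def secure_multiply(x_sh, y_sh):
    """Multiply two shared values without either party seeing x or y."""
    a_sh, b_sh, c_sh = beaver_triple()
    # The parties open the masked values d = x - a and e = y - b.
    d = reconstruct([(x - a) % Q for x, a in zip(x_sh, a_sh)])
    e = reconstruct([(y - b) % Q for y, b in zip(y_sh, b_sh)])
    # Each party computes its share of x*y = c + d*b + e*a + d*e locally.
    z_sh = [(c + d * b + e * a) % Q for a, b, c in zip(a_sh, b_sh, c_sh)]
    z_sh[0] = (z_sh[0] + d * e) % Q  # the public term d*e is added once
    return z_sh

def secure_dot(w_sh, x_sh):
    """Shared dot product: the sum of pairwise secure products."""
    total = [0, 0]
    for w_i, x_i in zip(w_sh, x_sh):
        prod = secure_multiply(w_i, x_i)
        total = [(t + p) % Q for t, p in zip(total, prod)]
    return total

weights = [3, 1, 4]   # model owner's private integer weights (illustrative)
features = [2, 7, 1]  # client's private integer input (illustrative)

w_shares = [share(w) for w in weights]
x_shares = [share(x) for x in features]
prediction = reconstruct(secure_dot(w_shares, x_shares))
print(prediction)
```

Neither party ever holds the weights or the input in the clear; only the final prediction is reconstructed. Note that the structure of the computation (a three-element dot product) is still visible to both parties, which is the sense in which encrypting parameters does not hide the architecture.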

left: the "AI generated" portrait Christie's is auctioning off right now

right: outputs from a neural network I trained and put online *over a year ago*.

Does anyone else care about this? Am I crazy for thinking that they really just used my network and are selling the results? pic.twitter.com/wAdSOe7gwz

— Robbie Barrat (@DrBeef_) October 25, 2018

Encrypted Training for Deep Learning Applications

In situations where plaintext training just won't do, you'll need to train an encrypted model on encrypted data. This entails a non-negligible computational overhead compared to standard training, but it may be desirable when both the model and data need to be kept secret. For example, this is true in sensitive financial or medical machine learning contexts.

PySyft has a tutorial for accomplishing this by secret sharing the weights and data between a model owner and two data owners (Alice and Bob, naturally). TF-Encrypted is capable of encrypted training as well, and its learning material includes an example of private training for logistic regression. TF-Encrypted seems a little behind PySyft in supporting private training for now, as we have yet to see an example of back-propagating through non-linear activation functions using TF-Encrypted. However, this should be possible in principle.

How does encrypted training work using secret sharing?

1: The model owner secret shares a model with data owners Alice and Bob, who also serve as workers.

2: Alice and Bob secret share their own local, private data with each other, and train the model on their own data.

3: Before returning model updates to the model owner, Bob and Alice combine their encrypted gradients. This means that even after the encrypted update has been applied to the model, the model owner cannot determine the individual contributions from Alice or Bob to the now updated model.
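The third step above can be sketched with additive secret sharing in plain Python. This is a toy illustration under stated assumptions, not the PySyft tutorial's code: the modulus `Q`, the fixed-point `SCALE`, the helper names, and the gradient values are all made up for the example, and both parties run in a single process.

```python
import secrets

Q = 2**61 - 1   # modulus for the shares (an arbitrary choice for this sketch)
SCALE = 10**6   # fixed-point precision for encoding floats as integers

def encode(x):
    """Encode a float as a fixed-point integer mod Q."""
    return round(x * SCALE) % Q

def decode(v):
    """Decode back to a float, mapping values above Q/2 to negatives."""
    if v > Q // 2:
        v -= Q
    return v / SCALE

def share(value, n_parties=2):
    """Split an encoded value into random-looking shares that sum to it mod Q."""
    shares = [secrets.randbelow(Q) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % Q)
    return shares

def reconstruct(shares):
    return sum(shares) % Q

alice_grad, bob_grad = 0.25, -0.75  # illustrative private gradients

# Each data owner splits their gradient and hands one share to the other.
a_shares = share(encode(alice_grad))
b_shares = share(encode(bob_grad))

# Each party adds the two shares it holds; no gradient is ever revealed.
sum_shares = [(a + b) % Q for a, b in zip(a_shares, b_shares)]

# The model owner receives only the shares of the sum and reconstructs it.
combined = decode(reconstruct(sum_shares))
print(combined)
```

Because the model owner only reconstructs the sum, the individual contributions from Alice and Bob stay hidden, which is the property described in step 3.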

Coding Style and Development Ecosystems

As any Python practitioner will tell you, a big part of the utility in a programming language or framework comes from the community surrounding the tools. As an open source language, Python has spawned numerous libraries, packages, and frameworks for getting things done. Chances are, if you've got a Python-related problem, somebody has already tried to solve it and laid some open-source groundwork for you to build on.

Community adoption of open source tools is a virtuous cycle: more users beget more developers, and more developers beget more capable tools. This is why, for now, TensorFlow is probably a more practical deep learning framework for production. Although PyTorch has a dedicated following of researchers and developers who admire the framework for its flexibility, TensorFlow still has about 3X as many users.

That means you have access to more tutorials, more open source projects to learn from, and more bug fixes and how-tos on Stack Overflow. Interestingly enough, the trend is the opposite for PySyft vs TF-Encrypted. As of this writing PySyft has over 3500 stars and 800 forks on GitHub. Compare that to TF-Encrypted, which has amassed ~360 stars and 55 forks so far. Fortunately for us, these projects exist in a non-zero-sum ecology. You can actually do things like use the Keras interface from TF-Encrypted through PySyft.

We wouldn't recommend making the community size a deciding factor in choosing between the two, as the two frameworks are in large part evolving in a shared and collaborative ecosystem. PySyft is also part of a broader mission for distributed data science and data ownership championed by Openmined.org, and the cross-collaboration between OpenMined and Dropout Labs is strong as well.

Another factor that may affect your ability to learn and build with either framework is the coding style and the type of training resources readily available. Appealing to the data science crowd, PySyft provides its tutorials in the form of Jupyter Notebooks. TF-Encrypted instead provides most of its examples as Python scripts, which are going to be much easier to work with for the Vim/Emacs users out there. One challenge unique to TF-Encrypted is that the project will have to integrate the substantial changes to TensorFlow under TF-2.0, in addition to its ongoing research and development.

Privacy Tradeoffs

Speed: early versions of federated learning were 41 times slower than standard training, but this has since improved to an overhead of just 91% more wall time for the same training scenario.
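To put those two figures on the same scale, the short calculation below converts them into comparable slowdown factors. It reads the 41x figure as a slowdown relative to plain training, which is an assumption, and the 10-minute baseline is a hypothetical number chosen only to make the difference concrete.

```python
early_slowdown = 41.0    # early federated training: 41x the plain wall time
current_overhead = 0.91  # current overhead: 91% more wall time

# 91% extra time means the run takes 1.91x as long as plain training.
current_slowdown = 1.0 + current_overhead

# How much faster the current version is than the early one.
improvement = early_slowdown / current_slowdown

baseline_minutes = 10.0  # hypothetical plain-training run
early_minutes = baseline_minutes * early_slowdown
current_minutes = baseline_minutes * current_slowdown

print(f"current slowdown: {current_slowdown:.2f}x")
print(f"improvement over early versions: {improvement:.1f}x")
print(f"10-minute job: {early_minutes:.0f} min then, {current_minutes:.1f} min now")
```

Under that reading, a job that takes 10 minutes in the clear went from roughly 410 minutes to about 19.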

Simplicity: Developing performant software means optimizing two major resources at once: the computational resources needed to run programs and the time invested by the people developing them. Here PySyft does pretty well. In the MNIST training examples, adding privacy-preserving federation to the standard PyTorch MNIST example takes only about 10 extra lines of code. Encrypted inference is even better: 6 lines to set up the necessary PySyft resources, a drop-in replacement for the test set dataloader, and a single line to encrypt the model come test time.

Privacy Preserving Deep Learning: Closing Remarks

Businesses, governments, medical institutions, and members of the public are all developing a demand for privacy safeguards in machine learning as awareness and technical literacy are increasing. Any organization that wants to deal with non-trivial datasets will need to consider the level of trust and privacy their applications warrant, and take concrete actions to ensure those needs are met. Luckily, frameworks like PySyft and TF-Encrypted are making this almost as easy as using vanilla PyTorch or Keras.

Any discussion of PySyft vs TF-Encrypted is going to include an element of the ol' PyTorch versus TensorFlow debate. As the names suggest, PySyft was originally based on PyTorch, while TF-Encrypted is unsurprisingly built on top of TensorFlow. If you are part of an organization that has already invested in developing in-house expertise in one framework or the other, you may think it best to stick with what you know and choose your privacy-preservation framework accordingly. You would mostly be correct, but there are interesting ways in which PySyft can take advantage of the Keras API and even use TF-Encrypted as a backend, so you shouldn't let pre-existing familiarity be the sole deciding factor.

It's worth noting that depending on where your application's needs land on the privacy versus performance spectrum, the TensorFlow ecosystem has also produced related projects in TensorFlow Privacy and TensorFlow Federated. It may be worth considering these other options depending on how Byzantine your fault tolerance needs to be.

As of this writing, PySyft is more mature and has a bigger community, but TF-Encrypted is bound to grow quickly given the substantial pre-existing user base of TensorFlow. PySyft currently has more plentiful and better-developed tutorials (including a course taught by the project founder at Udacity), and if you are starting from scratch it will probably be easier to learn.

The most general and fruitful approach given the state of privacy-preserving deep learning in 2019 is probably to learn and use PySyft and use the built-in hooks to TF-Encrypted when you need to accomplish a task in a more TensorFluidic way. At the end of the day what's important is not which framework you choose, but what you do with it.


Privacy Preserving Deep Learning and the Problem with Data

Data has become a driving economic force in our society. This fact alone makes privacy-preserving deep learning, so important. Tools like PySyft and TF-Encrypted are becoming more popular and very much needed in applications. The phrase "data is the new oil", for better or worse, is now firmly rooted as a popular adage of modern business as the availability of cheap and plentiful data. Data is now considered to be a cornerstone of the modern economy. As with any resource, reckless exploitation is not without consequences, especially when the technology is still relatively new.

Shoddy and deceptive data-related practices gave us the Facebook-Cambridge Analytica scandal, a breach at Equifax exposed the personal data of 147 million, a flaw in Google+ leaked data on some 50,000 users, and a security vulnerability at Facebook (again) exposed the profiles of at least 50 million accounts. These privacy breaches are only a few among many. And these are ones that have just occurred in the last few years.

In 2018, the European Union's General Data Protection Regulation (GDPR) came into effect, implementing rules surrounding collection, consent, and use of people's private data by businesses. Perhaps unsurprisingly Google was soon issued an (admittedly paltry) 50 million USD fine for GDPR failures. Privacy is one factor that constrains deep learning practitioners working with sensitive data to be wary of cloud services.

Don't Expect the Tech Companies to Maintain Privacy

Big Data Needs to Maintain Privacy

Expecting the big 5 tech companies to just "do the right thing" with data and privacy is a bit like leaving a toddler alone with a pile of cookies and expecting them not to eat any. Leaders of tech giants including Apple's Tim Cook, Satya Nadella of Microsoft, and Cisco have all called for privacy to be considered a human right. Despite nominally good intentions we'd sooner trust the toddler; you can't incentivize every wrong action and expect moral institutional behavior based on ethical fortitude alone. This makes it necessary to develop the cryptographic tools necessary to protect privacy in tandem with the development of deep learning applications, making it as easy as possible for deep learning practitioners to respect privacy.

In the best case scenario, it would become mathematically intractable for the wrong parties to decipher the plaintext data or model parameters. As we'll get into below, protecting privacy always comes with some tradeoffs and the practical level of privacy protection may depend on the trust relationship between involved parties.

Privacy-preserving Machine Learning

In response to the challenges above, privacy-preserving machine learning and artificial intelligence has become an important research and development focus. The goals of this movement include, to paraphrase from Dropout Labs, the company behind the privacy-preserving deep learning framework TF-Encrypted:

- Sensitive data can enable better AI, but shouldn't compromise privacy

- AI alignment depends on owner/stakeholder control of data

- Sharing data and models without compromising sensitive information enables new possibilities.

We can formulate ideas about what can be accomplished by combining machine learning and cryptography based on work already underway. A recent grant from The RAAIS Foundation is funding the following projects at OpenMined, the community developing PySyft.

- Encrypted language translation: language-to-language translation without exposing the original message content or its translation

- Federated learning data science platform: enabling distributed machine learning without giving up local control of data

- Encrypted linear regression for genetics data: enabling collaborative machine learning while maintaining data security.

Picking the Right Deep Learning Tools

Picking the Right Tools is Very Important for Your Deep Learning Application

If you're convinced that safeguarding privacy in deep learning is important, you'll next want to pick the right tools for the job. PySyft and TF-Encrypted are two community-driven projects incorporating privacy tools with the capabilities of PyTorch and TensorFlow. The aim of these projects is to reduce barriers to entry and pain points to the point where it is as easy to implement encrypted or privacy-preserving deep learning as it is to use standard PyTorch or Keras, for both you and your computation platform. Let's dive into the major differences below.

The Core Concepts in Privacy Preserving Deep Learning

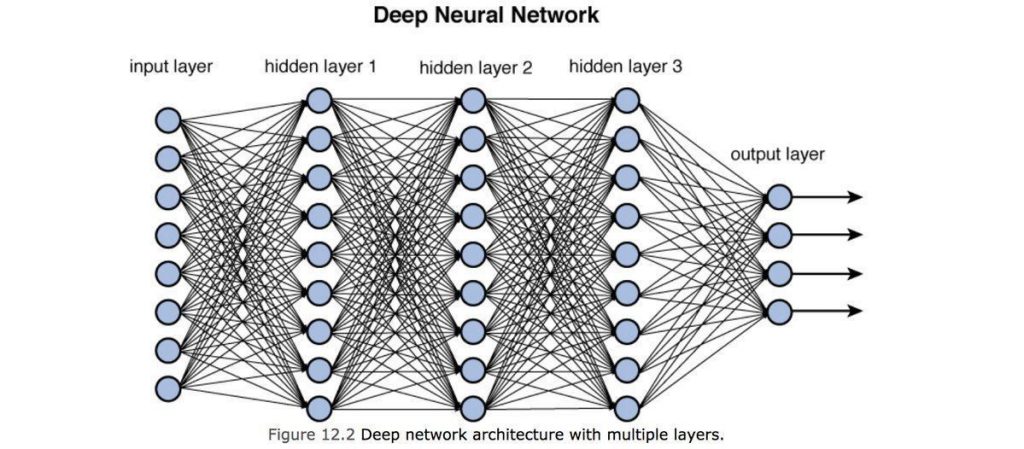

In general PySyft has roots in using homomorphic encryption (HE) as a means to protect privacy, while TF-Encryped drew early inspiration from secure multi-party computation (MPC) as a core cryptographic primitive for ML privacy. Over time, these frameworks have both grown to facilitate additional privacy-preservation technology, and as the field is a rapidly developing research area they are both constantly adding new features.

Federated Learning

Federated learning is among the most well-known (and widely adopted) methods for protecting privacy in machine learning. Federated learning sends the model to the data for training instead of the other way around. In this way, personal information can be used to improve machine learning services like predictive typing without collecting the data to a central repository.

If you use an Android or iOS phone, chances are your device is capable of participating in federated learning, keeping your local data local and sending an updated version of the global model (or training gradients for same) to be securely aggregated with millions of other model updates before being applied to the centrally controlled model. Both PySyft and TF-Encrypted have incorporated federated learning, and with either framework you can either mitigate private data leakage with a trusted aggregator, or use secure computation to keep the model updates encrypted until after they've been combined. The only obvious difference in how the different frameworks handle secure aggregation under the hood is that PySyft uses the SPDZ protocol while TF-Encrypted uses a slightly more specialized version of SPDZ called Pond.

Serving Encrypted Models with Data Privacy

Although we've discussed mostly data privacy, it's important to remember there are at least as many reasons a stakeholder would want to maintain model privacy as well. Model privacy can be motivated by the need to secure models instilled with valuable IP, or where the models inadvertently reveal something about its training data. Safeguarding model privacy can also help provide incentives for machine learning engineers and data scientists to develop models they otherwise wouldn't develop.

TF-Encrypted and PySyft are both built to serve encrypted models in a distributed way, and you can even combine the two in interesting ways. That being said, encrypting model parameters doesn't do anything to protect the model architecture. Deep learning sits at a confluence of open source culture, societal concerns, big business interests and privacy, and we are at the very beginning of collectively exploring what intellectual property in the context of AI will mean in the future.

left: the "AI generated" portrait Christie's is auctioning off right now

right: outputs from a neural network I trained and put online *over a year ago*.

Does anyone else care about this? Am I crazy for thinking that they really just used my network and are selling the results? pic.twitter.com/wAdSOe7gwz

— Robbie Barrat (@DrBeef_) October 25, 2018

Encrypted Training for Deep Learning Applications

Training a Deep Learning Application

In situations where plaintext training just won't do, you'll need to train an encrypted model on encrypted data. This entails a non-negligible computational overhead compared to standard training, but it may be desirable when both the model and data need to be kept secret. For example, this is true in sensitive financial or medical machine learning contexts.

PySyft has a tutorial for accomplishing this using secret sharing of weights, and data between a model owner and two data owners (Alice and Bob, naturally). TF-Encrypted is capable of encrypted training as well, and their learning material includes an example of private training for logistic regression. TF-Encrypted seems a little behind PySyft in supporting private training for now, as we have yet to see an example of back-propagating through non-linear activation functions using TF-Encrypted. However, this should be possible in principle.

How does encrypted training work using secret sharing?

1: The model owner secret shares a model with data owners Alice and Bob, who also serve as workers.

2: Alice and Bob secret share their own local, private data with each other, and train the model on their own data.

3: Before returning model updates to the model owner, Bob and Alice combine their encrypted gradients. This means that even after the encrypted update has been applied to the model, the model owner cannot determine the individual contributions from Alice or Bob to the now updated model.

Coding Style and Development Ecosystems

As any python practitioner will tell you, a big part of the utility in a programming language or framework comes from the community surrounding the tools. As an open

source language, Python has spawned numerous libraries, packages, and frameworks for getting things done. Chances are, if you've got a Python-related problem, somebody has already tried to solve it and laid some open-source groundwork for you to build on.

Community adoption of open source tools is a virtuous cycle, where more users beget more developers. And more developers beget more capable tools. This why, for now, TensorFlow is probably a more practical deep learning framework for production. Although PyTorch has a dedicated following of researchers and developers who admire the framework for it's flexibility, TensorFlow still has about 3X as many users.

That means you have access to more tutorials, more open source projects to learn from, and more bug fixes and how-to's on Stack Overflow. Interestingly enough, the trend is the opposite for PySyft vs TF-Encrypted. As of this writing PySyft has over 3500 stars and 800 forks on GitHub. Compare that to TF-encrypted, which has amassed ~360 stars and 55 forks so far. Fortunately for us these projects exist in a non-zero sum ecology. You can actually do things like use the keras interface from TF-Encrypted through PySyft.

We wouldn't recommend making the community size a deciding factor in choosing between the two, as the two frameworks are in large part evolving in a shared and collaborative ecosystem. PySyft is also part of a broader mission for distributed data science and data ownership championed by Openmined.org, and the cross-collaboration between OpenMined and Dropout Labs is strong as well.

Another factor that may affect your ability to learn and build with either framework is the coding style, and the type of training resources readily available. Appealing to the data science crowd, PySyft provides their tutorials in the form of Jupyter Notebooks. TF-Encrypted instead provides most of their examples as python code, which is going to be much easier to work with for the Vim/Emacs users out there. One challenge unique to TF-Encrypted is that the project will have to integrate the substantial changes to TensorFlow under TF-2.0, in addition to their ongoing research and development.

Privacy Tradeoffs

Speed: early versions of federated learning were 41 times slower but have now been improved to an additional overhead of just 91% more wall time for the same training scenario.

Simplicity: Developing performant software has two major resources that need to simultaneously optimize: the computational resources to run programs and the time invested by the people developing it. Here PySyft does pretty well, in MNIST training examples adding privacy-preserved federation to the standard PyTorch MNIST exampletakes only about 10 extra lines of code. Encrypted inference is even better: 6 lines to set up the necessary PySyft resources, a drop in replacement for the test set dataloader, and a single line to encrypt the model come test time.

Privacy Preserving Deep Learning: Closing Remarks

Businesses, governments, medical institutions, and members of the public are all developing a demand for privacy safeguards in machine learning as awareness and technical literacy are increasing. Any organization that wants to deal with non-trivial datasets will need to consider the level of trust and privacy their applications warrant, and take concrete actions to ensure those needs are met. Luckily, frameworks like PySyft and TF-Encrypted are making this almost as easy as using vanilla PyTorch or Keras.

Any discussion of PySyft vs TF-Encrypted is going to include an element of the ol' PyTorch versus TensorFlow debate. As the names would have you guess, PySyft is originally based on PyTorch while TF-Encrypted is unsurprisingly built on top of TensorFlow. If you are part of an organization that has already invested in developing in-house expertise in one or the other framework you may think it is best to stick with what you know and choose your privacy preservation framework accordingly. However, you would mostly be correct, but in fact there are interesting ways in which PySyft can take advantage of the Keras API and even use TF-Encrypted as a backend, so you shouldn't let your pre-existing familiarity be the sole deciding factor in your decision.

It's worth noting that depending on where your application's needs land on the privacy versus performance spectrum, the TensorFlow ecosystem has also produced related projects in TensorFlow Privacy and TensorFlow Federated. It may be worth considering these other options depending on how Byzantine your fault tolerance needs to be.

As of this writing, PySyft is more mature and has a bigger community for now, but TF-Encrypted is bound to grow quickly given the substantial pre-existing user base of TensorFlow. PySyft currently has more plentiful and better developed tutorials (including a course taught by the project founder at Udacity), and if you are starting from scratch it will probably be easier to learn.

The most general and fruitful approach given the state of privacy-preserving deep learning in 2019 is probably to learn and use PySyft and use the built-in hooks to TF-Encrypted when you need to accomplish a task in a more TensorFluidic way. At the end of the day what's important is not which framework you choose, but what you do with it.