BeeGFS Parallel Filesystem v6

NOTE: BeeGFS v6 is not the latest version of BeeGFS. BeeGFS v7 has a stable release and introduces many features, many of which add to metadata consumption; however, there are still environments running BeeGFS v6 clients.

BeeGFS is a high-performance parallel filesystem designed for simplicity and scalability. While there is a lot of documentation online at www.beegfs.io, there is not much written about how to size metadata services for BeeGFS parallel clusters. Deciding how much capacity to procure and allocate to BeeGFS-meta services is important, because the metadata space BeeGFS consumes can vary widely depending on the composition of the files stored in the BeeGFS storage pool(s). Exxact ran a series of experiments on a BeeGFS v6 parallel cluster to determine the correlation between:

- Files in the BeeGFS pool and their contribution to metadata space consumption on the BeeGFS-metadata node(s).

- Files in the BeeGFS pool and their contribution to iNode utilization on the BeeGFS-metadata node(s).

- Directory creation within the BeeGFS pool and its contribution to metadata space and iNode utilization on the BeeGFS-metadata node(s).

BeeGFS Metadata Calculation Using Existing Application Output

To estimate how much metadata the BeeGFS-meta server(s) in the cluster will consume, it's useful to think about the following:

- The number of files you may be storing on the BeeGFS storage pool (will it be a lot of very small files or a smaller number of large files?)

This alone can be a little daunting. If you have existing instances of some of the applications you use, or plan to use, on the new BeeGFS implementation, it is extremely helpful to measure how many individual files each application generates in a typical job cycle.

- Some applications generate a very large number (thousands to tens of thousands) of individual files (trajectory files, for example).

- Some applications generate a few large output files (5 GB+ each).

If you have determined that you may generate around 10 million files over a given period that need to be retained, the example below shows how much BeeGFS-meta space may be consumed.

Each individual file created on the BeeGFS pool takes around 4 KB of metadata space.

Note: sizes here use decimal units (1 KB = 1,000 B).

Files: 10,000,000

Metadata space consumed per file: 4 KB

Note: BeeGFS-meta creates 2 entries (files) on the metadata server for every file in the BeeGFS pool.

10,000,000 * 4 KB = 40,000,000 KB (about 40 GB of BeeGFS metadata space consumed)
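The arithmetic above can be wrapped in a small shell function so it is easy to rerun with your own file counts. This is a minimal sketch, assuming the ~4 KB-per-file figure from the v6 testing and decimal units; the function name `estimate_metadata_kb` is illustrative and not part of BeeGFS.

```bash
#!/usr/bin/env bash
# Sketch: estimate BeeGFS v6 metadata space from an expected file count.
# Assumes ~4 KB of metadata per file (the figure from the v6 testing)
# and decimal units (1 KB = 1,000 bytes). The function name is illustrative.
estimate_metadata_kb() {
  local files="$1"              # expected number of files in the BeeGFS pool
  local kb_per_file="${2:-4}"   # metadata KB per file; defaults to 4 KB
  echo $(( files * kb_per_file ))
}

meta_kb=$(estimate_metadata_kb 10000000)
echo "Metadata: ${meta_kb} KB (about $(( meta_kb / 1000000 )) GB)"
# prints: Metadata: 40000000 KB (about 40 GB)
```

Passing a second argument lets you plug in a different per-file figure if your own measurements differ from 4 KB.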

Metadata Calculation Based on BeeGFS Storage Pool Size

As an interesting exercise, if you have a large existing file store with a file profile similar to the planned BeeGFS storage pool, you can calculate the average file size in that store and use it to reverse-calculate how many files would fit in a given storage pool, and therefore how much metadata those files would take if the pool were fully utilized. There are awk/gawk one-liners that can do this, but the simplest approach is to first count the total number of files:

find /directory/ -type f | wc -l

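Then get the total size of the directory and its sub-directories (the -s flag prints a single total rather than one line per sub-directory):

du -sh /directory

Then divide the total size by the file count:

average-file-size = directory-size / file-count

For example:

27,000 MB / 990,983 files ≈ 0.0272 MB (about 27.2 KB) per file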
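The awk one-liner approach mentioned above can produce the average in a single pass instead of combining find | wc -l with du. This is a sketch that assumes GNU find (its `-printf '%s'` prints each file's apparent size in bytes; BSD/macOS find lacks `-printf`), and the helper name `avg_file_size_bytes` is hypothetical. Summing apparent sizes also sidesteps the fact that du reports allocated blocks by default, which for small files can exceed the data they actually hold.

```bash
#!/usr/bin/env bash
# Sketch: average size, in bytes, of the regular files under a directory.
# Assumes GNU find: -printf '%s\n' prints each file's apparent size in bytes.
# The helper name is hypothetical.
avg_file_size_bytes() {
  local dir="$1"
  find "$dir" -type f -printf '%s\n' |
    awk '{ total += $1; count++ }
         END { if (count == 0) { print 0 } else { printf "%.0f\n", total / count } }'
}

# Usage: avg_file_size_bytes /directory
```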

If you have a 27 KB average file size, are planning a roughly 400 TB BeeGFS storage cluster, and want a rough idea of how much metadata would be needed if the cluster were fully utilized, see the calculation below.

Storage-In-KB / Average-File-Size-In-KB = Num-Files-If-Filled

429,496,729,600 KB / 27 KB ≈ 15,907,286,281 files

In our BeeGFS v6 testing, these files take about 4 KB of metadata each:

15,907,286,281 * 4 KB = 63,629,145,124 KB (about 63.6 TB)
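The reverse calculation can be sketched the same way. This sketch assumes every file is exactly the average size and reuses the ~4 KB-per-file v6 figure; the helper names are hypothetical, and shell integer division simply drops any fractional file.

```bash
#!/usr/bin/env bash
# Sketch: reverse-calculate metadata for a completely full BeeGFS pool.
# Assumptions: every file has the average size, ~4 KB of metadata per file
# (the BeeGFS v6 figure above), and integer (truncating) shell arithmetic.
# Helper names are hypothetical.
files_if_filled() {
  local capacity_kb="$1" avg_file_kb="$2"
  echo $(( capacity_kb / avg_file_kb ))
}

metadata_kb_if_filled() {
  local capacity_kb="$1" avg_file_kb="$2" kb_per_file="${3:-4}"
  echo $(( $(files_if_filled "$capacity_kb" "$avg_file_kb") * kb_per_file ))
}

capacity_kb=$(( 400 * 1024 * 1024 * 1024 ))   # roughly 400 TB, in KB, as above
echo "Files if filled: $(files_if_filled "$capacity_kb" 27)"
echo "Metadata: $(metadata_kb_if_filled "$capacity_kb" 27) KB"
```

Keeping everything in KB avoids the unit mix-ups that are easy to make when capacity and file size are quoted in different units.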

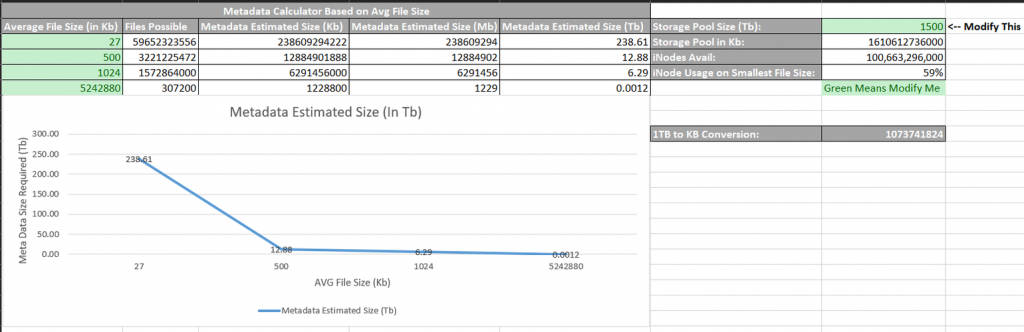

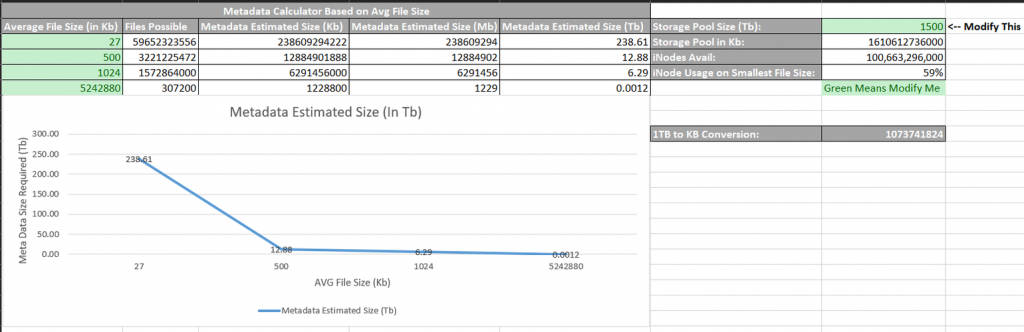

Calculator demonstrating how large metadata requirements can become depending on average file size.

iNode Utilization

iNodes are another consideration, but they should generally not be an issue except on servers with very little space allocated for metadata, because the number of available iNodes scales with the number of blocks (space) in the filesystem. EXT4 is the preferred filesystem for beegfs-meta services (for its xattr support, among other reasons), and while its iNode allocation can be tuned at format time, the typical default is:

1 iNode per 16 KB (16,384 bytes) of filesystem space

For example, a 50 TB EXT4 filesystem should have around 3,276,800,000 available iNodes when mkfs.ext4 is run on the block device with the default settings. In the experiments Exxact Corporation ran with BeeGFS v6, the BeeGFS metadata service consumed 1 iNode per file stored on the BeeGFS storage pool.
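A quick way to sanity-check iNode headroom is to compare the iNode count against the number of files you expect to store. This sketch assumes the 1-iNode-per-16,384-bytes ratio and the 1-iNode-per-BeeGFS-file observation above, and treats the 50 TB example as 50,000 GiB (an assumption that reproduces the 3,276,800,000 figure); helper names are hypothetical. mke2fs may select a different ratio for some filesystem sizes (see `/etc/mke2fs.conf`), so `df -i` on the mounted metadata filesystem remains the authoritative check.

```bash
#!/usr/bin/env bash
# Sketch: compare the iNodes an EXT4 metadata filesystem provides with the
# number of BeeGFS files you expect to store.
# Assumes 1 iNode per 16,384 bytes (default ratio) and 1 iNode per BeeGFS
# file, as observed in the v6 testing. Helper names are hypothetical.
ext4_inodes() {
  local fs_bytes="$1" bytes_per_inode="${2:-16384}"
  echo $(( fs_bytes / bytes_per_inode ))
}

enough_inodes() {
  local fs_bytes="$1" expected_files="$2"
  if [ "$(ext4_inodes "$fs_bytes")" -ge "$expected_files" ]; then
    echo yes
  else
    echo no
  fi
}

fs_bytes=$(( 50000 * 1024 * 1024 * 1024 ))   # 50 TB example, taken as 50,000 GiB
echo "Available iNodes: $(ext4_inodes "$fs_bytes")"                      # 3276800000
echo "Room for 10 million files: $(enough_inodes "$fs_bytes" 10000000)"  # yes
```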

Notes

Testing for the analysis above was based on BeeGFS v6 - 19.3-git3

BeeGFS v7 supports new features that likely increase metadata usage significantly; for example, a BeeGFS client can specify which BeeGFS storage servers (storage targets) to write to. The number of metadata servers likely adds to metadata storage as well.
