Introduction

YOLOv8 is an object detection algorithm developed by Ultralytics in the YOLO (You Only Look Once) family. YOLOv8 builds upon the success of YOLOv5 by introducing improvements in accuracy, speed, and deployment efficiency within a more streamlined Python-based framework.

This tutorial is a follow-up to our YOLOv5 PyTorch guide and is designed to help developers, researchers, and AI engineers get up and running with YOLOv8. We’ll detail the characteristics of YOLOv8 with a walkthrough from installation to inference and training on a custom dataset.

Whether you're upgrading an existing pipeline or deploying a new computer vision model on high-performance hardware, YOLOv8 offers a compelling blend of performance and usability.

YOLOv8 Advantages over YOLOv5

YOLOv8 (You Only Look Once) is an open-source object detection pretrained model that introduces several architectural and functional improvements that modernize the object detection workflow. It moves away from YAML-based config files and embraces a Python-native approach, making it easier to integrate, customize, and deploy in the mainstream.

While YOLOv5 remains widely used, YOLOv8 offers several practical advantages that make it a worthwhile upgrade, especially for users seeking higher performance or smoother deployment workflows. Key improvements include:

- Redesigned Architecture: YOLOv8 uses C2f blocks that offer better feature extraction and lower computational overhead compared to previous CSPDarknet designs.

- Multi-Task Support: YOLOv8 also supports instance segmentation and pose estimation, allowing developers to train and deploy multiple vision tasks from the same codebase.

- Improved Training Workflow: Customizing models is more accessible and configurable with a command-line interface and Python API.

- Native Export for Deployment: Exported directly to formats like ONNX, TensorRT, and CoreML without additional conversion scripts.

- Better Logging and Visualization: Training metrics, validation results, and inference outputs are better organized and more informative, making it easier to evaluate performance and make adjustments.

Together, these updates position YOLOv8 as a more scalable and production-friendly tool for real-time vision applications. Many edge applications still use YOLOv5 in deployment due to its ubiquity, and both are easy to use. However, YOLOv8 is the better choice for its accuracy and speed.

YOLOv8 System Requirements

- CPU: 8-24 Core CPU with ample PCIe Lanes

- AMD EPYC 8004, AMD EPYC 4004

- Intel Xeon W, Intel Xeon 6700E

- GPU: Multi-GPU with ample VRAM

- RTX PRO 6000 Blackwell 300W (Server Edition & Max-Q Edition)

- RTX PRO 4500 Blackwell 200W

- Memory: 64GB+ RAM

- Storage: NVMe SSDs of your desired capacity.

In an object detection edge server, having ample GPU memory, system memory, and fast storage can limit performance bottlenecks. YOLOv8, like most object detection algorithms, benefits from a GPU. The hardware you use depends on your deployment style, desired latency, dataset size, and expected speed. Object detection training takes most of the performance but is only done periodically to update your model. Inferencing in edge servers prioritizes performance per watt and can benefit from parallel processing with multi-GPUs or multiple nodes.

For GPUs, we recommend the RTX PRO 6000 GPUs for their exceptional 96GB VRAM and high performance for both training and deployment. For edge server applications, the RTX PRO 4500 or RTX PRO 4000 offers ample memory at a lower power draw with a trade-off in training performance.

Talk to our system engineers to configure your ideal deep learning GPU system.

YOLOv8 Installation and Tutorial

Here’s a short install walkthrough. Here, we used a container environment (Docker). You can also install on a notebook of your choice and you can skip this first step.

1. Set up a Python environment:

python -m venv yolov8-env

source yolov8-env/bin/activate # Linux/macOS

yolov8-env\Scripts\activate # Windows

2. Install Ultralytics YOLOv8 and its dependencies:

pip install ultralytics

from ultralytics import YOLO

# Load a pretrained YOLOv8n model

model = YOLO('yolov8n.pt')

Common Functions for YOLOv8

The following walkthrough shows common tasks using YOLOv8:

- running inference on an image

- training on a custom dataset

- validating model performance

- exporting for deployment

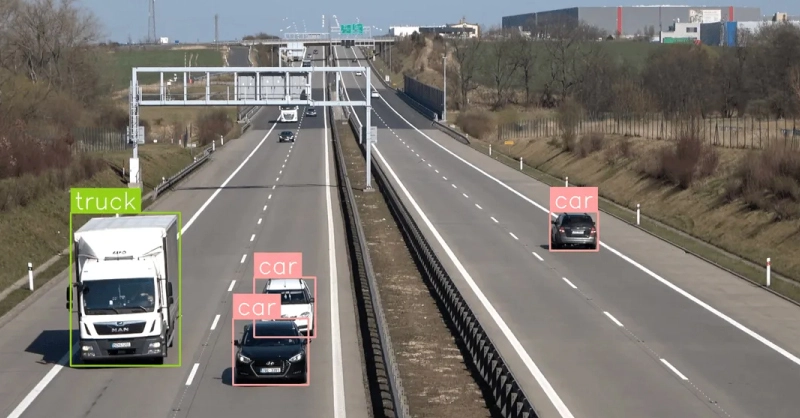

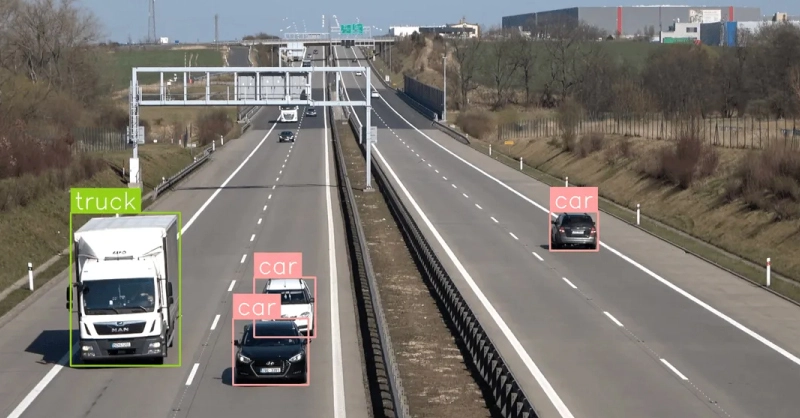

1. Run Inference on an Image

Use a pretrained model to detect objects in a sample image:

# Run inference on an image

results = model('sample.jpg', show=True)

This command opens a window displaying detected objects with bounding boxes and confidence scores.

2. Train on a Custom Dataset

Assuming you have your dataset organized and a data.yaml file defined:

yolo task=detect mode=train model=yolov8n.pt data=data.yaml epochs=100 imgsz=64

- task=detect: Object detection task

- model=yolov8n.pt: Use a pretrained Nano model as a starting point

- data=data.yaml: Points to your dataset and class definitions

- imgsz=640: Image input size (can be adjusted)

- epochs=100: Number of training epochs

A sample data.yaml:

train: /path/to/train/images

val: /path/to/val/images

nc: 3

names: ['car', 'bus', 'truck']

YOLOv8 automatically uses your GPU (via CUDA) if available.

3. Validate the Trained Model

To evaluate your model's accuracy on the validation set:

yolo task=detect mode=val model=runs/detect/train/weights/best.pt data=data.yaml

This outputs precision, recall, mAP@0.5, and mAP@0.5:0.95—key indicators of model performance.

4. Export the Model for Deployment

Once training is complete, export the model to the format that suits your deployment environment:

yolo export model=runs/detect/train/weights/best.pt format=onnx

#You can change the export format for ONNX, TensorRT, IOS, PyTorch and more

Available export formats:

- onnx: For ONNX Runtime or TensorRT

- engine: For direct TensorRT execution

- coreml: For iOS deployment

- torchscript: For PyTorch-based inference apps

With these steps, you're ready to integrate YOLOv8 into real-world applications, from cloud-hosted APIs to edge devices using GPU acceleration.

Final Thoughts and Should You Upgrade from YOLOv5?

YOLOv8 offers object detection practitioners a modern, more capable model. From its Python-native architecture and simplified workflows to its higher accuracy and multi-task capabilities, YOLOv8 is built for today’s high-performance AI environments.

If you're already using YOLOv5, upgrading to YOLOv8 is more than just a marginal improvement. It offers:

- Better Out-of-the-Box Accuracy

- Faster Inference and Training Times

- Simpler Integration and Deployment

- Support for Additional Vision Tasks (segmentation and pose estimation)

For those deploying models in production or working with custom datasets on modern hardware, YOLOv8 provides a streamlined experience with fewer barriers between prototyping and deployment.

If your current YOLOv5 pipeline is heavily customized, test YOLOv8 in parallel before switching over. But for new projects, YOLOv8 is the clear choice, offering more performance, less overhead, and a smoother path to deployment. Most YOLO detection algorithms are good enough for most applications. Test and train your data to find the best consistency.

If you’re looking to deploy detection algorithms and models in production, the right hardware can make a world of difference. Contact Exxact Corp. today to configure your ideal GPU-accelerated solution fit for your budget. and workload.

.png)

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now

YOLOv8 Setup Tutorial for Object Detection

Introduction

YOLOv8 is an object detection algorithm developed by Ultralytics in the YOLO (You Only Look Once) family. YOLOv8 builds upon the success of YOLOv5 by introducing improvements in accuracy, speed, and deployment efficiency within a more streamlined Python-based framework.

This tutorial is a follow-up to our YOLOv5 PyTorch guide and is designed to help developers, researchers, and AI engineers get up and running with YOLOv8. We’ll detail the characteristics of YOLOv8 with a walkthrough from installation to inference and training on a custom dataset.

Whether you're upgrading an existing pipeline or deploying a new computer vision model on high-performance hardware, YOLOv8 offers a compelling blend of performance and usability.

YOLOv8 Advantages over YOLOv5

YOLOv8 (You Only Look Once) is an open-source object detection pretrained model that introduces several architectural and functional improvements that modernize the object detection workflow. It moves away from YAML-based config files and embraces a Python-native approach, making it easier to integrate, customize, and deploy in the mainstream.

While YOLOv5 remains widely used, YOLOv8 offers several practical advantages that make it a worthwhile upgrade, especially for users seeking higher performance or smoother deployment workflows. Key improvements include:

- Redesigned Architecture: YOLOv8 uses C2f blocks that offer better feature extraction and lower computational overhead compared to previous CSPDarknet designs.

- Multi-Task Support: YOLOv8 also supports instance segmentation and pose estimation, allowing developers to train and deploy multiple vision tasks from the same codebase.

- Improved Training Workflow: Customizing models is more accessible and configurable with a command-line interface and Python API.

- Native Export for Deployment: Exported directly to formats like ONNX, TensorRT, and CoreML without additional conversion scripts.

- Better Logging and Visualization: Training metrics, validation results, and inference outputs are better organized and more informative, making it easier to evaluate performance and make adjustments.

Together, these updates position YOLOv8 as a more scalable and production-friendly tool for real-time vision applications. Many edge applications still use YOLOv5 in deployment due to its ubiquity, and both are easy to use. However, YOLOv8 is the better choice for its accuracy and speed.

YOLOv8 System Requirements

- CPU: 8-24 Core CPU with ample PCIe Lanes

- AMD EPYC 8004, AMD EPYC 4004

- Intel Xeon W, Intel Xeon 6700E

- GPU: Multi-GPU with ample VRAM

- RTX PRO 6000 Blackwell 300W (Server Edition & Max-Q Edition)

- RTX PRO 4500 Blackwell 200W

- Memory: 64GB+ RAM

- Storage: NVMe SSDs of your desired capacity.

In an object detection edge server, having ample GPU memory, system memory, and fast storage can limit performance bottlenecks. YOLOv8, like most object detection algorithms, benefits from a GPU. The hardware you use depends on your deployment style, desired latency, dataset size, and expected speed. Object detection training takes most of the performance but is only done periodically to update your model. Inferencing in edge servers prioritizes performance per watt and can benefit from parallel processing with multi-GPUs or multiple nodes.

For GPUs, we recommend the RTX PRO 6000 GPUs for their exceptional 96GB VRAM and high performance for both training and deployment. For edge server applications, the RTX PRO 4500 or RTX PRO 4000 offers ample memory at a lower power draw with a trade-off in training performance.

Talk to our system engineers to configure your ideal deep learning GPU system.

YOLOv8 Installation and Tutorial

Here’s a short install walkthrough. Here, we used a container environment (Docker). You can also install on a notebook of your choice and you can skip this first step.

1. Set up a Python environment:

python -m venv yolov8-env

source yolov8-env/bin/activate # Linux/macOS

yolov8-env\Scripts\activate # Windows

2. Install Ultralytics YOLOv8 and its dependencies:

pip install ultralytics

from ultralytics import YOLO

# Load a pretrained YOLOv8n model

model = YOLO('yolov8n.pt')

Common Functions for YOLOv8

The following walkthrough shows common tasks using YOLOv8:

- running inference on an image

- training on a custom dataset

- validating model performance

- exporting for deployment

1. Run Inference on an Image

Use a pretrained model to detect objects in a sample image:

# Run inference on an image

results = model('sample.jpg', show=True)

This command opens a window displaying detected objects with bounding boxes and confidence scores.

2. Train on a Custom Dataset

Assuming you have your dataset organized and a data.yaml file defined:

yolo task=detect mode=train model=yolov8n.pt data=data.yaml epochs=100 imgsz=64

- task=detect: Object detection task

- model=yolov8n.pt: Use a pretrained Nano model as a starting point

- data=data.yaml: Points to your dataset and class definitions

- imgsz=640: Image input size (can be adjusted)

- epochs=100: Number of training epochs

A sample data.yaml:

train: /path/to/train/images

val: /path/to/val/images

nc: 3

names: ['car', 'bus', 'truck']

YOLOv8 automatically uses your GPU (via CUDA) if available.

3. Validate the Trained Model

To evaluate your model's accuracy on the validation set:

yolo task=detect mode=val model=runs/detect/train/weights/best.pt data=data.yaml

This outputs precision, recall, mAP@0.5, and mAP@0.5:0.95—key indicators of model performance.

4. Export the Model for Deployment

Once training is complete, export the model to the format that suits your deployment environment:

yolo export model=runs/detect/train/weights/best.pt format=onnx

#You can change the export format for ONNX, TensorRT, IOS, PyTorch and more

Available export formats:

- onnx: For ONNX Runtime or TensorRT

- engine: For direct TensorRT execution

- coreml: For iOS deployment

- torchscript: For PyTorch-based inference apps

With these steps, you're ready to integrate YOLOv8 into real-world applications, from cloud-hosted APIs to edge devices using GPU acceleration.

Final Thoughts and Should You Upgrade from YOLOv5?

YOLOv8 offers object detection practitioners a modern, more capable model. From its Python-native architecture and simplified workflows to its higher accuracy and multi-task capabilities, YOLOv8 is built for today’s high-performance AI environments.

If you're already using YOLOv5, upgrading to YOLOv8 is more than just a marginal improvement. It offers:

- Better Out-of-the-Box Accuracy

- Faster Inference and Training Times

- Simpler Integration and Deployment

- Support for Additional Vision Tasks (segmentation and pose estimation)

For those deploying models in production or working with custom datasets on modern hardware, YOLOv8 provides a streamlined experience with fewer barriers between prototyping and deployment.

If your current YOLOv5 pipeline is heavily customized, test YOLOv8 in parallel before switching over. But for new projects, YOLOv8 is the clear choice, offering more performance, less overhead, and a smoother path to deployment. Most YOLO detection algorithms are good enough for most applications. Test and train your data to find the best consistency.

If you’re looking to deploy detection algorithms and models in production, the right hardware can make a world of difference. Contact Exxact Corp. today to configure your ideal GPU-accelerated solution fit for your budget. and workload.

.png)

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now