The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks. cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers. cuDNN is part of the NVIDIA Deep Learning SDK.

Deep learning researchers and framework developers worldwide rely on cuDNN for high-performance GPU acceleration. It allows them to focus on training neural networks and developing software applications rather than spending time on low-level GPU performance tuning. cuDNN accelerates widely used deep learning frameworks, including Caffe, Caffe2, Chainer, Keras, MATLAB, MXNet, TensorFlow, and PyTorch. For access to NVIDIA-optimized deep learning framework containers that have cuDNN integrated into the frameworks, visit NVIDIA GPU Cloud to learn more and get started.

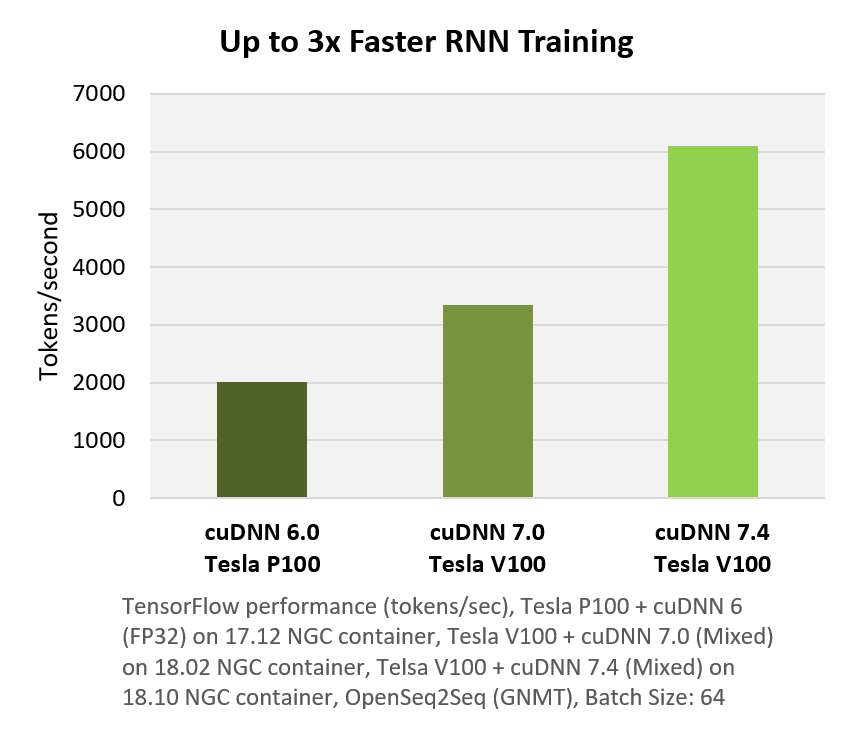

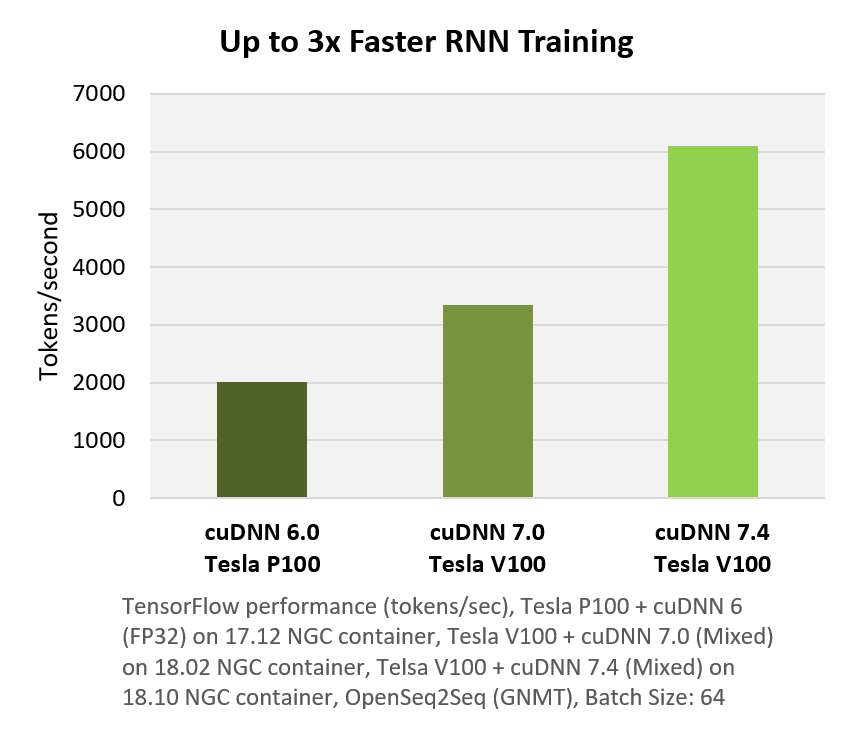

What’s New in NVIDIA cuDNN 7.4

- Up to 3x faster training of ResNet-50 and GNMT on Tesla V100 vs. Tesla P100

- Improved NHWC support for pooling and strided convolution

- Improved performance for common workloads such as ResNet-50 and SSD: batchnorm now supports the NHWC data layout, with an added option to fuse batchnorm with Add and ReLU operations
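To make the last item concrete, here is a minimal reference sketch in plain Python of what a fused batchnorm + Add + ReLU step computes on NHWC data. It is not cuDNN code and does not call the cuDNN API. The function names `nhwc_index` and `bn_add_relu_nhwc` are hypothetical, and the sketch assumes the inference form of batchnorm (precomputed per-channel mean and variance) and a densely packed NHWC buffer.

```python
import math


def nhwc_index(n, h, w, c, H, W, C):
    """Flat offset of element (n, h, w, c) in a densely packed NHWC buffer."""
    return ((n * H + h) * W + w) * C + c


def bn_add_relu_nhwc(x, residual, scale, bias, mean, var, eps=1e-5):
    """Reference semantics: ReLU(batchnorm(x) + residual), element by element.

    x and residual are flat lists in NHWC order; scale, bias, mean and var
    are per-channel lists of length C (hypothetical names, inference form).
    """
    C = len(scale)
    out = []
    for i, (xi, ri) in enumerate(zip(x, residual)):
        c = i % C  # in NHWC the channel is the fastest-varying index
        y = scale[c] * (xi - mean[c]) / math.sqrt(var[c] + eps) + bias[c]
        out.append(max(0.0, y + ri))  # Add, then ReLU, in the same pass
    return out
```

Because the channel index varies fastest in NHWC, the per-channel parameters are read contiguously, and doing the three operations in one pass avoids writing and re-reading the intermediate results. A fused GPU kernel benefits from the same property, although the actual cuDNN implementation is not shown here.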

cuDNN Accelerated Frameworks

- Caffe

- Caffe2

- Chainer

- Microsoft Cognitive Toolkit

- MATLAB

- MXNet

- PaddlePaddle

- PyTorch

- TensorFlow

- Theano

NVIDIA cuDNN 7.4 Key Features

- Grouped convolutions now support NHWC inputs/outputs and FP16/FP32 compute for models such as ResNet and Xception

- Dilated convolutions using mixed-precision Tensor Core operations for applications such as semantic segmentation, image super-resolution, and denoising

- Tensor Core acceleration with FP32 inputs and outputs (previously restricted to FP16 input)

- RNN cells support multiple use cases with options for cell clipping and padding masks

- Automatically select the best RNN implementation with RNN search API

- Arbitrary dimension ordering, striding, and sub-regions for 4D tensors, which allows easy integration into any neural net implementation
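Several of the features above come down to simple index arithmetic. The sketch below, in plain Python with no cuDNN calls, illustrates three of them under stated assumptions: how per-axis strides describe a dimension ordering (NCHW vs. NHWC) and a sub-region of a 4D tensor, how dilation changes the output size of a convolution, and how grouping reduces the number of convolution weights. All function names are hypothetical and chosen for illustration only.

```python
def strides_for(dims, order):
    """Element strides of a densely packed 4D tensor.

    dims:  sizes per axis, e.g. {"n": 2, "c": 3, "h": 4, "w": 5}
    order: memory order from slowest to fastest axis, e.g. "nchw" or "nhwc"
    """
    strides = {}
    step = 1
    for axis in reversed(order):
        strides[axis] = step
        step *= dims[axis]
    return strides


def offset(idx, strides, base=0):
    """Flat offset of a logical index; `base` shifts to a sub-region origin."""
    return base + sum(idx[axis] * strides[axis] for axis in strides)


def conv_out_size(in_size, kernel, pad=0, stride=1, dilation=1):
    """Output length along one spatial axis of a (dilated) convolution."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (in_size + 2 * pad - effective_kernel) // stride + 1


def grouped_conv_weight_count(c_in, c_out, kh, kw, groups):
    """Number of filter weights when channels are split into `groups`."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return c_out * (c_in // groups) * kh * kw
```

The same logical index maps to different memory offsets depending only on the strides, which is why a library that accepts explicit strides can work with a framework's existing buffers without copying. A sub-region reuses the parent tensor's strides with a shifted base offset.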

cuDNN is supported on Windows, Linux, and macOS systems with Volta, Pascal, Kepler, Maxwell, Tegra K1, Tegra X1, Tegra X2, and Jetson Xavier GPUs.

Have any questions? Contact us directly here.
