GPUs Continue to Expand Application Use in Artificial Intelligence & Machine Learning

Artificial intelligence (AI) is set to transform global productivity, working patterns, and lifestyles, and to create enormous wealth. Research firm Gartner expects the global AI economy to increase from about $1.2 trillion last year to about $3.9 trillion by 2022, while McKinsey sees it delivering global economic activity of around $13 trillion by 2030. This transformation is fueled by powerful machine learning (ML) tools and techniques such as deep reinforcement learning (DRL), generative adversarial networks (GANs), gradient-boosted tree models (GBMs), natural language processing (NLP), and more.

Much of the success of modern AI & ML systems depends on their ability to process massive amounts of raw data in parallel using task-optimized hardware. In fact, the modern resurgence of AI started with the 2012 ImageNet competition, where deep-learning algorithms demonstrated an eye-popping improvement in image classification accuracy over their non-deep-learning counterparts. Along with clever programming and mathematical modeling, the use of specialized hardware played a significant role in this early success.

As a shining example, advancements in computer vision (CV) continue to drive many modern AI & ML systems. CV is accelerating almost every domain in the industry, including manufacturing, autonomous driving, and healthcare, enabling organizations to revolutionize the way machines and business systems work. Almost all CV systems have graduated from the traditional rule-based programming paradigm to a large-scale, data-driven ML paradigm. Consequently, GPU-based hardware plays a critical role in ensuring high-quality predictions and classifications by helping crunch massive amounts of training data (often in the range of petabytes).

In this article, we take a look at some of the most talked-about AI and ML application areas in which specialized hardware, and graphics processing units (GPUs) in particular, play an ever-increasing role.

Some of the most talked about areas in AI & ML are:

- Autonomous driving

- Healthcare/Medical imaging

- Fighting disease, drug discovery

- Environmental/Climate science

- Smart manufacturing

Why Do GPUs Shine in These Tasks?

General-purpose CPUs struggle when operating on large amounts of data, e.g., performing linear algebra operations on matrices with tens or hundreds of thousands of floating-point numbers. Under the hood, deep neural networks are mostly composed of operations like matrix multiplications and vector additions.

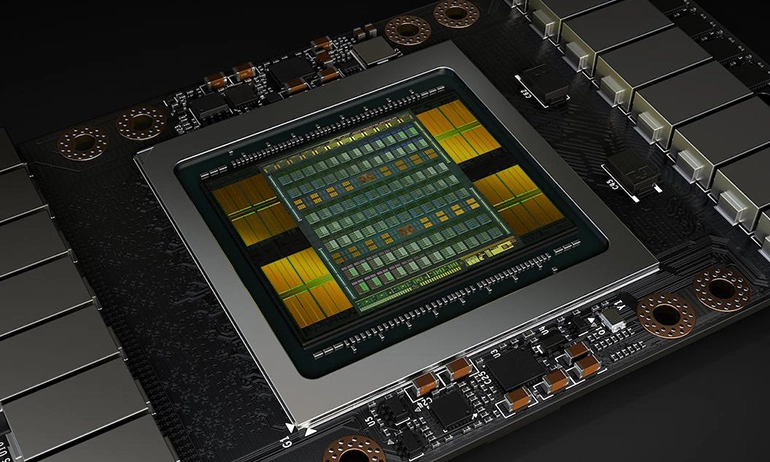

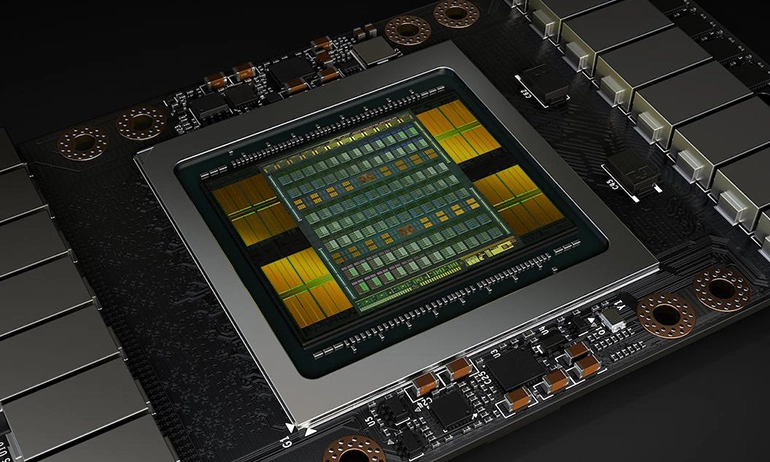
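To make the "matrix multiplications and vector additions" point concrete, here is a minimal sketch of a single fully connected layer written in plain Python. The function names, layer sizes, and numeric values are illustrative assumptions, not taken from any particular framework; real deep learning libraries run the same arithmetic on far larger matrices.

```python
# Minimal sketch of one fully connected (dense) layer: y = W x + b.
# All names, sizes, and values here are illustrative assumptions.

def matvec(weights, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def vec_add(a, b):
    """Add two vectors element by element."""
    return [ai + bi for ai, bi in zip(a, b)]

def dense_layer(weights, bias, x):
    """One layer = one matrix-vector multiplication plus one vector addition."""
    return vec_add(matvec(weights, x), bias)

W = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 0.0]]   # 2 outputs, 3 inputs
b = [0.1, -0.2]
x = [1.0, 0.0, 2.0]

y = dense_layer(W, b, x)
print(y)
```

A deep network stacks many such layers (with non-linearities in between), so the total cost is dominated by these multiply-and-add operations.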

GPUs were developed (primarily for the video gaming industry) to handle a massive degree of parallel computation using thousands of tiny computing cores. They also feature high memory bandwidth to sustain the rapid dataflow (from processing unit to cache to the slower main memory and back) that these computations need when a neural network trains through hundreds of epochs. This makes them ideal commodity hardware for the computational load of computer vision tasks.
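The reason this workload maps so well onto many small cores is that each output element of a matrix-vector product depends only on one row of the matrix and the input vector, so the rows can be processed independently. The sketch below illustrates that structure with a thread pool from the Python standard library; the helper names and matrix sizes are assumptions for illustration. Python threads will not actually deliver a GPU-like speedup, so this shows only how the work decomposes, not how fast it runs.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, x):
    """Dot product of one matrix row with the input vector."""
    return sum(r * xi for r, xi in zip(row, x))

def matvec_serial(matrix, x):
    """Compute every output element one after another."""
    return [dot(row, x) for row in matrix]

def matvec_parallel(matrix, x, workers=4):
    """Compute output elements as independent tasks.

    Each task needs only its own row and x, so no task waits on another.
    A GPU exploits the same independence across thousands of cores.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input rows.
        return list(pool.map(lambda row: dot(row, x), matrix))

# Illustrative 16 x 8 matrix and an all-ones input vector.
matrix = [[float(i + j) for j in range(8)] for i in range(16)]
x = [1.0] * 8

serial = matvec_serial(matrix, x)
parallel = matvec_parallel(matrix, x)
print(serial == parallel)
```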

This is a brief table showing the differences at a high level:

GPUs Are Optimized for Many Frameworks & Computing Domains

The general architecture of GPUs was well suited to the particular type of computing tasks at the heart of deep learning algorithms. Once this synergy had been fully exploited by academic researchers and demonstrated beyond any doubt, companies that produce GPUs, such as NVIDIA, invested a tremendous amount of R&D and human capital to develop higher-performing and more highly optimized GPUs for a variety of applications.

They also made sure that their application software and firmware stacks are constantly updated to integrate seamlessly with modern high-level programming frameworks, so that millions of application programmers around the world can take advantage of the power of GPUs with minimal effort and a gentle learning curve. The following picture from the NVIDIA website shows the ecosystem of various deep learning frameworks that the NVIDIA GPU products are being optimized for.

Additionally, depending on the power-performance trade-off, the GPU (and associated memory) architecture can be designed to target a host of computing domains, from desktop workstations used in academic labs to edge computers used in industrial IoT or self-driving cars. This graphic from the NVIDIA website helps to show this:

Companies like Exxact Corporation engineer AI workstations, systems and clusters to help computer vision researchers, and others working in AI, get the results they need – from single-user to dev team to large institutional facilities.

AI & ML In Autonomous Driving

Autonomous driving is a highly challenging and complex application area for cutting-edge ML systems. An autonomous vehicle can employ a dizzying array of sensors to collect information about road conditions, other cars, pedestrians, cyclists, signposts, exits, highway markers, shops and stores on the sidewalk, and many other variables. Many of these sensors are image-based (multiple cameras mounted at various places on the vehicle). Others produce data streams from LiDAR or other types of sensors.

The use case is also highly challenging because it includes not only object detection but also object classification, segmentation, motion detection, and more. On top of that, these systems are expected to perform this kind of image/vision processing in a fraction of a second and convey a high-probability decision to the higher-level supervisory control system, which is responsible for the ultimate driving task.

Furthermore, not one but several such CV systems/algorithms are often at play in any respectable autonomous driving system. The demand for parallel processing is high in those situations, and this puts heavy stress on the underlying computing machinery. If multiple neural networks run at the same time, they may share common system storage and compete with each other for a common pool of resources.

What’s more, there are now highly specialized and optimized System-on-Chip (SoC) platforms available for this application area. For example, the following is a description for NVIDIA DRIVE AGX:

“NVIDIA DRIVE™ AGX embedded supercomputing platforms process data from camera, radar, and lidar sensors to perceive the surrounding environment, localize the car to a map, and plan and execute a safe path forward. This AI platform supports autonomous driving, in-cabin functions and driver monitoring, as well as other safety features—all in a compact, energy-efficient package.”

AI & ML In Healthcare (Medical Imaging)

In the case of medical imaging, the performance of the computer vision system is judged against highly experienced radiologists and clinical professionals who understand the pathology behind an image. Moreover, in most cases, the task involves identifying rare diseases with very low prevalence rates. This makes the training data sparse (i.e., not enough training images can be found). Consequently, the deep learning (DL) architecture has to compensate by adding clever processing and architectural complexity, which in turn increases computational complexity.

MRI and other advanced medical imaging systems are being equipped with ML algorithms and they are increasingly forming the first line of checks for cancer detection. Often the number and quality of radiologists fall short in the face of overwhelming digitized data, and ML-based systems are a perfect choice for aiding their decision-making process.

A Nature article estimates that the average radiologist needs to interpret one image every 3–4 seconds in an 8-hour workday to meet workload demands. Imaging data is plentiful these days, and DL algorithms can be fed an ever-expanding dataset of medical images to find patterns and interpret the results as a highly trained radiologist would. An algorithm can be trained to sort through regular and abnormal results, identifying suspicious spots on the skin, lesions, tumors, and brain bleeds, for example. But sorting through millions of training examples and identifying them correctly needs the help of GPU-optimized software and hardware systems.
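To put the workload estimate above in perspective, the short calculation below converts "one image every 3–4 seconds in an 8-hour workday" into images per day. It uses only the figures quoted in the text; the variable and function names are illustrative.

```python
# Convert the quoted reading rate into images per workday.
SECONDS_PER_HOUR = 3600
workday_hours = 8
workday_seconds = workday_hours * SECONDS_PER_HOUR  # 28,800 seconds

def images_per_day(seconds_per_image):
    """Images read in one workday at a constant pace with no breaks."""
    return workday_seconds / seconds_per_image

low = images_per_day(4)    # slower end of the quoted range
high = images_per_day(3)   # faster end of the quoted range
print(f"{low:.0f} to {high:.0f} images per workday")
```

That works out to roughly 7,200 to 9,600 images per radiologist per day, assuming an uninterrupted pace.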

AI & ML In Fighting Disease (Medicine Discovery)

Global pandemics such as COVID-19 are mostly caused by viruses. At the fundamental structural level, a virus mainly consists of a single strand (or a few strands) of DNA/RNA. Determining the 3-D structure of the proteins encoded by that genetic material, i.e., the shape into which each sequence of amino acids folds, is key to developing certain classes of vaccines (the subunit and nucleic acid types).

This task is computationally infeasible (no matter how many hardware resources are thrown at it) if attempted with conventional protein-folding algorithms. Artificial intelligence can play a significant role in solving this challenge with techniques such as deep reinforcement learning (DRL) and Bayesian optimization. On that cue, DeepMind, the famous DL research unit of Google, introduced AlphaFold, a deep-learning-based system that predicts the 3-D structure of a protein from its amino acid sequence.

In early March 2020, the system was put to the test on COVID-19, and AI researchers at DeepMind released structural predictions of several under-studied proteins associated with SARS-CoV-2 to help the worldwide clinical and virology research community better understand the virus and its impact on human biology. In November 2020, a newer version of the system outperformed around 100 other teams in CASP (Critical Assessment of Structure Prediction), a biennial protein-structure prediction challenge. Biologists and the medical community worldwide called this progress epoch-making.

All these impressive breakthroughs depend on GPU-powered hardware. It is difficult to find a current estimate of how much computing power was used to train AlphaFold, but DeepMind's earlier system AlphaGo reportedly used hundreds of CPUs and GPUs in unison to beat Lee Sedol in the famous Go challenge.

AI & ML In Environmental & Climate Science

Climate change is one of the most profound existential crises faced by humanity in the 21st century. To understand the impact of such an epoch-changing global event, we need tons of scientific data, high-fidelity visualization capability, and robust predictive models.

Weather prediction and climate modeling are therefore at the forefront of humanity’s fight against climate change, but they are not easy enterprises. For all the advances in big data analytics and scientific simulation, Earth-scale problems of this kind remain simply intractable for present-day hardware and software stacks.

In the United States, the majority of weather prediction services are based on a comprehensive mesoscale model called Weather Research and Forecasting (WRF). The model serves a wide range of meteorological applications across scales from tens of meters to thousands of kilometers. Consequently, such a comprehensive model has to deal with a myriad of weather-related variables and their highly complicated inter-relationships. It also turns out to be impossible to describe these complex relationships with a set of unified, analytic equations. Instead, scientists try to approximate the equations using a method called parameterization in which they model the relationships at a scale greater than that of the actual phenomena.

Can the magical power of deep learning tackle this problem? Environmental and computational scientists from Argonne National Lab are collaborating to use deep neural networks (DNN) to replace the parameterizations of certain physical schemes in the WRF model, hoping to significantly reduce simulation time without compromising the fidelity and predictive power.

They are leveraging GPU-enabled high-performance computing (HPC) nodes to do this kind of compute-intensive research. This news article describes some details of the computing investments at the Argonne Leadership Computing Facility (ALCF):

“The ALCF has begun allocating time on ThetaGPU for approved requests. ThetaGPU is an extension of Theta and consists of NVIDIA DGX A100 nodes. Each DGX A100 node is equipped with eight NVIDIA A100 Tensor Core GPUs and two AMD Rome CPUs that provide 320 gigabytes (7680 GB aggregately) of GPU memory for training AI datasets, while also enabling GPU-specific and GPU-enhanced HPC applications for modeling and simulation”.
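The memory figures in the quote can be cross-checked with simple arithmetic. The sketch below uses only the three numbers quoted above (eight GPUs per node, 320 GB per node, 7680 GB in aggregate); the per-GPU memory and the node count are values implied by those numbers rather than stated in the quote, and the variable names are illustrative.

```python
# Figures taken from the quoted ALCF description.
gpus_per_node = 8
node_gpu_memory_gb = 320
total_gpu_memory_gb = 7680

# Values implied by the quoted figures (not stated in the quote itself).
memory_per_gpu_gb = node_gpu_memory_gb // gpus_per_node     # GB per GPU
implied_nodes = total_gpu_memory_gb // node_gpu_memory_gb   # number of nodes
implied_gpus = implied_nodes * gpus_per_node                # GPUs overall

print(memory_per_gpu_gb, implied_nodes, implied_gpus)
```

Under these figures, each GPU carries 40 GB of memory, and the aggregate corresponds to 24 nodes, or 192 GPUs in total.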

AI & ML In Smart Manufacturing

Movement of raw materials, goods, and parts is at the heart of any manufacturing system. After the revolution in computing and information technology, it was realized that such physical movement can only be optimally efficient when that movement is controlled in a precise manner, in conjunction with hundreds of other similar movements, supervised by an information-processing engine.

Therefore, innovative combinations of hardware and software have ushered the old industries into the era of smart manufacturing. As the cost and operational complexity of computing and storage decreased at an exponential pace (Moore’s law), the information content generated by workers, machines, controllers, factories, warehouses, and the logistic machinery exploded in size and complexity.

Now, innovative ideas in the field of AI and ML have come to the rescue of many manufacturing organizations, saving them from drowning in the deluge of data and helping them make sense of the exabytes of data that they have to process every day. Deep learning technologies are being used in multiple domains: design, quality control, machine/process optimization, supply chain, predictive and preventive maintenance, and more.

Given the volume and velocity of data generation and the processing needs that follow, most of these AI/ML systems use GPU-powered workstations and cloud computing resources. For instance, a business white paper describes the use of NVIDIA Tesla V100 GPUs for manufacturing.

Summary of Applications for AI & ML Using GPUs

More and more business segments and industries are adopting powerful AI/ML tools and platforms in their operations and R&D. In this article, we discussed a few of them and examined how the power and flexibility of GPU-based systems are enabling AI adoption. Going by the trend, we can confidently say that the market and choices for customized AI/ML hardware solutions (e.g., deep learning workstations) will continue to grow at an accelerated pace in the coming years.
