What You Should Know: Differences Between Computer Vision and Image Processing

An Introduction to Computer Vision and Image Processing

Many people are familiar with the terms Image Processing and Computer Vision but are not sure what differentiates them. Are they essentially the same thing? Are they independent of one another and used for different purposes? Or are they two parts of the same process? Unless somebody has worked in the field, they may be unable to answer these questions. Furthermore, with the rise of Deep Learning and its efficacy in image processing tasks, where does it fit in?

A definition of each is in order, but first it may help to look at what some people find confusing. Computer Vision is sometimes incorrectly assumed to be a subset of Image Processing, on the belief that image processing alone lets a computer interpret, understand, and make appropriate use of the images it has access to. In reality, Image Processing can be used either as one part of the Computer Vision process, or independently and for a different purpose.

What is Image Processing?

Image processing is a catch-all term for a variety of functions that can be performed on a single, still picture. While the input is a single frame, the output varies depending on the function or functions applied.

Most image processing functions produce a second, modified image. Any filter that alters a picture, for example, is a form of image processing. Whether it colorizes a black-and-white snapshot, blurs out a license plate to protect privacy, or renders bunny ears on a person’s head, each is a transformation from one image to another.

One commonly used tool for image processing is Adobe Photoshop. It is used for altering images so often that an edited digital photograph is commonly said to have been Photoshopped.

By contrast, Image Processing does not cover analyzing an image in order to, for example, generate an English sentence that describes it. That task falls within the realm of machine learning and is, in fact, part of Computer Vision.

Now that we have a definition for Image Processing, how does it relate to Computer Vision?

What is Computer Vision?

Computer Vision, broadly defined, is the ability of a computer to understand its surroundings through one or more cameras acting as digital eyes. This is not accomplished in a single, standalone step. Rather, it is a series of steps that begins with acquiring an image and continues with gaining understanding through image processing and analysis.

Human vision is a complex process, and emulating it has always been a challenging task for computers. Through traditional machine learning techniques, and more recently through advances in Deep Learning, computers are making noticeable progress in interpreting and reacting to what they “see”.

A significant prerequisite for meaningful Computer Vision, and one that distinguishes it from image processing, is the need for multiple images. While Image Processing innately works with a single digital picture, Computer Vision more appropriately operates on a stream of images with a known temporal relationship.

Sequences of Images

The temporal connection between images is important because it adds context that is often necessary to draw relevant and accurate conclusions. For example, consider a digital picture that contains a car. Analyzing this single image may yield significant details about the vehicle, such as its make, model, colour, and license plate, the presence of occupants, and perhaps cues such as lights or exhaust emissions that suggest the engine is running. It is rare, however, that a single image is enough to determine that the car is in motion.

Certainly, there are cases where motion can be inferred, such as a motion-blurred picture, or a snapshot taken at the exact moment one of the tires splashes through a puddle of water. Nevertheless, most single-frame images do not provide enough information to infer motion, let alone the direction or speed of travel. Without these details, the depth of understanding is drastically limited.

Rather than a single image, consider now a series of three pictures of the same car. Each is taken from the same vantage point and is timestamped, so the delay between any two captures can be calculated by subtracting their timestamps. In this scenario, it is much easier to determine motion over the relevant time period.
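
As a minimal sketch of that timestamp arithmetic, assume each capture has already been reduced to a timestamp in seconds and an estimated horizontal position in metres. The `Capture` type, its fields, and the sample numbers are hypothetical; a real system would first have to estimate position from pixels. The interval between frames and the average velocity then follow from simple subtraction:

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Capture:
    """One timestamped observation of the car (hypothetical fields)."""
    timestamp_s: float  # capture time, in seconds
    x_m: float          # estimated horizontal position, in metres

def intervals(captures: List[Capture]) -> List[float]:
    """Delay between consecutive captures: subtract the timestamps."""
    return [b.timestamp_s - a.timestamp_s for a, b in zip(captures, captures[1:])]

def velocities(captures: List[Capture]) -> List[float]:
    """Average signed velocity (m/s) between consecutive captures;
    positive means moving right, negative means moving left."""
    return [
        (b.x_m - a.x_m) / (b.timestamp_s - a.timestamp_s)
        for a, b in zip(captures, captures[1:])
    ]

frames = [Capture(0.0, 0.0), Capture(0.5, 6.0), Capture(1.0, 12.0)]
print(intervals(frames))   # [0.5, 0.5]
print(velocities(frames))  # [12.0, 12.0]
```

A single frame gives neither list; at least two timestamped frames are needed before either quantity exists.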

The car may be travelling left or right with respect to the camera, and this will be obvious from viewing the pictures in sequence. Similarly, if the car is moving towards or away from the camera, its image will become larger or smaller, respectively. For the purpose of this example, we will not consider cases where an object is moving yet its motion is undetectable because the capture interval and the movement together cause the object to appear in the same location in every snapshot.
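
Assuming some detector (not shown) has already produced a bounding box for the car in each time-ordered frame, this reasoning reduces to comparing box positions and sizes. The sketch below is hypothetical: `describe_motion`, its thresholds, and the box format are illustrative choices, image x-coordinates are assumed to grow to the right, and, like the text, it ignores the case where the object lands in the same spot in every snapshot.

```python
from typing import List, Tuple

# (x, y, width, height) in pixels, with x growing to the right (assumed).
Box = Tuple[float, float, float, float]

def describe_motion(boxes: List[Box], min_shift_px: float = 2.0,
                    min_scale: float = 1.05) -> Tuple[str, str]:
    """Compare the first and last boxes of a time-ordered sequence."""
    x0, _, w0, h0 = boxes[0]
    x1, _, w1, h1 = boxes[-1]
    # Lateral motion: shift of the box centre between first and last frame.
    dx = (x1 + w1 / 2) - (x0 + w0 / 2)
    if dx > min_shift_px:
        lateral = "right"
    elif dx < -min_shift_px:
        lateral = "left"
    else:
        lateral = "none"
    # Depth motion: a growing box suggests approach, a shrinking one recession.
    scale = (w1 * h1) / (w0 * h0)
    if scale > min_scale:
        depth = "towards camera"
    elif scale < 1 / min_scale:
        depth = "away from camera"
    else:
        depth = "none"
    return lateral, depth

boxes = [(100, 50, 40, 20), (130, 50, 50, 25), (160, 50, 60, 30)]
print(describe_motion(boxes))  # ('right', 'towards camera')
```

Reversing the same three boxes yields `('left', 'away from camera')`, which is exactly the information a single frame cannot provide.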

Deep Learning and Digital Images

With the rise of Deep Learning in recent years, digital imagery has become one of the domains where it is most widely adopted. Two well-known Deep Learning architectures are the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN). While an in-depth discussion of these is outside the scope of this article, it is worth mentioning that CNNs have been applied to a variety of imaging tasks, including face detection and recognition, medical image analysis, image recognition, and full-motion video analysis. One such system is AlexNet, a CNN that gained attention when it won the 2012 ImageNet Large Scale Visual Recognition Challenge.

Deep Learning for Image Processing

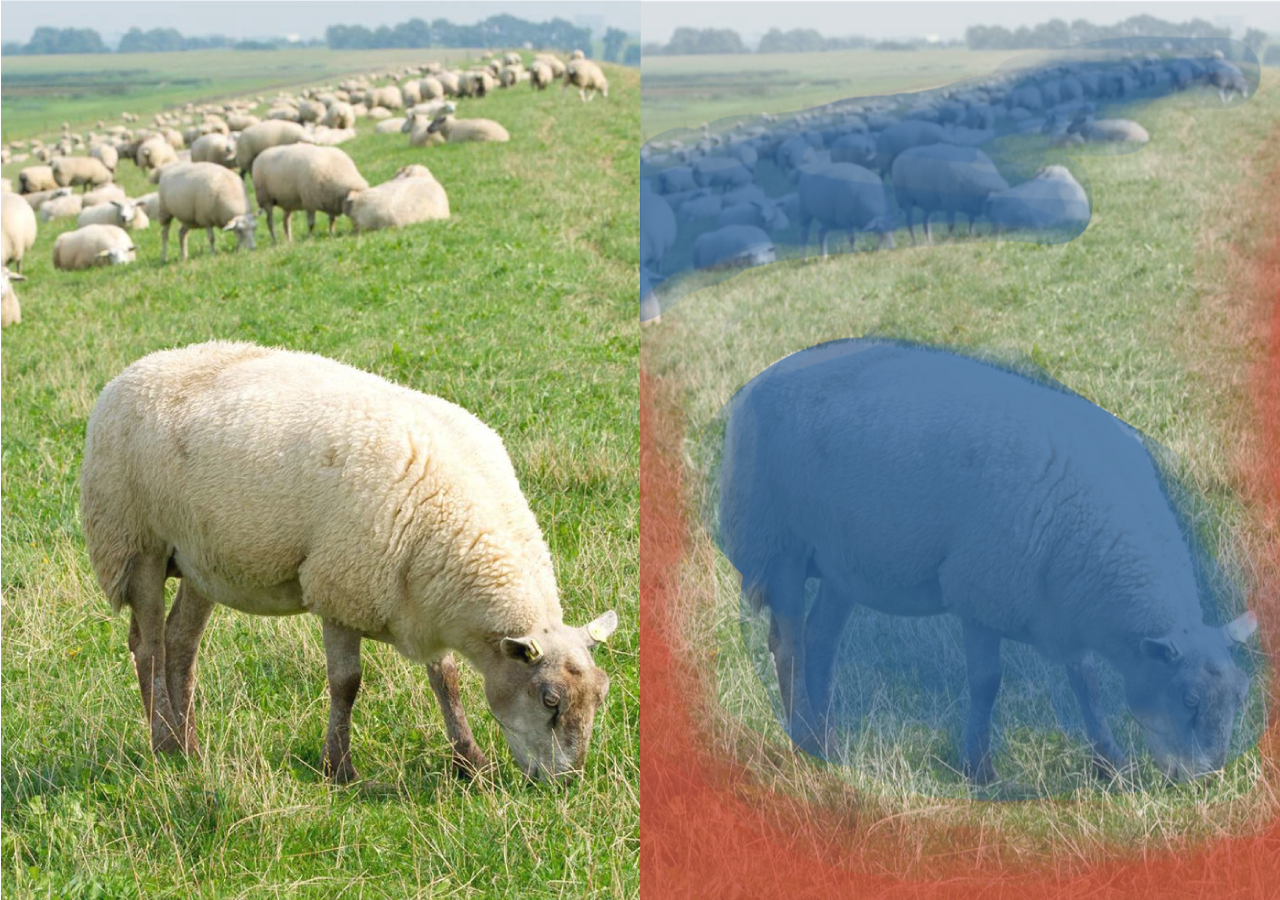

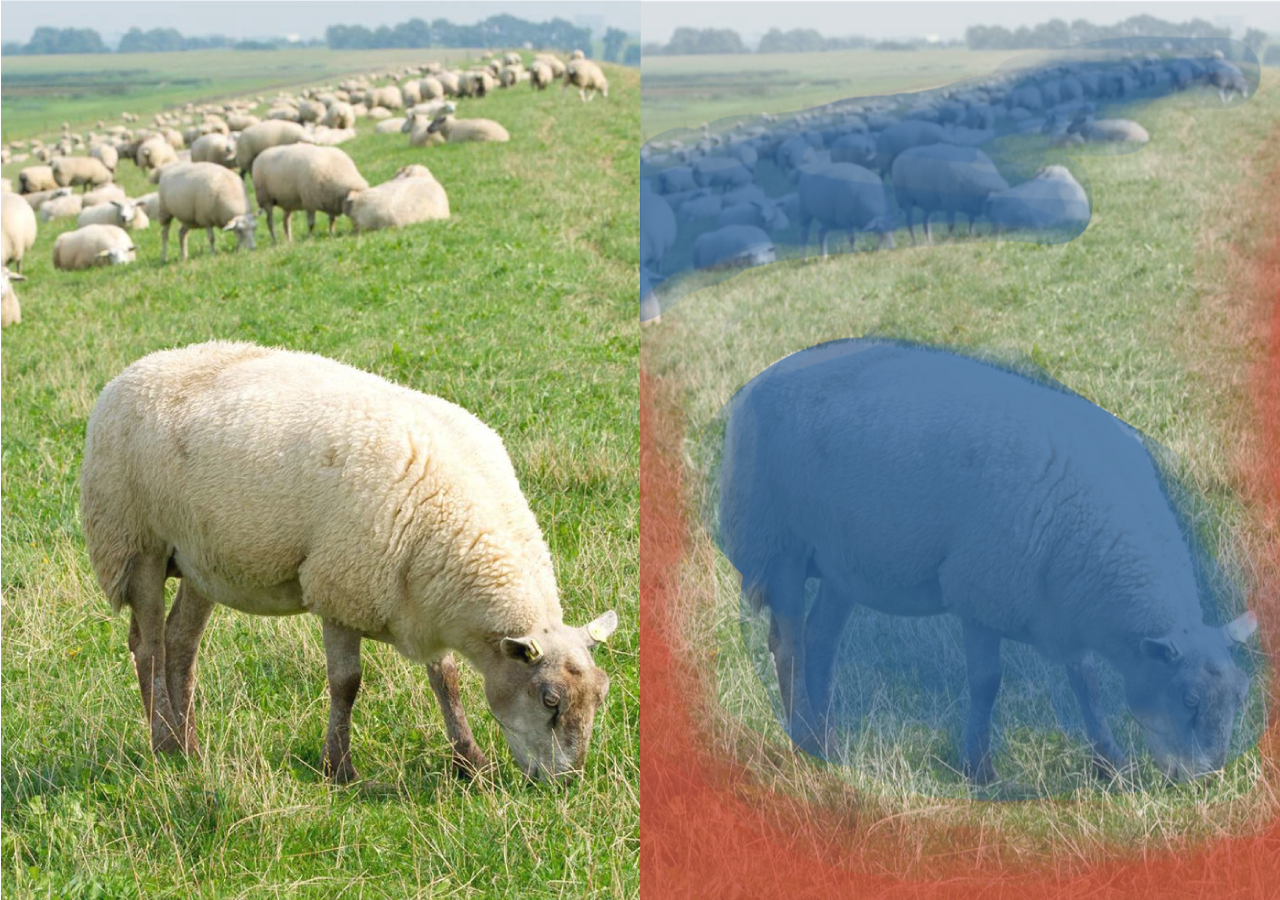

Deep Learning techniques have been successfully applied to Image Processing tasks, and a straightforward example of their use is edge detection. Detecting edges in a digital image is a non-trivial yet important part of Image Processing. Recall the earlier example of a single picture containing a car, and suppose the task is to apply a blur filter so that the license plate number is obscured.
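
Edge detection predates Deep Learning, and a classic hand-designed approach makes the idea concrete. The sketch below applies the Sobel operator, a standard pair of 3×3 kernels swapped in here for illustration rather than taken from the article, to a tiny grayscale image stored as nested lists. A CNN's convolutional layers perform the same sliding-window operation (strictly a cross-correlation, as in most deep learning libraries) but learn their kernel weights from data; the first-layer filters of trained CNNs often end up resembling edge detectors like these.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # responds to horizontal edges

def correlate3x3(img: Image, kernel: List[List[int]]) -> Image:
    """Slide a 3x3 kernel over the image (no padding, so output shrinks by 2)."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    acc += kernel[i][j] * img[r + i - 1][c + j - 1]
            row.append(acc)
        out.append(row)
    return out

def sobel_magnitude(img: Image) -> Image:
    """Gradient magnitude: large where intensity changes sharply (an edge)."""
    gx, gy = correlate3x3(img, SOBEL_X), correlate3x3(img, SOBEL_Y)
    return [[(a * a + b * b) ** 0.5 for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]

# A dark region on the left meeting a bright region on the right.
img = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
# Each printed row is [0.0, 36.0, 36.0, 0.0]: strong response only at the boundary.
for row in sobel_magnitude(img):
    print(row)
```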

Consider the steps required to perform this easily imagined job. The first must be locating the license plate. Identifying visual features, such as a license plate or a headlamp, is known as feature detection. Before features can be found, however, it is necessary to search for borders, or outlines, which are made up of edges. Edge detection is therefore a critical component of feature detection, which is in turn required before filters can be applied to a specific part of the image.
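
The step from edges to a located feature can be sketched, very naively, as taking the bounding box of all pixels whose edge strength exceeds a threshold. This is a hypothetical toy: `edge_bounding_box`, the threshold, and the sample edge map are illustrative, and it assumes the plate outline is the only strong edge structure in view. A practical plate detector would group edges into contours and check their shape, or use a trained detector.

```python
from typing import List, Optional, Tuple

def edge_bounding_box(edges: List[List[float]],
                      threshold: float) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right), inclusive, enclosing every pixel whose
    edge strength exceeds threshold, or None if no pixel does."""
    hits = [(r, c) for r, row in enumerate(edges)
            for c, v in enumerate(row) if v > threshold]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)

# Hypothetical edge-strength map: a rectangular outline on a flat background.
edges = [
    [0, 0, 0, 0, 0, 0],
    [0, 8, 9, 9, 8, 0],
    [0, 9, 0, 0, 9, 0],
    [0, 8, 9, 9, 8, 0],
    [0, 0, 0, 0, 0, 0],
]
print(edge_bounding_box(edges, threshold=5))  # (1, 1, 3, 4)
```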

Once the license plate has been located, it is merely a matter of obscuring the characters within its borders. The result is a second picture that is identical in every detail, except that the uniquely identifying information is no longer discernible.
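
Obscuring the plate can be sketched as a box blur applied only inside the located region, which is why every pixel outside it stays unchanged. The `box_blur_region` helper, its `radius` parameter, and the (top, left, bottom, right) box format are hypothetical; in practice a larger radius or a solid fill would be needed to make real characters unreadable.

```python
from typing import List, Tuple

Image = List[List[float]]

def box_blur_region(img: Image, box: Tuple[int, int, int, int],
                    radius: int = 1) -> Image:
    """Return a new image in which only pixels inside box (top, left, bottom,
    right, inclusive) are replaced by the mean of their neighbourhood."""
    top, left, bottom, right = box
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # copy: pixels outside the box stay identical
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            # Average over the (2*radius+1)^2 window, clipped at the image border.
            # Reading from img (not out) keeps the result independent of visit order.
            vals = [
                img[rr][cc]
                for rr in range(max(0, r - radius), min(h, r + radius + 1))
                for cc in range(max(0, c - radius), min(w, c + radius + 1))
            ]
            out[r][c] = sum(vals) / len(vals)
    return out

img = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
print(box_blur_region(img, (1, 1, 1, 1)))  # [[0, 0, 0], [0, 1.0, 0], [0, 0, 0]]
```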

Edge detection, and the more complex task of feature identification, are readily handled by CNNs in Deep Learning systems. By identifying features, CNNs can classify photos and make other types of predictions. This raises an important question: can CNNs also be applied to Computer Vision tasks?
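
The path from features to a classification can be sketched with the basic CNN building blocks: convolution, a ReLU non-linearity, global max pooling, and a linear classifier followed by softmax. This pure-Python toy is only an illustration of that structure. The kernels, class names, and class weights are hypothetical hand-set values; a real CNN stacks many such layers and learns all its weights from labelled data.

```python
import math
from typing import Dict, List

Image = List[List[float]]

def conv2d_valid(img: Image, kernel: List[List[float]]) -> Image:
    """'Valid' 2-D cross-correlation, the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [sum(kernel[i][j] * img[r + i][c + j] for i in range(kh) for j in range(kw))
         for c in range(len(img[0]) - kw + 1)]
        for r in range(len(img) - kh + 1)
    ]

def relu(fmap: Image) -> Image:
    return [[max(0.0, v) for v in row] for row in fmap]

def global_max_pool(fmap: Image) -> float:
    return max(max(row) for row in fmap)

def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

# Hypothetical hand-set parameters, standing in for learned ones.
KERNELS = {
    "vertical_edge": [[-1, 1], [-1, 1]],
    "horizontal_edge": [[-1, -1], [1, 1]],
}
CLASS_WEIGHTS = {
    "vertical_stripe": {"vertical_edge": 1.0, "horizontal_edge": -1.0},
    "horizontal_stripe": {"vertical_edge": -1.0, "horizontal_edge": 1.0},
}

def classify(img: Image) -> Dict[str, float]:
    # Feature extraction: convolution -> ReLU -> global max pooling per kernel.
    features = {name: global_max_pool(relu(conv2d_valid(img, k)))
                for name, k in KERNELS.items()}
    # Classification: linear scores per class, turned into probabilities.
    scores = {cls: sum(w[f] * features[f] for f in features)
              for cls, w in CLASS_WEIGHTS.items()}
    return softmax(scores)

img = [[0, 1, 0, 0] for _ in range(4)]  # a bright vertical stripe
probs = classify(img)
print(max(probs, key=probs.get))  # vertical_stripe
```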

Deep Learning for Computer Vision

Without doubt, feature identification and image classification are important tools for understanding the contents of a photograph, which makes the CNN a valuable tool for Computer Vision systems. But what about context? Is the car getting closer to you, or is it moving farther away? Without considering a series of pictures and their temporal relationship, there is no way to know with certainty. Computer Vision requires more insight into the events unfolding over time.

The second Deep Learning architecture mentioned above is the RNN. Whereas the CNN is good at extracting spatial features from images, the RNN is good at exploiting temporal information to determine context. An RNN contains a feedback loop that essentially acts as an internal memory. A widely used RNN variant, Long Short-Term Memory (LSTM), adds gated memory cells that allow it to discover many types of relationships, including those that only become clear when context from much earlier in a sequence is considered. These systems are frequently used to predict the next word in an autocomplete task, where the correct choice depends on something that occurred earlier in the sentence.
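
The feedback loop and gated memory can be sketched with a scalar LSTM cell that follows the standard gate equations: a forget gate, an input gate, an output gate, and a candidate value. The parameter values below are hypothetical and hand-set, not trained, and real LSTMs use vectors and weight matrices rather than single numbers. They are chosen so that a pulse at the first step is still stored in the cell state three steps later, while an all-zero sequence leaves the memory empty.

```python
import math
from typing import Dict, List, Tuple

Gate = Tuple[float, float, float]  # (input weight, recurrent weight, bias)

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x: float, h: float, c: float,
              params: Dict[str, Gate]) -> Tuple[float, float]:
    def pre(name: str) -> float:
        w, u, b = params[name]
        return w * x + u * h + b
    f = sigmoid(pre("forget"))     # how much of the old memory to keep
    i = sigmoid(pre("input"))      # how much new information to write
    o = sigmoid(pre("output"))     # how much of the memory to expose
    g = math.tanh(pre("candidate"))
    c_new = f * c + i * g          # updated memory cell
    h_new = o * math.tanh(c_new)   # hidden state, fed back at the next step
    return h_new, c_new

def run_lstm(xs: List[float], params: Dict[str, Gate]) -> Tuple[List[float], float]:
    h = c = 0.0
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)  # the feedback loop: h and c carry over
        outputs.append(h)
    return outputs, c

PARAMS = {
    "forget": (0.0, 0.0, 5.0),      # biased towards keeping the stored value
    "input": (10.0, 0.0, -5.0),     # opens only when the input is large
    "output": (0.0, 0.0, 5.0),
    "candidate": (1.0, 0.0, 0.0),
}
_, c_pulse = run_lstm([1.0, 0.0, 0.0, 0.0], PARAMS)
_, c_quiet = run_lstm([0.0, 0.0, 0.0, 0.0], PARAMS)
print(round(c_pulse, 2), c_quiet)  # 0.74 0.0
```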

For a single digital image, one application of RNNs, typically paired with a CNN that encodes the image, is image captioning: automatically generating a caption based on what is in the picture, such as “Vehicle is a car” or “Driver’s door is open”. This level of understanding is clearly of great benefit in Computer Vision. Moreover, the power of the LSTM can be used across a series of images.

Given a set of images, an RNN can consider both the contents of the pictures and the temporal relationship between them, as described by their accompanying timestamps. Suppose each picture has an accurate description, and these descriptions are placed in order. The result is a paragraph describing what happened over the period captured by the pictures, which is a much deeper description, or understanding, of events than any single caption provides.
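
The ordering step alone can be sketched as sorting per-frame captions by timestamp and joining them into a paragraph. This is deliberately not an RNN and does not model anything: it assumes the captions already exist, and the `narrate` helper, timestamps, and caption texts are hypothetical. It only shows how timestamps turn separate descriptions into an ordered account of events.

```python
from datetime import datetime
from typing import List, Tuple

def narrate(captions: List[Tuple[datetime, str]]) -> str:
    """Sort (timestamp, caption) pairs into capture order and join them,
    prefixing each with the seconds elapsed since the first frame."""
    ordered = sorted(captions, key=lambda pair: pair[0])
    start = ordered[0][0]
    sentences = [
        f"At +{(ts - start).total_seconds():g}s: {text.rstrip('.')}."
        for ts, text in ordered
    ]
    return " ".join(sentences)

# Hypothetical captions, deliberately listed out of order.
frames = [
    (datetime(2024, 1, 1, 12, 0, 2), "Car is closer to the camera"),
    (datetime(2024, 1, 1, 12, 0, 0), "Vehicle is a car"),
    (datetime(2024, 1, 1, 12, 0, 4), "Driver's door is open"),
]
print(narrate(frames))
```

The printed paragraph reads "At +0s: Vehicle is a car. At +2s: Car is closer to the camera. At +4s: Driver's door is open." In a learned system, the RNN would produce the descriptions and their connections itself rather than concatenating fixed strings.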

In practice, more complex tasks often use hybrid systems that combine CNNs and RNNs. One such hybrid, DanQ, was created with the DeepSEA system as its foundation; both models analyze DNA sequences rather than images. DeepSEA is a CNN alone and does not include an RNN. Testing has shown that the hybrid system outperforms its predecessor, because the RNN only has to consider the more abstract features already extracted by the CNN, which makes long-term relationships easier to discover. The details, however, are implementation dependent and fall outside the scope of this discussion.
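
The CNN-then-RNN data flow can be sketched on a one-dimensional signal. A convolution with ReLU detects a local pattern, max pooling shortens the sequence, and a recurrence then runs over the shorter, more abstract sequence. This is a hypothetical illustration only. DanQ itself applies learned convolutions to DNA sequences and uses a bidirectional LSTM, whereas this sketch uses one hand-set kernel and a plain tanh recurrence.

```python
import math
from typing import List

def conv1d_relu(xs: List[float], kernel: List[float]) -> List[float]:
    """Valid 1-D cross-correlation followed by ReLU."""
    k = len(kernel)
    return [max(0.0, sum(w * xs[t + j] for j, w in enumerate(kernel)))
            for t in range(len(xs) - k + 1)]

def max_pool1d(xs: List[float], size: int) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[t:t + size]) for t in range(0, len(xs) - size + 1, size)]

def simple_rnn(xs: List[float], w_x: float, w_h: float) -> float:
    """Elman-style recurrence h_t = tanh(w_x * x_t + w_h * h_{t-1}); returns the final h."""
    h = 0.0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
    return h

def hybrid(xs: List[float]) -> float:
    # Hypothetical hand-set parameters, for illustration only.
    features = conv1d_relu(xs, kernel=[-1.0, 2.0, -1.0])  # responds to isolated peaks
    pooled = max_pool1d(features, size=2)                 # shorter, more abstract sequence
    return simple_rnn(pooled, w_x=1.0, w_h=0.5)

signal = [0, 0, 1, 0, 0, 0, 0, 1, 0, 0]
pooled = max_pool1d(conv1d_relu(signal, [-1.0, 2.0, -1.0]), 2)
print(len(signal), len(pooled))  # 10 4
print(hybrid(signal))
```

The recurrence here runs over 4 steps instead of 10, which is the intuition behind letting the RNN work on features the CNN has already condensed.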

Conclusion

Image Processing and Computer Vision are distinct yet related fields within the domain of digital imagery. Image Processing concerns the modification of images using operations such as filters, whereas Computer Vision systems aim to understand what is happening over a period of time captured by an electronic eye. Image Processing, while it has its own applications, is also an important part of a Computer Vision system.

Deep Learning systems have been successfully applied to both Image Processing and Computer Vision tasks, using a variety of architectures and hybrid implementations. With advances in Deep Learning algorithms and ever-increasing computing power, Computer Vision systems are likely to keep improving. In turn, applications ranging from intelligent cameras to robots should continue to improve, bringing Computer Vision out of the laboratory and into the mainstream.
