Introduction

NVIDIA GTC 2025 kicks off with CEO Jensen Huang presenting his annual keynote on the advancements NVIDIA is making in AI and accelerated computing. In this recap, we go over the key points Huang made during his two-hour presentation.

Evolution and Inflection Point of AI

We start with the evolution of AI over the past decade. Perception AI is all about parsing and evaluating data, as in speech recognition, data optimization, and image classification. For the past 5 years, the focus has been generative AI, where a model translates from one modality to another: text to image, text to video, and even amino acid sequence to protein. Where perception AI reads and retrieves data, generative AI generates answers.

The most recent advancement is Agentic AI: AI that can perceive, reason, plan, and execute. An agentic system is given generative and perception AI tools for parsing data, retrieving the necessary information, and generating results in service of an overarching goal. The next step carries that capability into the physical world with physical AI, or robotics: AI that interacts with the physics of the world.
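As a rough illustration of the perceive, reason, plan, and execute cycle described above, the toy loop below wires plain Python functions together as stand-ins for perception and generative tools. Every name in it (`perceive`, `plan`, `execute`, `run_agent`, and the `TOOLS` table) is hypothetical and not part of any NVIDIA product; a real agent would call models where this sketch uses string handling.

```python
from typing import Callable, Dict, List

# Hypothetical tools: stand-ins for the perception and generative models
# an agent would be given. None of these are real NVIDIA APIs.
TOOLS: Dict[str, Callable[[str], str]] = {
    "retrieve": lambda query: f"notes about {query}",
    "generate": lambda context: f"answer based on {context}",
}


def perceive(request: str) -> str:
    """Parse the raw request into a normalized goal."""
    return request.strip().lower()


def plan(goal: str) -> List[str]:
    """Choose an ordered list of tool names (fixed here; a real agent would reason)."""
    return ["retrieve", "generate"]


def execute(goal: str, steps: List[str]) -> str:
    """Run each planned tool, feeding every result into the next step."""
    result = goal
    for step in steps:
        result = TOOLS[step](result)
    return result


def run_agent(request: str) -> str:
    goal = perceive(request)
    return execute(goal, plan(goal))


print(run_agent("  GPU Roadmap "))
```

The point of the sketch is the shape of the loop: the plan is just data (a list of tool names), so swapping in a different planner or adding tools does not change `execute`.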

These new ways of processing data put us on the cusp of an AI revolution, and that revolution starts with how we design our data centers and computing infrastructure.

NVIDIA CUDA-X Ecosystem

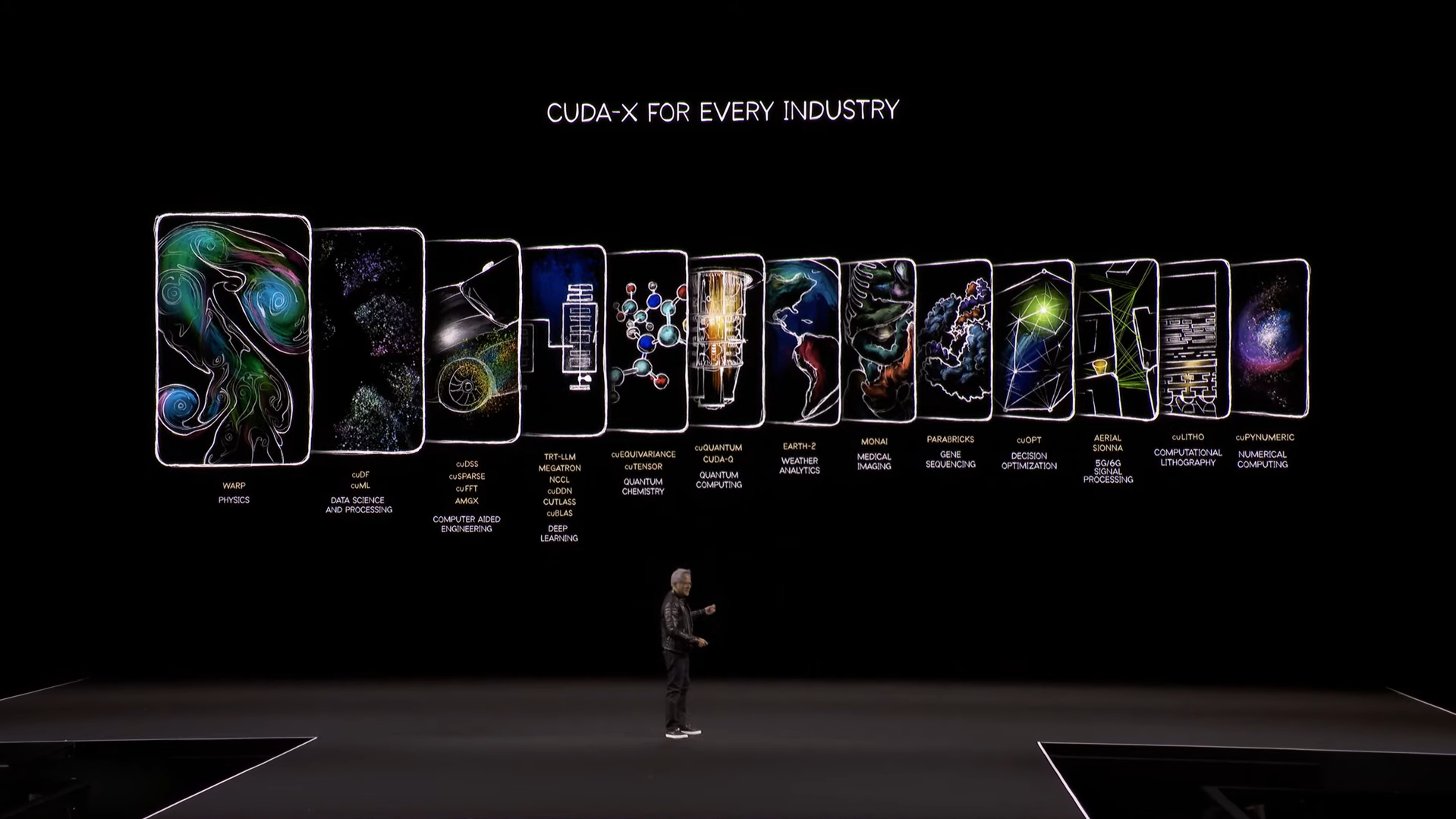

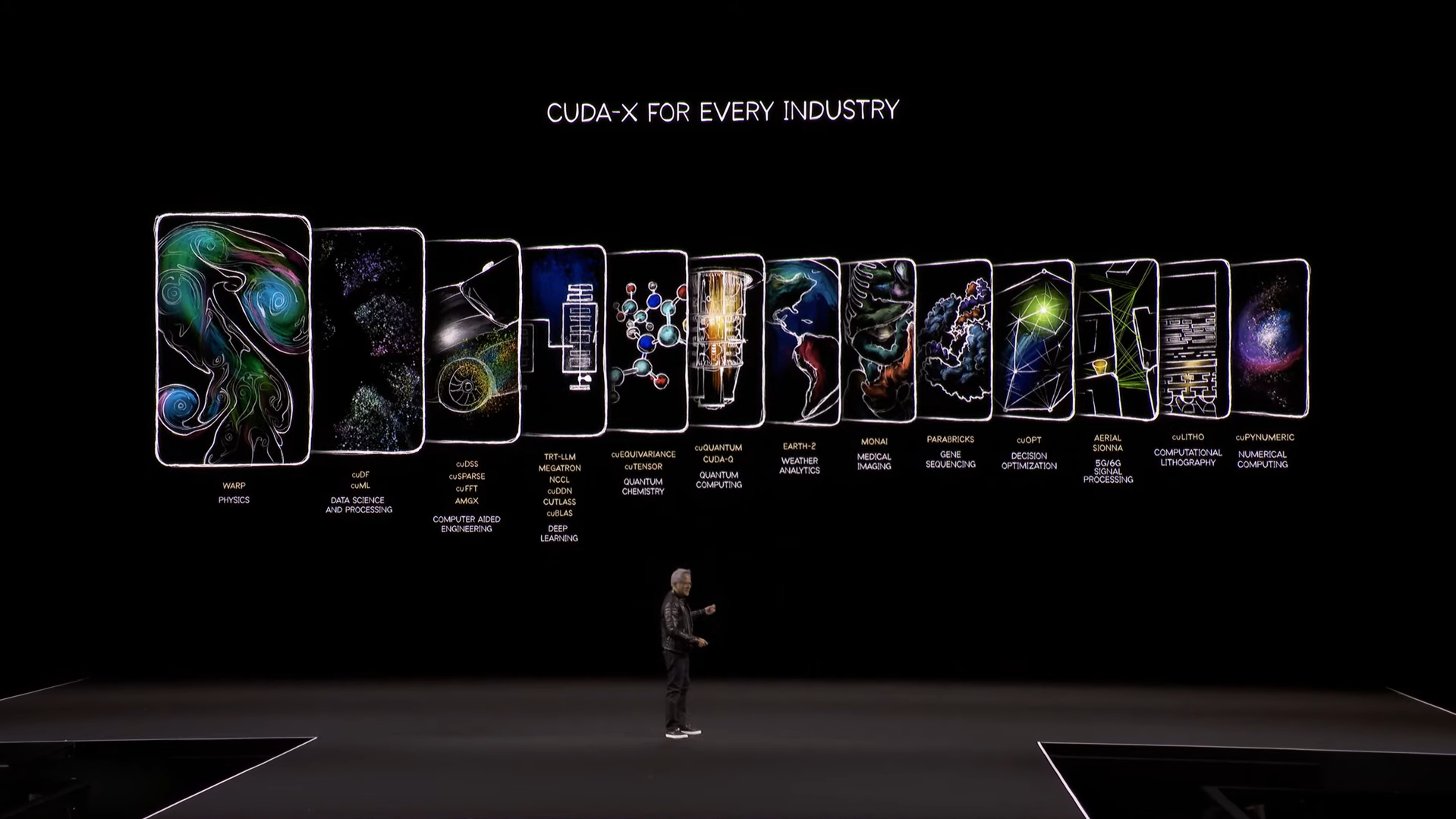

Apart from accelerating AI, NVIDIA also highlights its extensive GPU-accelerated libraries and microservices under CUDA-X. Huang explains that a wide variety of CUDA-X libraries accelerate HPC applications spanning CAD, CAE, physics, biology, lithography, weather, gene sequencing, quantum computing, and even simple numerical computing. NVIDIA cuOpt, its decision optimization library, will be made open source.

NVIDIA CUDA has made accelerated computing possible, speeding up calculations and bringing workloads that were once impractically time-consuming within reach.

Autonomous Cars & NVIDIA Halos

NVIDIA has been in the autonomous car industry providing the three computers that power autonomous vehicles: one for AI training, one for digital twin simulation, and the in-vehicle computer, along with the software stack that sits on top of it. NVIDIA is partnering with GM to deploy GM's autonomous vehicle fleet.

Jensen Huang expresses his pride in NVIDIA's autonomous vehicle safety system, named NVIDIA Halos. The safety system is built from the ground up, from processors to OS to software stacks and algorithms, with explainability and transparency ingrained in the code.

Omniverse and Cosmos, with synthetic data and scenario generation, help train the autonomous vehicle model to perform at its best.

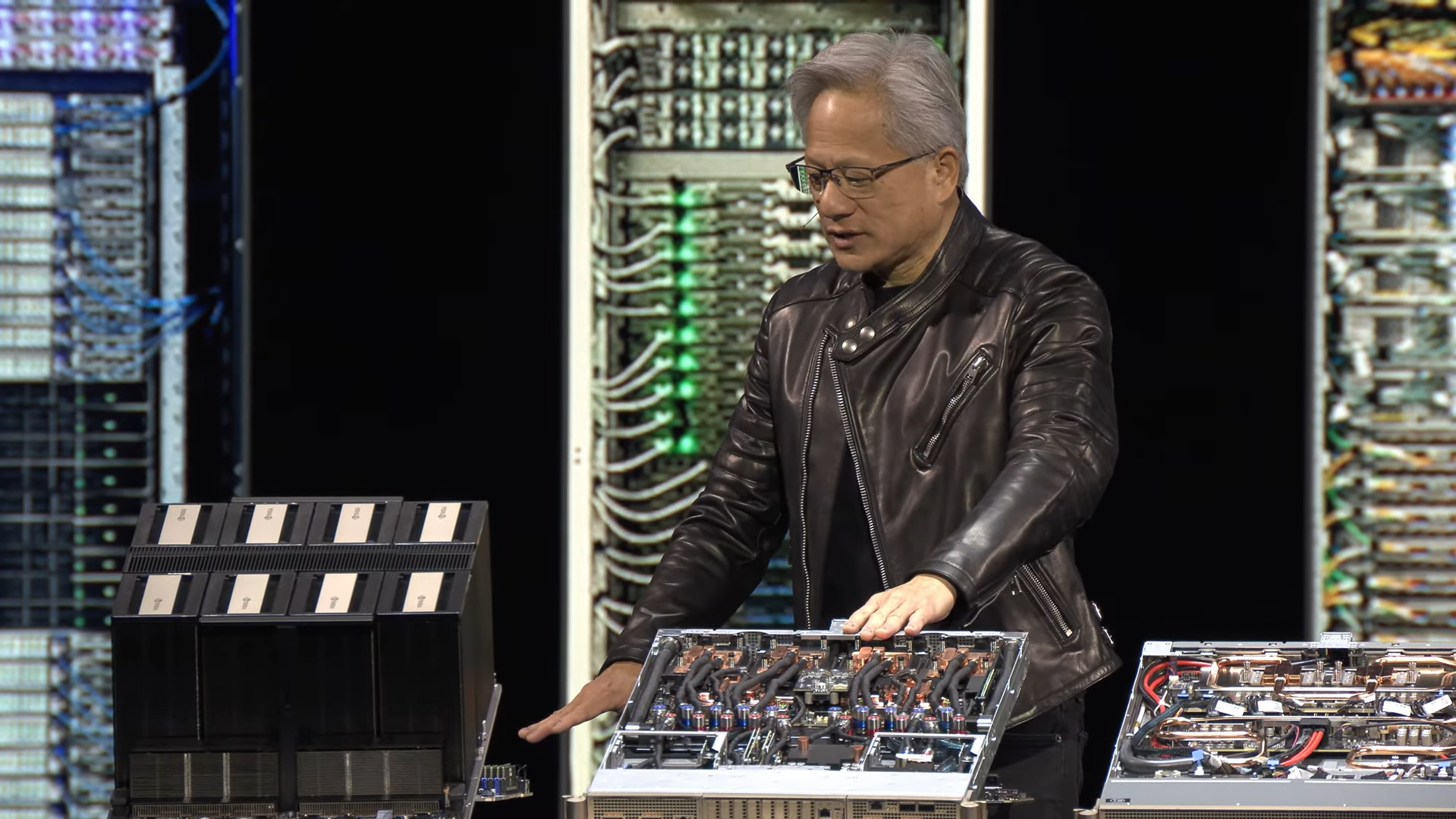

Explaining NVIDIA’s Data Center Hardware Lineup

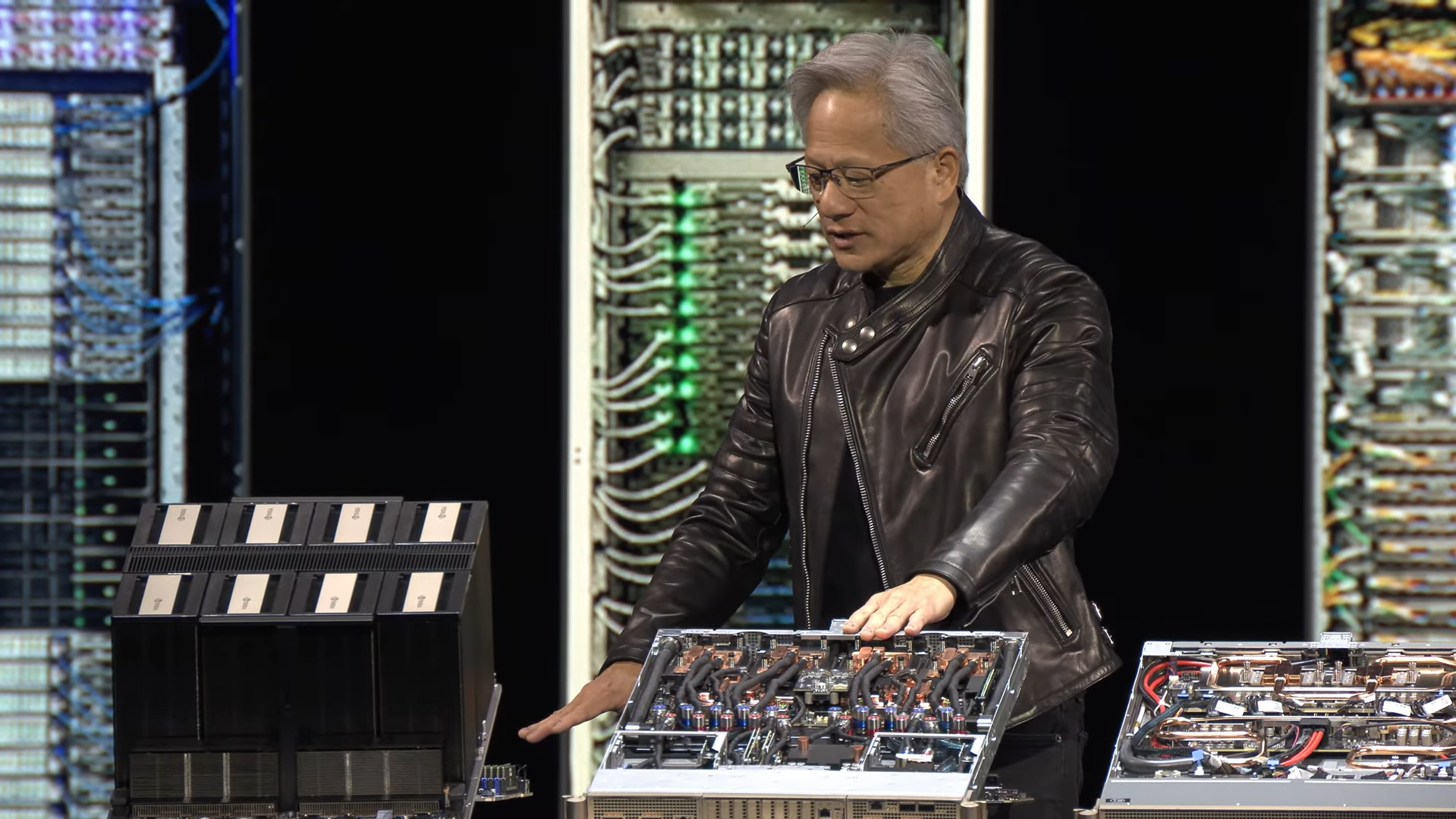

We get to the portion of the keynote where Jensen talks about hardware! We get to see the differences between NVIDIA HGX and Grace Blackwell NVL. The transition from integrated NVLink to disaggregated NVLink switches (seen on the far right), together with liquid cooling, enables even higher-density computing per rack.

This increased capability is essential for Agentic AI, or AI that reasons, because of the larger number of tokens it takes to generate an accurate answer to a question or prompt. To further accelerate inference at scale, Huang announced NVIDIA Dynamo, open-source software for accelerating and scaling AI reasoning models in AI factories. “It is essentially the operating system of an AI factory,” Huang said.

We then get into the big product announcements for the data center and a product roadmap for 2026 and beyond.

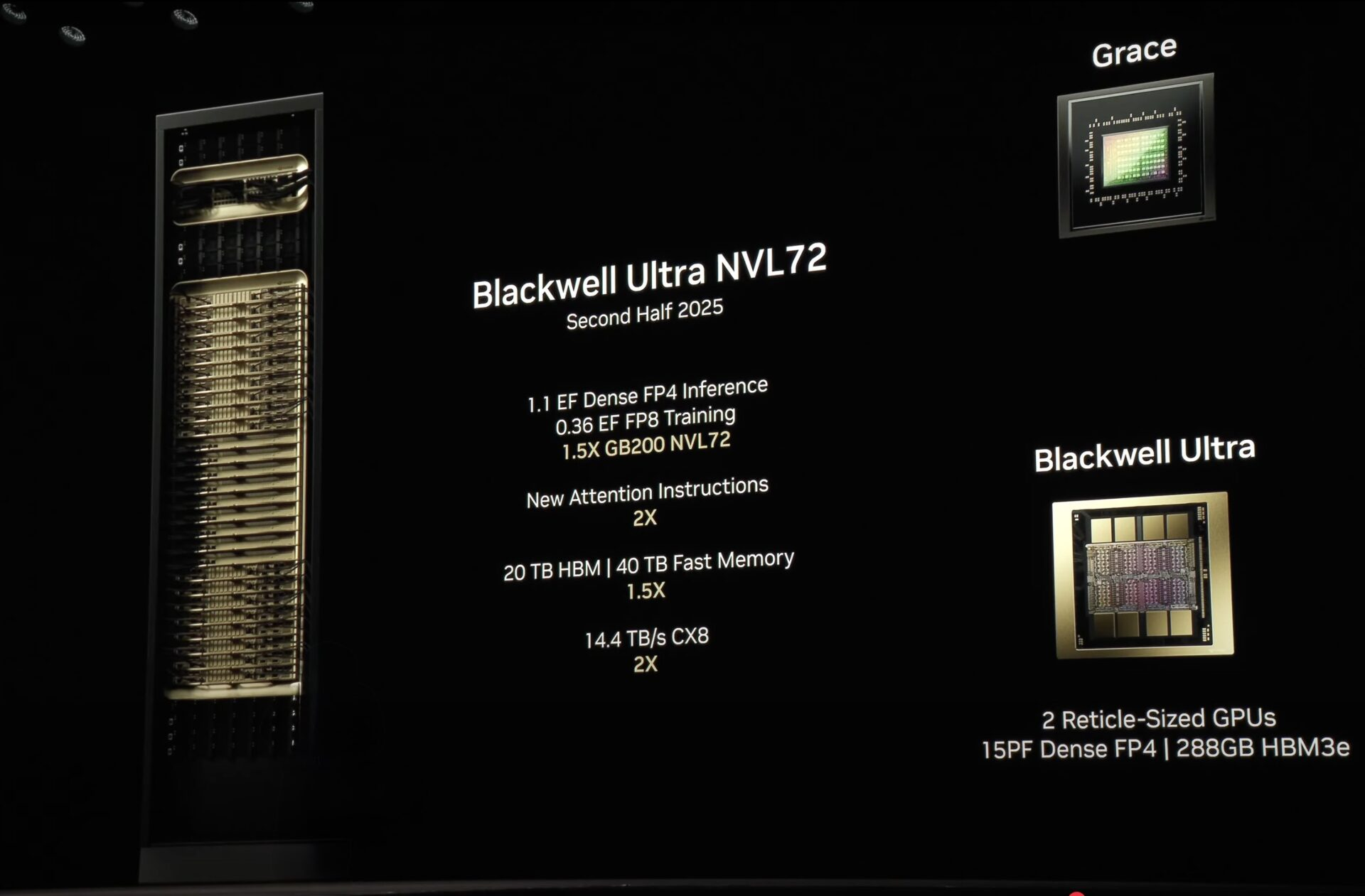

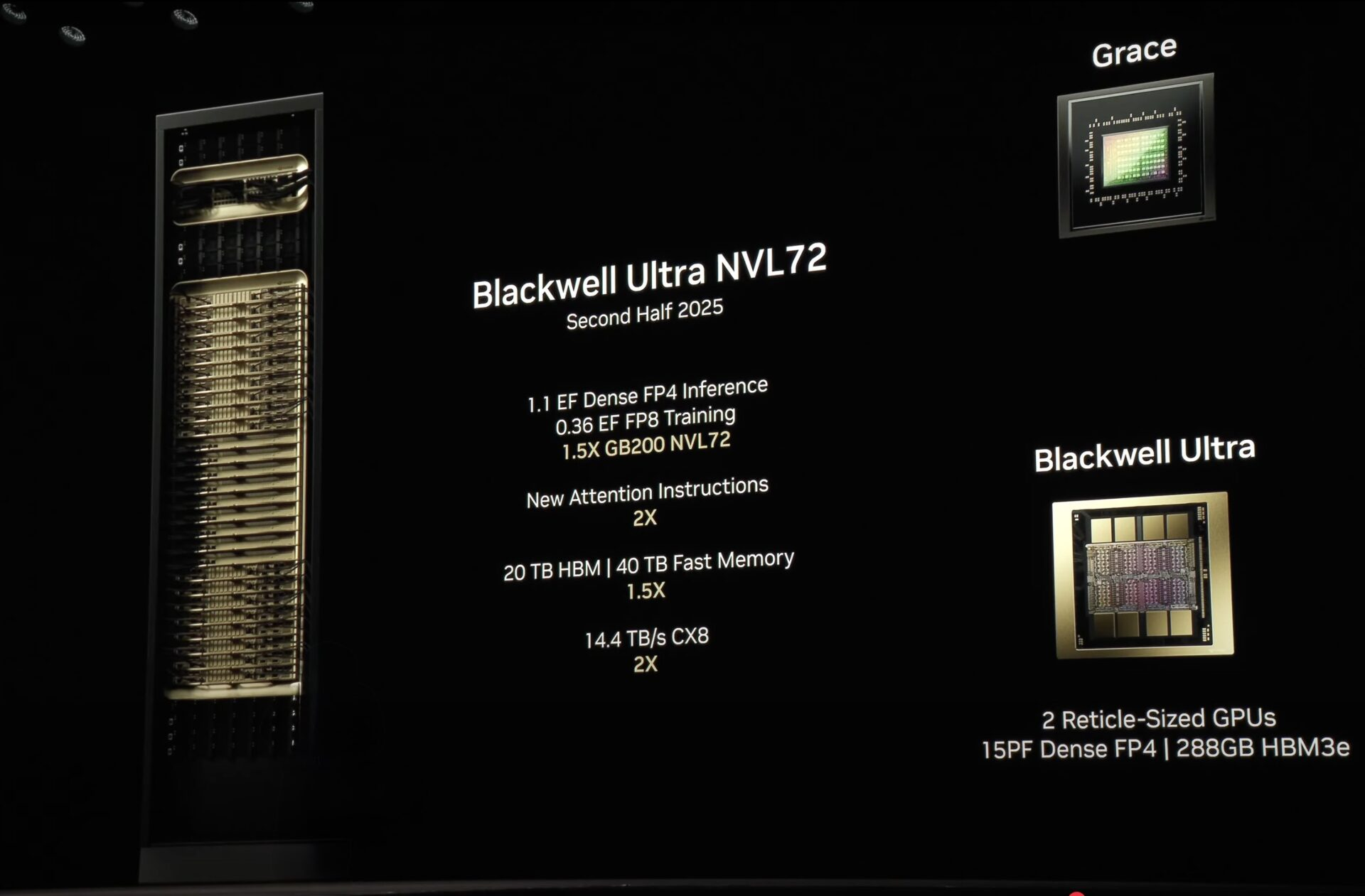

NVIDIA Blackwell Ultra NVL72 (2025 H2)

Available in 2025 H2, Blackwell Ultra NVL72, also called the NVIDIA GB300 NVL72, will deliver 1.5x the performance of the GB200 NVL72. Huang also remarked that the naming will change with the next-generation Vera Rubin: the number after NVL will count the total GPU dies rather than the number of GPU chips.

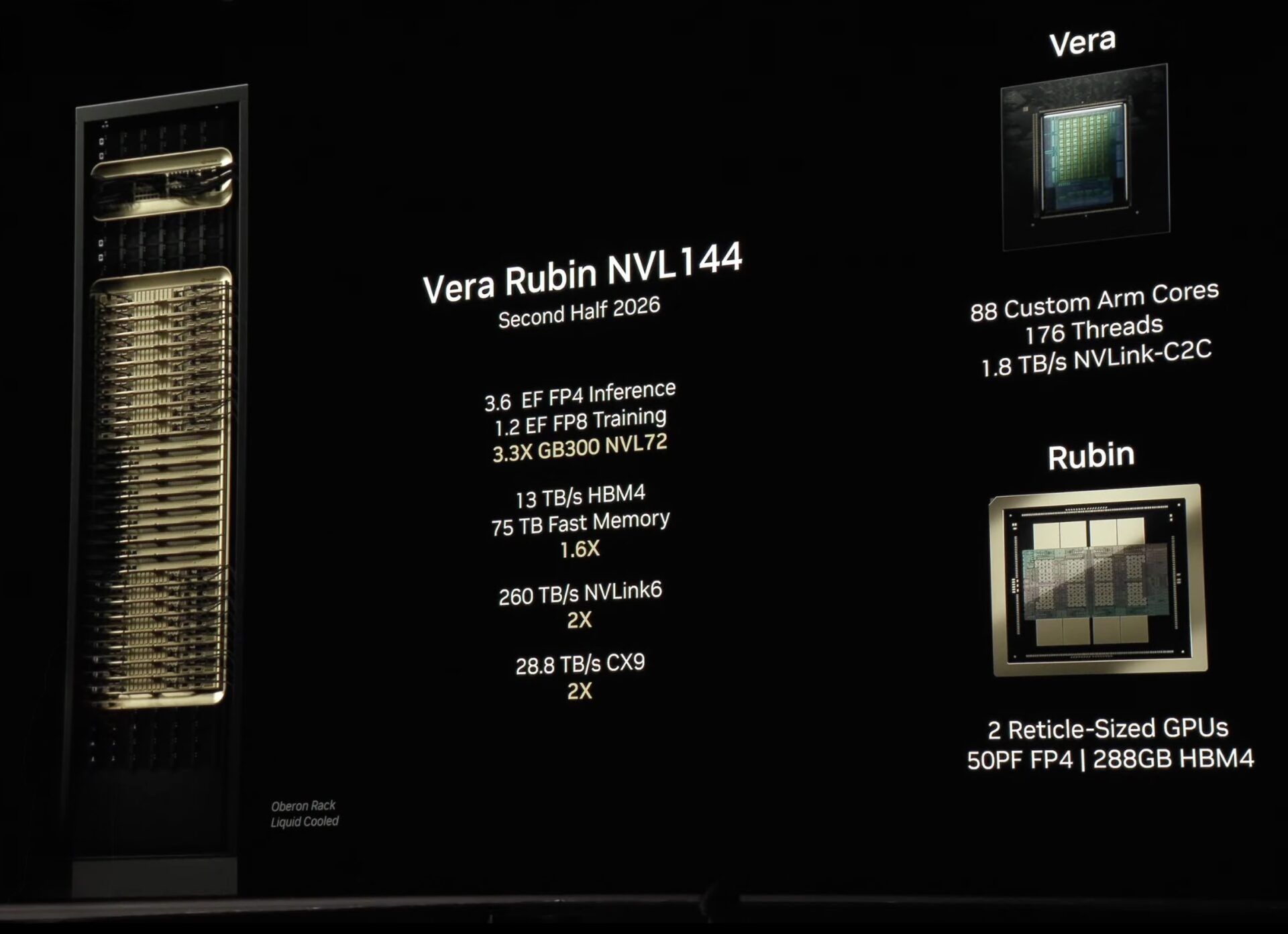

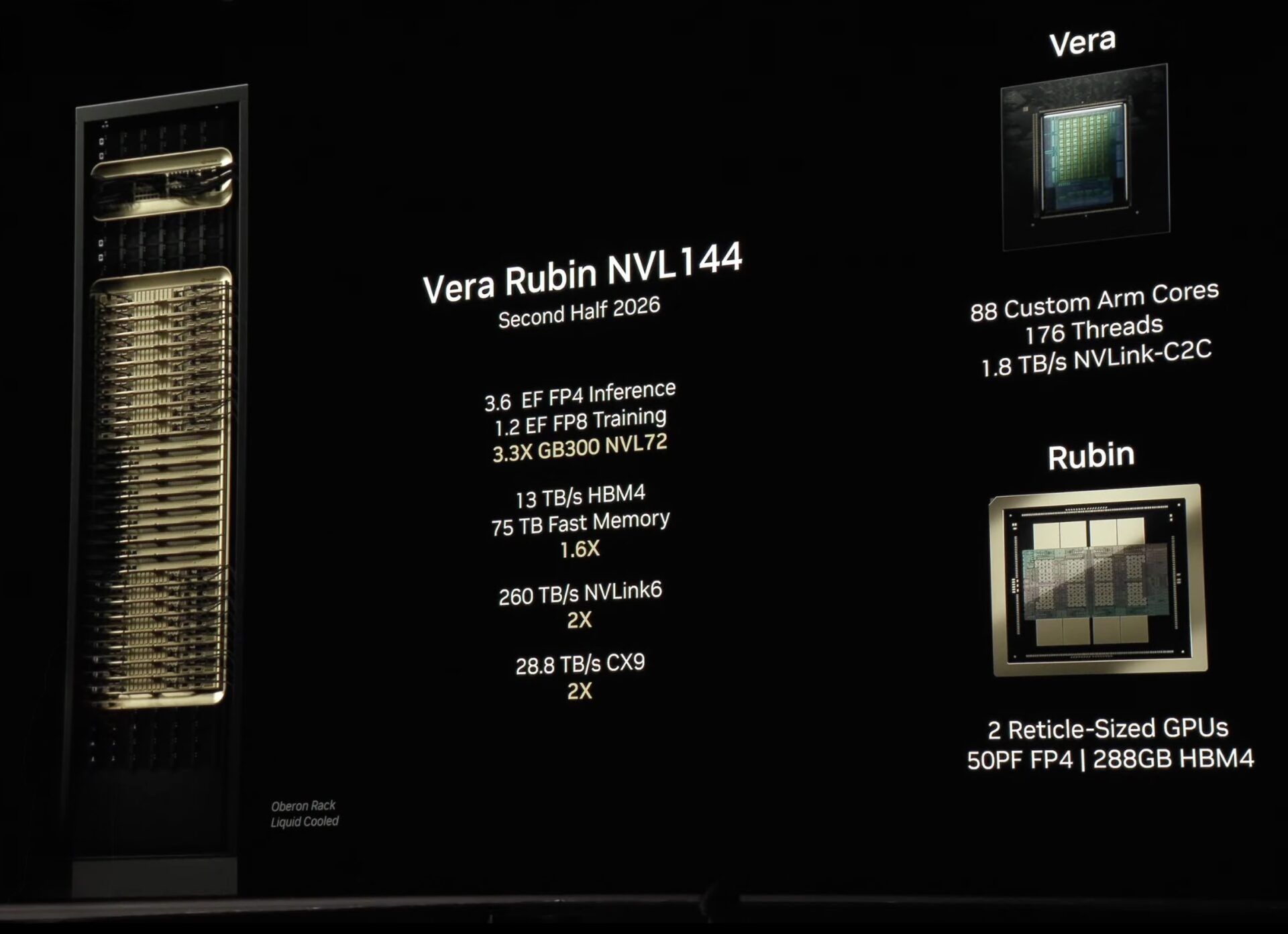

NVIDIA Vera Rubin NVL144 (2026 H2)

Vera Rubin is planned for 2026 H2 and is expected to deliver approximately 3.3x the performance of GB300 NVL72 (Blackwell Ultra). The NVL144 in the name refers to the 144 Rubin GPU dies in the system. Vera Rubin is accompanied by new generations of ConnectX-9 and NVLink 6, doubling the bandwidth of the previous generations. The Rubin GPUs will also feature HBM4 memory, providing a 1.6x increase in memory bandwidth.
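The naming change is easier to see as arithmetic. The sketch below assumes 72 GPU packages per rack and two dies per package for both Blackwell Ultra and Vera Rubin; neither figure is stated in this recap, so treat them as labeled assumptions. `RackSystem` and `nvl_suffix` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSystem:
    name: str
    gpu_packages: int      # assumption: GPU packages per rack, not given in the recap
    dies_per_package: int  # assumption: GPU dies inside each package

    @property
    def gpu_dies(self) -> int:
        return self.gpu_packages * self.dies_per_package


def nvl_suffix(system: RackSystem, count_dies: bool) -> int:
    """Number after "NVL": packages under the old naming, dies under the new one."""
    return system.gpu_dies if count_dies else system.gpu_packages


blackwell_ultra = RackSystem("GB300", gpu_packages=72, dies_per_package=2)
vera_rubin = RackSystem("Vera Rubin", gpu_packages=72, dies_per_package=2)

# Old convention counts packages; new convention counts dies.
print(f"{blackwell_ultra.name} NVL{nvl_suffix(blackwell_ultra, count_dies=False)}")
print(f"{vera_rubin.name} NVL{nvl_suffix(vera_rubin, count_dies=True)}")
```

Under these assumptions the jump from NVL72 to NVL144 reflects the new counting rule rather than a doubling of GPU packages in the rack.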

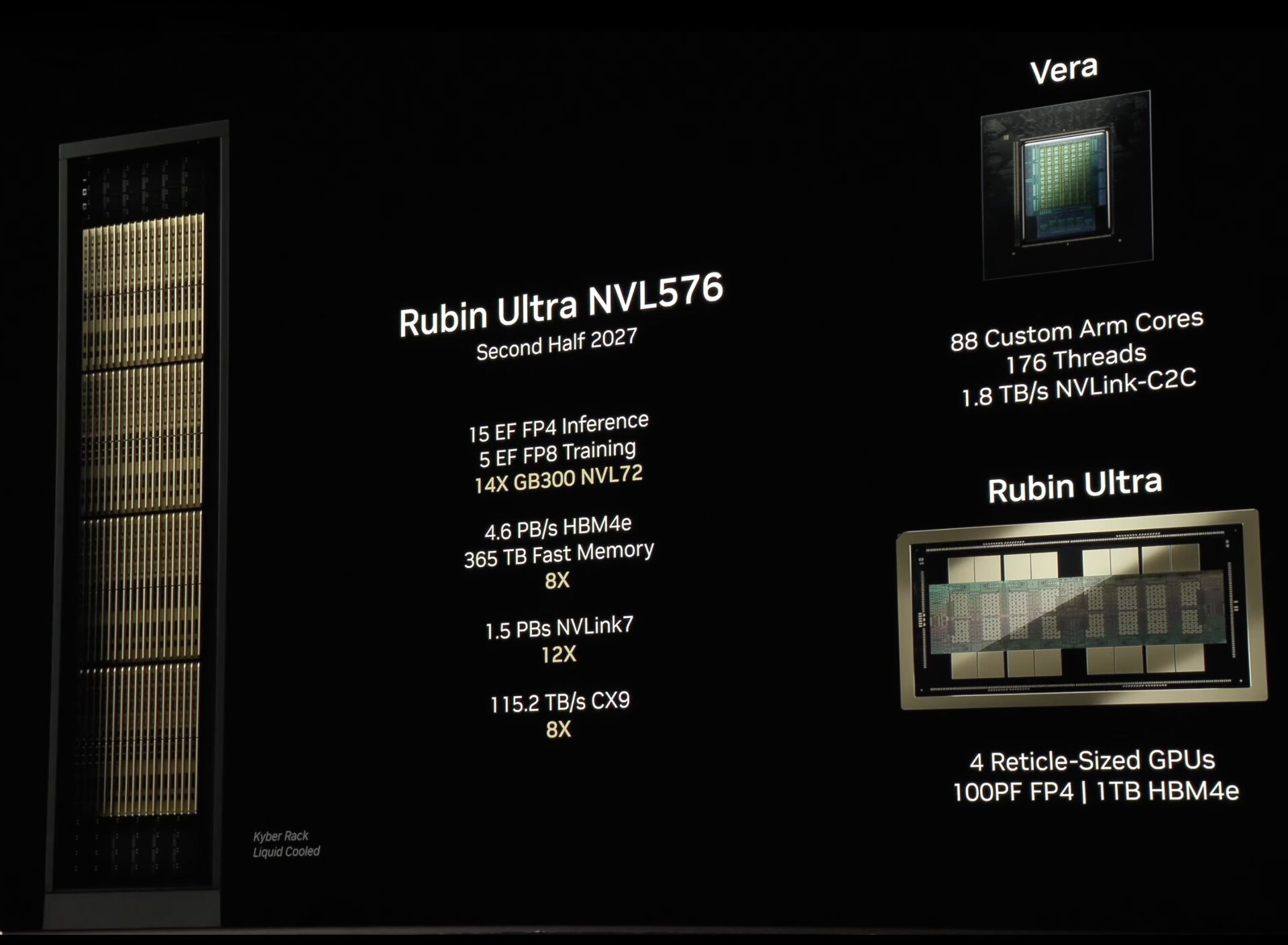

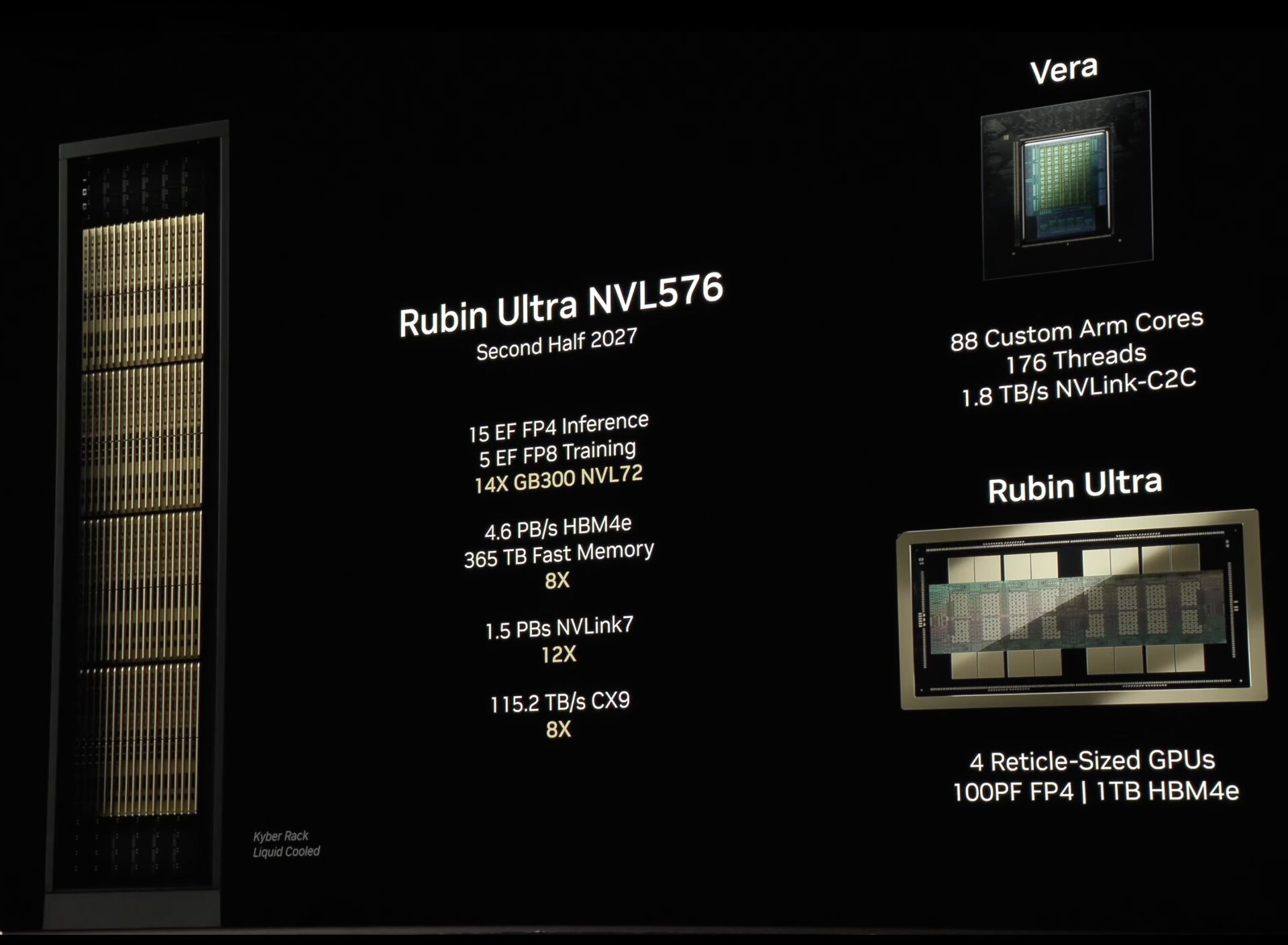

NVIDIA Rubin Ultra NVL576 (2027 H2)

Furthermore, we also get Rubin Ultra, planned for 2027 H2, in which a single GPU package holds 4 GPU dies. Rubin Ultra NVL576 is expected to deliver 14x the performance of Blackwell Ultra, staying on ConnectX-9 while moving to an all-new NVLink 7. Rubin Ultra GPUs will also feature HBM4e memory, providing an even larger bump in memory bandwidth.
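To put the roadmap multipliers on one scale, the sketch below chains the headline figures quoted in this recap back to GB200 NVL72. It assumes the multipliers can simply be multiplied together, which holds only if they were measured on comparable workloads and precisions, so read the results as rough estimates. `ROADMAP` and `relative_to_baseline` are hypothetical names.

```python
# Headline multipliers quoted in this recap. Each one is relative to the
# system named as its parent, not to a common baseline.
ROADMAP = {
    "GB200 NVL72": (None, 1.0),
    "Blackwell Ultra NVL72": ("GB200 NVL72", 1.5),
    "Vera Rubin NVL144": ("Blackwell Ultra NVL72", 3.3),
    "Rubin Ultra NVL576": ("Blackwell Ultra NVL72", 14.0),
}


def relative_to_baseline(system: str) -> float:
    """Chain multipliers back to the GB200 NVL72 baseline (assumes they compose)."""
    parent, factor = ROADMAP[system]
    if parent is None:
        return factor
    return factor * relative_to_baseline(parent)


for name in ROADMAP:
    print(f"{name}: {relative_to_baseline(name):.2f}x GB200 NVL72")

# With 4 dies per GPU package, the 576 in NVL576 implies this many packages.
print(576 // 4)
```

Chained this way, Vera Rubin lands at roughly 4.95x and Rubin Ultra at roughly 21x the GB200 NVL72, and the 576 dies of Rubin Ultra correspond to 144 GPU packages.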

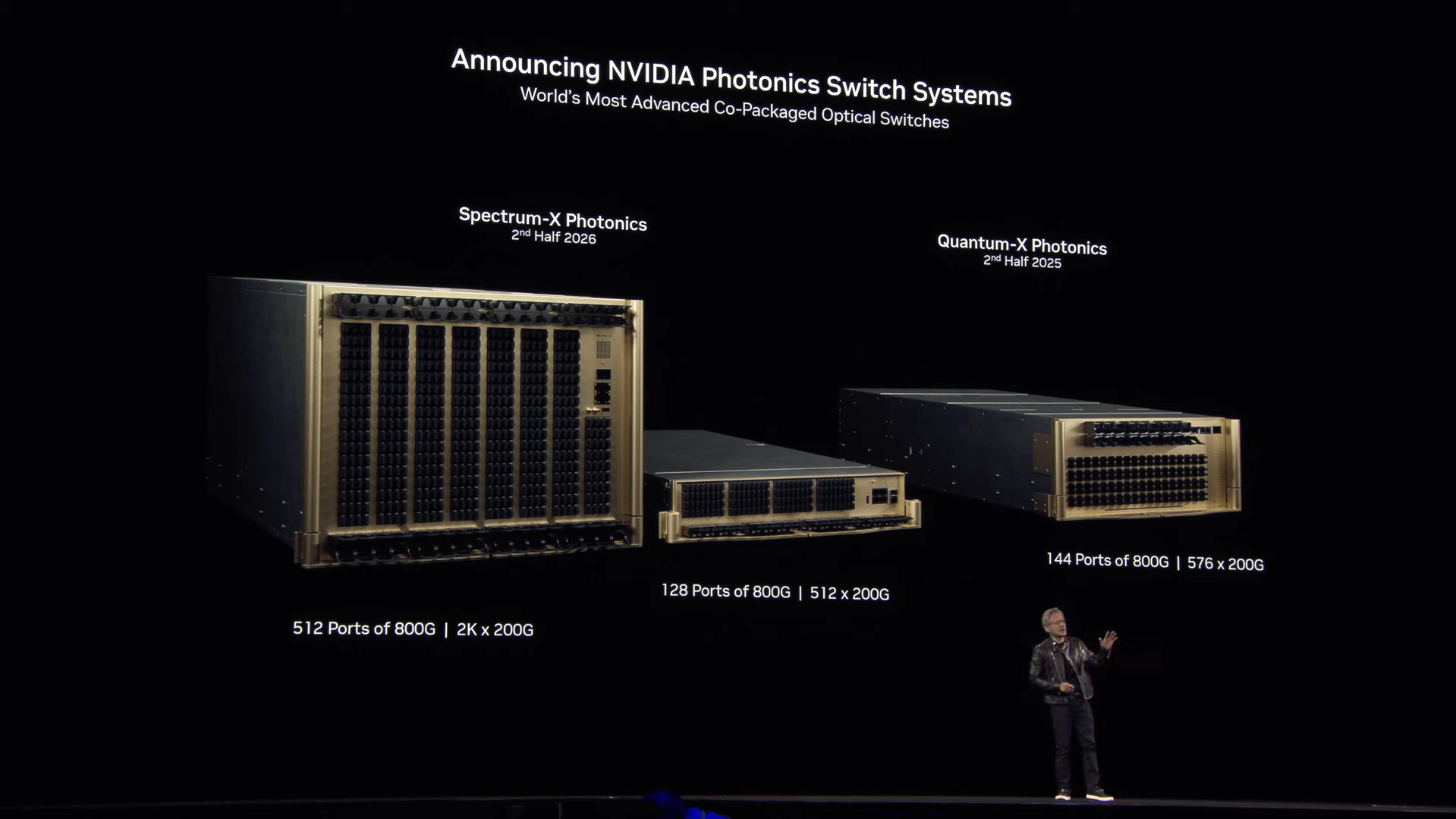

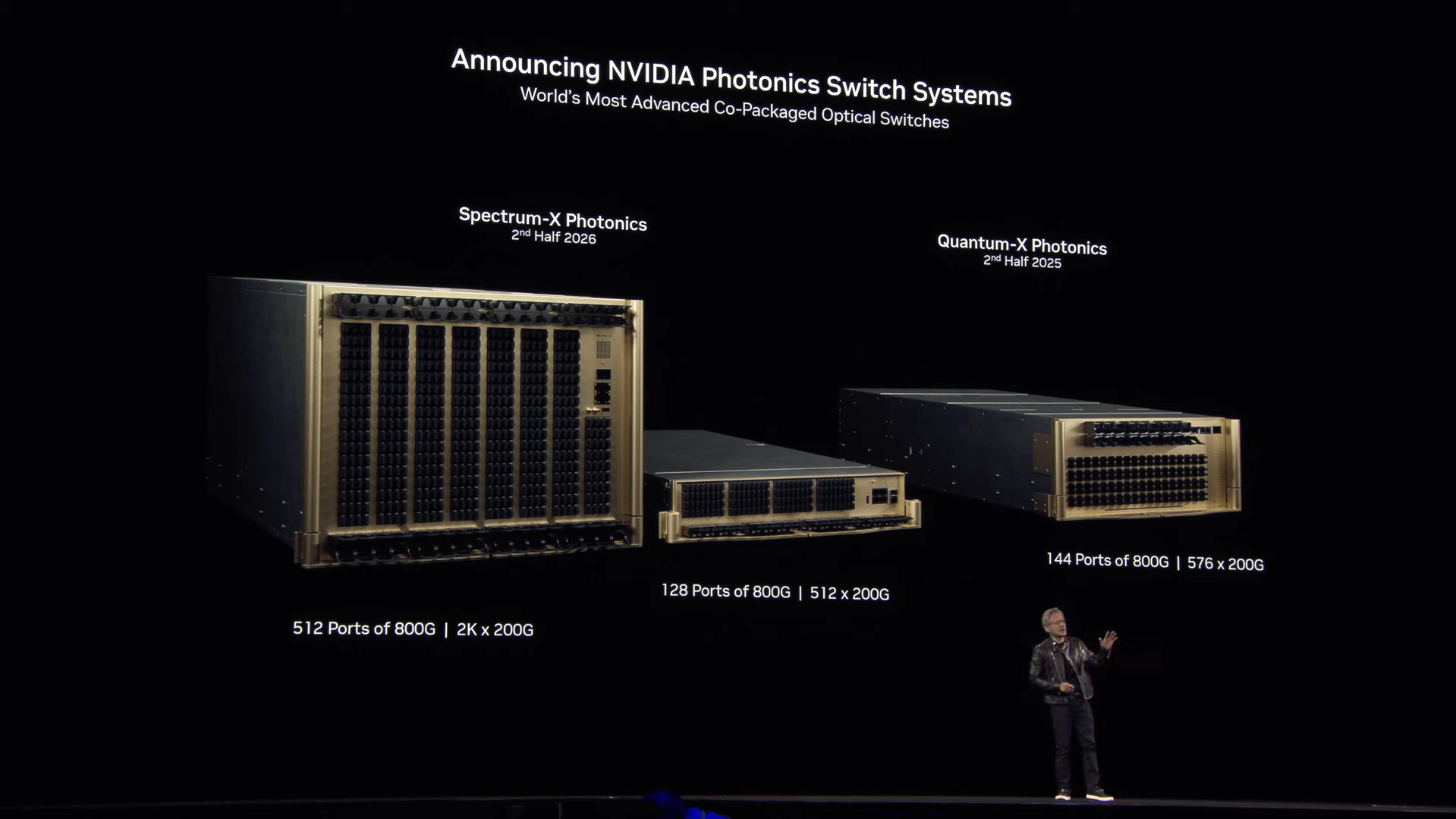

Silicon Photonics with Quantum-X and Spectrum-X

In the data center, data transfer and interconnect speeds are just as important as compute performance, if not more so. Scaling out to hundreds of thousands of GPUs is limited by power budgets and by the efficiency of the interconnect: copper is optimal over short distances, and photonics is better over long distances, but optical transceivers consume power.

Jensen Huang reveals a new photonics system for data center scale-out, co-invented by NVIDIA, that uses new process technology from TSMC to deliver high bandwidth at a lower power draw. Built on TSMC’s photonic engine, micro‑ring modulators (MRMs), high‑efficiency lasers, detachable fiber connectors, and other advancements, the new photonics platform delivers up to 3.5x better power efficiency, 10x higher resiliency, and 1.3x faster deployment than conventional solutions. NVIDIA Quantum-X ships in 2025 H2 and Spectrum-X ships in 2026 H2.

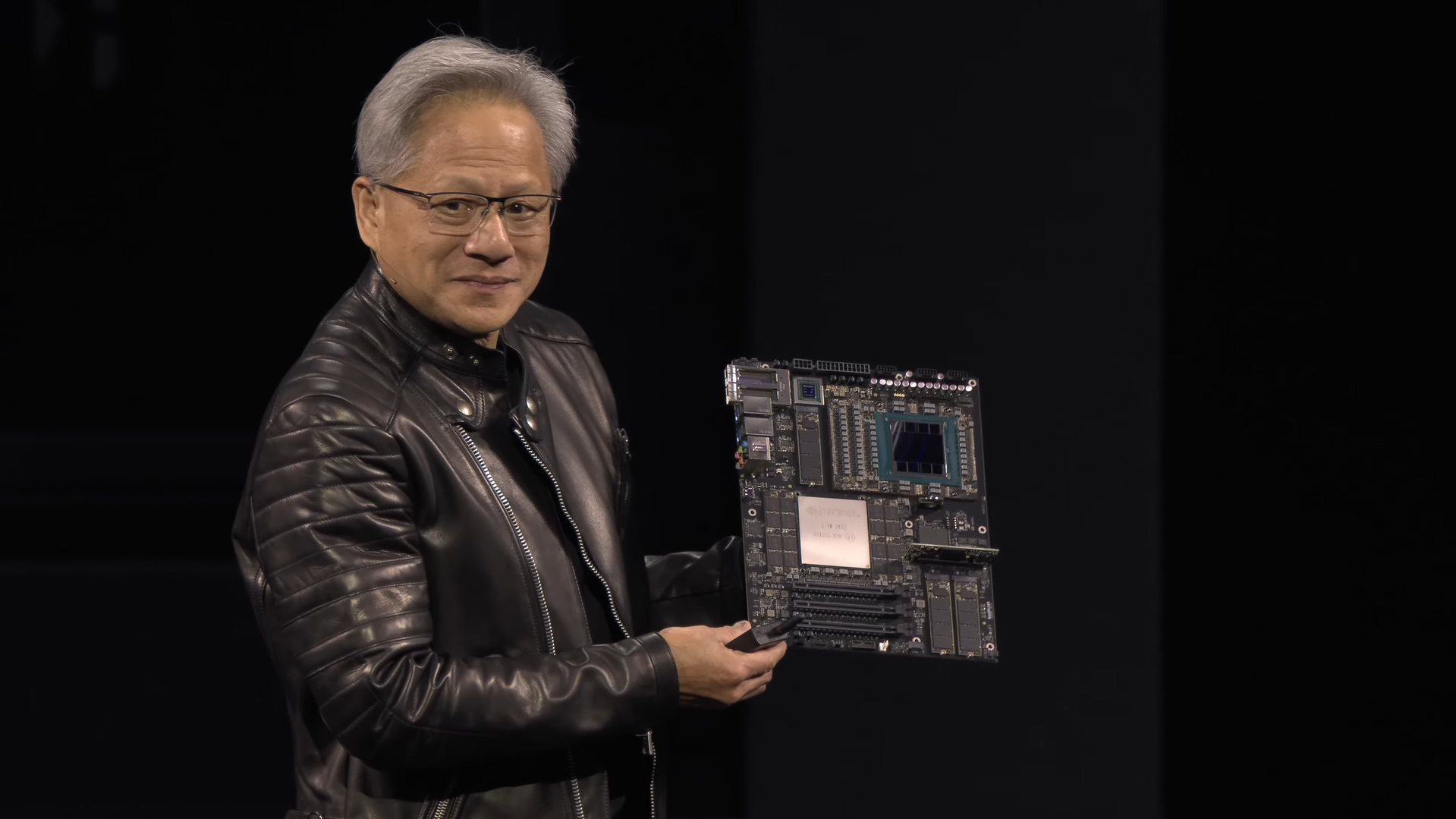

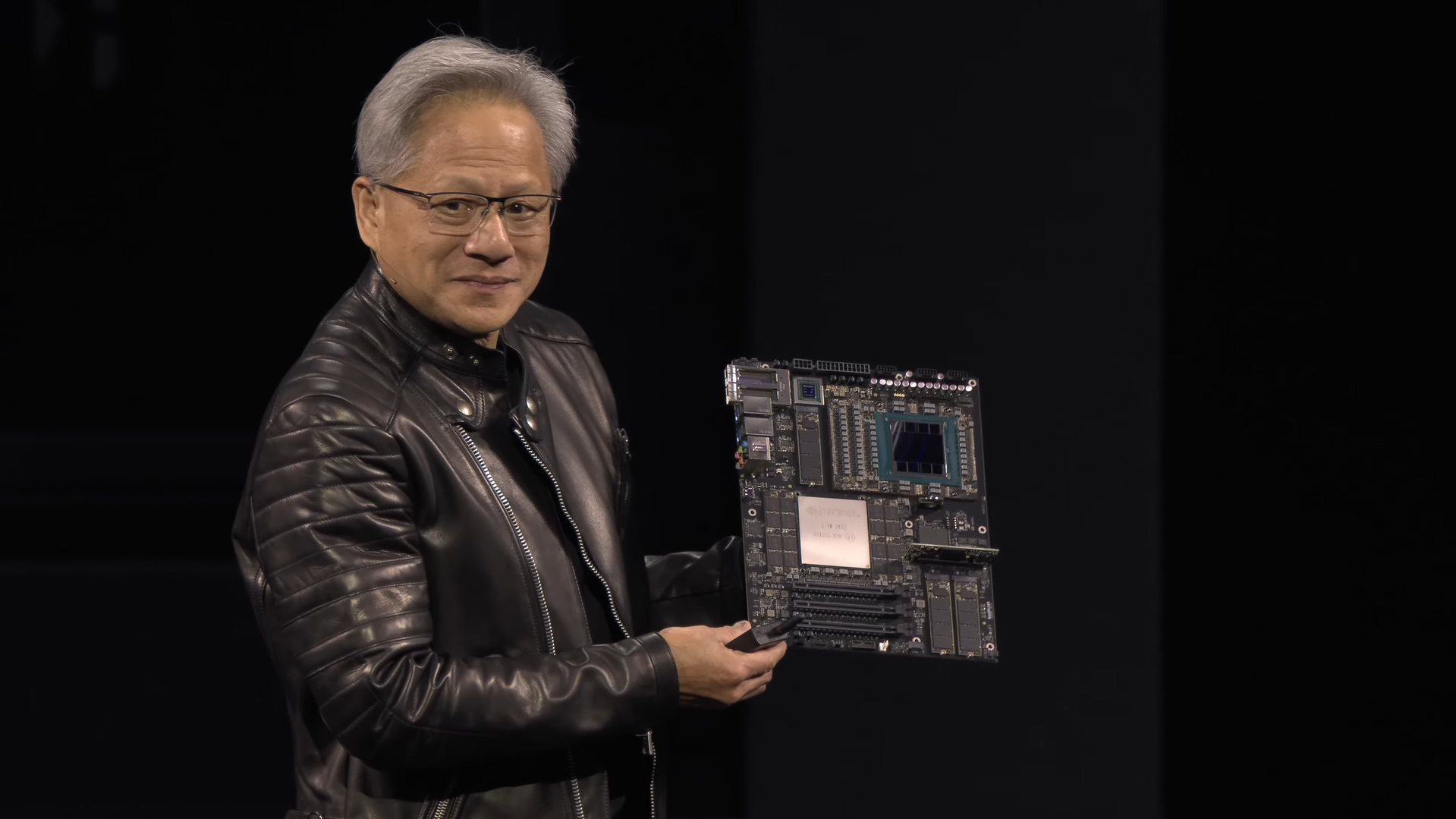

NVIDIA Personal DGX - DGX Spark & DGX Station

NVIDIA showcased its lineup of personal AI supercomputers.

NVIDIA DGX Spark is a very small but powerful system in a NUC-style form factor, targeting AI developers, researchers, data scientists, and students. Before Huang could tell us more, the GTC live stream died for a few minutes, so here are the DGX Spark specifications from NVIDIA's website. Billed as the world’s smallest AI supercomputer, DGX Spark is powered by the NVIDIA GB10 Grace Blackwell Superchip, featuring 1 petaFLOP of FP4 AI compute, 20 Arm cores, and 128GB of high-bandwidth unified memory. This all-in-one mini DGX computer can prove useful for prototyping, inferencing, data science, and edge workloads.

NVIDIA DGX Station marks the return of the personal AI supercomputer desktop, which was dropped after the Ampere generation. This time, DGX Station is a fully NVIDIA build, pairing the GB300 Grace Blackwell superchip with NVIDIA’s ConnectX-8 SuperNIC and PCIe slots in a traditional workstation form factor. The DGX Station offers 784GB of unified system memory and delivers 20 petaFLOPS of dense FP4 AI performance.
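One way to read the memory figures above is to ask which model sizes could hold their weights in unified memory. The sketch below is a back-of-the-envelope estimate only: it counts weights alone, ignores KV cache, activations, and system overhead, and assumes all unified memory is usable by the model. The model sizes are hypothetical examples, and `weight_footprint_gb` and `fits` are names made up for this illustration.

```python
def weight_footprint_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


def fits(params_billions: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit; ignores KV cache, activations, and overhead."""
    return weight_footprint_gb(params_billions, bits_per_param) <= memory_gb


# Unified memory capacities quoted in this recap.
DGX_SPARK_GB = 128
DGX_STATION_GB = 784

# Hypothetical model sizes (billions of parameters), chosen only for illustration.
for size in (70, 200, 405):
    for bits in (4, 16):
        gb = weight_footprint_gb(size, bits)
        print(f"{size}B @ {bits}-bit: {gb:.1f} GB, "
              f"Spark={fits(size, bits, DGX_SPARK_GB)}, "
              f"Station={fits(size, bits, DGX_STATION_GB)}")
```

The estimate shows why FP4 matters for small systems: at 4 bits per parameter a 200-billion-parameter model needs about 100 GB for weights, while the same model at 16 bits needs about 400 GB.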

Physical AI and Robotics

To tie everything together, NVIDIA has become pivotal in the AI industry by developing software frameworks, building GPUs, and innovating in the data center, all toward its goal of powering the next wave of AI. Physical AI will be driven by new Agentic AI systems, trained in digital twin simulations, and powered by NVIDIA GPUs.

NVIDIA expands Omniverse, the training ground for physical AI, with NVIDIA Cosmos and NVIDIA Newton. NVIDIA Cosmos is a generative AI system that generates effectively unlimited environments, so that an AI can generalize its task regardless of what its deployment environment looks like.

To advance humanoid robotics development, Jensen Huang also announces Isaac GR00T N1, an open-source, fully customizable foundation model for generalized humanoid reasoning and skills. GR00T N1 is generalized across many common tasks and can be further fine-tuned, democratizing the robotics technology of the future.

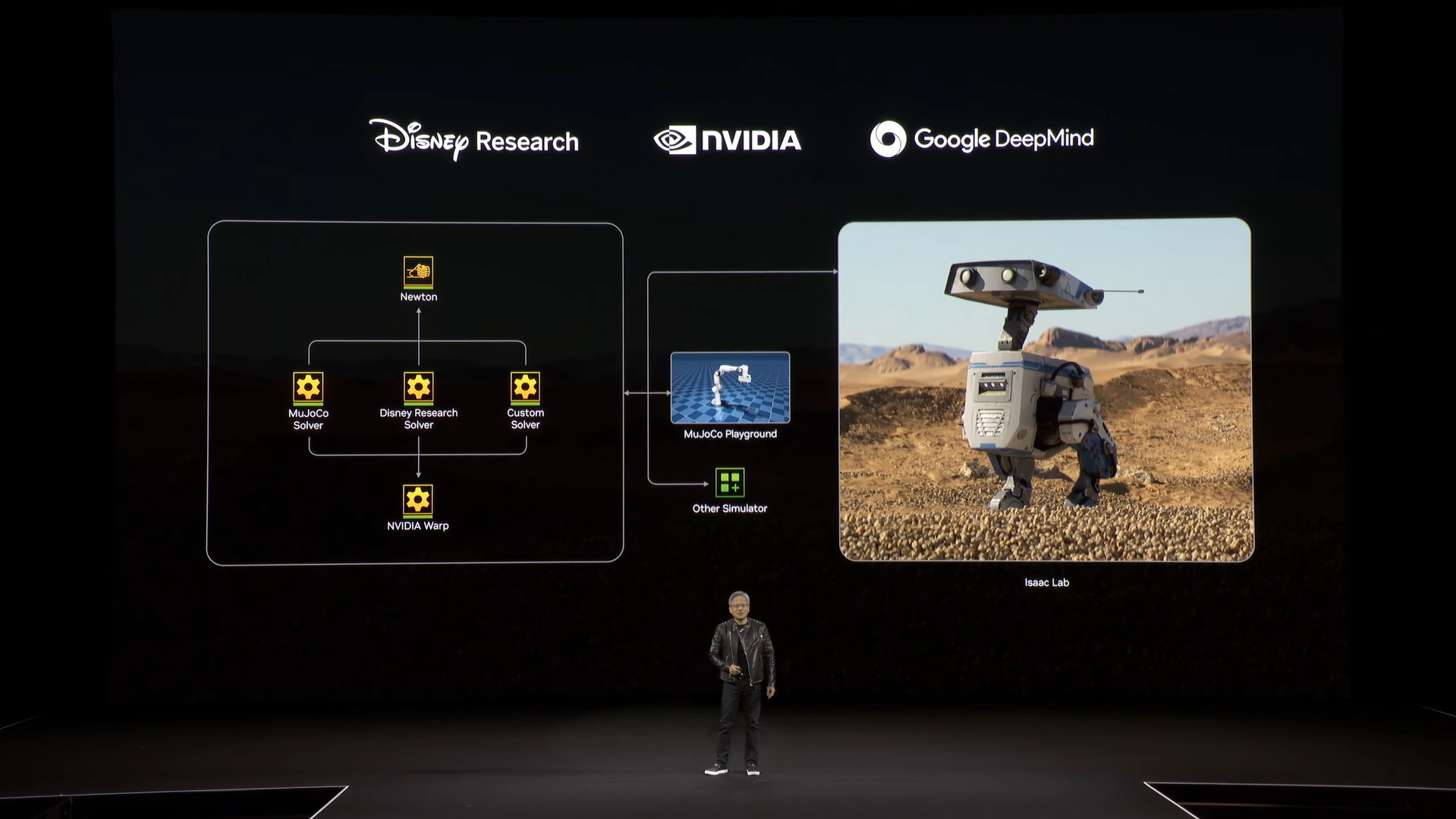

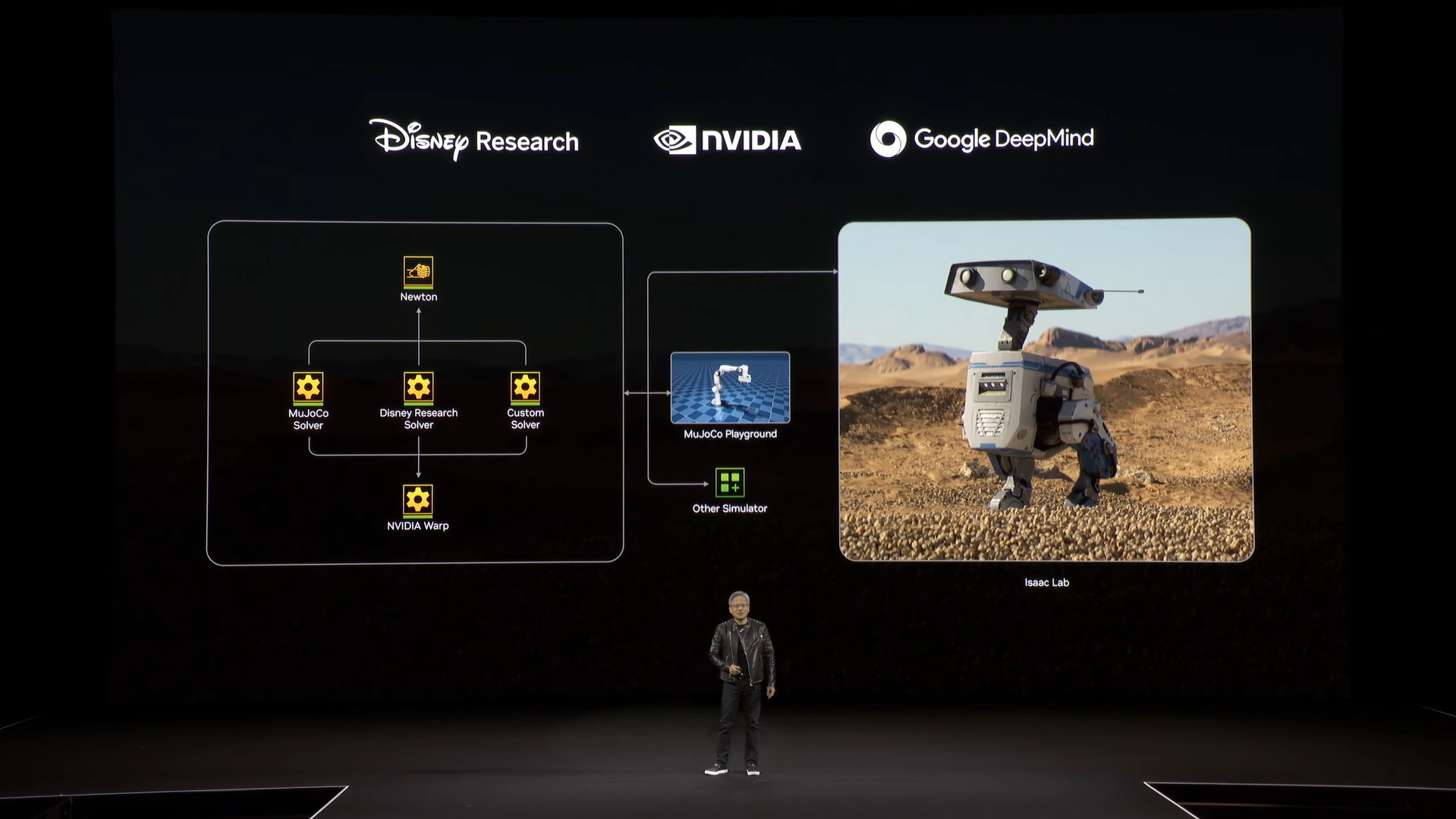

For more open-source goodies, NVIDIA Newton, developed in collaboration with Google DeepMind and Disney Research, is an open-source physics engine for high-fidelity training and fine-tuning of robot motor skills that translate directly to the real world.

Conclusion

Jensen Huang wraps up the NVIDIA GTC 2025 keynote by tying everything together, starting with the computing solutions NVIDIA provides to tackle next-generation AI workloads, including reasoning AI models and robotics.

NVIDIA’s vision is to provide the world with the resources, whether computing, frameworks, or even foundation models, to accelerate innovative discoveries. Its Blackwell and Rubin NVL systems and photonics solutions enable data centers to develop powerful models, its DGX Spark and DGX Station enable developers to prototype with ease, its open-source robotics frameworks enable roboticists to discover and train the robots of the future, and NVIDIA’s continued innovation drives research to new heights.

At Exxact, we resonate with NVIDIA’s goal to inspire and drive innovation. We deliver custom configurable solutions powered by NVIDIA and a wide range of hardware partners to solve the world’s most challenging problems. In recognition of our dedication, NVIDIA named Exxact the NVIDIA Partner Network Solutions Integration Partner of the Year 2025, the second consecutive year we have received the award. Contact us today for more information on how you can configure a workstation, server, or cluster ideal for your unique workload.

NVIDIA GTC 2025 Keynote Recap - Agentic AI, Robotics, Blackwell, Vera Rubin, DGX Spark & Station, and More
