NVIDIA GTC 2024 Recap & Stream

NVIDIA CEO Jensen Huang stands up on stage at the SAP Center in California to deliver his keynote address at the #1 AI Conference--NVIDIA GTC 2024. Huang begins his keynote with the importance of computing. He tells us the journey of NVIDIA and the importance of CUDA and adapting GPUs for accelerated computing.

With new innovations and commentaries on the industry at large, we will recap the highlights of the keynote! You can rewatch a recording of the 2-hour-long keynote here:

NVIDIA GTC 2024 in 5 Paragraphs

AI has not only changed the digital world but is going to change our physical world. In the past two years, a new and revolutionary way of computing has taken shape: Generative AI. Anything that can be captured to the digital world can be digitally analyzed, learned, computed, and generated again.

Billions of parameters become trillions of parameters. NVIDIA is setting the stage by delivering the ability to compute trillions of parameters with even larger GPUs. The NVIDIA Blackwell GPU platform is the starting point for training, running, and powering these methods of computing.

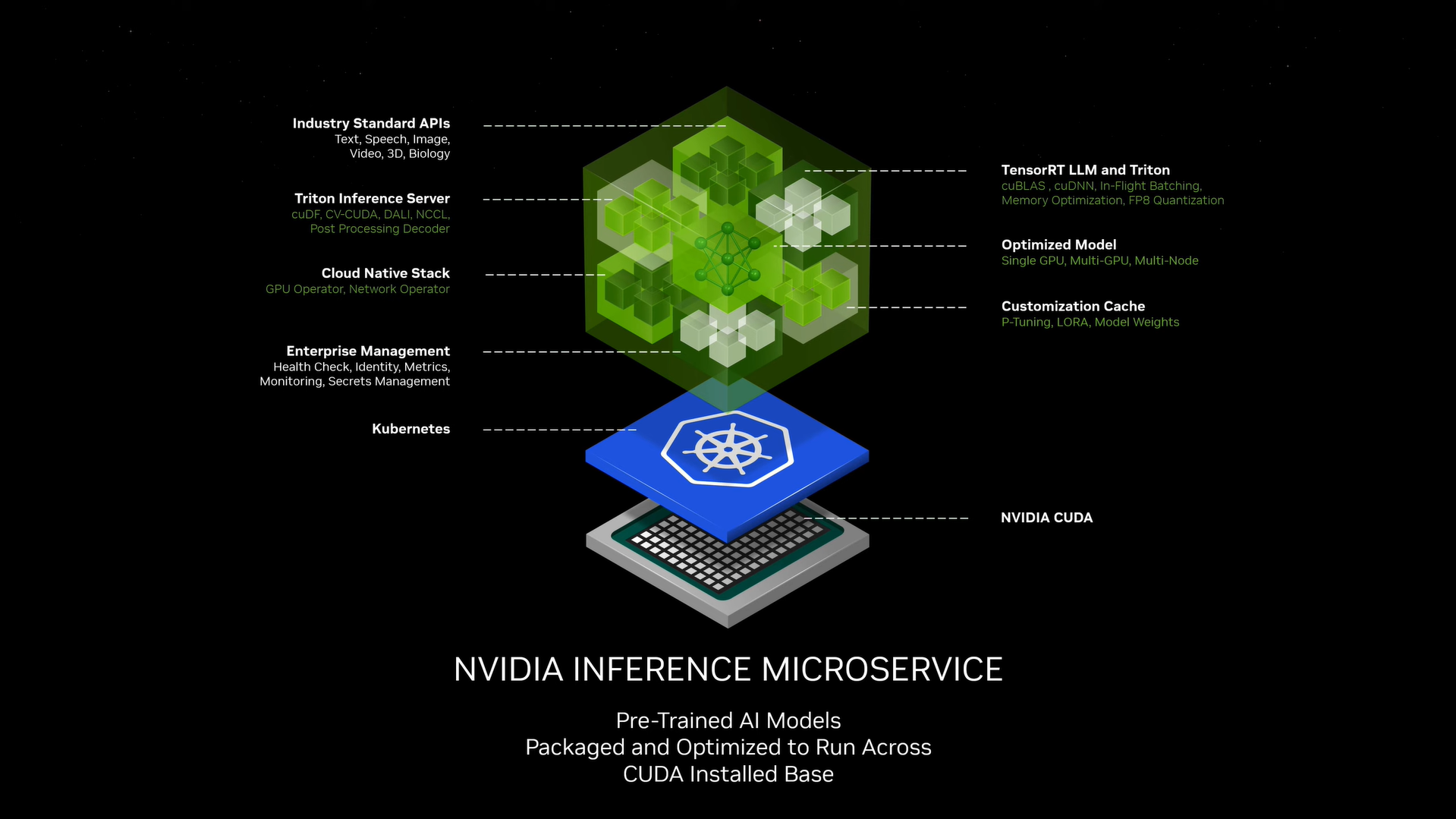

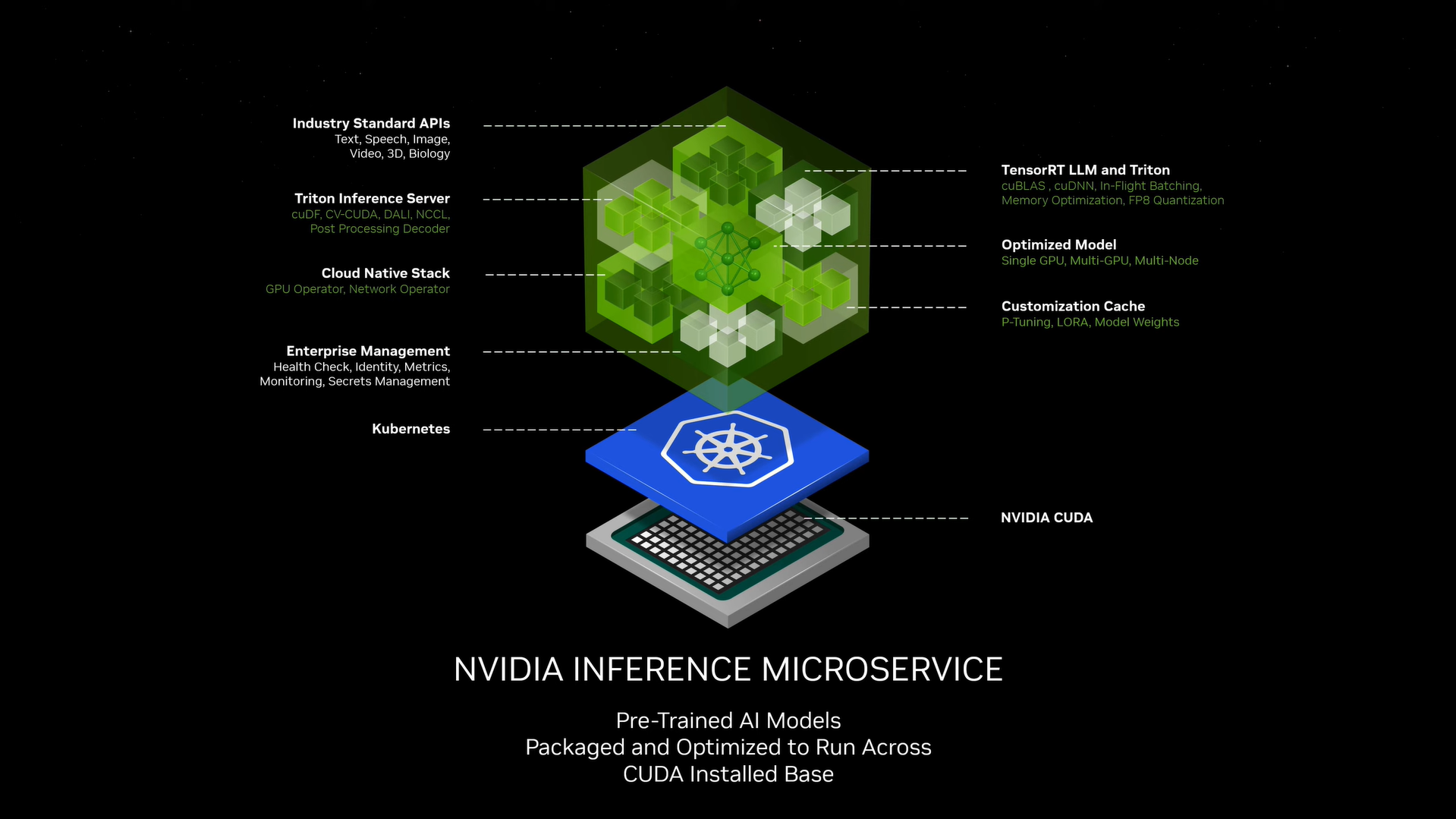

With a new way of computing, and the power to compute things at will, Huang recognizes there needs to be a new way to deliver this compute to the user. NVIDIA Inference Microservices, or NIMs, will house a library of specialized AI APIs where people can assemble teams of AIs to solve a task.

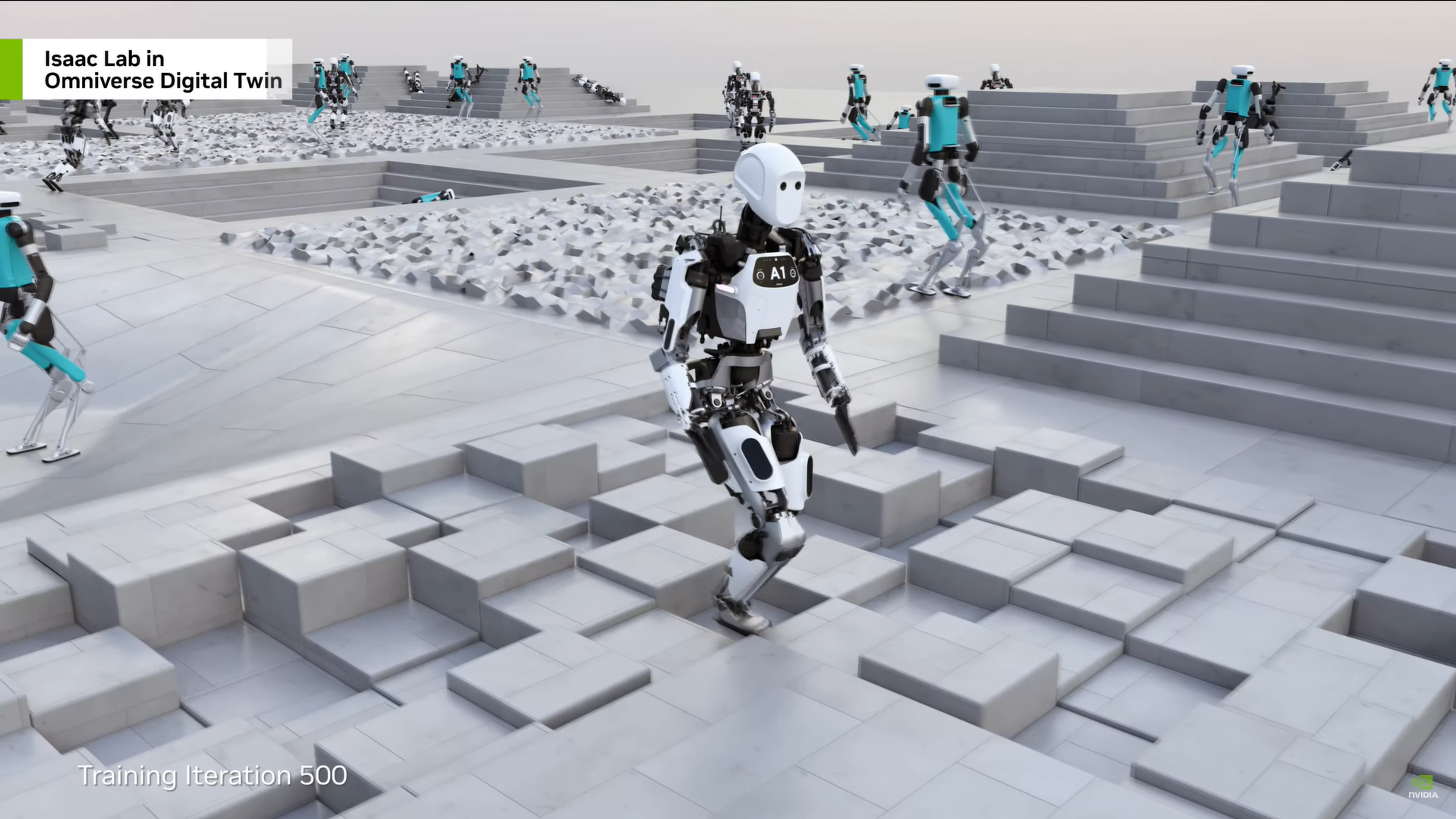

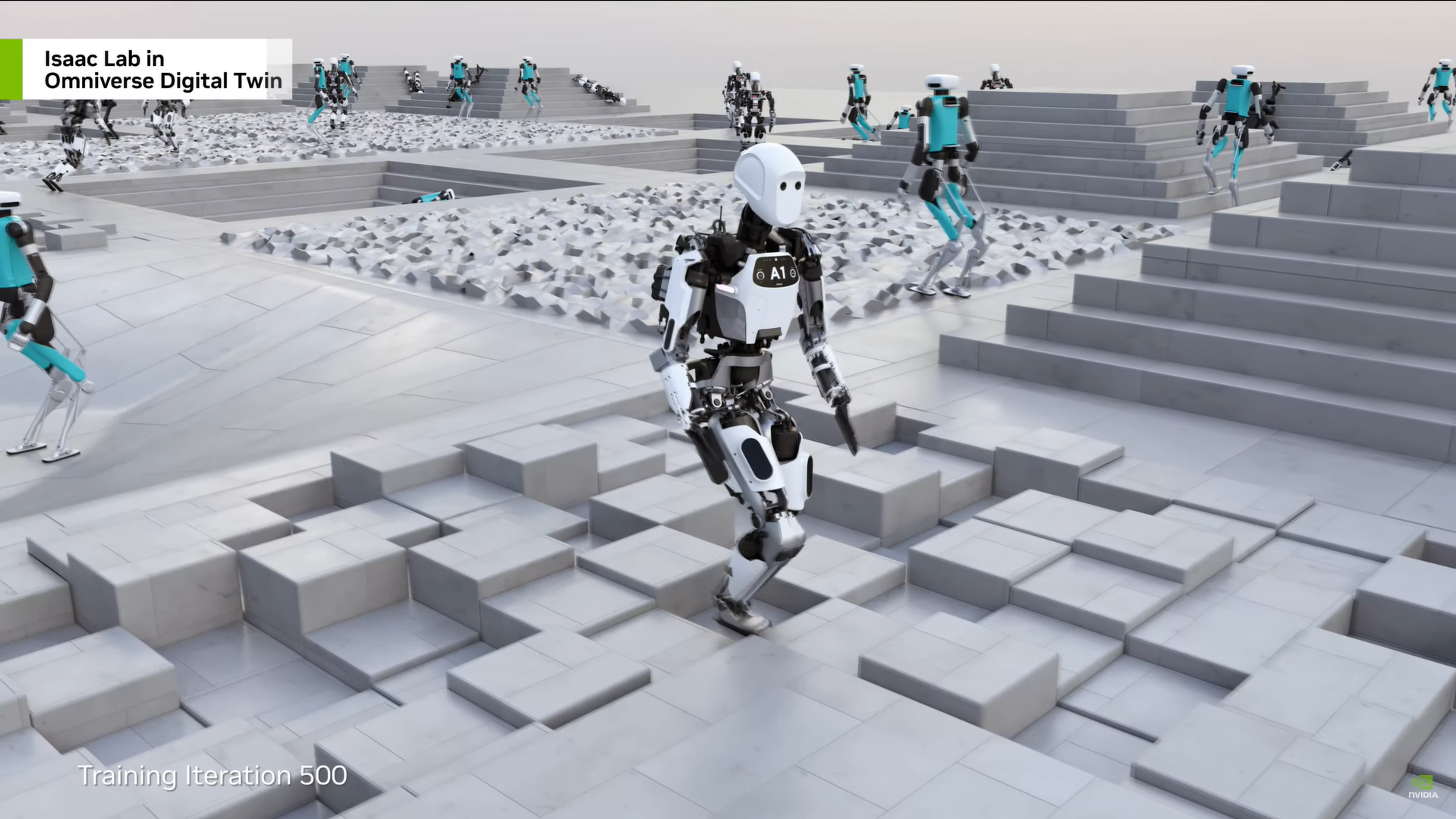

These tasks expand to the physical world of robotics. Robots are already prevalent in factories, warehouses, and the wider world, in forms such as humanoids, autonomous mobile robots (AMRs), self-driving cars, and manipulator arms. NVIDIA is delivering a digital platform for training and optimizing these robots, called NVIDIA Omniverse.

NVIDIA’s full stack of hardware and software enables the full development and deployment of AI robotics and powers the future of computing and innovation.

- NVIDIA DGX powers the foundational model training with huge, interconnected GPU factories.

- NVIDIA OVX powers the NVIDIA Omniverse digital twin environment where hundreds or thousands of AIs simultaneously learn how to move and operate.

- NVIDIA AGX powers the robotics on the edge to not only move within the physical world but to perceive, understand, adapt and become our AI companions.

- Not to mention NIMs, where users can assemble a team of AI APIs to deliver the comprehensive list of goals for powering robotics, AI, and more.

Now we go a little more in-depth on the most important topics mentioned at GTC.

NVIDIA DGX - Large Language AI Models Need Large GPUs

Large Language Models keep getting larger, so the solution NVIDIA devised was to make a really large GPU. This larger-than-large GPU vision led to the conception of the NVIDIA DGX, DGX Pods, and DGX SuperPODs, where multiple systems are fully interconnected with NVLink and InfiniBand.

Selene is the NVIDIA AI factory built with approximately 4,500 A100s capable of 112TB/s interconnect. Eos is the latest generation NVIDIA AI factory built with over 10,000 H100s capable of over 1,100TB/s interconnect! What comes next is NVIDIA’s newest innovation and biggest GPU yet.

NVIDIA Blackwell - B100, GB200, and DGX GB200 NVL72

NVIDIA Blackwell starts with a single GPU die of 104 billion transistors, built on the TSMC 4NP process and equipped with a 10TB/s NVIDIA high-bandwidth interface. That high-speed interface lets NVIDIA connect two (that's right, two) Blackwell dies into a single Blackwell GPU totaling 208 billion transistors, with virtually zero latency and full cache coherency.

NVIDIA Blackwell is only made possible with:

- 2nd Gen Transformer engine which introduces FP6 and FP4 Tensor Cores

- 5th Generation NVLink scaling up to 576 GPUs

- 100% In-System Self-Test RAS System

- Secure full performance encryption and TEE

- Decompression Engine with 800GB/s speed.

The Blackwell GPU delivers 5x the AI performance and 4x the on-die memory of the Hopper GPU. It has 20 petaFLOPS of AI performance, 192GB of HBM3e memory, and 8TB/s of memory bandwidth. And it doesn’t stop there. NVIDIA retains the SXM form factor with an 8-GPU HGX B100 compute board that can be swapped into existing HGX H100 systems.
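To make the link between the new low-precision formats and the 192GB of HBM3e concrete, here is a rough, weights-only estimate of how many model parameters fit on one Blackwell GPU at each precision. It is a back-of-the-envelope sketch, not an NVIDIA sizing method: it assumes decimal gigabytes and ignores activations, optimizer state, KV cache, and any format overhead such as scaling factors.

```python
# Weights-only capacity estimate for one GPU with 192GB of HBM3e.
# Assumption: "GB" means 10**9 bytes; real deployments also need room for
# activations, KV cache, and runtime overhead, which are ignored here.
HBM_BYTES = 192 * 10**9

# Bits used to store one parameter at each precision.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def max_params(hbm_bytes: int, bits: int) -> int:
    """Return how many parameters fit if memory holds weights only."""
    return hbm_bytes * 8 // bits


for name, bits in BITS_PER_PARAM.items():
    billions = max_params(HBM_BYTES, bits) / 1e9
    print(f"{name}: ~{billions:.0f}B parameters")
```

Under these assumptions, halving the bits per parameter doubles the number of weights that fit, which is one reason FP4 and FP6 matter for trillion-parameter models.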

Furthermore, NVIDIA updates its Grace lineup. Two NVIDIA Blackwell GPUs and one Grace CPU are connected over the NVLink C2C interconnect to form the GB200 Superchip, the building block of the newest NVIDIA AI factory. A single NVIDIA Blackwell compute node uses two GB200 Superchips in a 1U liquid-cooled server with four ConnectX-800G InfiniBand adapters and a single BlueField-3 DPU.

What NVIDIA DGX Looks Like Now - NVIDIA DGX GB200 NVL72

DGX looks completely different from last generation. A single rack connects 18 Blackwell compute nodes via nine NVLink Switch Systems, two Quantum InfiniBand Switches, and the NVLink Spine. With 72 NVIDIA Blackwell GPUs and 36 Grace CPUs, the NVIDIA DGX GB200 NVL72 acts as one giant CUDA GPU housing 30TB of fast memory and capable of 1.4 exaFLOPS of AI performance! Huge AI factories can be built by connecting multiple NVIDIA GB200 NVL72 racks with NVIDIA (formerly Mellanox) InfiniBand networking to scale to an NVIDIA GB200 SuperPOD.
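The rack-level numbers follow from the per-node and per-GPU figures quoted earlier. The short sketch below multiplies them out; the constant and function names are illustrative, and the inputs are only the values stated in this recap (18 nodes, two superchips per node, two GPUs and one CPU per superchip, 20 petaFLOPS and 192GB of HBM3e per GPU).

```python
# Rack arithmetic for a GB200 NVL72, using only figures quoted in this recap.
NODES_PER_RACK = 18
SUPERCHIPS_PER_NODE = 2
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1
PFLOPS_PER_GPU = 20      # AI performance per Blackwell GPU
HBM_GB_PER_GPU = 192     # HBM3e per Blackwell GPU


def rack_totals() -> dict:
    """Multiply per-node figures out to whole-rack totals."""
    superchips = NODES_PER_RACK * SUPERCHIPS_PER_NODE
    gpus = superchips * GPUS_PER_SUPERCHIP
    cpus = superchips * CPUS_PER_SUPERCHIP
    return {
        "gpus": gpus,
        "cpus": cpus,
        "ai_exaflops": gpus * PFLOPS_PER_GPU / 1000,  # petaFLOPS -> exaFLOPS
        "hbm3e_tb": gpus * HBM_GB_PER_GPU / 1000,     # GB -> TB (decimal)
    }


print(rack_totals())
```

This gives 72 GPUs, 36 CPUs, and about 1.44 exaFLOPS, matching the headline figures. The HBM3e sum comes to roughly 13.8TB, so the 30TB rack figure presumably also counts memory attached to the Grace CPUs; that reading is an assumption, not something stated in the keynote recap.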

Generative AI - Whatever can be digitized should also be generated.

Generative AI has been the newest and hottest AI trend for the past 2 years, especially with OpenAI’s revolutionary chatbot ChatGPT, powered by its GPT-3.5 and GPT-4 models, and its image generation AI DALL-E 2. Many other companies have also developed their own generative AI foundation models for further fine-tuning.

Huang expresses his thoughts on generative AI and digitizing our world. We have already digitized text, images, genes, and proteins. If something can be translated into the digital world, we can pull information from it, analyze it, learn from it, and eventually generate it with trillion-parameter generative AI models.

This is where Huang introduces NVIDIA Inference Microservices (NIMs), in which each AI model’s API is packaged as a microservice so that users can employ specialized chatbots for specialized tasks. Users can harness NIMs for various tasks by first consulting a planning NIM, which calls on specialized NIMs to tackle the specific problem, so that a team of AIs is assembled to solve problems, develop code, and more.
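The planner-plus-specialists pattern described above can be sketched in a few lines. This is a toy illustration only: the service functions, the `SPECIALISTS` registry, and the keyword-based `plan` function are all hypothetical stand-ins and do not reflect the actual NIM API, where each specialist would be a networked model endpoint and the planner would itself be a model.

```python
from typing import Callable, Dict, List


# Hypothetical stand-ins for specialized microservices. In a real deployment
# each of these would be a call to a separate model endpoint.
def retrieval_service(task: str) -> str:
    return f"[retrieval] documents for: {task}"


def code_service(task: str) -> str:
    return f"[code] draft for: {task}"


def summary_service(task: str) -> str:
    return f"[summary] digest of: {task}"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "retrieval": retrieval_service,
    "code": code_service,
    "summary": summary_service,
}


def plan(request: str) -> List[str]:
    """Toy planner: choose specialists by keyword (a real planner would be a model)."""
    text = request.lower()
    steps: List[str] = []
    if "find" in text or "search" in text:
        steps.append("retrieval")
    if "code" in text or "script" in text:
        steps.append("code")
    if "summarize" in text or not steps:
        steps.append("summary")  # fall back to summarizing if nothing else matched
    return steps


def run(request: str) -> List[str]:
    """Send the request to each specialist the planner selected, in order."""
    return [SPECIALISTS[name](request) for name in plan(request)]


for line in run("Find the error logs and write a script to parse them"):
    print(line)
```

The design point is the separation of concerns: the planner decides which specialists to involve, and each specialist stays small and replaceable behind a uniform interface.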

Robotics - Bridging Digital Worlds with the Physical World

The future of heavy industries starts as a digital twin. These digital representations of a manufacturing facility are powered by simulation, logic, and AI agents. Here companies can optimize their work environment before they deploy large-scale factories in real life. These digital twins can also be tied to the real world, so that optimizations computed in the twin can direct the robots in the warehouse and keep them aware of the warehouse workflow.

Huang expresses the importance of using digital twins to optimize the robotics that operate within a factory. Once the warehouse is defined in software, its software-based systems can be optimized more efficiently using software algorithms. NVIDIA Omniverse will facilitate the simulation in a digital twin, train the AI robotics in the digital twin, and optimize the workflow in digital twin environments.

Movement is the next big thing for AI. For our computers to interact with us digitally is a revolutionary advancement, but to interact with computers physically is a whole different ball game. NVIDIA envisions training an AI capable of perception, goal setting, and self-optimization, all of which are paramount for robots to have better problem-solving skills. A robot that is not pre-programmed can be given a task, evaluate its goals, and adapt to unpredictable circumstances.

Conclusion - NVIDIA Powers the Entire AI Process

Huang wraps up NVIDIA GTC 2024 by walking back on stage with a droid-like robot developed by Disney, flanked by various humanoid robots behind him. The end of GTC showcases the realistic impact that AI can have on the physical world, where humans can interact with, work with, and train humanoid AI to perform human tasks.

Huang wraps everything up by reiterating the hardware that will power this computing innovation. NVIDIA Blackwell is the platform for developing full-scale AI. The keynote is capped off with NVIDIA’s signature animation of a datacenter, with Toy-Jensen flying in a miniature spaceship through every component of the NVIDIA Blackwell platform.

- HGX B100, the SXM variant of Blackwell that can slot directly into existing HGX H100 systems.

- NVLink Switch which powers the connectivity between multiple Blackwell nodes.

- GB200 Superchip Compute Node, a 1U liquid-cooled server built from GB200 Superchips (1 Grace CPU and 2 Blackwell GPUs each).

- Quantum X800 Switch with ConnectX-8 SuperNIC that powers the networking for a Blackwell SuperPOD

- Spectrum X800 Switch with BlueField-3 SuperNIC that also powers the networking for a Blackwell SuperPOD

NVIDIA GTC has always been a fountain of inspiration and innovation for AI. With AI taking shape in every industry, NVIDIA is no longer just a GPU manufacturing company; it aims to power the next generation of computing through its hardware, software, microservices, and AI environments.

Exxact is proud to be a part of the NVIDIA family as an Elite NPN and is here to deliver its computing solutions to your data center to power your innovation to the farthest reaches. Sign up for the Exxact newsletter to stay in touch or contact us today to upgrade your computing now.

