NVIDIA Blackwell Specifications

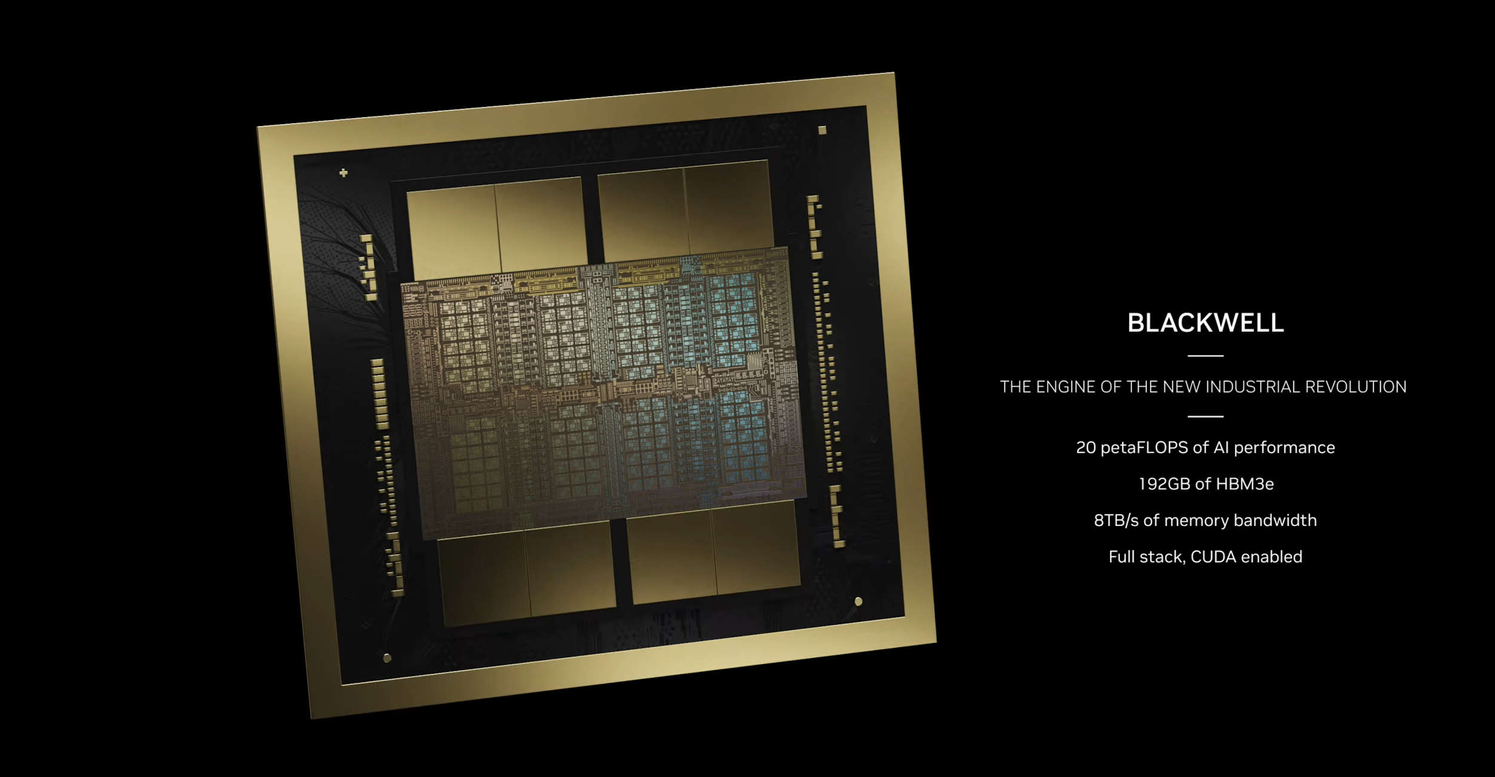

NVIDIA Blackwell GPU is capable of 20 petaFLOPS for accelerating HPC workloads and powering an innumerable number of operations per second. But the NVIDIA Blackwell GPU is quite not a one GPU… Instead, NVIDIA’s innovation in chip-to-chip interconnect has NVIDIA butting two GPU dies together to form a coherent bond merging two dies into a single unified GPU.

The entire Blackwell GPU consists of 208 billion transistors built on TSMC 4NP process (proprietary for NVIDIA) and is the Blackwell is a largest GPU ever. Each single GPU die is the largest die possible at TSMC’s within the limits of reticle size, and merging the two together only doubles what is capable. The NVIDIA High-Bandwidth Interface (NV-HBI) delivers 10TB/s of bidirectional bandwidth, effectively creating one single GPU out of two.

At a glance NVIDIA Blackwell features several new innovations

- Second Generation Transformer Engine - dynamic range management algorithms and fine-grain scaling techniques, called micro-tensor scaling, to optimize performance and accuracy

- Fifth Generation NVLink - 1.8TB/s bidirectional bandwidth per GPU with options for scalability of up to 576 GPUs. Additional GPUs interconnected via InfiniBand

- Decompression Engine - Accelerate database workloads and queries, paramount of enabling GPU accelerated data processing/analytics

- RAS Engine - Dedicated reliability, availability, and serviceability engine which employs AI for notifyin maintenance, enhancing system uptime, and reducing operating costs

2nd Gen Transformer Engine

Blackwell introduces the new second-generation Transformer Engine. The second-generation Transformer Engine uses custom Blackwell Tensor Core technology combined with TensorRT-LLM and Nemo Framework innovations to accelerate inference and training for LLMs and Mixture-of-Experts (MoE) models.

The Blackwell Transformer Engine utilizes advanced dynamic range management algorithms and fine-grain scaling techniques, called micro-tensor scaling, to optimize performance and accuracy and enable FP4 AI. This doubles the performance with Blackwell’s FP4 Tensor Core, doubles the parameter bandwidth to the HBM memory, and doubles the size of next-generation models per GPU.

With the Blackwell second-generation Transformer Engine, enterprises can use and deploy state-of-the-art MoE models with affordable economics, optimizing their business with the benefits of generative AI. NVIDIA Blackwell makes the next era of MoE models possible— supporting training and real-time inference on models over 10-trillion-parameters in size.

5th Gen NVLink and NVLink Switch

Exascale computing and trillion-parameter AI models was made possible by NVIDIA’s revolutionary NVLink technology which further unifies GPUs to distribute computing seamlessly. Innovation and next-generation AI models hinge on the need for swift, seamless communication among every GPU within a server cluster. The fifth generation of NVIDIA® NVLink® interconnect can scale up to 576 GPUs to unleash accelerated performance for trillion- and multi-trillion parameter AI models.

The NVIDIA NVLink Switch Chip enables 130TB/s of GPU bandwidth in one 72-GPU NVLink domain (NVL72) and delivers 4X bandwidth efficiency with NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ FP8 support. The NVIDIA NVLink Switch Chip supports clusters beyond a single server at the same impressive 1.8TB/s interconnect. Multi-server clusters with NVLink scale GPU communications in balance with the increased computing, so NVL72 can support 9X the GPU throughput than a single eight-GPU system. More on the NVIDIA B200 NVL72 in another post.

Decompression Engine

Data analytics and database workflows have traditionally been bottlenecked due to the reliance of CPUs for compute. If the data transfer is slow, then the whole data imperative computing of real-time AI can be bogged down at that single point.

Accelerated data science can dramatically boost the performance of end-to-end analytics and speed up AI process strictly by optimizing the data pipeline. NVIDIA Blackwell Decompression Engine can decompress data at a rate of up to 800GB/s using Blackwell GPU and NVIDIA Grace CPU’s NVLink-C2C. More on GB200 in another post.

RAS Engine

Blackwell architecture adds intelligent resiliency with dedicated Reliability Availability and Serviceability (RAS) Engine to identify faults. Minimizing the downtime of our valuable compute and important machines ensures the use of hardware is at performing at its utmost efficiency.

NVIDIA employs powerful AI predictive management capabilities to continuously monitor thousands of data points across the entire hardware/software stack for overall health. In-depth diagnostics and continued vigilance can identify areas of concern for maintenance reducing turnaround time and facilitating effective remediation.

Conclusion

The landscape and increased usage of AI the pursuit of performance and scalability. From individual users all the way to large business enterprise, AI is pivotal to productivity and innovation. Generative AI and accelerated GPU computing have become integral in countless industries including healthcare, automotive, manufacturing, and more.

NVIDIA Blackwell is designed to address the increased needs of AI for the ever-increasing size of AI models, speed for inferencing complex LLMs, while delivering magnitudes better performance and efficiency. We will go over the various NVIDIA Blackwell deployments in another blog post. Blackwell is planned to ship by the end of the year.

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now

NVIDIA Blackwell Architecture

NVIDIA Blackwell Specifications

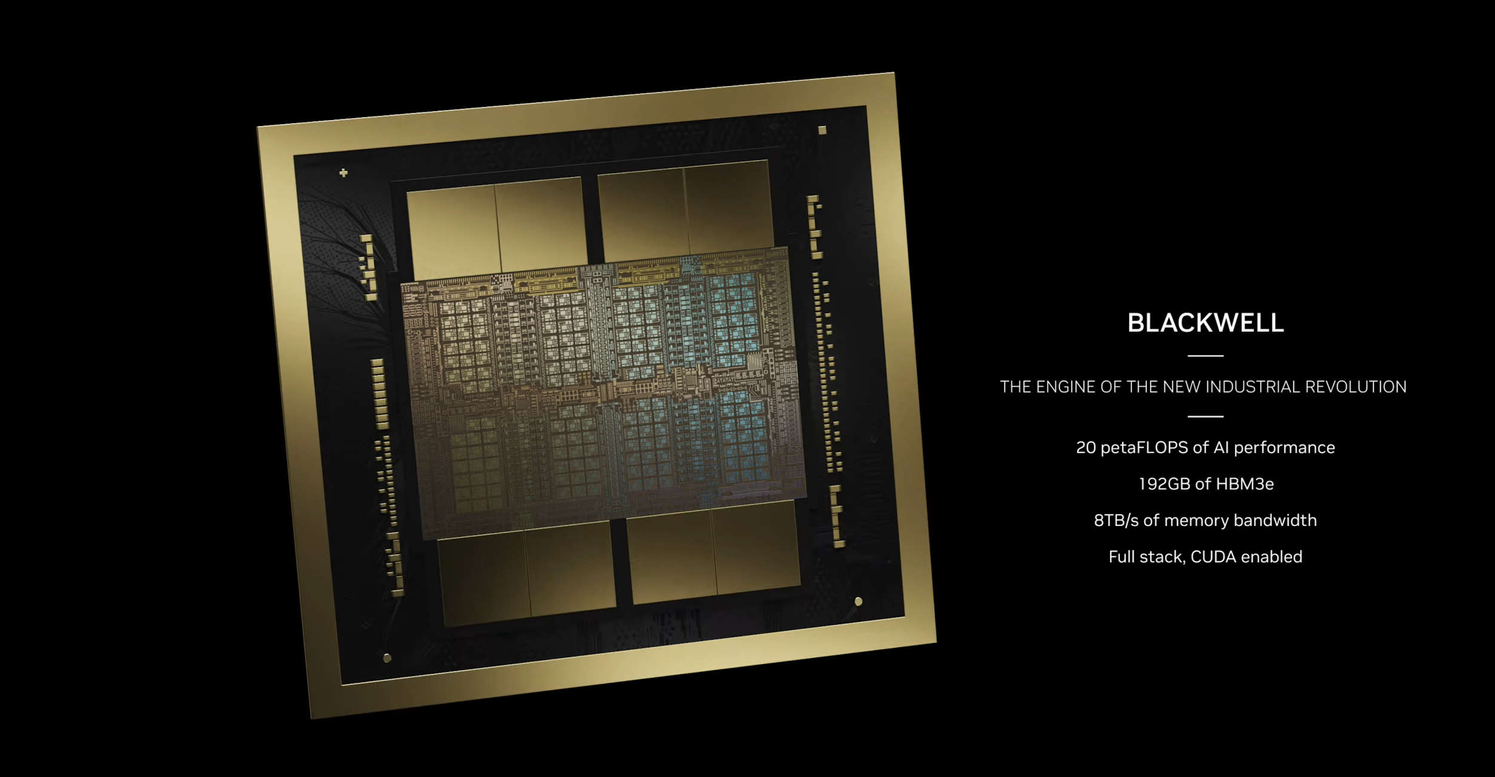

NVIDIA Blackwell GPU is capable of 20 petaFLOPS for accelerating HPC workloads and powering an innumerable number of operations per second. But the NVIDIA Blackwell GPU is quite not a one GPU… Instead, NVIDIA’s innovation in chip-to-chip interconnect has NVIDIA butting two GPU dies together to form a coherent bond merging two dies into a single unified GPU.

The entire Blackwell GPU consists of 208 billion transistors built on TSMC 4NP process (proprietary for NVIDIA) and is the Blackwell is a largest GPU ever. Each single GPU die is the largest die possible at TSMC’s within the limits of reticle size, and merging the two together only doubles what is capable. The NVIDIA High-Bandwidth Interface (NV-HBI) delivers 10TB/s of bidirectional bandwidth, effectively creating one single GPU out of two.

At a glance NVIDIA Blackwell features several new innovations

- Second Generation Transformer Engine - dynamic range management algorithms and fine-grain scaling techniques, called micro-tensor scaling, to optimize performance and accuracy

- Fifth Generation NVLink - 1.8TB/s bidirectional bandwidth per GPU with options for scalability of up to 576 GPUs. Additional GPUs interconnected via InfiniBand

- Decompression Engine - Accelerate database workloads and queries, paramount of enabling GPU accelerated data processing/analytics

- RAS Engine - Dedicated reliability, availability, and serviceability engine which employs AI for notifyin maintenance, enhancing system uptime, and reducing operating costs

2nd Gen Transformer Engine

Blackwell introduces the new second-generation Transformer Engine. The second-generation Transformer Engine uses custom Blackwell Tensor Core technology combined with TensorRT-LLM and Nemo Framework innovations to accelerate inference and training for LLMs and Mixture-of-Experts (MoE) models.

The Blackwell Transformer Engine utilizes advanced dynamic range management algorithms and fine-grain scaling techniques, called micro-tensor scaling, to optimize performance and accuracy and enable FP4 AI. This doubles the performance with Blackwell’s FP4 Tensor Core, doubles the parameter bandwidth to the HBM memory, and doubles the size of next-generation models per GPU.

With the Blackwell second-generation Transformer Engine, enterprises can use and deploy state-of-the-art MoE models with affordable economics, optimizing their business with the benefits of generative AI. NVIDIA Blackwell makes the next era of MoE models possible— supporting training and real-time inference on models over 10-trillion-parameters in size.

5th Gen NVLink and NVLink Switch

Exascale computing and trillion-parameter AI models was made possible by NVIDIA’s revolutionary NVLink technology which further unifies GPUs to distribute computing seamlessly. Innovation and next-generation AI models hinge on the need for swift, seamless communication among every GPU within a server cluster. The fifth generation of NVIDIA® NVLink® interconnect can scale up to 576 GPUs to unleash accelerated performance for trillion- and multi-trillion parameter AI models.

The NVIDIA NVLink Switch Chip enables 130TB/s of GPU bandwidth in one 72-GPU NVLink domain (NVL72) and delivers 4X bandwidth efficiency with NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ FP8 support. The NVIDIA NVLink Switch Chip supports clusters beyond a single server at the same impressive 1.8TB/s interconnect. Multi-server clusters with NVLink scale GPU communications in balance with the increased computing, so NVL72 can support 9X the GPU throughput than a single eight-GPU system. More on the NVIDIA B200 NVL72 in another post.

Decompression Engine

Data analytics and database workflows have traditionally been bottlenecked due to the reliance of CPUs for compute. If the data transfer is slow, then the whole data imperative computing of real-time AI can be bogged down at that single point.

Accelerated data science can dramatically boost the performance of end-to-end analytics and speed up AI process strictly by optimizing the data pipeline. NVIDIA Blackwell Decompression Engine can decompress data at a rate of up to 800GB/s using Blackwell GPU and NVIDIA Grace CPU’s NVLink-C2C. More on GB200 in another post.

RAS Engine

Blackwell architecture adds intelligent resiliency with dedicated Reliability Availability and Serviceability (RAS) Engine to identify faults. Minimizing the downtime of our valuable compute and important machines ensures the use of hardware is at performing at its utmost efficiency.

NVIDIA employs powerful AI predictive management capabilities to continuously monitor thousands of data points across the entire hardware/software stack for overall health. In-depth diagnostics and continued vigilance can identify areas of concern for maintenance reducing turnaround time and facilitating effective remediation.

Conclusion

The landscape and increased usage of AI the pursuit of performance and scalability. From individual users all the way to large business enterprise, AI is pivotal to productivity and innovation. Generative AI and accelerated GPU computing have become integral in countless industries including healthcare, automotive, manufacturing, and more.

NVIDIA Blackwell is designed to address the increased needs of AI for the ever-increasing size of AI models, speed for inferencing complex LLMs, while delivering magnitudes better performance and efficiency. We will go over the various NVIDIA Blackwell deployments in another blog post. Blackwell is planned to ship by the end of the year.

Fueling Innovation with an Exxact Multi-GPU Server

Training AI models on massive datasets can be accelerated exponentially with the right system. It's not just a high-performance computer, but a tool to propel and accelerate your research.

Configure Now