Introduction

Multi-node computing deployments require a robust networking infrastructure to ensure efficient node communication, minimize latency, and maximize throughput. Whether you are building an HPC cluster, AI training farm, or large-scale data processing system, careful networking design is essential. Here are the key considerations when designing the network for a multi-node computing environment.

Network Bandwidth and Speed Requirements

Choosing the right network bandwidth is crucial to prevent bottlenecks and ensure efficient data transfer:

- Gigabit Ethernet (1GbE): Suitable for light workloads but not ideal for compute-heavy clusters. Gigabit Ethernet is the bare minimum for end devices like laptops and workstations, and a 1GbE port is included on most motherboards.

- 10GbE/25GbE: 10 Gigabit and 25 Gigabit Ethernet are a large step up from 1GbE and a common choice for small-to-medium clusters, balancing performance and cost. The higher speed shortens large file transfers in workloads such as 3D model uploads, video editing, and engineering simulation. 10GbE is available with the classic RJ45 Ethernet connector or SFP+ ports, while 25GbE typically uses SFP28 ports.

- InfiniBand (HDR, NDR): Provides ultra-low latency and high throughput (nominally 200 Gb/s per port for HDR and 400 Gb/s for NDR), crucial for AI/ML and HPC workloads. Because these workloads are data intensive and constantly move data between nodes, transfers must keep pace with computation for the cluster to scale.

- Fiber Optics: Offers high bandwidth and long-distance connectivity through optical cables. Fiber is a cabling medium rather than a separate speed tier, and it can carry both Ethernet and InfiniBand links. Ideal for data center interconnects and campus networks where distances exceed copper cable limitations. Fiber provides excellent signal integrity and immunity to electromagnetic interference.

Understanding these options helps determine the best fit based on workload demands and budget constraints.
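
To put the options above in perspective, here is a minimal Python sketch that estimates the best-case time to move a dataset over each link type. The line rates are nominal figures, the 100 GB dataset size is a hypothetical value chosen for illustration, and the function name is our own. Real-world throughput will be lower once protocol overhead and storage speed are factored in.

```python
# Nominal line rates in gigabits per second (Gb/s). These are best-case
# figures; achievable throughput is lower because of protocol overhead.
LINK_RATES_GBPS = {
    "1GbE": 1,
    "10GbE": 10,
    "25GbE": 25,
    "InfiniBand HDR": 200,
    "InfiniBand NDR": 400,
}


def transfer_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Best-case time to move `size_gigabytes` (decimal GB) at `rate_gbps`."""
    if rate_gbps <= 0:
        raise ValueError("rate_gbps must be positive")
    size_gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return size_gigabits / rate_gbps


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size, for illustration only
    for name, rate in LINK_RATES_GBPS.items():
        print(f"{name:>15}: {transfer_seconds(dataset_gb, rate):8.1f} s")
```

Running the same calculation with your own dataset sizes is a quick way to check whether a link tier is likely to become the bottleneck before committing to hardware.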

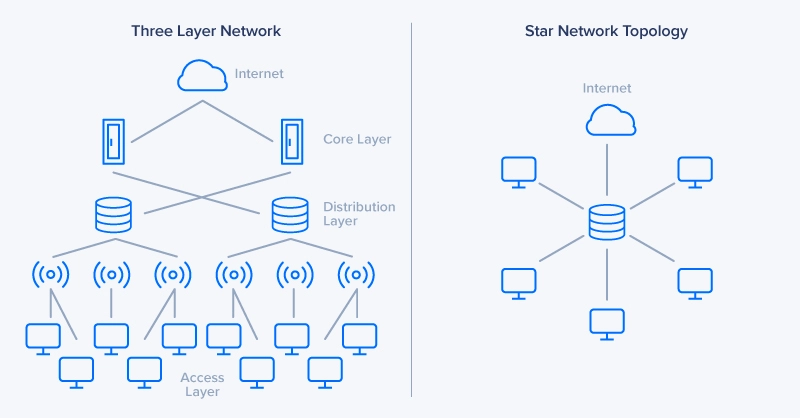

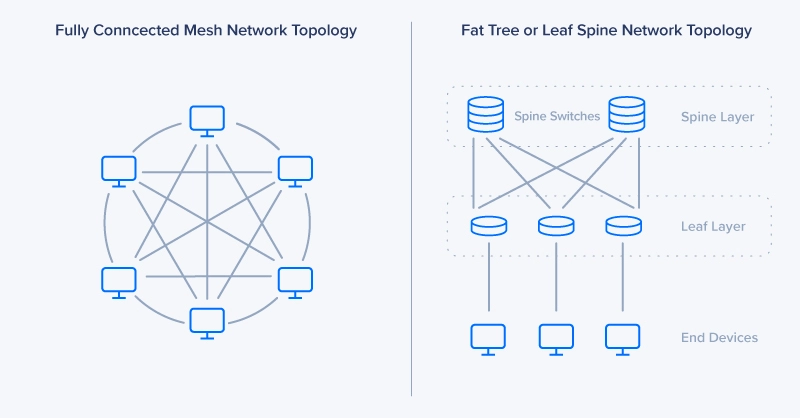

Choosing the Right Network Topology

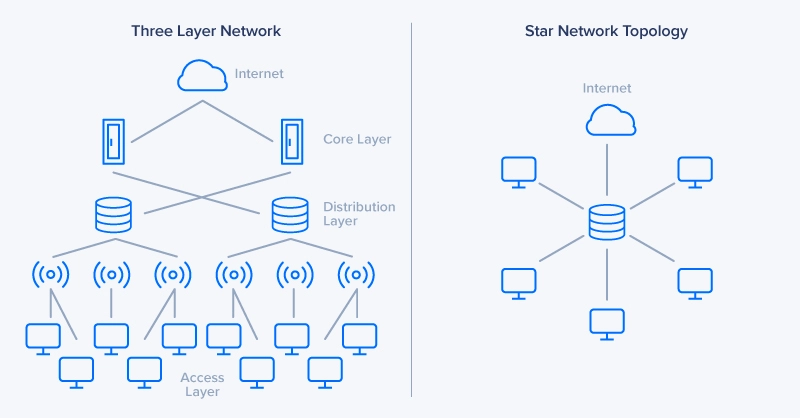

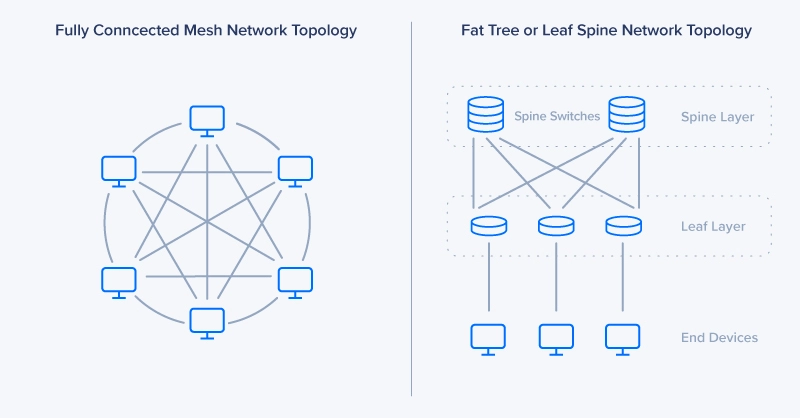

The network topology determines how nodes communicate and how traffic is routed. Selecting the right topology ensures efficient data flow and scalability:

- Star Topology – All nodes connect to a central switch. This is simple to set up and easy to manage, making it suitable for small deployments but prone to bottlenecks at the central switch.

- Fat-Tree Topology – A hierarchical design that reduces congestion by providing multiple paths between nodes. Common in HPC clusters, it ensures high bandwidth and low-latency communication.

- Full-Mesh Topology – Every node connects directly to every other node. This setup minimizes latency, but the number of links grows quadratically with cluster size (n(n-1)/2 links for n nodes), making it impractical for large-scale deployments.

- Hybrid Topologies – A mix of multiple topologies, optimized for specific workloads, offering a balance between cost, complexity, and performance.

Here's a comparison of the advantages and disadvantages of each topology:

| Topology | Pros | Cons |
| --- | --- | --- |
| Star Topology | Simple to implement and manage | Single point of failure at central switch |
| Fat-Tree Topology | Excellent scalability | Complex implementation |
| Full-Mesh Topology | Lowest possible latency | Very expensive to implement |
| Hybrid Topology | Flexible and customizable | Complex design process |
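
The cost and scalability trade-offs above can be made concrete by counting links and switches. The following Python sketch is an illustration under stated assumptions: the function names are our own, and the fat-tree figures assume the textbook three-tier k-ary fat-tree built from identical k-port switches, which may differ from what a given vendor design uses.

```python
def star_links(nodes: int) -> int:
    """A star needs one link from each node to the central switch."""
    return nodes


def full_mesh_links(nodes: int) -> int:
    """A full mesh needs one link per pair of nodes: n * (n - 1) / 2."""
    return nodes * (nodes - 1) // 2


def fat_tree_capacity(ports_per_switch: int) -> dict:
    """Size of a three-tier k-ary fat-tree built from identical k-port switches."""
    k = ports_per_switch
    if k < 2 or k % 2:
        raise ValueError("ports_per_switch must be an even number >= 2")
    return {
        "hosts": k ** 3 // 4,                # k pods * k/2 edge switches * k/2 hosts
        "edge_switches": k * k // 2,         # k pods * k/2 edge switches
        "aggregation_switches": k * k // 2,  # k pods * k/2 aggregation switches
        "core_switches": (k // 2) ** 2,
    }


if __name__ == "__main__":
    for n in (8, 64, 512):
        print(f"{n:4d} nodes: star={star_links(n)} links, "
              f"full mesh={full_mesh_links(n)} links")
    print("48-port fat-tree:", fat_tree_capacity(48))
```

The quadratic growth of the full mesh is what rules it out at scale, while the fat-tree reaches thousands of hosts with commodity switches at the price of more cabling and configuration work.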

Latency, Performance, & Redundancy

Reducing latency is vital in distributed computing because it directly affects overall system performance and user experience. High latency can delay data processing, slow down distributed applications, and create bottlenecks in system operations. Redundancy, a standard practice elsewhere in the data center, applies to networking as well: eliminating single points of failure and adding resiliency through redundant NICs and switches is essential for when things go wrong. Minimizing latency and ensuring consistent performance are crucial for efficiency in:

- Real-time processing applications where immediate response is critical

- Machine learning and AI workloads that require frequent model parameter updates

- High-performance computing tasks with intensive node-to-node communication

- Database operations requiring rapid data synchronization across nodes
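
Why latency matters so much for these workloads can be seen with a simple cost model: the time to deliver a message is roughly a fixed latency plus the time to push its bytes onto the wire. The Python sketch below uses that simplified model. The function names, the 2 microsecond latency, and the 100 Gb/s bandwidth are hypothetical values for illustration, not measurements of any particular fabric.

```python
def message_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Estimated one-way delivery time in microseconds: latency + serialization."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth_gbps must be positive")
    # 1 Gb/s moves 1000 bits per microsecond.
    serialization_us = message_bytes * 8 / (bandwidth_gbps * 1000)
    return latency_us + serialization_us


def latency_share(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Fraction of the delivery time spent on fixed latency (0.0 to 1.0)."""
    total_us = message_time_us(message_bytes, latency_us, bandwidth_gbps)
    return latency_us / total_us if total_us > 0 else 0.0


if __name__ == "__main__":
    latency_us, bandwidth_gbps = 2.0, 100  # hypothetical fabric characteristics
    for size in (4 * 1024, 1024 ** 2, 1024 ** 3):  # 4 KiB, 1 MiB, 1 GiB
        share = latency_share(size, latency_us, bandwidth_gbps)
        print(f"{size:>12} bytes: {share:6.1%} of delivery time is latency")
```

Under this model, small and frequent messages are dominated by latency, while bulk transfers are dominated by bandwidth, which is why workloads with frequent node-to-node synchronization benefit most from low-latency interconnects.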

Performance optimization requires a holistic approach, considering both hardware and software aspects of the network infrastructure. Network congestion, protocol overhead, and physical distance between nodes all contribute to overall latency. Methods to address and minimize latency in multi-node computing environments include:

- Network Interface Card (NIC) Optimization – Using hardware offloading features, interrupt moderation, and proper driver configurations to reduce CPU overhead and improve packet processing speed.

- Buffer Management – Implementing intelligent buffer allocation and queue management to prevent bufferbloat while maintaining optimal throughput.

- Protocol Tuning – Adjusting TCP/IP stack parameters, window sizes, and other protocol-specific settings to optimize for your specific network conditions and workload patterns.

- Physical Layer Optimization – Using high-quality cables, maintaining proper cable lengths, and ensuring clean signal integrity to minimize physical transmission delays.

- Network Congestion Control – Implementing advanced congestion control algorithms and traffic engineering to prevent network saturation and maintain consistent performance.
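
For the protocol tuning item above, a common starting point when sizing TCP windows is the bandwidth-delay product (BDP): the amount of data that must be in flight to keep a link full. The sketch below computes it in Python using integer arithmetic. The function name and the example link speeds and round-trip times are illustrative assumptions; measure your own round-trip time before tuning.

```python
def bandwidth_delay_product_bytes(bandwidth_gbps: int, rtt_us: int) -> int:
    """Bytes that must be in flight to fill a link of `bandwidth_gbps`
    given a round-trip time of `rtt_us` microseconds."""
    if bandwidth_gbps <= 0 or rtt_us < 0:
        raise ValueError("bandwidth must be positive and RTT non-negative")
    # 1 Gb/s = 1000 bits per microsecond, so bits in flight = Gb/s * 1000 * us.
    bits_in_flight = bandwidth_gbps * 1000 * rtt_us
    return bits_in_flight // 8


if __name__ == "__main__":
    # Hypothetical link speeds (Gb/s) and round-trip times (microseconds).
    for gbps, rtt_us in ((10, 100), (25, 100), (10, 1000)):
        bdp = bandwidth_delay_product_bytes(gbps, rtt_us)
        print(f"{gbps} Gb/s, RTT {rtt_us} us -> window of at least {bdp} bytes")
```

If the TCP window is smaller than the BDP, the sender idles while waiting for acknowledgements and the link cannot be saturated. On Linux, socket buffer limits such as `net.core.rmem_max` and `net.ipv4.tcp_rmem` bound how large the window can grow.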

Monitoring and Management for Efficiency

Implementing robust monitoring and management systems is crucial for maintaining optimal network performance. Here are key tools and strategies:

- SNMP and Telemetry – Provides real-time network health and performance tracking through:

  - Bandwidth utilization monitoring

  - Error rate detection

  - Device health metrics

- Traffic Shaping and QoS (Quality of Service) – Prioritizes critical workloads over less important traffic by:

  - Setting bandwidth limits for different applications

  - Implementing packet prioritization

  - Managing congestion through intelligent queuing

- Automated Troubleshooting Tools – AI-driven diagnostics for proactive network maintenance, featuring:

  - Predictive analytics for potential failures

  - Automated root cause analysis

  - Real-time alert systems
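
Bandwidth utilization monitoring, mentioned above, usually comes down to sampling an interface's octet counter twice and converting the difference into a percentage of link speed. Here is a minimal Python sketch of that arithmetic. It assumes 64-bit octet counters such as the IF-MIB `ifHCInOctets` object, and it takes the counter values as plain integers; how you collect them (SNMP library, streaming telemetry, or reading host counters) is left out, and the function name is our own.

```python
COUNTER64_MODULUS = 2 ** 64  # 64-bit counters wrap back to zero at this value


def utilization_percent(octets_before: int, octets_after: int,
                        interval_seconds: float, link_speed_bps: int) -> float:
    """Average link utilization between two octet-counter samples."""
    if interval_seconds <= 0 or link_speed_bps <= 0:
        raise ValueError("interval and link speed must be positive")
    # The modulo handles a single counter wrap between the two samples.
    delta_octets = (octets_after - octets_before) % COUNTER64_MODULUS
    bits_per_second = delta_octets * 8 / interval_seconds
    return 100.0 * bits_per_second / link_speed_bps


if __name__ == "__main__":
    # Hypothetical samples taken 10 seconds apart on a 10 Gb/s port.
    print(utilization_percent(0, 1_250_000_000, 10, 10_000_000_000), "%")
```

Sampling at a fixed interval and alerting when utilization stays above a chosen threshold is a simple way to spot links that are approaching saturation.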

Regular monitoring and management not only helps maintain network performance but also aids in capacity planning and future infrastructure decisions. Using these tools effectively can significantly reduce downtime and improve overall system reliability.
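
The bandwidth limits used in traffic shaping are commonly enforced with a token bucket. The Python sketch below illustrates the idea only. It is not how any particular switch or operating system implements QoS, the class and parameter names are our own, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Allow traffic at an average `rate_bytes_per_s` with bursts up to `burst_bytes`."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0            # timestamp of the last refill, in seconds

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True if a packet of `packet_bytes` may be sent at time `now`."""
        elapsed = max(0.0, now - self.last)
        # Refill tokens for the elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
    for t in (0.0, 0.5, 1.5):
        print(f"t={t}s: 1500-byte packet allowed -> {bucket.allow(1500, t)}")
```

When a packet is not allowed, a shaper would queue it and retry later, while a policer would drop or re-mark it. Giving each traffic class its own bucket is one way to cap less important traffic without starving critical workloads.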

Conclusion

A well-structured networking infrastructure is the backbone of any successful multi-node computing deployment. By carefully selecting your topology, optimizing for latency, ensuring scalability, securing your network, and implementing proactive monitoring, you can build a resilient, high-performance system. Whether you're planning a new deployment or upgrading existing infrastructure, Exxact's expertise is available to help configure and implement the right networking solution for your specific needs. Investing in a robust networking strategy today supports seamless communication and helps future-proof your infrastructure for tomorrow's computing demands.


Networking Considerations for Multi-Node Computing Deployments
