Introduction

Running an AI, machine learning, or rule-based model reliably across different machines should not be taken for granted. More often than not, you're running your model on a deployment machine that's completely different from your development environment.

When you finalize a model and send it off to run analyses and generate predictions, the drivers and dependencies on the deployment machine need to be compatible with the ones you developed against. Docker containers bundle the model together with its software dependencies, which makes deployment far more predictable and repeatable.
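To make the mismatch problem concrete, here is a minimal sketch that compares pinned dependency lists from two machines and reports every difference. The package lists, the `name==version` format, and the function names are assumptions made for illustration; they are not part of Docker.

```python
def parse_pins(text):
    """Parse 'name==version' lines into a dict, skipping blanks and comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def diff_pins(dev, prod):
    """Return {package: (dev_version, prod_version)} for every mismatch.

    A package that is missing on one side is reported with None for that side.
    """
    mismatches = {}
    for name in sorted(set(dev) | set(prod)):
        if dev.get(name) != prod.get(name):
            mismatches[name] = (dev.get(name), prod.get(name))
    return mismatches


# Hypothetical dependency lists for a development and a deployment machine.
DEV = """
numpy==1.26.4
scikit-learn==1.4.2
torch==2.2.0
"""
PROD = """
numpy==1.24.0
scikit-learn==1.4.2
"""

if __name__ == "__main__":
    for pkg, (dev_v, prod_v) in diff_pins(parse_pins(DEV), parse_pins(PROD)).items():
        print(f"{pkg}: dev={dev_v} prod={prod_v}")
```

Shipping the dependencies inside the container image avoids this kind of drift, because both machines run the same image instead of two separately installed environments.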

While Docker containers have made a huge mark in the software deployment, DevOps, and data center space, we want to revisit what makes them special in modern computing environments.

What is a Docker Container?

A container platform such as Docker is a tool that creates, manages, and runs code and applications in isolated computing environments called containers. It allows us to define and snapshot an environment's dependencies, then deploy our model with those same dependencies across multiple machines or share it for community collaboration. Containers simplify data center management for DevOps and software deployment in on-prem and cloud environments.

Simply put, a container is a self-contained, isolated environment that packages all necessary code and dependencies.

A container has five main features:

- Self-contained: A container isolates applications from their environment and infrastructure. We don't need to rely on pre-installed dependencies on the host machine. Everything needed is within the container, ensuring the application runs regardless of infrastructure.

- Isolated: The container operates with minimal impact on the host and other containers, and vice versa.

- Independent: We can manage containers independently—deleting one container doesn't affect others.

- Portable: Since containers carry their own dependencies, the same image runs on any machine with a compatible container runtime, kernel, and CPU architecture, and can be moved between systems with little effort.

- Lightweight: Containers share the host machine's operating system kernel instead of booting a full guest OS, which avoids partitioning hardware resources up front.

For example, when we package our ML model locally and push it to the cloud, Docker lets the model run wherever a compatible container runtime is available. This brings several advantages: faster model delivery, better reproducibility, and easier collaboration, all because anybody can load the same image with identical dependencies regardless of where the container runs.
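As a rough illustration of what "packaging" means, the sketch below renders a small Dockerfile for a Python model. The base image tag, `requirements.txt`, and `predict.py` are hypothetical names chosen for this example; a real project would substitute its own files and base image.

```python
def render_dockerfile(base_image, requirements_file, entrypoint_script):
    """Render a minimal Dockerfile for a Python model as a string.

    All three arguments are placeholders supplied by the caller.
    """
    lines = [
        f"FROM {base_image}",                    # starting image with Python installed
        "WORKDIR /app",                          # working directory inside the image
        f"COPY {requirements_file} .",           # copy the dependency list first
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        "COPY . .",                              # then copy the model code
        f'CMD ["python", "{entrypoint_script}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    print(render_dockerfile("python:3.11-slim", "requirements.txt", "predict.py"))
```

Copying the dependency list before the rest of the code is a common layering choice: the install step can be reused from cache when only the model code changes.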

Docker Architecture

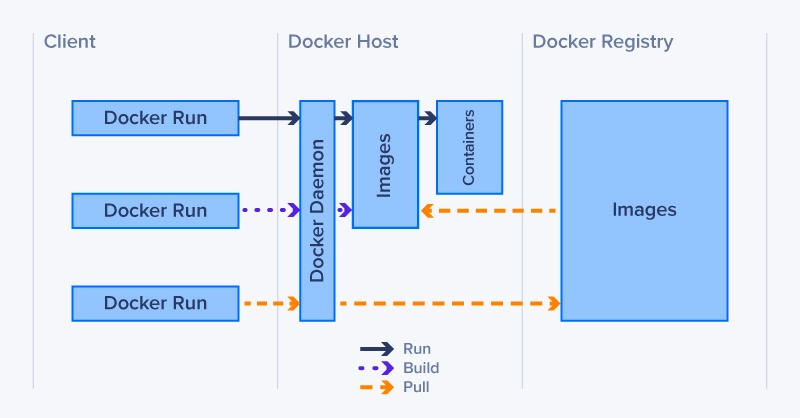

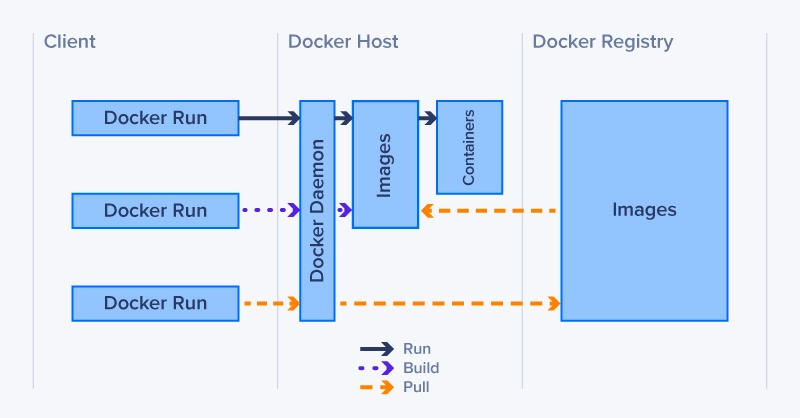

Docker's architecture consists of three main components: the Client, Host, and Registry. Let's explore how these components work together:

1. Docker Client

The Docker Client is the primary way users interact with Docker. It accepts commands like 'docker build' or 'docker run' and communicates with the Docker daemon. The client can communicate with multiple daemons, either locally or remotely.

2. Docker Host

The Docker Host contains several key components:

- Docker Daemon (dockerd): The daemon is a persistent process that manages Docker containers. It handles container operations, networking, and storage. The daemon listens for Docker API requests and manages Docker objects.

- Images: Read-only templates used to create containers. Images contain everything needed to run an application - code, runtime, libraries, environment variables, and config files.

- Containers: Runnable instances of Docker images. Containers are isolated environments that run applications, created from images. They can be started, stopped, moved, or deleted.

3. Docker Registry

The Docker Registry is a repository for Docker images. Docker Hub is the default public registry, but organizations often maintain private registries. 'docker pull' downloads an image from the configured registry, and 'docker run' does the same when the requested image is not already available on the host.
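How an image name maps to a registry can be sketched with a small parser. This is a simplified approximation of Docker's naming defaults (Docker Hub as the default registry, `library/` for official images, `latest` as the default tag); it does not handle digests such as `@sha256:...`, and `registry.example.com` is a made-up host.

```python
def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digest references are not supported.
    """
    first, slash, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # The tag, if any, follows a colon in the last path component.
    head, sep, last = remainder.rpartition("/")
    name, _, tag = last.partition(":")
    repository = f"{head}/{name}" if sep else name

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"
    return registry, repository, tag or "latest"


if __name__ == "__main__":
    for ref in ["ubuntu", "python:3.11-slim", "registry.example.com:5000/ml/model:2"]:
        print(ref, "->", parse_image_ref(ref))
```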

These components work together seamlessly: The client sends commands to the daemon, which manages images and containers. When needed, the daemon pulls images from or pushes them to registries. This architecture enables Docker's powerful containerization capabilities while maintaining simplicity and efficiency.
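The flow described above corresponds to a handful of client commands. The sketch below only builds the argument lists for `docker build`, `docker push`, `docker pull`, and `docker run`; it does not execute them, so it works without Docker installed. The image tag and port numbers are hypothetical.

```python
import shlex


def build_cmd(tag, context="."):
    """Ask the daemon to build an image from the Dockerfile in `context`."""
    return ["docker", "build", "-t", tag, context]


def push_cmd(tag):
    """Ask the daemon to upload the image to its registry."""
    return ["docker", "push", tag]


def pull_cmd(tag):
    """Ask the daemon to download the image from its registry."""
    return ["docker", "pull", tag]


def run_cmd(tag, ports=None, remove=True):
    """Ask the daemon to start a container from the image.

    ports maps host ports to container ports; remove adds --rm so the
    container is deleted when it exits.
    """
    cmd = ["docker", "run"]
    if remove:
        cmd.append("--rm")
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(tag)
    return cmd


if __name__ == "__main__":
    tag = "registry.example.com/ml/model:1.0"  # hypothetical image name
    for cmd in (build_cmd(tag), push_cmd(tag), pull_cmd(tag), run_cmd(tag, {8080: 80})):
        print(shlex.join(cmd))
```

On a machine with Docker installed, each list could be passed to `subprocess.run(cmd, check=True)` to have the client send the request to the daemon.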

Containers vs VMs (Virtual Machines)

While containers may sound similar to virtual machines (VMs), they operate differently. A VM is a complete virtual computer with its own operating system, created by a hypervisor that partitions physical hardware resources, typically with a fixed allocation of RAM and CPU. VMs are usually larger and take longer to start up since they need to boot an entire OS. The two approaches can also be combined: you can run containers within a VM.

Containers abstract at the application layer, sharing the host's operating system kernel while running as isolated processes. Since containers don't boot their own operating system, they utilize computing resources more efficiently and reduce overhead. They're significantly smaller, typically start in seconds or less, and offer more flexible resource sharing between applications.
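One way to observe the shared kernel is to print the kernel release from inside and outside a container. The short sketch below uses only Python's standard library; the function name is our own. On a Linux host, running it directly and then inside a container on that host would typically report the same kernel release, while a VM reports its guest kernel. With Docker Desktop on macOS or Windows, containers run inside a Linux VM, so the script reports that VM's kernel instead.

```python
import platform


def kernel_info():
    """Return basic facts about the kernel this process is running on."""
    uname = platform.uname()
    return {
        "system": uname.system,           # e.g. Linux
        "kernel_release": uname.release,  # kernel version string
        "machine": uname.machine,         # CPU architecture
    }


if __name__ == "__main__":
    for key, value in kernel_info().items():
        print(f"{key}: {value}")
```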

Common Container Use Cases

Docker has become a standard tool in software development and operations due to its versatility. Here are some of the most common use cases:

- CI/CD Pipelines: Docker streamlines continuous integration and deployment by providing consistent environments across stages. Developers can test code in containers that mimic production.

- Microservices Architecture: Containers are well suited to microservices. Each service can run in its own isolated container, making scaling and updates easier without affecting other services.

- Environment Replication: Docker lets development, testing, and production environments run from the same image, reducing bugs related to environment mismatch.

- Application Sandboxing: Developers can test applications or tools in isolated containers without worrying about affecting the host system.

The standardization Docker provides reduces environment inconsistencies across development and production, while its lightweight nature allows for efficient resource utilization and load balancing. With the ability to quickly scale containers up or down, organizations can adapt to changing workload demands.

Cloud Services and Container Orchestration

Docker containers are particularly well-suited for cloud-native applications and modern cloud infrastructure. Major cloud providers like AWS, Google Cloud, and Azure offer container orchestration services that seamlessly integrate with Docker, allowing organizations to deploy and manage containerized applications at scale. These services provide automated container deployment, load balancing, and self-healing capabilities.
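Self-healing in an orchestrator is commonly described as a reconciliation loop: compare the desired state with what is actually running, then start or stop containers to close the gap. The sketch below is a toy model of that idea only. It is not the API of any cloud service or orchestrator, and the service names and container IDs are invented.

```python
def reconcile(desired, running):
    """Return the actions needed to move from the running state to the desired state.

    desired: {service: replica_count}
    running: {service: [container_id, ...]} listing healthy containers only
    """
    actions = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        have = list(running.get(service, []))
        if len(have) < want:
            # A crashed or missing replica is replaced by starting a new one.
            actions += [("start", service)] * (want - len(have))
        elif len(have) > want:
            # Surplus containers beyond the desired count are stopped.
            actions += [("stop", container_id) for container_id in have[want:]]
    return actions


if __name__ == "__main__":
    desired = {"api": 3, "worker": 1}
    running = {"api": ["api-1"], "worker": ["w-1", "w-2"], "old": ["old-1"]}
    for action in reconcile(desired, running):
        print(action)
```

Running the same function repeatedly against fresh observations is what gives the behavior its self-healing character: whenever a container disappears, the next pass schedules a replacement.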

Cloud-native applications designed specifically for container environments can take full advantage of microservices architecture, enabling rapid development and deployment cycles. Organizations can leverage container registries in the cloud to store and distribute Docker images, facilitating collaboration across global teams and ensuring consistent deployment across different cloud regions.

Conclusion

Docker containers have transformed modern software deployment by providing a standardized, efficient way to package and run applications. Their ability to encapsulate dependencies, ensure consistency across environments, and maintain isolation has made them indispensable in today's computing landscape. Docker containers’ lightweight nature and seamless integration with cloud services have revolutionized how organizations develop, test, and deploy applications.

From streamlining DevOps workflows to enabling microservices architecture, Docker continues to drive innovation in software deployment. Its robust ecosystem, combined with powerful orchestration tools, provides organizations with the flexibility and reliability needed in today's fast-paced development environment. As containerization technology evolves, Docker remains at the forefront of efficient, secure, and scalable application deployment solutions.


Docker Containers’ Importance in Software Deployment
