This blog is written by engineer Jeff Collins at SimuTech Group, who ran the Ansys Fluent benchmarks on Exxact hardware featuring NVIDIA GPUs. Visit SimuTech for the original blog or any of your engineering needs.

Executive Summary

When our friends at NVIDIA offered us the chance to test the Ansys Fluent GPU solver on their cutting-edge hardware, we couldn’t resist accepting the challenge! Special thanks to Exxact Corp for setting up and configuring a workstation so that our SimuTech engineers could jump straight into testing. Our experiments showed that a single GPU can yield solve speeds equivalent to over 400 CPU-cores and reduce total energy consumption by 67%. In the coming years, GPU solvers have the potential to replace CPUs as the standard hardware for engineering simulation.

CPU vs GPU Simulation Hardware and Testing Conditions

The workstation supplied by Exxact Corporation for testing was equipped with two Xeon Silver 4416+ CPUs (20 cores each, 40 cores total) and four NVIDIA H100 NVL GPUs. Except for the multi-GPU experiment described later, only a single GPU card was used.

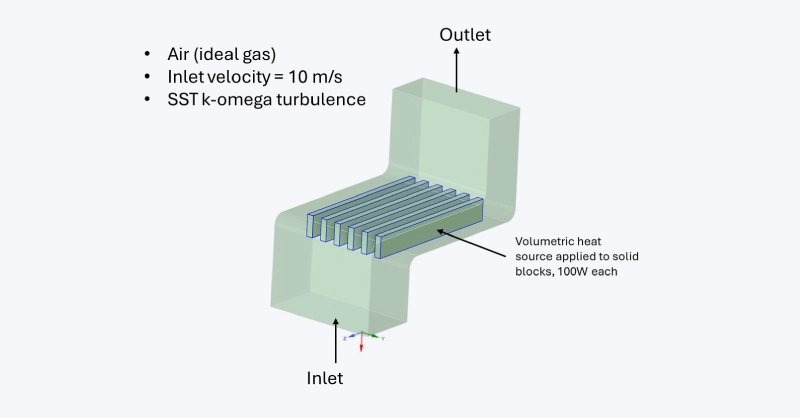

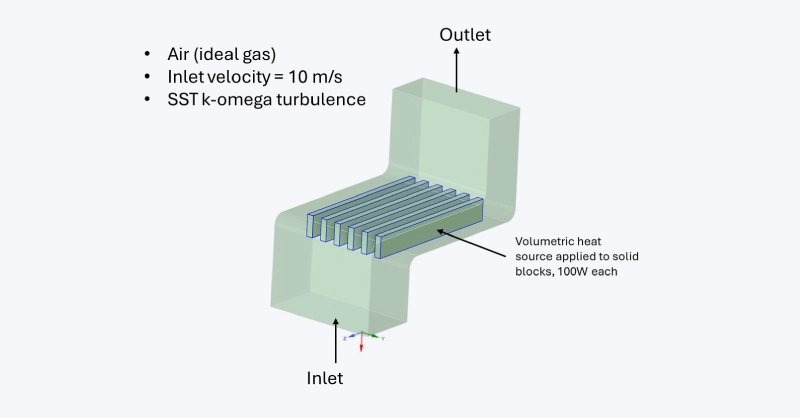

Fluent models included five steady-state test cases obtained from Ansys, with mesh sizes ranging from 2 million to 14 million elements. Steady-state physics included turbulent external aerodynamics and frame motion. A sixth test case, created by SimuTech to test the transient solver, consisted of 27 million mesh elements and used SST k-omega turbulence, conjugate heat transfer, and compressible air as the working fluid. The transient geometry and simulated conditions are shown:

CPU vs GPU Performance Comparison

Figure 2 compares wall-clock time per iteration for 40 CPU cores versus a single GPU. Case names indicate both the physics models and the mesh count. For the external aerodynamics cases, the GPU solver runs between 3x and 10x faster than the CPU.

For cases involving frame motion, GPU performance gains were similar for the 2 million and 4 million cell cases, yielding 10.3x and 8.7x speedups, respectively.

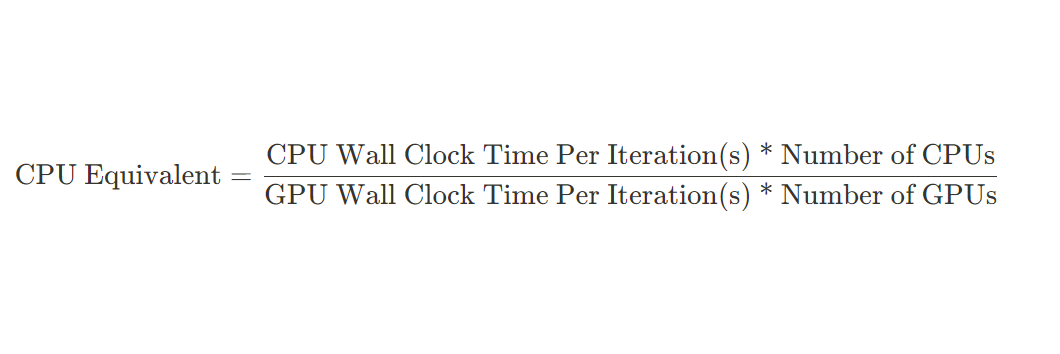

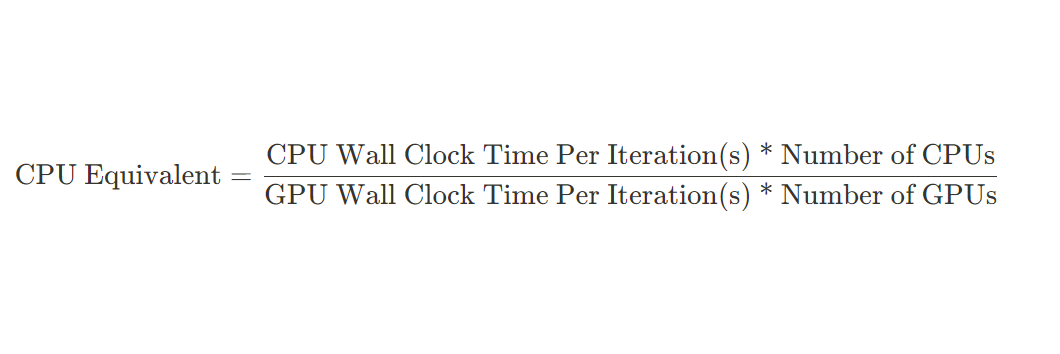

To better generalize the performance gains, Figure 3 reports the solution speed in terms of CPU equivalent. CPU equivalency was calculated as:

A single GPU performs at an equivalent of 124 CPU-cores in the worst case (Aerodynamics 2m), and up to 412 CPU-cores in the best case (Frame Motion 2m).
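The quoted figures are consistent with multiplying the measured speedup by the 40-core CPU baseline (10.3 x 40 = 412). The sketch below works through that arithmetic; the function names are hypothetical, and the formula is an assumption inferred from the reported numbers rather than a confirmed definition. Note that this form implicitly treats CPU performance as scaling linearly with core count.

```python
def speedup(cpu_seconds_per_iter: float, gpu_seconds_per_iter: float) -> float:
    """Ratio of CPU to GPU wall-clock time per iteration."""
    if cpu_seconds_per_iter <= 0 or gpu_seconds_per_iter <= 0:
        raise ValueError("times per iteration must be positive")
    return cpu_seconds_per_iter / gpu_seconds_per_iter


def cpu_equivalent_cores(speedup_factor: float, baseline_cores: int = 40) -> float:
    """Assumed form: speedup over the CPU baseline times the baseline core count."""
    return speedup_factor * baseline_cores


# Frame Motion 2m: reported 10.3x speedup on the 40-core baseline.
best_case = cpu_equivalent_cores(10.3)
print(round(best_case))  # 412

# Aerodynamics 2m: the reported 124 CPU-cores implies this speedup.
worst_case_speedup = 124 / 40
print(worst_case_speedup)  # 3.1
```

Under this reading, the worst-case and best-case equivalents correspond to the lower and upper ends of the 3x to 10x range reported for the steady-state cases.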

Energy Consumption Benefits

In addition to faster solve times, GPU simulation can also reduce total hardware energy consumption. For the Transient CHT case, the GPU solve reduced hardware energy consumption by 67%, from 2.32 kWh to 0.77 kWh. Performance data is summarized in Table 1. A solution animation is included in Figure 4.

Table 1: Transient CHT case, 1000 time steps, ∆t = 0.001 s

| Hardware | CPU: 2x Xeon Silver 4416+ (40 cores) | GPU: 1x NVIDIA H100 NVL |
| --- | --- | --- |
| Average time per iteration | 4.521 s | 0.640 s |
| Total simulation wall-clock time | 6.6 hours | 1.6 hours |
| Total energy consumption (CPUs + GPUs) | 2.32 kWh | 0.77 kWh |
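The 67% figure can be checked directly from the table values. The short sketch below does that arithmetic and also derives the average power draw of each run; the dictionary layout and function names are illustrative only, and the derived averages inherit the rounding of the table entries.

```python
# Values copied from the table above (Transient CHT case, 1000 time steps).
cpu = {"wall_hours": 6.6, "energy_kwh": 2.32}
gpu = {"wall_hours": 1.6, "energy_kwh": 0.77}


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return 100.0 * (before - after) / before


def average_power_kw(energy_kwh: float, wall_hours: float) -> float:
    """Mean power over the run: energy divided by elapsed time."""
    return energy_kwh / wall_hours


energy_saving = percent_reduction(cpu["energy_kwh"], gpu["energy_kwh"])
time_saving = percent_reduction(cpu["wall_hours"], gpu["wall_hours"])
cpu_power = average_power_kw(cpu["energy_kwh"], cpu["wall_hours"])
gpu_power = average_power_kw(gpu["energy_kwh"], gpu["wall_hours"])

print(f"Energy reduction: {energy_saving:.0f}%")
print(f"Wall-clock reduction: {time_saving:.0f}%")
print(f"Average power, CPU run: {cpu_power:.2f} kW")
print(f"Average power, GPU run: {gpu_power:.2f} kW")
```

With these numbers the GPU run draws more power on average (roughly 0.48 kW against 0.35 kW) but finishes in about a quarter of the time, which is where the net energy saving comes from.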

Multi-GPU Performance

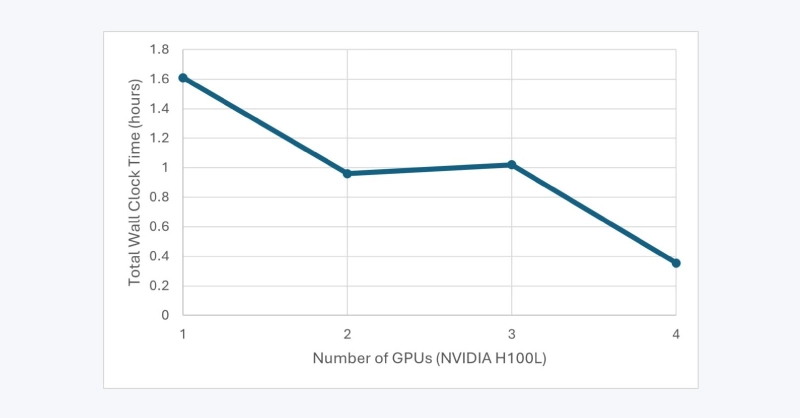

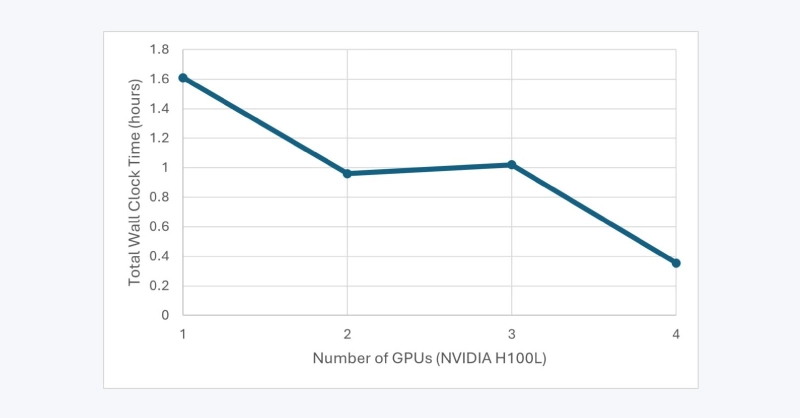

Like traditional CPU solvers, the GPU solver can run in parallel across multiple cards. As a final experiment, multi-GPU solver performance was explored for the Transient CHT 27m case. Figure 5 compares the total simulation wall-clock time required to solve 1000 time steps using 1, 2, 3, and 4 GPUs.

Compared with a single GPU, solving on two and four GPUs reduced total simulation time by 40% and 78%, respectively. In this case, solving on three GPUs offered no performance gain over solving on two.
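The reported percentage reductions can be converted into speedup and parallel efficiency. The sketch below takes the single-GPU run as the baseline for both percentages, which is an assumption of this example, and the function names are hypothetical.

```python
def speedup_from_reduction(fraction_saved: float) -> float:
    """Convert a fractional time reduction (0.40 = 40%) into a speedup factor."""
    if not 0.0 <= fraction_saved < 1.0:
        raise ValueError("fraction_saved must be in [0, 1)")
    return 1.0 / (1.0 - fraction_saved)


def parallel_efficiency(speedup_factor: float, n_gpus: int) -> float:
    """Speedup divided by device count; 1.0 means ideal linear scaling."""
    return speedup_factor / n_gpus


# Reported reductions in total wall-clock time, keyed by GPU count.
reported = {2: 0.40, 4: 0.78}

for n_gpus, saved in reported.items():
    s = speedup_from_reduction(saved)
    e = parallel_efficiency(s, n_gpus)
    print(f"{n_gpus} GPUs: {s:.2f}x speedup, {e:.0%} parallel efficiency")
```

Under this reading the four-GPU efficiency comes out above 100%, so the output is best treated as a consistency check on the quoted percentages rather than a measured scaling law.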

From a software perspective, load distribution and mesh partitioning are known challenges when scaling CPU solves across multiple cores and processors. Despite the clear advantages of GPU solvers, it would be unrealistic to expect them to be immune to non-linear scaling. As the technology continues to mature, multi-GPU scalability is expected to become more robust.

CPU vs GPU Simulation: Final Thoughts & the Future of GPU Solvers

In the test cases considered, a single GPU performed with solve speeds equivalent to over 400 CPU-cores. Performance gains can vary based on model size and physics. While the GPU solver does not yet support the full suite of Fluent physics models, more capabilities are added with each new release. In the coming years, GPUs have the potential to displace CPUs as the standard hardware for engineering simulation.

Exxact is an industry-leading compute solutions provider delivering custom-built and turnkey high-performance computing workstations, servers, and clusters. Ready to speed up your simulations and workflow productivity with GPU-accelerated computing? Invest in your hardware and build a system today.


CPU vs GPU in Ansys Fluent: Exxact, Simutech, & NVIDIA Collaboration
