Introduction

As artificial intelligence continues to advance, the accessibility of powerful tools to harness this technology becomes increasingly crucial for developers, researchers, and tech enthusiasts alike.

Large Language Models (LLMs) such as ChatGPT and Gemini, along with open-source alternatives such as Llama and Mistral, have changed how we interact with machine learning. These models serve as the backbone for a wide range of applications, from simple chatbots to sophisticated decision-making systems. Managing them, however, often requires considerable expertise, which poses a significant challenge for many users.

We will guide you through accessing these open-source models remotely. Ollama manages your models, the Ollama Web UI provides an interactive chat experience, and ngrok gives you remote access to the local environment created for your Ollama model.

What is Ollama?

Ollama is a user-friendly platform that simplifies the management and operation of LLMs, making cutting-edge AI accessible even to those with only a modest technical background. It acts not just as a tool but as a bridge to a broader understanding of AI’s potential, giving you a straightforward way to download, run, and experiment with open-source models on your own hardware.

What is Ngrok?

Ngrok is a powerful reverse proxy tool that facilitates secure and reliable remote access to local server setups. We will use ngrok to create a tunnel to your Ollama environment, allowing you to manage and interact with your LLM from any location.

Ngrok is crucial for developers who want to access their models on the go without needing to carry the compute everywhere. It provides a stable and secure connection to your AI tools regardless of physical location.

Getting Started with Ollama

We will focus primarily on two models: Llama 3 70B, for those with robust computational resources, and Mistral 7B, which suits beginners or those with limited resources. Whether you are a seasoned developer or a curious novice, this guide will walk you through setting up, running, and optimizing LLMs using Ollama and ngrok for remote access.

Here are links to the model pages in the Ollama library:

https://ollama.com/library/llama3

https://ollama.com/library/mixtral

https://ollama.com/library/mistral

Installation

Getting started with Ollama is straightforward, especially if you're using a Linux environment. Below, we detail the steps for Linux users and provide resources for those on other platforms.

Install Ollama on Linux:

1. Download and Install: Begin by opening your terminal and entering the following command. This script automates the installation of Ollama, ensuring a smooth setup process:

curl -fsSL https://ollama.com/install.sh | sh

This command retrieves the installation script directly from Ollama's website and runs it, setting up Ollama on your Linux system and preparing you for the exciting journey ahead.
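One way to confirm the install worked is to query the local Ollama server. The sketch below assumes the server listens on its default address, http://localhost:11434, and that its /api/version endpoint returns JSON with a version field. The helper names are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if yours differs.
OLLAMA_URL = "http://localhost:11434"


def parse_version(body: bytes) -> str:
    """Extract the version string from a /api/version JSON response."""
    return json.loads(body)["version"]


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 3.0):
    """Return the server version, or None if the server cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return parse_version(resp.read())
    except OSError:  # covers URLError, refused connections, and timeouts
        return None
```

Calling ollama_version() returns None when nothing is listening, which usually means the Ollama service has not started yet.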

2. Launch the Web UI: Once Ollama is installed, you can start the web-based user interface using Docker, which runs the Web UI in an isolated container:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

This command runs the Ollama Web UI in a Docker container, mapping the container's port 8080 to port 3000 on your host machine. The --restart always option ensures that the Docker container restarts automatically if it crashes or if you reboot your system. For more details, see the Web UI's GitHub repository (open-webui/open-webui, the source of the image used in the command).
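To make each flag in the Docker command easier to follow, here is a small annotated sketch that rebuilds the same command as an argument list. The function name and the host_port parameter are illustrative additions. Everything else mirrors the command above.

```python
import shlex


def webui_command(host_port: int = 3000) -> list[str]:
    """Build the docker run arguments for the Web UI container."""
    return [
        "docker", "run",
        "-d",                                  # run detached, in the background
        "-p", f"{host_port}:8080",             # host port -> container port 8080
        # Lets the container reach services on the host (such as Ollama)
        # through the name host.docker.internal.
        "--add-host=host.docker.internal:host-gateway",
        "-v", "open-webui:/app/backend/data",  # named volume that keeps accounts and chats
        "--name", "open-webui",                # container name for later docker commands
        "--restart", "always",                 # restart after crashes and reboots
        "ghcr.io/open-webui/open-webui:main",  # image to run
    ]


# Print the command as a single shell-ready line.
print(shlex.join(webui_command()))
```

If port 3000 is already taken on your machine, webui_command(8081) produces the same command with a different host port. The Web UI would then be reachable on that port instead.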

Installation for Other Platforms:

If you use macOS or Windows, or prefer a Docker-based installation even on Linux, Ollama supports several other installation methods. You can download an installer from the Ollama download site, or refer to the official Ollama GitHub repository for detailed instructions tailored to each platform. This repository includes comprehensive guides and troubleshooting tips to help you set up Ollama in a variety of environments.

Understanding the Web UI:

The Ollama Web UI is a key feature that provides a simple, intuitive interface, allowing you to interact seamlessly with your Large Language Models (LLMs). Designed for user-friendliness, the Web UI makes managing LLMs accessible to users of all technical levels, from beginners to advanced practitioners.

Accessing the Web UI:

To begin exploring the capabilities of Ollama, open your web browser and navigate to: http://localhost:3000

This address will direct you to the login page of the Ollama Web UI, hosted locally on your machine.

Setting Up the Account:

Upon reaching the login page, you will need to create an account. Since this environment is local, all user data remains securely stored within your Docker container. Feel free to use any preferred username, email, and password as this information does not need to be tied to any external accounts:

- Click on the 'Sign Up' button to register.

- Enter your chosen name, email, and password.

- Submit your details to create your account.

Interacting with the Interface:

Once logged in, the main interface will be displayed, where you can select an LLM to interact with. The user-friendly dashboard allows you to easily start conversations and explore different functionalities of each model.

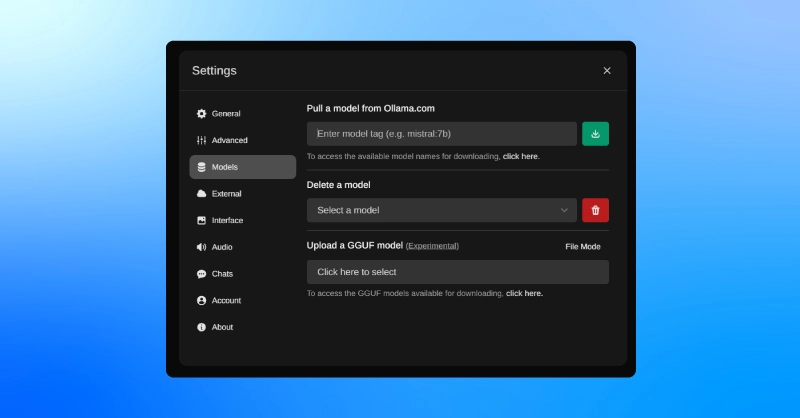

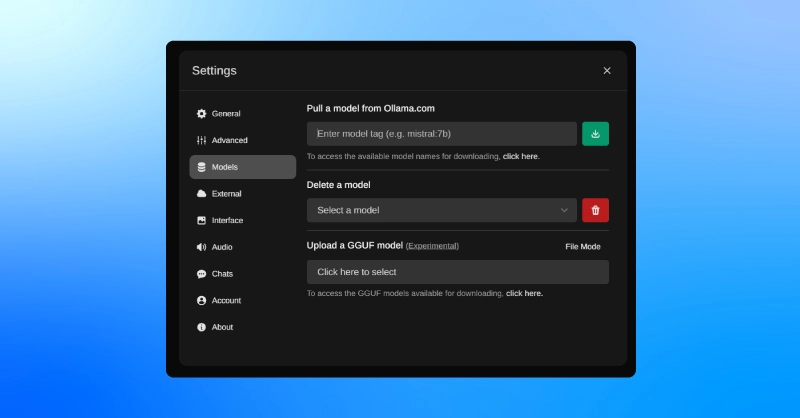

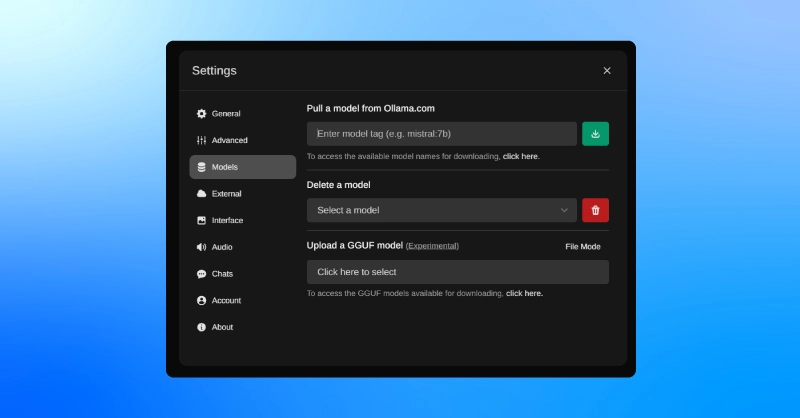

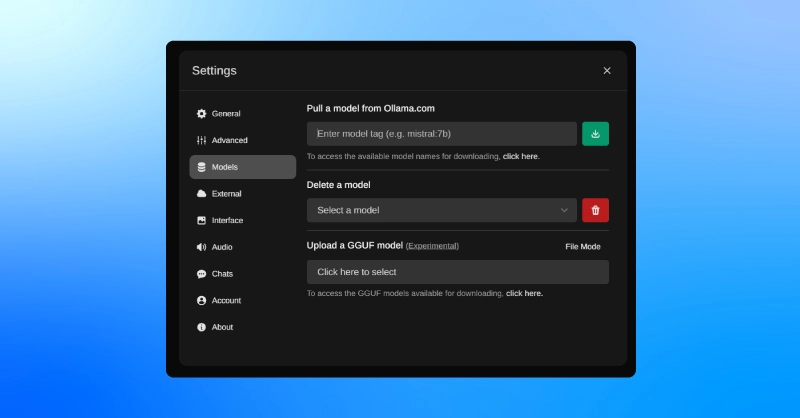

Downloading Additional Models:

If you wish to expand your selection of models:

- Navigate to the 'Settings' page, accessible from the main menu.

- Click on the 'Models' tab within the settings.

- Here, you can download additional models by entering the model tag provided on http://Ollama.com.

- Click 'Download' to initiate the process. Once the download is complete, the new model will appear on the main screen for selection.

The Ollama Web UI is designed to provide a robust yet straightforward environment for working with and learning about LLMs, enhancing your experience and making it easier to manage these complex models effectively.
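After a download finishes, you may want to confirm which models are installed without opening the browser. A minimal sketch, assuming the Ollama server is on its default address and that GET /api/tags returns a JSON object with a models list whose entries carry a name field. The sample payload is illustrative, not real output.

```python
import json
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Return the sorted model tags found in a /api/tags response."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def installed_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


# Illustrative response shape only; real responses include more fields.
sample = {"models": [{"name": "mistral:latest"}, {"name": "llama3:8b"}]}
print(model_names(sample))
```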

Choosing the Right LLM

When it comes to selecting the right Large Language Model, the decision largely depends on your specific needs and resources. Here are some considerations to keep in mind when choosing from Ollama's library:

- Model Size and Resource Requirements: Larger models like llama3:70b require significant computational power but offer more capabilities. In contrast, smaller models like llama3:8b are more manageable but might be less powerful.

- Task Specificity: Consider what you need the model to do. For detailed, complex tasks, a larger model might be necessary, while for general use, a smaller model could suffice.
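A rough back-of-the-envelope calculation can help when matching a model to your hardware. The sketch below estimates the memory needed for the weights alone as parameters times bits per weight divided by 8. It assumes nominal parameter counts and 4-bit quantization as one example setting. Actual usage is higher because of context memory and runtime overhead, so treat the numbers as a lower bound.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int = 4) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Nominal sizes of the models discussed in this guide.
for name, size in [("Mistral 7B", 7), ("Llama 3 8B", 8), ("Llama 3 70B", 70)]:
    print(
        f"{name}: ~{weight_memory_gb(size):.1f} GB at 4-bit, "
        f"~{weight_memory_gb(size, 16):.1f} GB at 16-bit"
    )
```

By this estimate, a 70B model needs roughly ten times the memory of a 7B model at the same precision, which is why the smaller models are the practical choice on a single consumer GPU.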

Ollama Use Case: Interacting with an LLM

Let’s consider a scenario where you want to interact with your LLM about a general topic. Here’s a simple way to do this:

- Configure Your Model:

- Select and Load Your LLM: In the Ollama Web UI, select the llama3:8b model from the list of available LLMs. This model offers a good balance between performance and resource usage, making it ideal for general inquiries.

- Load the Model: Click the option to load your selected model, preparing it for interaction.

- Set Up the Interaction:

- Access the Chat Interface: Navigate to the chat interface in the Ollama Web UI. This is where you will type your questions and receive answers.

- Craft Your Prompt: To initiate a conversation, type a straightforward prompt in the chat box.

- For example:

- Nutrition Advisor: "I want to eat healthier but struggle with meal planning. Could you act as my nutrition advisor and suggest a weekly meal plan?"

- Homework Tutor: "I need help with my physics homework. Can you be my tutor and explain the concepts of quantum mechanics?"

- Programming Assistant: "I'm stuck on a coding problem and need some help debugging my Python script. Could you act as my programming assistant?"

- Test and Refine:

- Interact with the LLM: Submit your prompt and review the responses from the LLM. The initial interactions can help you gauge the effectiveness of the model's understanding and output.

- Refine Your Prompts: Based on the responses, you may choose to refine your questions or ask follow-up questions to delve deeper into the topic.

This use case demonstrates a basic yet effective way to engage with an LLM using Ollama. Whether you are simply exploring AI capabilities or seeking specific information, the simplicity of Ollama’s interface allows for quick adjustments and interactive learning. Feel free to experiment with different questions and settings to discover the full potential of your LLM.
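The same kind of role-based prompt can also be sent to the model from a script instead of the Web UI. This is a minimal sketch, assuming the Ollama server is on its default address and that POST /api/chat accepts a model, a messages list, and a stream flag, returning the reply under message.content when streaming is off. The function names are illustrative.

```python
import json
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model: str, prompt: str, system=None) -> dict:
    """Build the JSON body for a single-turn chat request."""
    messages = []
    if system:
        # Optional system message that sets the assistant's role.
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    # stream=False asks for one complete JSON reply instead of chunks.
    return {"model": model, "messages": messages, "stream": False}


def chat(model: str, prompt: str, system=None,
         base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the request to the local server and return the reply text."""
    body = json.dumps(build_chat_request(model, prompt, system)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

For example, chat("llama3:8b", "Suggest a weekly meal plan.", system="You are a nutrition advisor.") would mirror the Nutrition Advisor prompt, provided the model has already been downloaded.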

Remote Access with ngrok

Providing remote access to your LLM setup can greatly enhance its usability, especially if you want to manage or interact with your model from different locations or devices. Ngrok is an invaluable tool for this purpose, as it creates a secure tunnel to your local server.

Here’s a detailed guide on how to set it up:

1. Install ngrok:

- Sign Up and Log In: Visit the ngrok website and sign up for an account. Once registered, log in to access your dashboard.

- Navigate to Setup: In your ngrok dashboard, locate the 'Getting Started' section under 'Setup and Installation.' This part of the dashboard is designed to guide new users through the initial setup process.

- Select Your Operating System: Choose the agent for Linux, which will display specific instructions tailored to Linux environments.

- Download and Install: Follow the detailed instructions provided to download and install the ngrok client on your Linux system.

2. Authenticate ngrok:

- Retrieve Your Authentication Token: After installation, your unique authentication token can be found on the ngrok dashboard. This token is essential for linking your local ngrok client to your ngrok account.

- Authenticate Your Client: Open a terminal and run the following command to add your authentication token to ngrok:

ngrok config add-authtoken [Your_Auth_Token]

- Replace [Your_Auth_Token] with the actual token provided. This step ensures that your sessions are secure and linked to your account.

3. Connect to Ollama:

- Run ngrok: Once authenticated, you can start an ngrok session to expose your Ollama Web UI to the internet. Ensure that your Ollama Web UI is running on port 3000, the host port mapped in the Docker command earlier. Then, in your terminal, execute:

ngrok http http://localhost:3000

- Access Ollama Remotely: ngrok will then provide a URL that you can use to remotely access your Ollama instance. This URL acts as a secure gateway to your local server, allowing you to manage and interact with your LLM from anywhere with internet access.

By following these steps, you can set up ngrok to provide secure and convenient remote access to your Ollama Web UI, greatly enhancing the flexibility and accessibility of your LLM management.
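While a tunnel is running, the ngrok agent also serves a local inspection API, which is handy if you want to fetch the public URL from a script rather than copy it from the terminal. The sketch assumes the agent's default local address, http://127.0.0.1:4040, and a /api/tunnels response containing a tunnels list with public_url fields. The sample payload and hostname are placeholders.

```python
import json
import urllib.request

# Assumption: the ngrok agent's default local API address.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def https_urls(tunnels_response: dict) -> list[str]:
    """Return the HTTPS public URLs listed in a /api/tunnels response."""
    return [
        t["public_url"]
        for t in tunnels_response.get("tunnels", [])
        if t.get("public_url", "").startswith("https://")
    ]


def current_https_urls(api_url: str = NGROK_API, timeout: float = 3.0) -> list[str]:
    """Query the running ngrok agent for its active HTTPS tunnel URLs."""
    with urllib.request.urlopen(api_url, timeout=timeout) as resp:
        return https_urls(json.load(resp))


# Placeholder payload for illustration; real responses carry more fields.
sample = {"tunnels": [{"public_url": "https://example.ngrok-free.app", "proto": "https"}]}
print(https_urls(sample))
```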

Security and Privacy Considerations

While ngrok is a powerful tool for remote access, it also introduces some security considerations that you must address:

- Secure Your Connections: Always use HTTPS to access your Ollama web UI through ngrok. This ensures that your data is encrypted during transmission.

- Monitor Access: Keep an eye on the access logs provided by ngrok. If you notice any unfamiliar or suspicious activity, investigate and take appropriate action.

- Limit Exposure: Only keep the ngrok connection active while it is needed. Prolonged exposure can increase the risk of unauthorized access.

By adhering to these best practices, you can minimize risks and ensure that your use of Ollama and ngrok remains secure and private.
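One way to follow the "Limit Exposure" advice is to time-box the tunnel so it cannot stay open by accident. The sketch below is a generic helper, not an ngrok feature: it starts any command as a child process and stops it once a time limit passes. The function name and the 30-minute figure in the usage note are illustrative.

```python
import subprocess


def run_time_boxed(cmd: list[str], max_seconds: float) -> int:
    """Run cmd and stop it once max_seconds have elapsed. Returns its exit code."""
    proc = subprocess.Popen(cmd)
    try:
        # Returns early if the command exits on its own.
        return proc.wait(timeout=max_seconds)
    except subprocess.TimeoutExpired:
        proc.terminate()  # ask the process to shut down
        try:
            return proc.wait(timeout=5)
        except subprocess.TimeoutExpired:
            proc.kill()  # force-stop if it ignored the request
            return proc.wait()
```

For example, run_time_boxed(["ngrok", "http", "http://localhost:3000"], 30 * 60) would keep the tunnel open for at most 30 minutes, assuming ngrok is installed and authenticated as described above.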

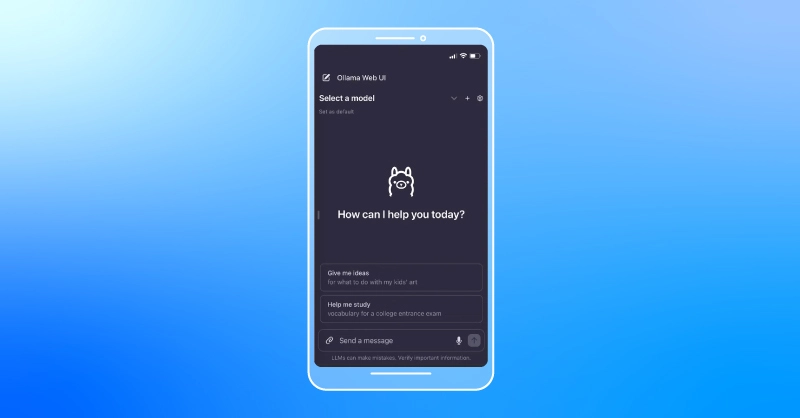

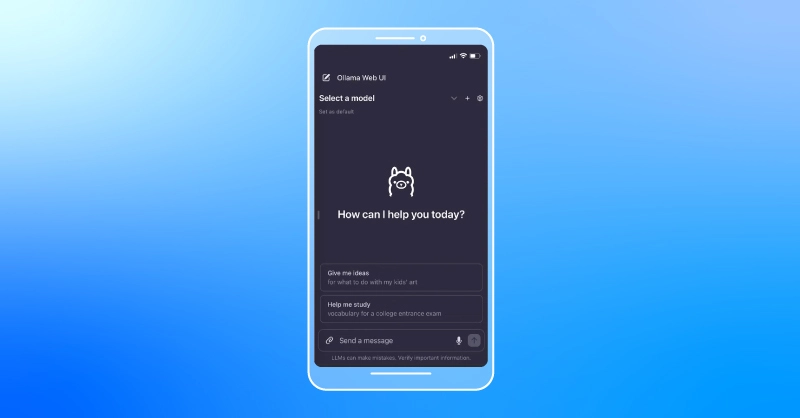

Access Your Local LLM on Mobile

The ultimate convenience comes when you can interact with your LLM setups directly from your mobile device. Following the ngrok setup outlined previously, accessing your Ollama instance on mobile is straightforward. Here’s how to do it:

- Access via ngrok URL: Once ngrok is running and exposing your Ollama instance, it will provide you with a URL. Simply enter this URL into the browser on your mobile device.

- Mobile Interface: The Ollama web UI is designed to be responsive, meaning it should adjust to fit your mobile screen. This accessibility ensures you can manage and interact with your LLMs just as easily on your phone as you can on a computer.

This capability not only increases the flexibility of where and how you can work with LLMs but also ensures you can demonstrate or share your projects with others more conveniently, whether in meetings, classrooms, or casual discussions.

Conclusion

Congratulations on beginning your journey with Large Language Models through Ollama! We encourage you to explore the ever-evolving capabilities of open-source projects like Ollama, Mistral, and Llama. These platforms not only push the boundaries of what's possible in generative AI but also make cutting-edge technology more accessible. As the state of the art continues to advance, it opens the door for more people to engage with and benefit from these powerful tools.

The ability to run these models on a local GPU-equipped PC is not just empowering—it's transformative. It gives you complete control over the tools, allowing for a deeper understanding and customization of the technology. As advancements in AI continue, models are becoming smaller and more efficient, promising a future where anyone can use high-level AI tools right from their own home.

At Exxact, we provide the essential hardware to make this future a reality today. Configure your very own deep learning workstation, server, or even a full rack to fuel your AI development. With our powerful, ready-to-deploy systems, you have everything you need to start training, developing, and deploying your own LLMs. Dive into the world of AI with Exxact, where we deliver the compute and storage to unleash your creativity.


Access Open Source LLMs Anywhere - Mobile LLMs with Ollama
