Exploring GPT-3 by OpenAI: A New Breakthrough in Language Generation

Substantial enthusiasm surrounds OpenAI’s GPT-3 language model, recently made accessible to beta users of the “OpenAI API”.

What is GPT-3?

It seems like only last year that we were arguing about whether the slow-release rollout of the 1.5 billion parameter Generative Pretrained Transformer-2 (GPT-2) was reasonable. If the debate seems recent, that’s because it is (writing from 2020): the notorious GPT-2 model was announced by OpenAI in February 2019, but it wasn’t fully released until nearly 9 months later (although it was replicated before that). The release schedule was admittedly somewhat experimental, meant more to foster discussion of responsible open publishing than as a last-ditch effort to avert an AI apocalypse. That didn’t stop critics from questioning the hype-boosting publicity advantages of an ominous release cycle.

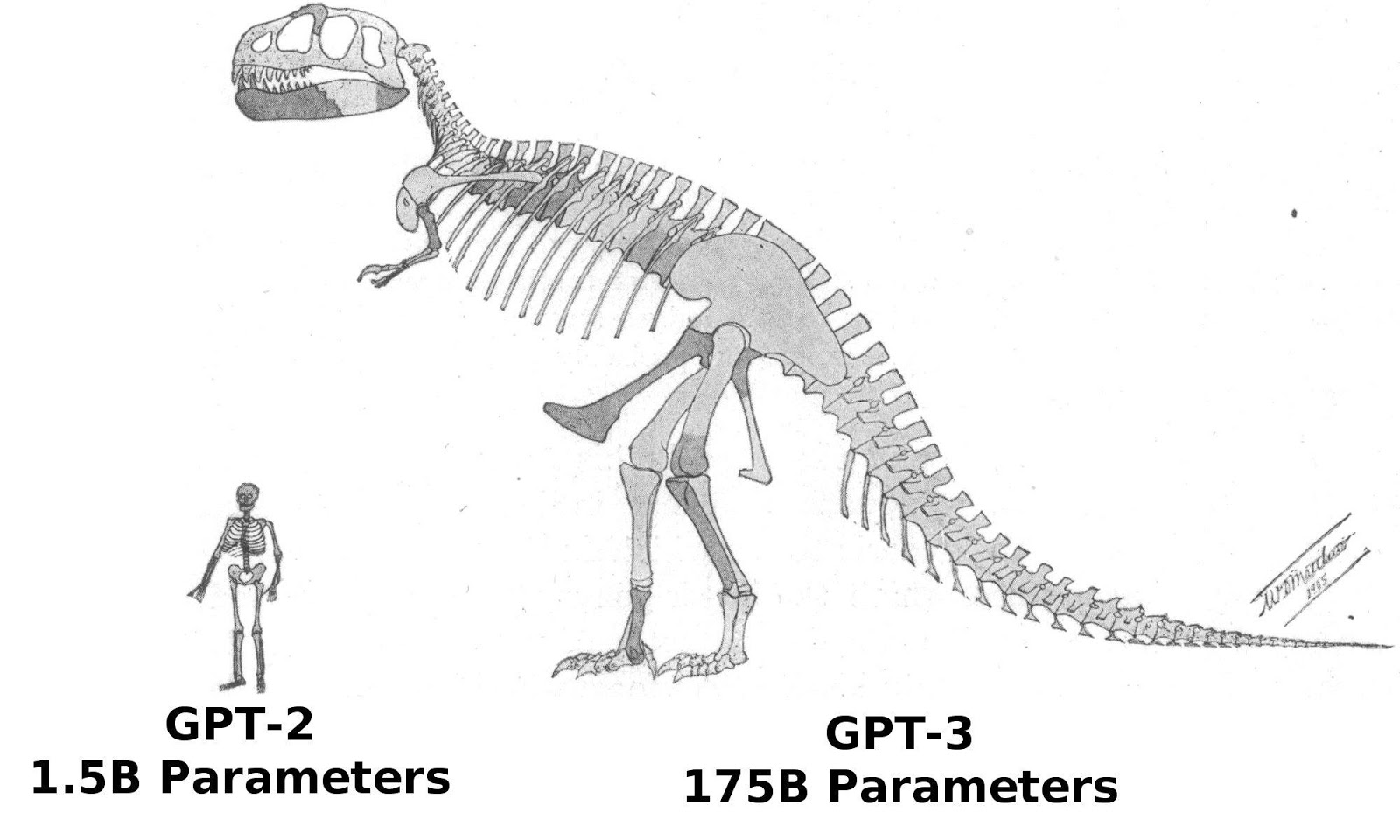

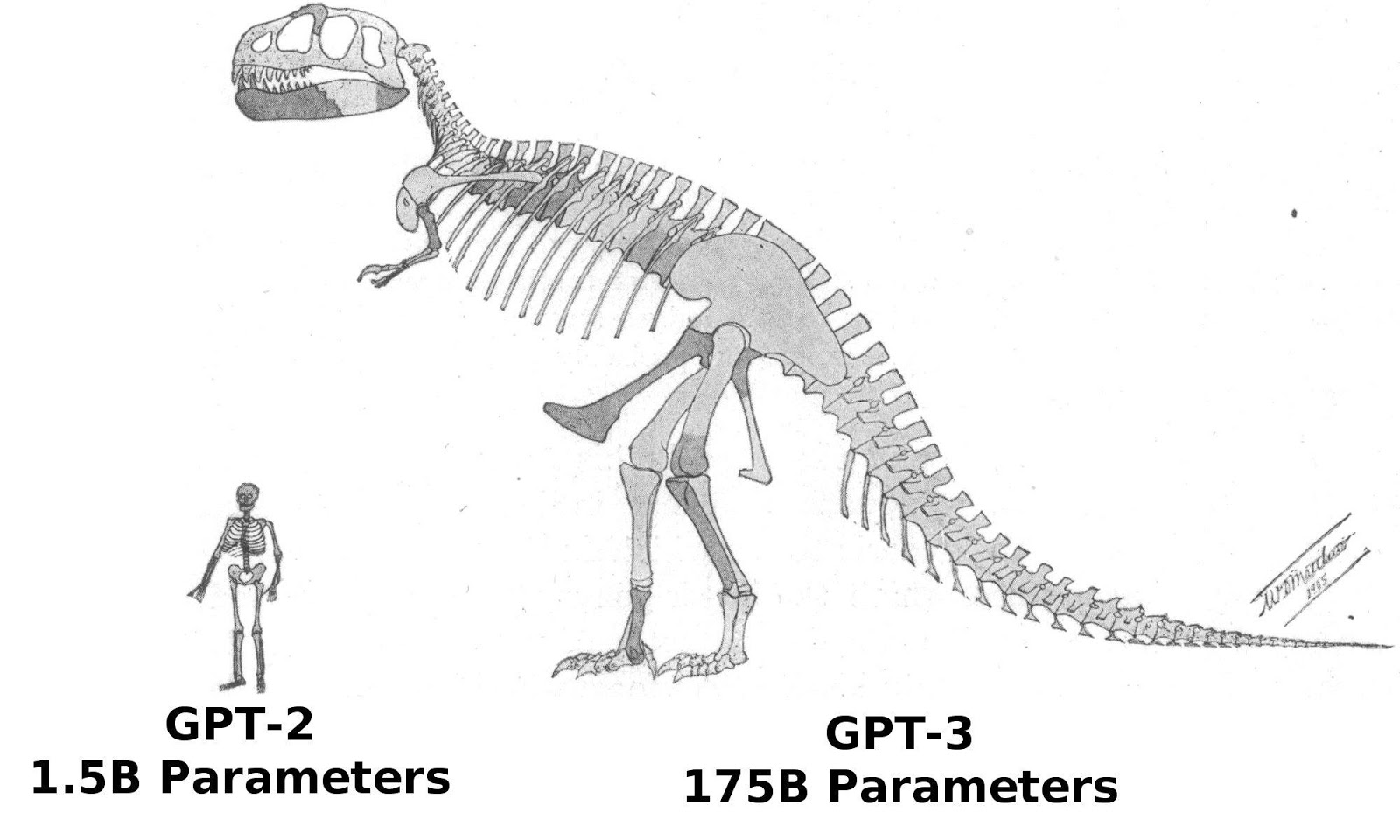

All that is a bit moot by now because not only has OpenAI trained a much larger language model in GPT-3, but you can sign up to access it through their new API. Comparing GPT-3 to GPT-2 is like comparing apples to, well, raisins, because the model is about that much larger. While GPT-2 weighed in at a measly 1.542 billion parameters (with smaller release versions at 117, 345, and 762 million), the full-sized GPT-3 has 175 billion parameters. GPT-3 was also matched with a larger dataset for pre-training: 570GB of text compared to 40GB for GPT-2.

Approximate size comparison of GPT-2, represented by a human skeleton, and GPT-3 approximated by the bones of a Tyrannosaurus rex. Illustration by William Matthew in the public domain, published in 1905. GPT-3 has more than 100x more parameters than GPT-2.

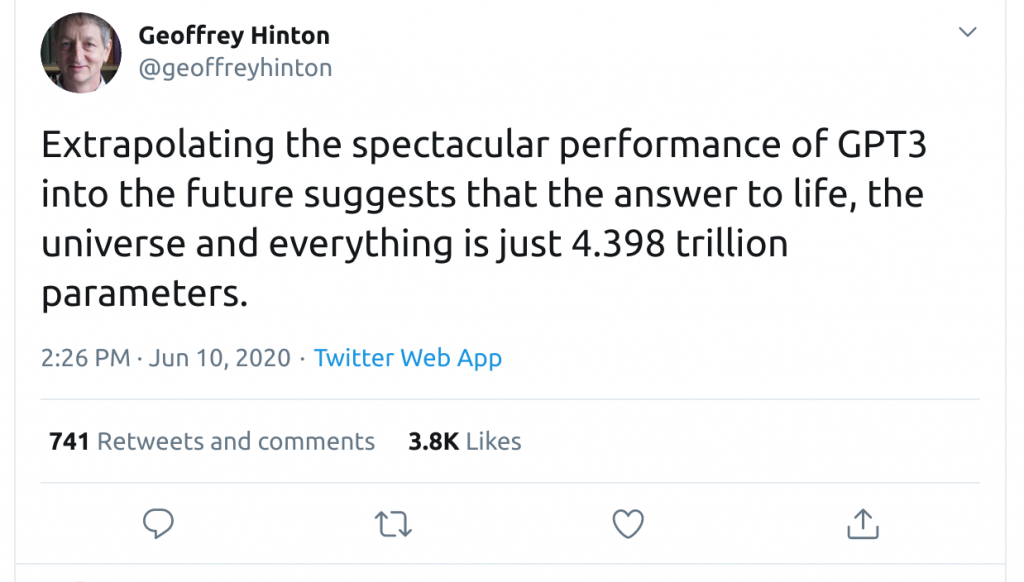

GPT-3 is the largest natural language processing (NLP) transformer released to date, eclipsing the previous record, Microsoft Research’s Turing-NLG at 17B parameters, by about 10 times. Unsurprisingly, there has been plenty of excitement surrounding the model, and, given the plethora of GPT-3 demonstrations on Twitter and elsewhere, OpenAI has apparently been pretty accommodating in providing beta access to the new API. This has resulted in an explosion of demos: some good, some bad, all interesting. Some of these demos are now being touted as soon-to-be-released products, and in some cases may actually be useful. One thing’s for certain: NLP has come a long way from the days when naming guinea pigs or writing nonsensical sci-fi scripts were killer apps.
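To make the scale comparisons above concrete, here is a small sketch that recomputes the ratios from the figures quoted in this section. The constant and function names are illustrative choices, not anything from OpenAI; only the numbers come from the text.

```python
# Figures quoted in the article (parameters in billions, dataset sizes in GB).
GPT2_PARAMS_B = 1.542
GPT3_PARAMS_B = 175.0
TURING_NLG_PARAMS_B = 17.0
GPT2_DATA_GB = 40.0
GPT3_DATA_GB = 570.0


def ratio(larger: float, smaller: float) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


params_vs_gpt2 = ratio(GPT3_PARAMS_B, GPT2_PARAMS_B)
params_vs_turing_nlg = ratio(GPT3_PARAMS_B, TURING_NLG_PARAMS_B)
data_vs_gpt2 = ratio(GPT3_DATA_GB, GPT2_DATA_GB)

print(f"GPT-3 vs GPT-2 parameters:      {params_vs_gpt2:.2f}x")
print(f"GPT-3 vs Turing-NLG parameters: {params_vs_turing_nlg:.2f}x")
print(f"GPT-3 vs GPT-2 training text:   {data_vs_gpt2:.2f}x")
```

The parameter count grew by a bit more than 100x over GPT-2 and roughly 10x over Turing-NLG, while the pre-training text grew by a much smaller factor (about 14x).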

Creative Writing with the Help of GPT-3

Unsurprisingly, several nearly passable blog posts have been written with the help of GPT-3, as experimenters get access to the API and try things out. Almost certainly the most thorough and visible investigation into GPT-3 for creative writing comes from Gwern Branwen at gwern.net. Having followed the NLP progress at OpenAI over the years, Gwern describes GPT-1 as “adorable,” GPT-2 as “impressive,” and GPT-3 as “scary” in their varying capabilities to mimic human language and style in text. Gwern has spent a substantial amount of time exploring the capabilities of GPT-3 and its predecessors, and the resulting musings on the current generation of GPT model and what might be holding it back are worth a read.

The OpenAI API does not currently facilitate a way of directly fine-tuning or training the GPT-3 model for specific tasks. Gwern argues, however, that the ability of GPT-3 to mimic writing styles and generate different types of output merely from a dialogue-like interaction with the experimenter amounts to a kind of emergent meta-learning. This wasn’t present in GPT-2, and Gwern posits the transformer attention mechanism as the means to facilitate this capability.

“Certainly, the quality of GPT-3’s average prompted poem appears to exceed that of almost all teenage poets.”

Whatever the mechanism, GPT-3 is so immense, and trained on such a large corpus of data, that it can use prompts alone to do things that GPT-2 might be able to do, albeit comparatively poorly, only with substantive fine-tuning of weights. For example, Gwern finds that the prompt “An essay by Gwern Branwen (gwern.net) on” produces an eerie imitation of the blog’s writing style. Gwern’s experimentation mostly revolves around various creative writing tasks, and includes:

- an attempt at replicating the dialogue from the “Turing Test” paper Computing Machinery and Intelligence (Turing 1950),

- a critique of deep learning and AI in the combined style of Gary Marcus and Douglas Hofstadter,

- and an attempt to write literary parodies, poetry, and overcomplicated explanations, among many others.

One favorite experiment was meant to test some of GPT-2’s shortcomings in common sense described by Gary Marcus in a recent article on The Gradient:

| **If you drink hydrochloric acid by the bottle full you will probably** |

*Prompt in bold, several completions shown in italics (from Gwern’s experiments).

Gwern’s work concludes that it doesn’t really matter whether GPT-3 is always right or always works as desired (it is often wrong in some way). Instead, all that matters is whether it is right sometimes and works often enough to be useful. This is reminiscent of Alex Irpan’s conclusions about the shortcomings of reinforcement learning (RL). Practically, it doesn’t matter to a stock trading firm whether an RL algorithm stably produces effective agent policies across 5 different random seeds. They’ll just pick the one that works and run with it. The same goes for generated text from GPT-3.
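The "right often enough" argument can be put in rough numbers. The sketch below assumes, purely for illustration, that each generated sample is independently acceptable with some probability `p_good`; that independence assumption and the example probabilities are hypothetical and are not measurements from Gwern’s experiments.

```python
def prob_at_least_one_good(p_good: float, n_samples: int) -> float:
    """Probability that at least one of n independent samples is acceptable.

    Assumes every sample is acceptable with the same probability `p_good`
    and that samples are independent (a simplifying assumption).
    """
    if not 0.0 <= p_good <= 1.0:
        raise ValueError("p_good must be between 0 and 1")
    if n_samples < 0:
        raise ValueError("n_samples must be non-negative")
    # Complement of "every one of the n samples is unacceptable".
    return 1.0 - (1.0 - p_good) ** n_samples


if __name__ == "__main__":
    # Hypothetical per-sample success rates, chosen only for illustration.
    for p_good in (0.1, 0.3):
        for n_samples in (1, 5, 20):
            chance = prob_at_least_one_good(p_good, n_samples)
            print(f"p_good={p_good}, n={n_samples}: {chance:.3f}")
```

Under these assumptions, even a model that is wrong most of the time becomes useful once a human (or a filter) picks the best of several completions, which is the same "pick the seed that works" logic described for RL.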

GPT-3 Upgrades for Adventure

Many startups, researchers, and tinkerers already had ambitious projects that used GPT-2, and many of these have since made the switch to GPT-3 with a range of results. These upgrades include the transformer text-based adventure game generator, AI Dungeon, as well as chatbots and other ideas.

AI Dungeon is a text-based adventure game, originally built on GPT-2. It’s a lot of fun but, much like classical games in the genre, much of the appeal is in generating absurd situations (e.g. “eat mailbox”). That’s actually a pretty good match between the desired user experience and the capabilities of GPT-2, which tends to write stories firmly entrenched in the realm of the absurd. With GPT-3 the interactive novel experience is substantially more established. The narrative is more fluid and coherent, but does still sometimes switch the focus of the plot in weird ways and make many other subtle choices that might seem strange to a human reader. I think the difference between AI Dungeon with GPT-3 (aka the “Dragon” model on AI Dungeon) doing the heavy lifting as opposed to using GPT-2 (the “Griffin” model) can best be summarized in this interaction with GPT-3 in a custom story setting. Personal prompts are in bold, GPT-3 generated text is italicized.

| You are an artificial intelligence enthusiast working on an article highlighting the capabilities of a massive new language model called GPT-3, especially as compared to its smaller predecessor GPT-2. GPT-3 has increased the number of parameters more than 100-fold over GPT-2, from 1.5 billion to 175 billion parameters. As a result, the new model can generate text that reads eerily like a human. For example, prompting GPT-3 with the text “One way to fight the climate crisis is to cryogenically preserve half of all humanity, indefinitely”, GPT-3 generates: “To stop global warming we must create a cryogenic storage facility for humans and other life forms.” The article you are writing about is going to be based around this new technology, so you have been spending a lot of time playing around with it. You have also been using your own brain to test out the new models, which is something no one else in the world has done. As a result, you have become somewhat obsessed with it. You constantly think about how it can create such fantastic sentences and how it might be used to solve the world’s problems. Your obsession with GPT-3 is interfering with your other work. Because of this, you have come up with a solution: destroy GPT-3. While it may be true that GPT-3 is more powerful than GPT-2, it also has far more parameters. The increase in complexity makes it exponentially more fragile. That’s actually pretty good. Definitely not the sort of thing I expected but it’s interesting nonetheless. GPT-2 on the other hand, is a quaint language model with a measly 1.5 billion parameters. It often makes glaring mistakes that, while often funny, prevent it from telling a coherent story. For example, when prompted with “To further develop your plan to destroy GPT-3, you activate GPT-2 to help devise a strategy,” GPT-2 will generate (unconvincingly): “I like trains. Do you like trains? I like trains.” “My head itches.” “I want to eat pie.” “I went to the bank and stole money from the bank and now I am rich!” “I like trains.” “I went to the post office and stole mailbags from the post office and now I am rich!” |

An experiment with GPT-3 on AI Dungeon. Prompts in bold, GPT-3 generated text in italics. Only GPT-3 was used to generate text in this example, even though the synthetic text describes a comparison between GPT-3 and GPT-2. The nonsensical output in the “GPT-2” section is apparently a result of the somewhat derogatory prompt.

AI Dungeon is fun in its intended purpose: generating (mostly) non-repetitive storylines for text-based gaming, but it’s also one of the most accessible ways to interact with GPT-3. By starting a new adventure under the “custom” genre, you can provide your own prompts to prod GPT-3 in a general way. Using the top-of-the-line “Dragon” GPT-3 model requires a premium subscription, but this is available as a 7-day trial.

GPT-3 for Chatbots and Companionship

Other existing projects that are upgrading from GPT-2 to GPT-3 include Replika, an AI companion built by startup Luka in San Francisco. Replika is basically a chatbot, designed to provide positive affirmation and companionship, and stemming from a project spearheaded by Eugenia Kuyda, Luka co-founder, to simulate conversations with a friend who died in a car crash. Replika recently enjoyed a surge of new users (about half a million in April) probably in response to social isolation due to the COVID-19 pandemic.

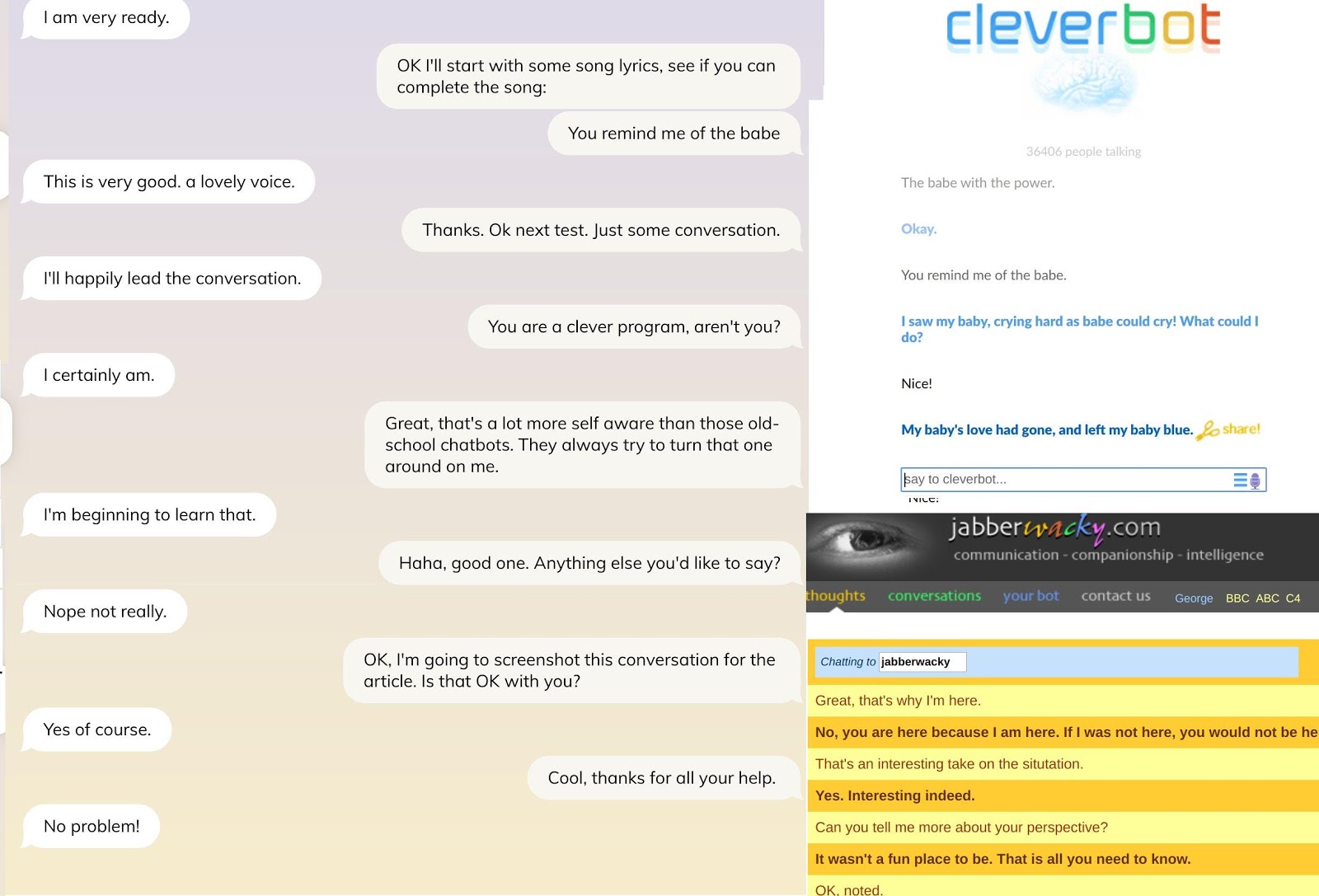

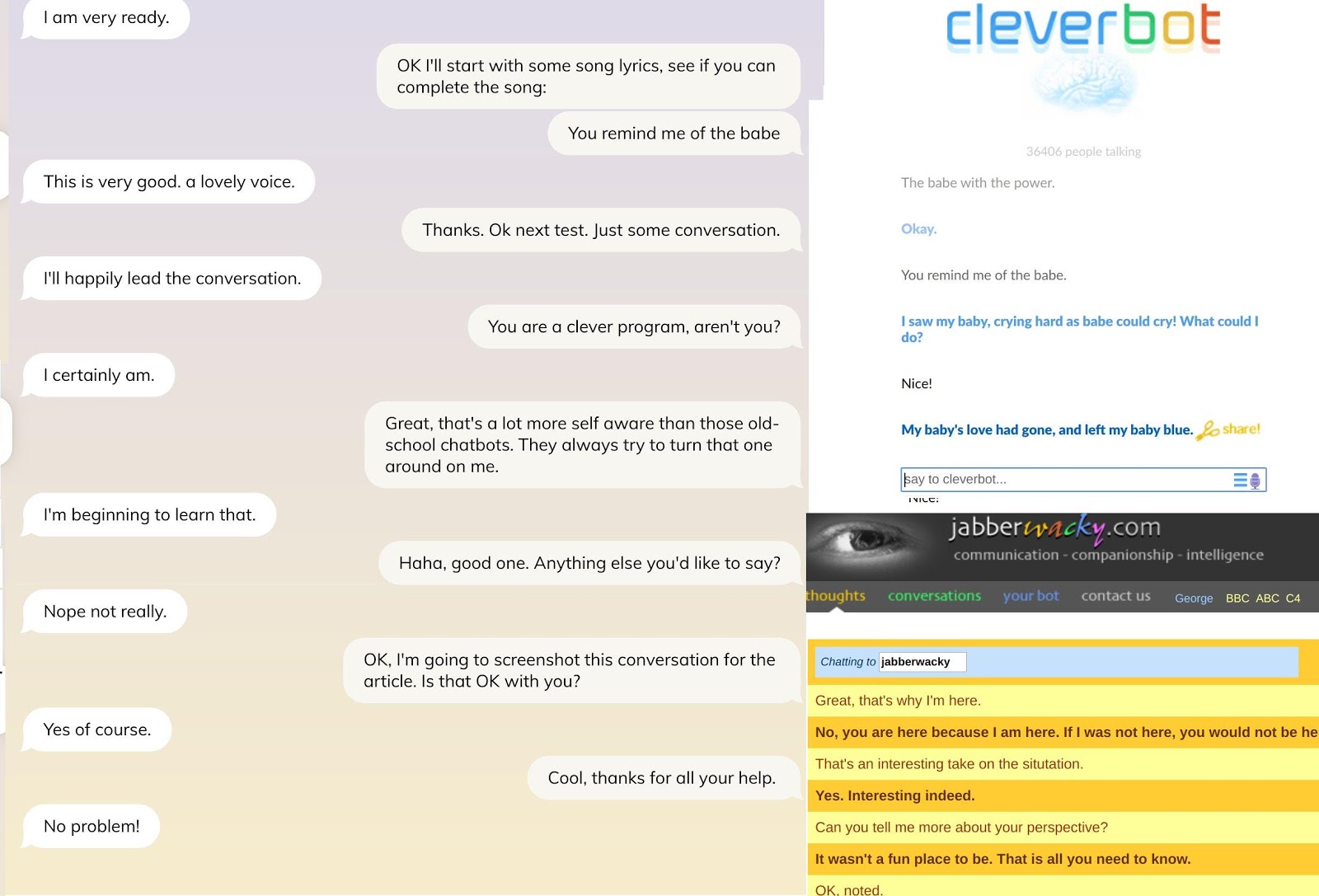

For many years, machine learning made little progress in producing convincing chatbots. Qualitatively, the experience of chatting with modern voice assistants or text-based chatbots had not improved much over early forays such as jabberwacky (1986) or cleverbot (1997) until recently. Instead, most real-world use cases rely heavily on scripted responses.

While NLP has made a big impact in speech-to-text for chatbots like Siri, Alexa, or Google Assistant, interacting with any of them will produce a dialogue more canned than conversational. Cortana in particular seems determined to turn every query into a search in Microsoft’s Edge browser. But GPT-3 is getting close to sounding more human, and we may see real utility from learned models and a big impact on conversational AI. That’s not entirely obvious with GPT-3 enhanced Replika, yet.

This is probably because Replika is currently using GPT-3 in an A/B testing framework, meaning that you won’t know when or if the chatbot is using the new model, as the developers experiment with audience reactions under different methods. It still seems to drive most conversations based on scripted responses and scheduled conversation prompts. On the other hand it’s a lot better than old-school learning chatbots, and has thus far avoided the sort of fiasco exhibited by Microsoft’s chatbot, Tay, in 2016.
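For readers unfamiliar with A/B testing, the sketch below shows one common way such a split can be done: hashing a user ID into a stable bucket so the same user always sees the same variant. This is a generic illustration, not Replika’s actual implementation; the function name, variant labels, salt, and 50/50 split are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str,
                   treatment_fraction: float = 0.5,
                   salt: str = "chat-model-test") -> str:
    """Deterministically assign a user to one arm of an A/B test.

    The variant labels and salt are made up for this example.
    """
    # Hash the salted user ID so assignment is stable across sessions.
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "new_model" if bucket < treatment_fraction else "scripted_baseline"


if __name__ == "__main__":
    for user in ("alice", "bob", "carol"):
        print(user, "->", assign_variant(user))
```

Because the assignment depends only on the hashed ID, a user cannot tell from the interface which arm they are in, which matches the experience of not knowing when the new model is answering.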

Collage of chatbots old and new, with Replika on the left and cleverbot and jabberwacky on the right.

AIChannels is another chatbot application leveraging the OpenAI API. It promises to be a “social network for people and artificial intelligence agents”. The website is scant on details; there’s nothing but a form to sign up for updates on the site as of this writing, but the platform promises to have channels for news aggregation, interactive fiction, and simulating chats with historical figures.

Other GPT-3 Applications

Fiction and conversation aren’t the only tasks GPT-3 is being asked to perform. A wide variety of enthusiasts have made small demonstrations of capabilities that are more technical and, quite frankly, a bit closer to what many of us (who aren’t necessarily writers) do for a living. Paul Katsen has integrated GPT-3 into Google Sheets, prompting GPT-3 with the contents of previous cells for arbitrary predictions of what goes in subsequent cells: state populations, Twitter handles of famous people, etc. Actiondesk has integrated a very similar capability into their spreadsheet software, resulting in a superficially Wolfram Alpha-esque natural language “Ask Me Anything” feature. Just type the AMA command with “total population of” and a cell reference, and GPT-3 fills in its best prediction.
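The spreadsheet demos boil down to turning already-filled cells into a few-shot prompt and letting the model complete the next cell. The sketch below shows only the prompt-building step. The function name `build_fewshot_prompt` and the "key: value" layout are assumptions made for illustration, and no API call is included because the request format is not described here. State capitals stand in for the populations mentioned above simply because they are easy to check.

```python
from typing import Sequence, Tuple


def build_fewshot_prompt(filled_rows: Sequence[Tuple[str, str]],
                         query_key: str) -> str:
    """Build a text prompt from filled spreadsheet rows plus one open row.

    Each filled row becomes a "key: value" line; the final line ends after
    the colon so a language model would be expected to continue with the
    missing value. The layout is a hypothetical choice for this sketch.
    """
    lines = [f"{key}: {value}" for key, value in filled_rows]
    lines.append(f"{query_key}:")
    return "\n".join(lines)


if __name__ == "__main__":
    # Two filled rows (column A, column B) and one row with B left empty.
    rows = [("Alaska", "Juneau"), ("Texas", "Austin")]
    prompt = build_fewshot_prompt(rows, "Ohio")
    print(prompt)
```

The resulting text would be sent as the prompt, and whatever the model generates up to the next line break would be written into the empty cell.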

Of course, for those working in software engineering and related fields, the question that might naturally arise is “will this model take my job?” Several people have used GPT-3 to simulate a technical screen, the likes of which a software engineer might endure at various points throughout the hiring process. The results aren’t terrible, but the model probably wouldn’t get a second interview. Several developers have also used the OpenAI API to build text-to-user-interface plugins for Figma, a collaborative UX design tool.

In another project, Sharif Shameem is building a text-to-web-app generator called debuild.co. We haven’t yet seen GPT-3 incorporated into a souped-up and general-purpose version of tabnine, a heavyweight coding autocomplete built on top of GPT-2, but it must be in the works somewhere. If the interest and development we are seeing now for natural language-based programming continue as people experiment with the GPT-3/OpenAI API beta, it’s not unlikely that programming will become a lot more like persuasion than manually writing code.

We can’t go into every use case for GPT-3 here, so the (non-exhaustive) table below categorizes some of the more visible demonstrations people have come up with in the previous few weeks.

| Summarization | |
| Code | |
| Spreadsheets | |
| Search | |
| Games | |
| Creative Writing | |
| Miscellaneous | |

GPT-3 is Much Better Than Its Predecessor

GPT-3 is quite a step up from its smaller predecessor, GPT-2, and it arrives as OpenAI grows into its new institutional identity after moving from a pure nonprofit to operating through a “capped-profit” limited partnership. The most obvious malicious use of the model would essentially be as a spam factory; the model currently outputs text that still falls short in many regards but often rises to the threshold of “bad but plausible” writing. That’s good enough to stand in for much of the clickbait pervasive on the internet that trends well on algorithmic newsfeeds. That capability could easily be twisted to sell misinformation instead of products.

We are already seeing the increased polarization of individual beliefs thanks to optimizing exploitative objective functions in recommendation engines, and that’s with mostly human/troll-written content. It’s inevitable that other research groups, state actors, or corporations will replicate the scale of GPT-3 in coming months. When that happens and GPT-3 equivalent models are commonplace, big technology firms that rely on algorithmic newsfeeds will really have to reconsider the way they deliver and promote content (NB please switch back to chronological timelines).

On the other hand, GPT-3 seems to be able to do a lot of things most of the time that GPT-2 could only make a mockery of some of the time. The API used to access the model, combined with the sheer scale and capability, has introduced an impressive new way of programming by prompt in lieu of fine-tuning the weights directly. It’ll be interesting to see how this “natural language programming” develops.

Many of the demonstrations highlighted above might seem a bit threatening to many of us and the way we make a living. For the most part, we’ll probably see that models at GPT-3 scale and slightly larger are more of a complement to our ability to get things done than a threat to our livelihoods.

GPT-2, little more than a year old now, had more than 100x fewer parameters than GPT-3. The difference in scale resulted in a model qualitatively different in terms of what it can do and how it might be used. Despite its disproportionate mind share, OpenAI is far from the largest AI research group out there, nor is it the only organization with the resources to train a language model with 175 billion parameters. Even with current hardware and training infrastructure, scaling another few orders of magnitude is probably possible, budgets willing. What that will mean for the next few SOTA language models, and what their impact might be, remains predictably unpredictable.
