How to Start Using Natural Language Processing With PyTorch

Natural language processing (NLP) continues to grow in popularity, and in necessity, as artificial intelligence and deep learning programs grow and thrive. PyTorch is a strong choice for implementing these programs.

In this guide, we address some of the obvious questions that arise when you first dive into natural language processing. We also engage with some deeper questions and lay out the steps to get started on your own NLP programs.

Interested in a deep learning workstation that can handle NLP training?

Learn more about Exxact AI workstations starting around $5,500

Can PyTorch Be Used For NLP?

First and foremost, NLP is an applied science. It is a branch of engineering that blends artificial intelligence, computational linguistics, and computer science in order to "understand" natural language, i.e., spoken and written language.

Second, NLP is not the same thing as machine learning or deep learning. Machine learning and deep learning are techniques that NLP systems often rely on. A model has to be trained before it can process natural language, and other components then act on what the model extracts from its input.

It is convenient to call some AI programs "NLP programs," but that is not strictly accurate. A properly trained model can make sense of language. Even so, understanding language involves a separate set of systems and processes built around that model.

This is where PyTorch comes in handy. PyTorch is an open-source deep learning library with a Python interface. It ships with pre-written building blocks, implemented as classes in the torch.nn module, that cover the layers most NLP models need, such as word embeddings and recurrent networks. Using them makes the whole process quicker and easier.

With these PyTorch classes at the ready, plus the wider Python ecosystem, PyTorch is one of the strongest machine learning frameworks for natural language processing.

How Do I Start Learning Natural Language Processing?

To get started using NLP with PyTorch, you will need to be familiar with Python for coding.

Once you are comfortable with Python, you will find plenty of frameworks that can be used for deep learning projects. One reason PyTorch works well for natural language processing is its tensors.

Simply put, a tensor is a multi-dimensional array. PyTorch tensors can be moved to a GPU so that computations on them run there, which can significantly speed up training and inference for NLP models. Faster training means you can iterate on your model sooner, whatever outcome you want from it.
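As a minimal sketch of the GPU point above, the snippet below creates a small tensor of made-up token IDs. It moves the tensor to a GPU when PyTorch detects one and falls back to the CPU otherwise. The shapes and values are arbitrary placeholders.

```python
import torch

# Pick the GPU if PyTorch can see one; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A toy batch: 4 sequences of 5 token IDs each (values are arbitrary).
token_ids = torch.randint(0, 100, (4, 5))

# .to(device) returns a copy on the chosen device; later operations
# on that tensor run there.
token_ids = token_ids.to(device)
print(token_ids.device)
```

Models are moved the same way, with model.to(device). A model's parameters and its input tensors must live on the same device.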

As mentioned above, PyTorch provides several classes that work well for NLP. We will break down six of them and their use cases to help you choose.

1. torch.nn.RNN

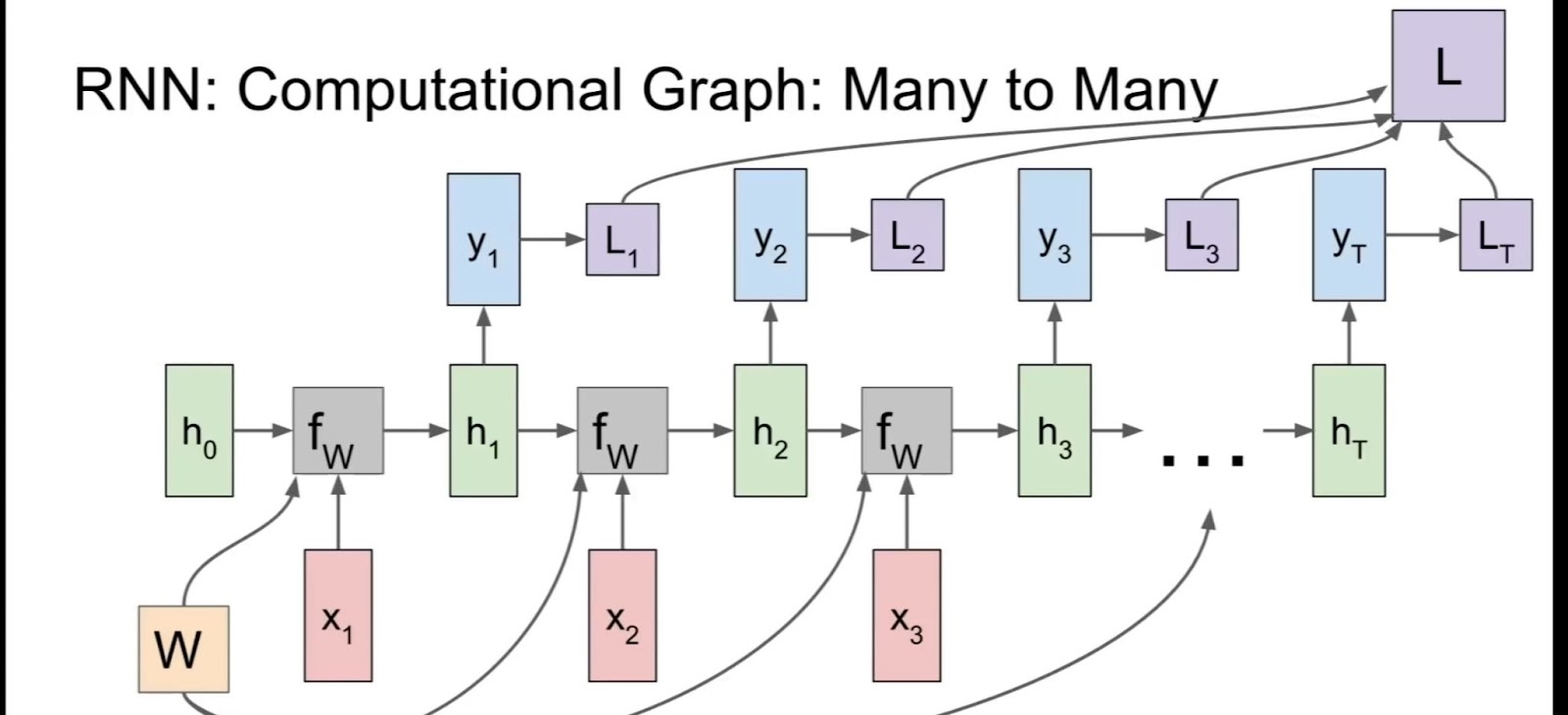

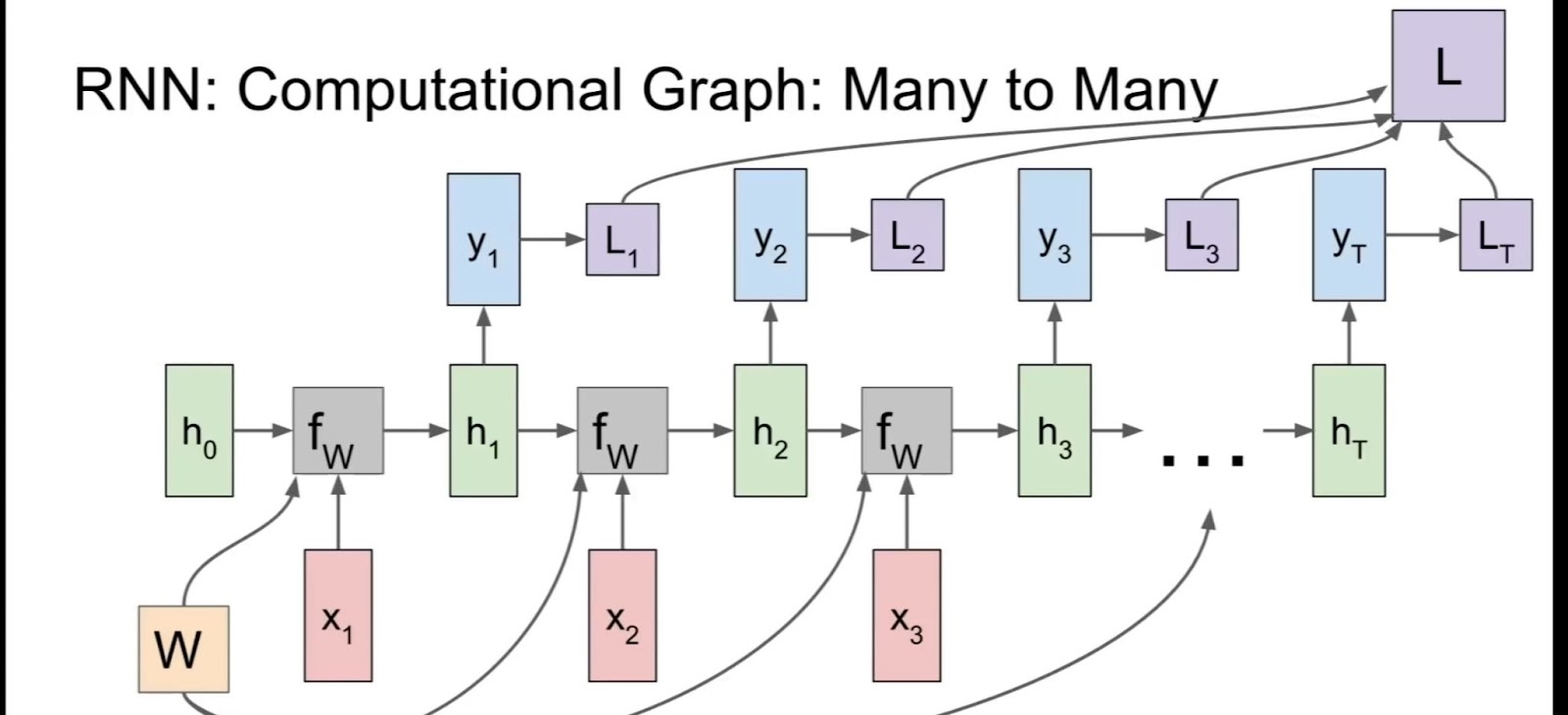

The first three classes we will look at are full recurrent layers. Each one can stack multiple layers (the num_layers argument) and can be made bidirectional (bidirectional=True). A bidirectional network reads the sequence twice, once from start to end and once from end to start. As a result, the output at each position reflects both the words before it and the words after it. This context in both directions helps these models process natural language input and pick up on subtler patterns.

torch.nn.RNN many to many diagram, Image Source

torch.nn.RNN implements a plain (Elman) Recurrent Neural Network. It is the simplest recurrent layer PyTorch offers and a natural starting point for natural language processing.
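The snippet below is a minimal sketch of torch.nn.RNN with both options described above enabled: two stacked layers and bidirectional=True. The sizes are illustrative assumptions, and random numbers stand in for real word embeddings.

```python
import torch
import torch.nn as nn

# Hypothetical sizes chosen for illustration.
embedding_dim, hidden_size, num_layers = 8, 16, 2
batch_size, seq_len = 3, 5

rnn = nn.RNN(
    input_size=embedding_dim,
    hidden_size=hidden_size,
    num_layers=num_layers,   # stack two RNN layers
    bidirectional=True,      # one pass left-to-right, one right-to-left
    batch_first=True,        # inputs are (batch, seq, feature)
)

x = torch.randn(batch_size, seq_len, embedding_dim)  # stand-in for embedded tokens
output, h_n = rnn(x)

# output: the top layer's features at every time step, with the forward and
# backward directions concatenated -> (batch, seq, 2 * hidden_size).
print(output.shape)  # torch.Size([3, 5, 32])
# h_n: the final hidden state per layer and direction
# -> (num_layers * 2, batch, hidden_size); batch_first does not affect it.
print(h_n.shape)     # torch.Size([4, 3, 16])
```

The forward and backward passes are concatenated in the output. Anything you stack on top of a bidirectional layer, such as a linear classifier, therefore needs to expect 2 * hidden_size input features.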

2. torch.nn.LSTM

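torch.nn.LSTM is another full recurrent layer, with the same stacking and bidirectional options as torch.nn.RNN. The difference is that it uses Long Short-Term Memory (LSTM) units. Each unit keeps a separate cell state and uses gates to decide what to write to it, what to keep in it, and what to read from it. This lets the network carry information across much longer stretches of a sequence than a plain RNN, which tends to lose track of early inputs.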

For natural language processing with PyTorch, torch.nn.LSTM is one of the most commonly used classes. LSTMs are not limited to written or typed text; they have also been widely used for speech recognition and other sequential audio tasks.

Many NLP tasks depend on long-range context, such as a pronoun that refers to a noun several words earlier. Because LSTMs handle these dependencies well, they became a standard component of NLP models.
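Here is a short sketch of the LSTM's extra state, using illustrative sizes. Compared with torch.nn.RNN, torch.nn.LSTM returns a (hidden, cell) tuple alongside its per-step output.

```python
import torch
import torch.nn as nn

lstm = nn.LSTM(input_size=8, hidden_size=16, batch_first=True)
x = torch.randn(2, 10, 8)  # 2 sequences, 10 time steps, 8 features per step

# Unlike nn.RNN, nn.LSTM returns both the final hidden state h_n and the
# final cell state c_n, packed together as a tuple.
output, (h_n, c_n) = lstm(x)
print(output.shape)          # torch.Size([2, 10, 16])
print(h_n.shape, c_n.shape)  # torch.Size([1, 2, 16]) torch.Size([1, 2, 16])

# For a single-layer, unidirectional LSTM, the last time step of output
# is the same as the final hidden state.
print(torch.allclose(output[:, -1], h_n[-1]))  # True
```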

3. torch.nn.GRU

torch.nn.GRU uses Gated Recurrent Units (GRUs), a later gated design that simplifies the LSTM. A GRU has two gates, a reset gate and an update gate, and no separate cell state. At each time step, the gates decide how much of the previous hidden state to keep and how much to replace with new information from the current input. This lets the network learn to discard information that is no longer relevant.

torch.nn.GRU is another good way to get started with NLP in PyTorch. A GRU has fewer parameters than an LSTM of the same size, so it is often faster to train, and on many tasks it reaches comparable results. Neither is better across the board, so it is worth trying both on your own data.
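To make the "fewer parameters" point concrete, this sketch counts trainable parameters for an LSTM and a GRU of the same, arbitrarily chosen size. The helper count_params is a hypothetical name, not part of PyTorch.

```python
import torch.nn as nn

def count_params(module: nn.Module) -> int:
    """Hypothetical helper: total number of trainable parameters in a module."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)

# Same illustrative sizes for both layers.
lstm = nn.LSTM(input_size=10, hidden_size=20)
gru = nn.GRU(input_size=10, hidden_size=20)

# An LSTM layer learns 4 sets of gate weights (input, forget, cell, output);
# a GRU layer learns 3 (reset, update, new).
print(count_params(lstm))  # 2560
print(count_params(gru))   # 1920
```

The ratio is exactly 3:4 here, because each gate set has the same shape: an input-to-hidden matrix, a hidden-to-hidden matrix, and two bias vectors.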

4. torch.nn.RNNCell

The next three classes are the cell-level counterparts of the layers above. Each one computes a single time step for a batch of inputs instead of processing a whole sequence in one call, so you write the loop over time steps yourself. Cells are single-layer and unidirectional; to stack them or run them in reverse, you arrange the cells yourself.

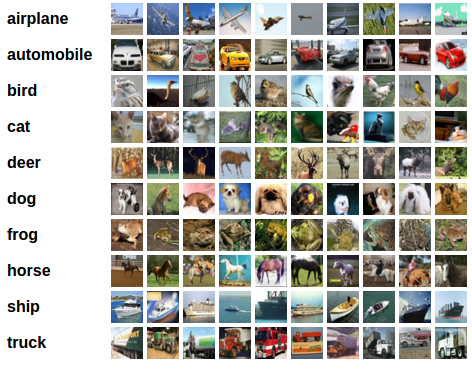

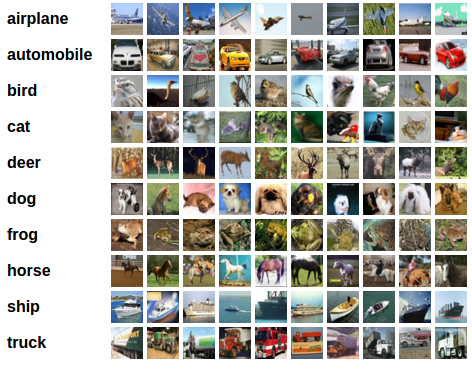

Output result of NLP with PyTorch using words assigned to corresponding images, Image Source

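Stepping through a sequence one call per time step is usually slower than calling the full layer, which can use optimized implementations such as cuDNN on the GPU. It also does not make the model more accurate: torch.nn.RNNCell computes exactly the same function as one step of torch.nn.RNN. What you gain is control. You can inspect or modify the hidden state between steps, or feed a step's output back in as the next input. A cell only ever sees the current input and its previous hidden state, so it learns from past states only. For context from future states, you would run a second pass over the reversed sequence.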
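The following sketch checks the claim that a cell computes the same function as its full layer. It uses arbitrary sizes, copies the weights of a torch.nn.RNN into a torch.nn.RNNCell, runs the cell in a hand-written loop, and compares the results.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_size, hidden_size = 4, 6
batch_size, seq_len = 2, 5

rnn = nn.RNN(input_size, hidden_size)        # processes the whole sequence
cell = nn.RNNCell(input_size, hidden_size)   # processes one time step

# Copy the layer's weights into the cell so both compute the same function.
with torch.no_grad():
    cell.weight_ih.copy_(rnn.weight_ih_l0)
    cell.weight_hh.copy_(rnn.weight_hh_l0)
    cell.bias_ih.copy_(rnn.bias_ih_l0)
    cell.bias_hh.copy_(rnn.bias_hh_l0)

x = torch.randn(seq_len, batch_size, input_size)  # (seq, batch, feature)

# Full layer: one call; the initial hidden state defaults to zeros.
layer_out, _ = rnn(x)

# Cell: we write the loop over time ourselves, starting from zeros too.
h = torch.zeros(batch_size, hidden_size)
steps = []
for t in range(seq_len):
    h = cell(x[t], h)   # new hidden state depends on x[t] and the previous h only
    steps.append(h)
cell_out = torch.stack(steps)  # (seq, batch, hidden)

print(torch.allclose(layer_out, cell_out, atol=1e-6))  # True
```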

5. torch.nn.LSTMCell

torch.nn.LSTMCell is the single-step version of torch.nn.LSTM. It takes the current input plus the previous hidden and cell states and returns the updated pair. As with RNNCell, the step-by-step loop is typically slower than the full layer, but it gives you the same flexibility, and the model's accuracy is the same.
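A minimal sketch of that loop, with illustrative sizes and random stand-in inputs, shows the (hidden, cell) pair being threaded through each step.

```python
import torch
import torch.nn as nn

input_size, hidden_size, batch_size, seq_len = 4, 6, 2, 5
cell = nn.LSTMCell(input_size, hidden_size)

x = torch.randn(seq_len, batch_size, input_size)  # (seq, batch, feature)
h = torch.zeros(batch_size, hidden_size)  # hidden state
c = torch.zeros(batch_size, hidden_size)  # cell state

for t in range(seq_len):
    # LSTMCell takes and returns the (hidden, cell) pair for a single step.
    h, c = cell(x[t], (h, c))

print(h.shape, c.shape)  # torch.Size([2, 6]) torch.Size([2, 6])
```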

Each of these cell-level classes differs slightly from its full-layer counterpart, but going into those differences in detail is beyond the scope of this guide.

6. torch.nn.GRUCell

One of the most interesting classes for natural language processing with PyTorch is torch.nn.GRUCell. It keeps the GRU's reset and update gates, so it can still learn to discard information that is no longer relevant, while giving you step-by-step control. Like the other cells, it sees only past states.

It is a popular choice for those starting out with step-by-step models. It has fewer parameters than an LSTMCell of the same size while still being gated.

The main trade-off is the extra time and effort of writing the loop over time steps yourself and making sure the model trains properly.
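A cell-level class is especially useful when each step's input depends on the previous step's output, as in text generation. The sketch below assumes a hypothetical tiny vocabulary and an untrained model, so the generated token IDs are meaningless. The point is the shape of the loop, which feeds each greedy prediction back into a torch.nn.GRUCell. The names embedding, to_vocab, and greedy_generate are illustrative, not a PyTorch API.

```python
import torch
import torch.nn as nn

# Hypothetical sizes; the model is untrained, so outputs are arbitrary IDs.
vocab_size, embedding_dim, hidden_size = 20, 8, 16
start_token, max_len = 0, 6

embedding = nn.Embedding(vocab_size, embedding_dim)
cell = nn.GRUCell(embedding_dim, hidden_size)
to_vocab = nn.Linear(hidden_size, vocab_size)  # hidden state -> token scores

def greedy_generate(batch_size: int) -> torch.Tensor:
    """Generate token IDs one step at a time, feeding each prediction back in."""
    h = torch.zeros(batch_size, hidden_size)
    token = torch.full((batch_size,), start_token, dtype=torch.long)
    generated = []
    with torch.no_grad():
        for _ in range(max_len):
            h = cell(embedding(token), h)       # one GRU step
            token = to_vocab(h).argmax(dim=-1)  # pick the highest-scoring token
            generated.append(token)
    return torch.stack(generated, dim=1)        # (batch, max_len)

print(greedy_generate(batch_size=3).shape)  # torch.Size([3, 6])
```

A full layer such as torch.nn.GRU cannot express this loop in a single call, because the next input is not known until the current step has been computed.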

Implementing Natural Language Processing With PyTorch

There is still much more to say about getting started with NLP in PyTorch. Once you have selected a PyTorch class that suits your deep learning model, the next major decision is how to represent language as input to that model.

Encoding words is one of the most important steps in building a working NLP model. Neural networks operate on numbers, so NLP with PyTorch requires some word encoding method. Typically, each word is mapped to an integer index, and each index is then mapped to a learned vector called a word embedding.
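Here is a minimal sketch of the index-then-embedding pipeline, using a made-up sentence and an arbitrary embedding size of 5. torch.nn.Embedding is PyTorch's lookup table for this purpose. Its vectors start out random and are learned during training.

```python
import torch
import torch.nn as nn

sentence = "the cat sat on the mat".split()

# Assign each distinct word an integer index, in order of first appearance.
word_to_ix = {}
for word in sentence:
    if word not in word_to_ix:
        word_to_ix[word] = len(word_to_ix)

# One learnable 5-dimensional vector per vocabulary word.
embeds = nn.Embedding(num_embeddings=len(word_to_ix), embedding_dim=5)

ids = torch.tensor([word_to_ix[w] for w in sentence], dtype=torch.long)
vectors = embeds(ids)
print(word_to_ix)     # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(vectors.shape)  # torch.Size([6, 5])
```

Both occurrences of "the" map to index 0, so they receive the identical vector.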

There are ways to have models process individual characters. Most NLP deep learning models, however, aim to capture the meanings of words and phrases rather than individual letters. Here are three basic approaches to learning word embeddings with PyTorch:

- Simple word encoding: each word in the vocabulary gets its own embedding vector, and the model learns those vectors during training, deriving similarities and differences between words on its own. This is the simplest approach, but it can make it hard for the model to accurately capture semantics.

- N-Gram language modeling: the model is trained to predict each word from the words that precede it, for example the two previous words. Learning to make those predictions pushes the embeddings to capture how words function in relation to one another and within sentences.

- Continuous bag-of-words (CBOW): a variation on N-Gram language modeling in which the model predicts each word from a fixed number of words before it and a fixed number of words after it. Using context on both sides helps the model learn how words function alongside their neighbors. CBOW is one of the two model architectures introduced with word2vec and is a popular method for learning word embeddings.
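The N-Gram and CBOW approaches differ mainly in how training examples are built. The helpers below are a sketch in plain Python with hypothetical names. They turn a tokenized sentence into (context, target) pairs for an N-gram model, which looks only at preceding words, and for CBOW, which looks at a window on both sides. A model would then map each context to embeddings and learn to predict the target.

```python
def ngram_pairs(tokens, context_size=2):
    """(previous context_size words, next word) pairs for an N-gram model."""
    return [
        (tokens[i - context_size:i], tokens[i])
        for i in range(context_size, len(tokens))
    ]

def cbow_pairs(tokens, window=2):
    """(window words before + window words after, center word) pairs for CBOW."""
    return [
        (tokens[i - window:i] + tokens[i + 1:i + 1 + window], tokens[i])
        for i in range(window, len(tokens) - window)
    ]

tokens = "we are about to study the idea".split()
print(ngram_pairs(tokens)[0])  # (['we', 'are'], 'about')
print(cbow_pairs(tokens)[0])   # (['we', 'are', 'to', 'study'], 'about')
```

In a CBOW model, the embeddings of the context words are commonly summed or averaged before a linear layer scores every vocabulary word as the possible center word.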

Once you have selected a PyTorch class and a method of embedding words, you are ready to start using natural language processing in your next deep learning project!
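To tie the two choices together, here is a minimal sketch of a text classifier that combines an embedding layer with torch.nn.LSTM and takes one training step. The class name, sizes, and labels are hypothetical placeholders. A real project would add a tokenizer, a dataset, and a full training loop.

```python
import torch
import torch.nn as nn

class LSTMTextClassifier(nn.Module):
    """Hypothetical example: embed token IDs, run an LSTM, classify the final state."""

    def __init__(self, vocab_size, embedding_dim, hidden_size, num_classes):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_size, batch_first=True)
        self.classifier = nn.Linear(hidden_size, num_classes)

    def forward(self, token_ids):             # token_ids: (batch, seq)
        embedded = self.embedding(token_ids)  # (batch, seq, embedding_dim)
        _, (h_n, _) = self.lstm(embedded)     # h_n: (1, batch, hidden_size)
        return self.classifier(h_n[-1])       # (batch, num_classes) raw scores

model = LSTMTextClassifier(vocab_size=100, embedding_dim=16, hidden_size=32, num_classes=2)
batch = torch.randint(0, 100, (4, 7))         # 4 made-up sequences of 7 token IDs
labels = torch.tensor([0, 1, 1, 0])           # made-up labels for illustration

logits = model(batch)
print(logits.shape)  # torch.Size([4, 2])

# One gradient step with plain SGD.
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss = nn.functional.cross_entropy(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Swapping nn.LSTM for nn.GRU requires changing only the unpacking in forward, since a GRU returns a single hidden-state tensor rather than a (hidden, cell) tuple.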

Using natural language processing with PyTorch to create an end result like Siri, Image Source

Looking For More Information On Natural Language Processing or PyTorch?

Natural language processing is one of the hottest topics in deep learning and AI, with many industries looking for ways to use this type of model internally and externally.

There is much more to see and learn, so let us know if you think we missed anything. If you have questions about how to start implementing NLP or PyTorch, do not hesitate to reach out!

What do you think? Are you ready to tackle natural language processing with PyTorch? Feel free to comment below with any questions you have.

Have any questions?

Contact Exxact Today
