Python just isn’t fast enough.

Python has become the programming language of choice for Deep Learning, thanks to its emphasis on simplicity and the many packages users can import, such as Pandas, NumPy, and more. While its simple syntax can power complex tasks, Python itself is slow. Languages like C and C++ are far faster, so programmers who need speed typically rewrite the performance-critical sections of their Python programs in C, C++, Rust, or even CUDA.

Hardware that accelerates our workloads, like GPUs and CPUs, is usually the star. But just as we cover new advancements in hardware, we are excited to see a newly designed Python superset language that is making headlines in developer news despite not even being available yet.

The Language to Replace Python

There are numerous ongoing projects to make Python faster, such as JAX from Google. There are also alternative, data-science-oriented languages like Julia.

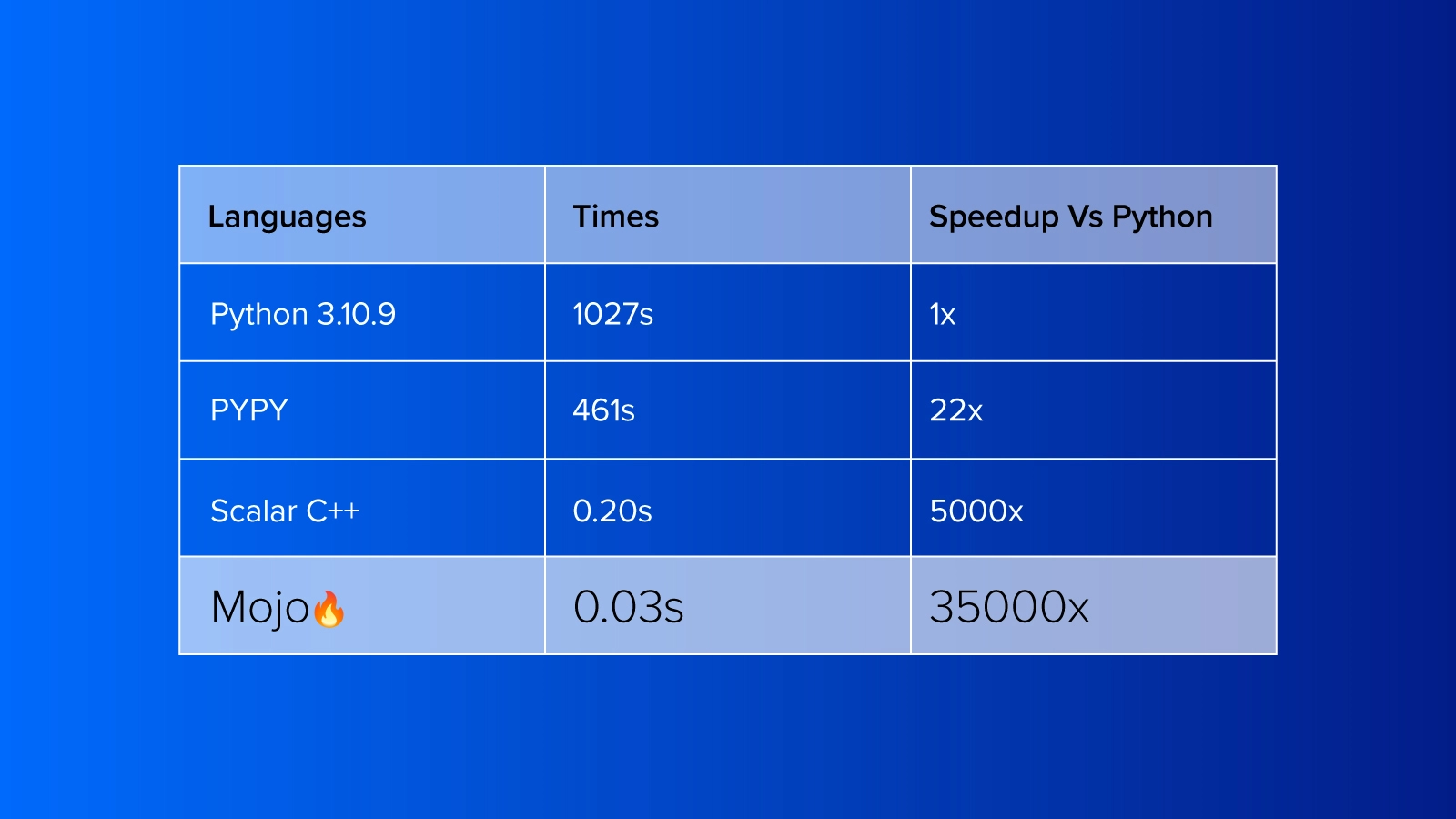

Mojo distinguishes itself from other Python enhancements through its claimed speedup of up to 35,000x over Python (when running numeric algorithms such as Mandelbrot), thanks to hardware acceleration. And Mojo isn’t a new kid on the block built by a random group: it comes from a team led by Chris Lattner, creator of Swift and the LLVM compiler infrastructure.
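To make the Mandelbrot benchmark concrete, here is a minimal pure-Python escape-time kernel, the kind of tight numeric loop where interpreter overhead dominates. This is our own sketch, not Modular's benchmark code; the function names, grid bounds, and `max_iter` default are illustrative assumptions.

```python
from typing import List, Tuple


def mandelbrot_escape(c: complex, max_iter: int = 200) -> int:
    """Count iterations of z = z*z + c until |z| > 2, capped at max_iter."""
    z = 0j
    for i in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return i  # escaped: c lies outside the Mandelbrot set
    return max_iter  # never escaped within the cap: treated as inside the set


def mandelbrot_grid(
    width: int,
    height: int,
    max_iter: int = 200,
    x_range: Tuple[float, float] = (-2.0, 0.5),
    y_range: Tuple[float, float] = (-1.25, 1.25),
) -> List[List[int]]:
    """Escape counts for a width x height grid of points in the complex plane."""
    x_min, x_max = x_range
    y_min, y_max = y_range
    grid = []
    for row in range(height):
        y = y_min + (y_max - y_min) * row / max(height - 1, 1)
        line = []
        for col in range(width):
            x = x_min + (x_max - x_min) * col / max(width - 1, 1)
            line.append(mandelbrot_escape(complex(x, y), max_iter))
        grid.append(line)
    return grid
```

Every grid point is computed independently, which is why this workload benefits so much from vectorization and multi-core parallelism in a compiled language.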

Fully Compatible with Python

Mojo is designed to be a superset of Python: it builds on top of Python to add new features while still being able to use your existing Python code, including the ability to import extensions and frameworks built specifically for Python.

The two languages will share many functions, features, and libraries, such as NumPy, Pandas, Matplotlib, TensorFlow, and more. Importing a Python library in Mojo looks like this:

```mojo
from python import Python

def main():
    # For Matplotlib
    plt = Python.import_module("matplotlib.pyplot")

    # For NumPy
    np = Python.import_module("numpy")
```
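Mojo's `Python.import_module` closely resembles Python's own standard-library `importlib.import_module`: you pass a dotted module name as a string and get back a module object whose attributes you can call. The Python sketch below shows that pattern; `load_module` is a hypothetical helper name, and stdlib modules stand in for NumPy and Matplotlib so it runs without extra packages.

```python
import importlib
from types import ModuleType


def load_module(dotted_name: str) -> ModuleType:
    """Import a module by its dotted name (analogous to Mojo's Python.import_module)."""
    return importlib.import_module(dotted_name)


json_mod = load_module("json")
# A dotted name returns the submodule itself, just as "matplotlib.pyplot" returns pyplot.
etree = load_module("xml.etree.ElementTree")
```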

Built for Accelerated Hardware

Mojo is designed for AI development and for exploiting AI hardware, such as Intel AMX units and NVIDIA Tensor Cores, through MLIR (Multi-Level Intermediate Representation). Mojo also has an autotune feature that automatically optimizes performance for your hardware.
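The core idea behind autotuning can be sketched in a few lines: run a kernel with each candidate parameter, time it on the actual machine, and keep the fastest. This Python version is an illustration of the general technique under our own assumptions (the `autotune` name, best-of-N timing, and wall-clock scoring), not Mojo's autotune API.

```python
import time
from typing import Callable, Iterable, TypeVar

P = TypeVar("P")


def autotune(kernel: Callable[[P], object], candidates: Iterable[P], repeats: int = 3) -> P:
    """Time kernel(candidate) for each candidate and return the fastest one.

    Each candidate runs `repeats` times and is scored by its best (minimum)
    wall-clock time, which filters out some scheduling noise.
    """
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    best_param = None
    best_time = float("inf")
    found = False
    for param in candidates:
        timings = []
        for _ in range(repeats):
            start = time.perf_counter()
            kernel(param)
            timings.append(time.perf_counter() - start)
        elapsed = min(timings)
        if elapsed < best_time:
            best_time, best_param, found = elapsed, param, True
    if not found:
        raise ValueError("autotune needs at least one candidate")
    return best_param
```

Because the measurement happens on the target machine, the chosen value can differ from one CPU or GPU to the next, which is the point of tuning per hardware.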

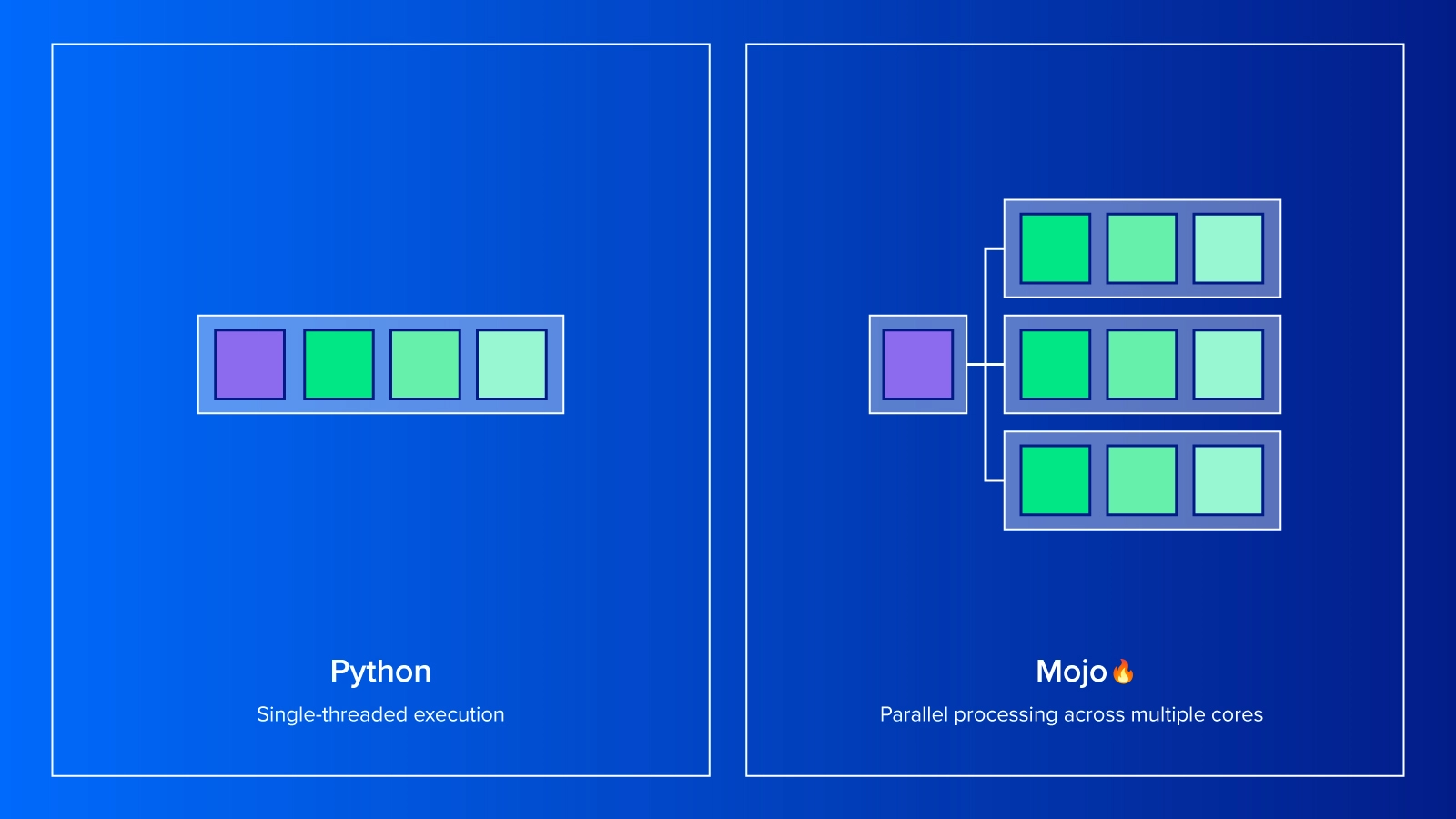

Where Python executes tasks serially, Mojo can parallelize them across multiple cores, which contributes to its claimed speedup of up to 35,000x.
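The split, process, and combine pattern behind multi-core parallelism can be sketched in Python with `concurrent.futures`. This is our own illustration, not how Mojo schedules work; the function names and the sum-of-squares workload are assumptions chosen for simplicity.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
from typing import List, Sequence


def sum_squares_chunk(chunk: Sequence[int]) -> int:
    """Serial kernel applied to one chunk of the input."""
    return sum(x * x for x in chunk)


def split_into_chunks(data: Sequence[int], parts: int) -> List[Sequence[int]]:
    """Split data into at most `parts` contiguous, roughly equal chunks."""
    if parts < 1:
        raise ValueError("parts must be >= 1")
    if not data:
        return []
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_squares_parallel(data: Sequence[int], workers: int = 4, use_processes: bool = True) -> int:
    """Process chunks concurrently, then combine the partial sums.

    Processes give real multi-core parallelism for CPU-bound work in CPython;
    threads (use_processes=False) share the GIL, so they mainly help with
    testing or I/O-bound work.
    """
    if workers < 1:
        raise ValueError("workers must be >= 1")
    if not data:
        return 0
    executor_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    with executor_cls(max_workers=workers) as pool:
        return sum(pool.map(sum_squares_chunk, split_into_chunks(data, workers)))
```

On platforms that start worker processes by spawning (such as Windows and macOS), call `sum_squares_parallel(..., use_processes=True)` from under an `if __name__ == "__main__":` guard.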

Strong Type Checking and Granularity

While Mojo is built on top of Python, it adds several features that make it better suited to fast, efficient computation and compilation. It lets your code be more granular, behaving exactly as you intended while remaining memory-efficient and safe.

Mojo adds mutable and immutable variables, declared with ‘var’ and ‘let’ respectively. It also introduces ‘struct’, a static type whose fields are fixed at compile time, so its data can be packed tightly in memory and accessed via pointers. Functions created with ‘fn’ require programmers to declare the data types of their arguments and return values (integers, strings, booleans, floating-point numbers, etc.). This added granularity keeps variables static and predictable instead of subject to Python’s inherently dynamic typing.
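Python has no direct equivalent of ‘struct’, ‘let’, or ‘fn’, but a rough analogy helps show what they buy you. In this sketch (our own, with hypothetical names `Point` and `add_points`), `__slots__` fixes the set of fields up front, `frozen=True` blocks reassignment, and the type annotations show what ‘fn’ would check, although Python itself does not enforce them.

```python
from dataclasses import FrozenInstanceError, dataclass


@dataclass(frozen=True)
class Point:
    """Python stand-in for a small Mojo-style struct (an analogy, not Mojo semantics).

    __slots__ fixes the set of fields up front (no per-instance __dict__),
    loosely like a struct's static layout; frozen=True blocks reassignment,
    loosely like binding with `let`.
    """

    __slots__ = ("x", "y")
    x: float
    y: float


def add_points(a: Point, b: Point) -> Point:
    # These annotations are only hints in Python and are not enforced at
    # run time; a Mojo `fn` would check argument types at compile time.
    return Point(a.x + b.x, a.y + b.y)


p = Point(1.0, 2.0)
q = add_points(p, Point(0.5, 0.5))

try:
    p.x = 3.0  # analogous to reassigning an immutable `let` binding
    reassignment_blocked = False
except FrozenInstanceError:
    reassignment_blocked = True
```

The key difference is timing: Python raises these errors (if at all) while the program runs, whereas Mojo's static checks catch them before the program runs.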

Keep in mind that because Mojo is built on top of Python, you can still write dynamic, Python-style code (with ‘def’) if you choose to.

Memory and Ownership

Ownership and the borrow checker are fundamental concepts in the Rust programming language. They are key features that help ensure memory safety and prevent common programming errors such as dangling pointers, use-after-free bugs, and data races.

Mojo adopts ownership and a borrow checker of its own, aiming to give you these memory-safety benefits without the rough edges.

```mojo
def reorder_and_process(owned x: HugeArray):
    # Update in place
    sort(x)

    # Transfer ownership
    give_away(x^)

    # Error: 'x' moved away!
    print(x[0])
```
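Mojo rejects the final `print(x[0])` at compile time because `x^` moved the value out. Python cannot express moves, but the following sketch imitates the same rule at run time so the semantics are easy to see. The `Owned` wrapper, `MovedError`, and the Python versions of `give_away` and `reorder_and_process` are hypothetical names made up for this illustration.

```python
class MovedError(RuntimeError):
    """Raised when a value is used after its ownership was transferred."""


class Owned:
    """Hypothetical run-time stand-in for Mojo's compile-time move checking."""

    def __init__(self, value):
        self._value = value
        self._moved = False

    def get(self):
        if self._moved:
            raise MovedError("value used after ownership was transferred")
        return self._value

    def move(self) -> "Owned":
        """Transfer ownership out (like Mojo's `x^`); this handle becomes unusable."""
        value = self.get()
        self._moved = True
        self._value = None
        return Owned(value)


def give_away(x: Owned) -> int:
    # Hypothetical consumer that now owns the data.
    return len(x.get())


def reorder_and_process(x: Owned):
    x.get().sort()           # update in place: we still own x
    n = give_away(x.move())  # transfer ownership, like give_away(x^)
    try:
        x.get()[0]           # Mojo would reject this line at compile time
    except MovedError as err:
        return n, str(err)
    return n, None
```

The advantage of Mojo's approach is that this class of bug is caught before the program ever runs, with no run-time bookkeeping.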

Parallelization

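Mojo adds a built-in SIMD (single instruction, multiple data) type, which represents a vector of values that a single hardware instruction can operate on at once. Rather than spreading work across multiple cores, SIMD uses a core's wide vector registers to process several data elements in one step.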
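In Mojo's standard library, the SIMD type is parameterized by element type and width (for example `SIMD[DType.float32, 4]`). The Python class below only models the semantics of a 4-lane vector, where each operation applies to every lane at once; it does not emit real SIMD instructions. The class name `SIMD4` and its `reduce_add` method are our own choices for illustration.

```python
from typing import Tuple


class SIMD4:
    """Models the semantics of a 4-lane vector; it does not emit SIMD instructions.

    Each operator applies one operation to every lane, which is what a single
    hardware SIMD instruction does to a vector register in one step.
    """

    WIDTH = 4
    __slots__ = ("lanes",)

    def __init__(self, *lanes: float) -> None:
        if len(lanes) != self.WIDTH:
            raise ValueError(f"expected {self.WIDTH} lanes, got {len(lanes)}")
        self.lanes: Tuple[float, ...] = tuple(lanes)

    def __add__(self, other: "SIMD4") -> "SIMD4":
        # Lane-wise add: one logical operation over all four lanes.
        return SIMD4(*(a + b for a, b in zip(self.lanes, other.lanes)))

    def __mul__(self, other: "SIMD4") -> "SIMD4":
        # Lane-wise multiply.
        return SIMD4(*(a * b for a, b in zip(self.lanes, other.lanes)))

    def reduce_add(self) -> float:
        # Horizontal reduction: collapse the lanes into one scalar.
        return sum(self.lanes)
```

For example, `(SIMD4(1, 2, 3, 4) * SIMD4(2, 2, 2, 2)).reduce_add()` is a 4-element dot product expressed as one multiply and one reduction.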

In deep learning and machine learning, matrix multiplication is a major bottleneck in pure Python, which is why it is typically offloaded to libraries written in faster languages. In Mojo, we can use the ‘parallelize’ function to spread this work across cores for a large speedup. But it doesn’t stop there. Mojo also supports tiling, which splits the computation into blocks so that data stays in cache and gets reused, and because hardware differs from machine to machine, its autotuning feature can evaluate candidate tile sizes and pick the fastest.
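Tiling is easiest to see next to a plain triple loop. The sketch below, written in Python for readability, computes the same product block by block; `matmul_naive`, `matmul_tiled`, and the default `tile=32` are our own illustrative choices, not Mojo's implementation.

```python
from typing import List

Matrix = List[List[float]]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Reference i-p-j triple loop: c[i][j] = sum over p of a[i][p] * b[p][j]."""
    n, k = len(a), len(b)
    m = len(b[0]) if b else 0
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for p in range(k):
            aip = a[i][p]
            for j in range(m):
                c[i][j] += aip * b[p][j]
    return c


def matmul_tiled(a: Matrix, b: Matrix, tile: int = 32) -> Matrix:
    """Same result as matmul_naive, but computed block by block.

    Each (i0, p0, j0) step touches only a tile x tile block of a, b and c,
    so a compiled implementation can keep those blocks in cache and reuse them.
    """
    if tile < 1:
        raise ValueError("tile must be >= 1")
    n, k = len(a), len(b)
    m = len(b[0]) if b else 0
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_c = a[i], c[i]
                    for p in range(p0, min(p0 + tile, k)):
                        aip, row_b = row_a[p], b[p]
                        for j in range(j0, min(j0 + tile, m)):
                            row_c[j] += aip * row_b[j]
    return c
```

In pure Python, interpreter overhead dominates, so tiling alone buys little here; the payoff comes in compiled code, where the best `tile` value depends on cache sizes and is exactly the kind of parameter worth autotuning.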

How to Get Started with Mojo

Mojo (as of May 2023) is still in development and is not open to the public. However, you can sign up on a waiting list to access their Mojo playground.

We are by no means hardcore programming experts, so please check out Modular’s website for more on Mojo and its documentation. Mojo's developers stress that their new language should be easy to adopt in existing workloads. Being able to run all your existing Python code in Mojo would be a huge plus, and it is a development worth watching in the future of AI.

Exxact is a supplier of High-Performance GPU solutions for AI and Deep Learning.

Contact us today to boost your computing to the next level.

Mojo🔥 - A Programming Language for AI Developers 35000x Faster than Python
