Introduction to Deep Learning for Manufacturing

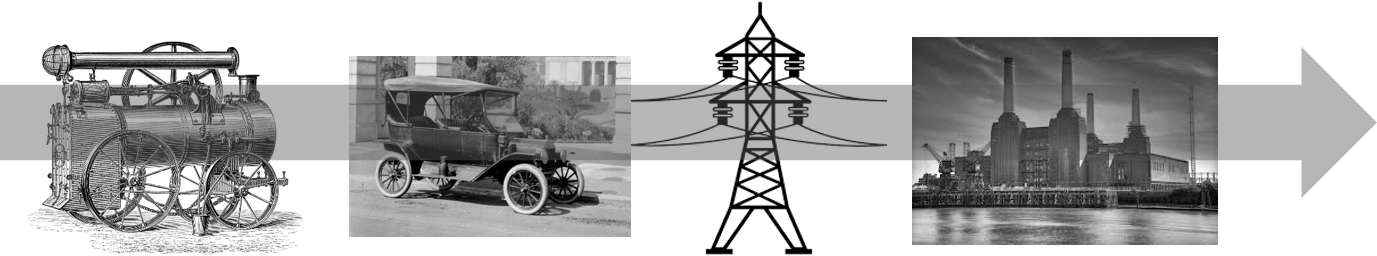

Before getting into the details of deep learning for manufacturing, it's good to step back and look at a brief history. Concepts, original thinking, and physical inventions have been shaping the world economy and the manufacturing industry since the beginning of the modern industrial era in the 18th century.

Ideas about economies of scale from the likes of Adam Smith and John Stuart Mill, the first industrial revolution and its steam-powered machines, the electrification of factories in the second industrial revolution, and Henry Ford's assembly line method are just a few prime examples of how the search for efficiency and productivity has always been at the heart of manufacturing.

Almost all of these inventions, however, centered on extracting maximum efficiency from people and machines by carefully exploiting the laws of mechanics and thermodynamics. Over the past few decades, the greatest new gains in manufacturing have come from adding information, or data, into the existing mix.

Adding Information Into the Mix

Movement of raw materials, goods, and parts is at the heart of any manufacturing system. After the revolution in computing and information technology, it became clear that such physical movement can be optimally efficient only when it is precisely controlled, in coordination with hundreds of other similar movements, by an information-processing engine. This innovative combination of hardware and software has ushered the "old industries" into the era of smart manufacturing.

Today, however, manufacturing industries worldwide face a new problem stemming from those very information-processing systems: the twin (and related) problems of a data deluge and an information explosion.

As the cost of computing and storage fell at an exponential pace (a trend popularly associated with Moore’s law), the information generated by workers, machines, controllers, factories, warehouses, and logistics systems grew in size and complexity so quickly that it took traditional manufacturing organizations by surprise.

They have not been alone. Even information-savvy software and IT organizations have had to confront the same issue over the last decade or so; Google’s own blog posts and publications have acknowledged that the complexity of its software projects was becoming unwieldy.

The solution?

Innovative ideas from artificial intelligence and machine learning have come to the rescue of many software organizations that were drowning in data, helping them make sense of the exabytes of data they need to process every day.

While not yet at the same scale, manufacturing organizations around the world are also warming up to the idea of using cutting-edge advances in these fields to aid and enhance their operations and to keep delivering the highest value to their customers and shareholders. Let’s take a look at a few interesting examples and practical cases.

Potential Applications of Deep Learning in Manufacturing

Digital transformation and the application of modeling techniques have been under way in manufacturing for quite some time. As inefficiencies plagued global manufacturing in the 1960s and 1970s, almost every big organization streamlined its operations and adopted good practices such as those of the Toyota Production System. These practices relied on continuous measurement and statistical modeling of a multitude of process variables and product features.

As the measurement and storage of this information became digitized, computers were brought in to build those predictive models. This was the precursor of today’s digital analytics.

However, as the data explosion continues, traditional statistical modeling cannot keep up with such high-dimensional, unstructured data feeds. This is where deep learning shines: it is inherently capable of modeling highly nonlinear patterns, and it can discover features that are extremely difficult for statisticians or data modelers to spot manually.

Quality Control with Machine Learning and Deep Learning

Machine learning in general, and deep learning in particular, can significantly improve quality control on a large assembly line. According to Forbes, analytics- and ML-driven process and quality optimization is predicted to grow by 35%, and process visualization and automation by 34%.

Traditionally, machines have been effective at spotting quality issues only through simple, high-level metrics such as a product’s weight or length. Without spending a fortune on very sophisticated computer vision systems, it was not possible to detect subtle visual cues of quality issues while parts whizz by on an assembly line at high speed.

Even then, those computer vision systems were somewhat unreliable and did not scale well across problem domains. One section of a large manufacturing plant might have such a system, but it could not be ‘trained’ to work in other sections of the plant when needed.

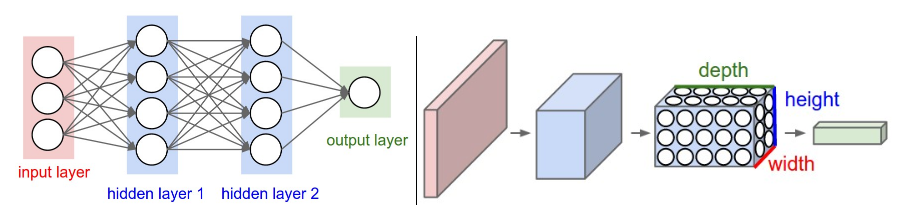

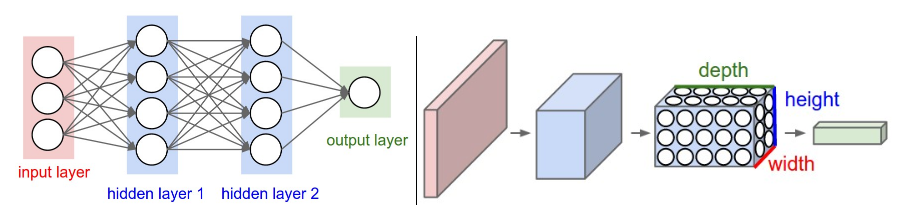

Deep learning architectures such as convolutional neural networks (CNNs) are well positioned to take over from human operators the job of spotting visual cues that indicate quality problems in manufactured goods and parts on a large assembly line. They are much more scalable than their older counterparts, which relied on hand-crafted feature engineering, and they can be retrained and redeployed in whichever section of the plant needs them. Retraining mainly requires relevant image data from the new section.
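To make the core operation concrete, here is a minimal pure-Python sketch of one CNN "feature detector": slide a small filter over an image, apply a ReLU, and global-max-pool the result into a single score. The 5×5 patches, the vertical-scratch filter, and the function names are hypothetical illustrations; in a trained CNN the filter weights are learned from labeled images rather than set by hand, and many such filters are stacked across several layers.

```python
from typing import List

Image = List[List[float]]


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Slide `kernel` over `image` without padding ('valid' mode).

    Like most deep learning libraries, this computes cross-correlation,
    which is what a CNN layer calls 'convolution'.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out: Image = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def defect_score(image: Image, kernel: Image) -> float:
    """Convolution -> ReLU -> global max pooling: one feature detector of a CNN."""
    feature_map = conv2d_valid(image, kernel)
    activated = [[max(0.0, v) for v in row] for row in feature_map]  # ReLU
    return max(max(row) for row in activated)                       # global max pool


# HYPOTHETICAL hand-set filter that responds to a thin, bright vertical line
# (a "scratch"). In a trained CNN these weights would be learned, not chosen.
scratch_filter = [
    [-1.0, 2.0, -1.0],
    [-1.0, 2.0, -1.0],
    [-1.0, 2.0, -1.0],
]

# HYPOTHETICAL 5x5 grayscale patches (0.0 = dark, 1.0 = bright).
clean_part = [[0.2] * 5 for _ in range(5)]
scratched_part = [[0.2, 0.2, 0.9, 0.2, 0.2] for _ in range(5)]

print(f"{defect_score(clean_part, scratch_filter):.2f}")      # -> 0.00
print(f"{defect_score(scratched_part, scratch_filter):.2f}")  # -> 4.20
```

Because the filter's weights sum to zero, a uniform surface produces no response, while a bright line under the filter's center produces a strong one. Retraining for a new plant section amounts to fitting such weights on new images instead of re-engineering them by hand.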

Process Monitoring and Anomaly Detection

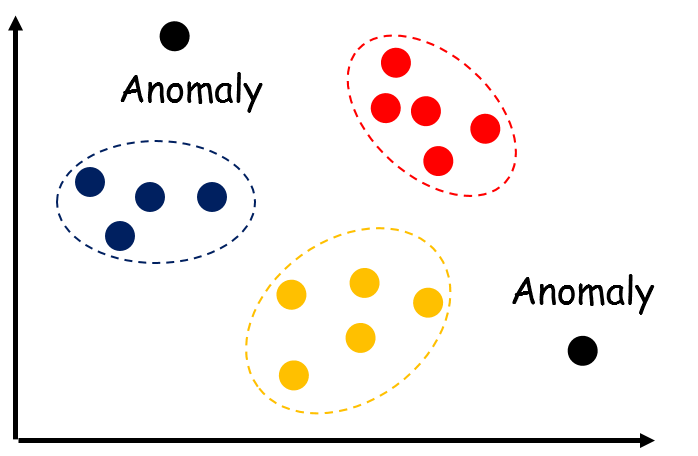

Process monitoring and anomaly detection are essential to any continuous quality improvement effort, and all the major manufacturing organizations use them extensively. Traditional approaches such as SPC (Statistical Process Control) charts rest on simple (and sometimes wrong) assumptions about the statistical distribution of the process variables, for example that measurements are independent and normally distributed.
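For comparison, here is a minimal sketch of the classical approach: a Shewhart-style chart that estimates the center line and spread from an in-control baseline, sets limits at mean ± 3 standard deviations, and flags new readings outside them. The usual interpretation of those limits (roughly 0.27% false alarms) holds only if readings are approximately independent and normally distributed. The shaft-diameter readings are hypothetical, and a textbook individuals chart would usually estimate σ from the average moving range rather than the sample standard deviation used here for brevity.

```python
import statistics
from typing import List, Tuple


def control_limits(baseline: List[float], k: float = 3.0) -> Tuple[float, float, float]:
    """Return (lower, center, upper) limits as mean -/+ k standard deviations."""
    center = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)  # sample standard deviation of the baseline
    return center - k * sigma, center, center + k * sigma


def out_of_control(readings: List[float], limits: Tuple[float, float, float]) -> List[int]:
    """Indices of readings that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, x in enumerate(readings) if x < lower or x > upper]


# HYPOTHETICAL in-control baseline: shaft diameter in mm.
baseline = [10.01, 9.99, 10.00, 10.02, 9.98, 10.01, 10.00, 9.99, 10.02, 9.98]
limits = control_limits(baseline)  # roughly (9.955, 10.000, 10.045)

new_readings = [10.00, 10.01, 10.09, 9.99]
print(out_of_control(new_readings, limits))  # -> [2]
```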

However, as the number of mutually interacting variables increases, and an ever-growing array of sensors picks up both stationary and time-varying data about them, traditional approaches struggle to maintain accuracy and reliability at scale.

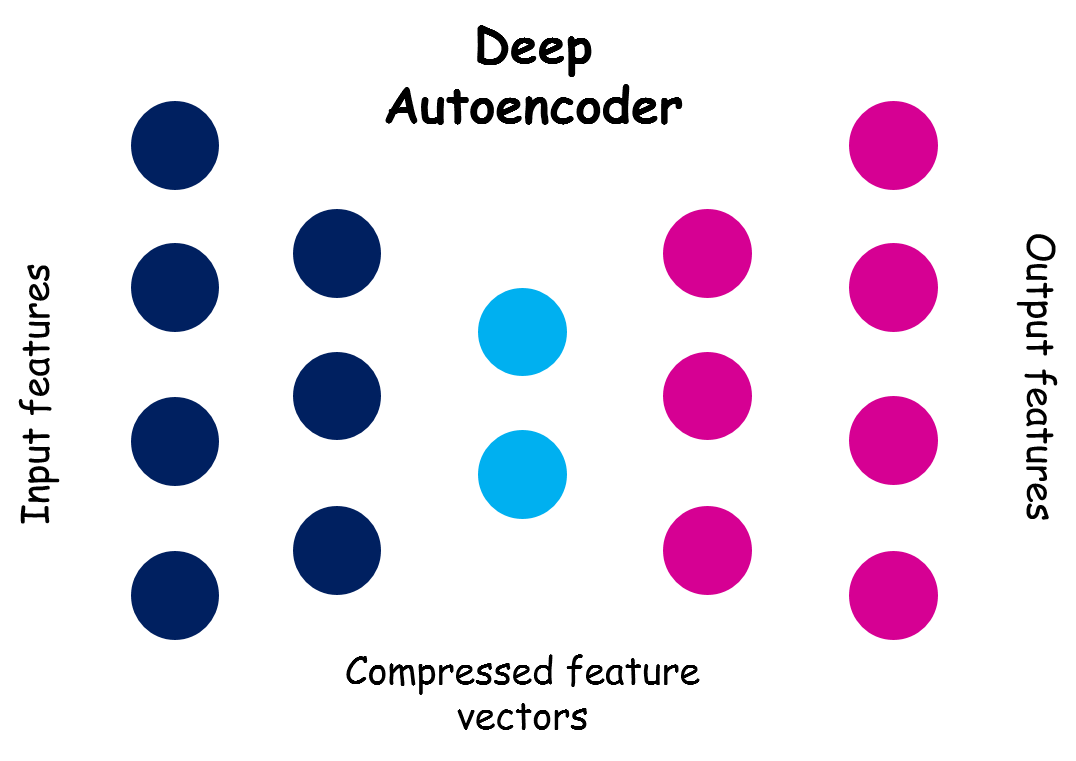

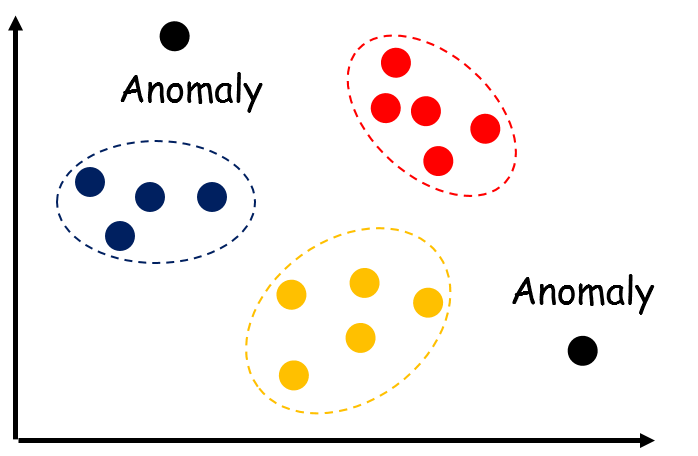

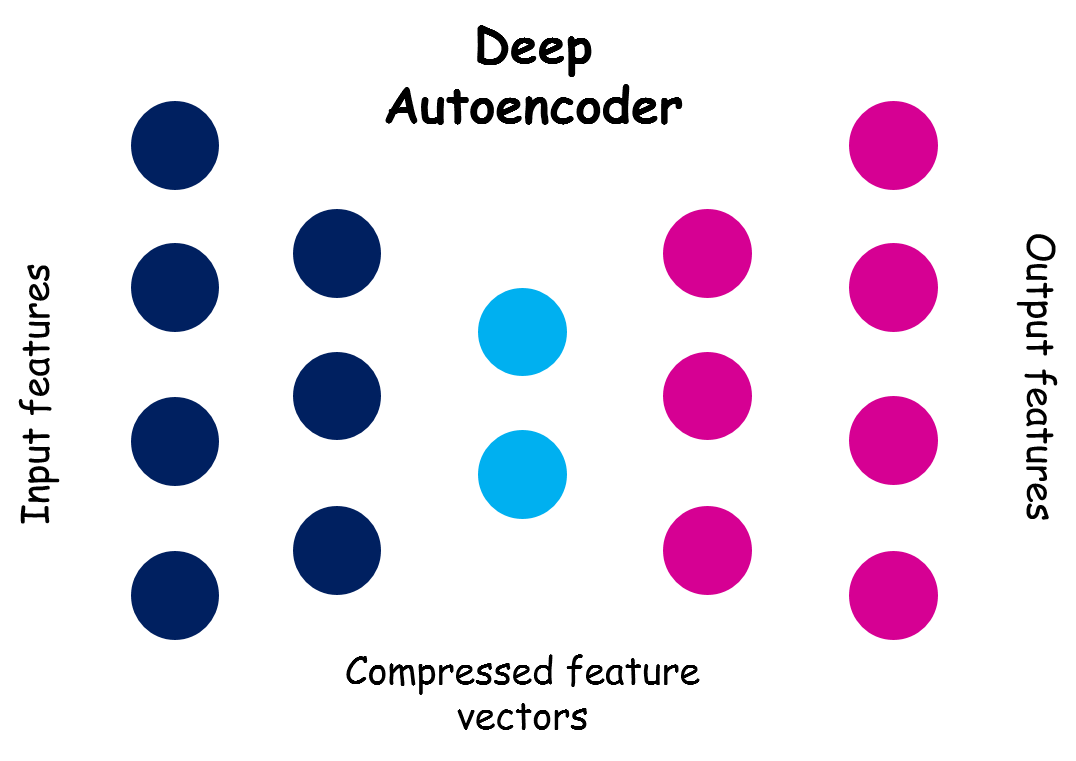

This is where deep learning models can help in a rather unexpected way. To detect anomalies, or departures from the norm, practitioners often use dimensionality-reduction techniques such as PCA (Principal Component Analysis), borrowed from traditional statistical signal processing. Instead, one can use standard or variational autoencoders: deep neural networks whose encoder layers progressively shrink the representation (for example, with convolutional filters and pooling) and whose decoder layers progressively expand it back to the original size.

These encoder networks look past noise and ordinary variation and encode the essential features of a signal or data stream in a compact, low-dimensional representation. When looking for anomalies in a continuously running, high-volume process, it is much easier to watch a handful of encoded values, or how well the network can reconstruct its input from them, for unexpected changes.

In short, the central problem of process monitoring can be handled by the branch of machine learning known as unsupervised learning, which does not require labeled examples of every possible fault. In this respect, deep learning autoencoders are a powerful set of tools you can employ.
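A minimal sketch of reconstruction-error anomaly detection, written in pure Python so it runs without a deep learning framework. It is deliberately tiny: a linear autoencoder with a one-value bottleneck, which effectively learns the same subspace as PCA. A practical system would replace the two weight vectors with deeper, nonlinear (often convolutional) encoder and decoder networks in a framework such as PyTorch or TensorFlow, but the scoring logic is the same: learn to reconstruct normal data, then flag inputs that reconstruct poorly. The two-sensor data, learning rate, epoch count, and "worst training error" threshold are all hypothetical choices.

```python
import random
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


class TinyLinearAutoencoder:
    """Encoder: d inputs -> 1 latent value. Decoder: 1 latent value -> d outputs."""

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w = [rng.uniform(-0.5, 0.5) for _ in range(dim)]  # encoder weights
        self.v = [rng.uniform(-0.5, 0.5) for _ in range(dim)]  # decoder weights

    def reconstruct(self, x: Sequence[float]) -> Vector:
        z = dot(self.w, x)                # compress to the bottleneck
        return [vk * z for vk in self.v]  # expand back to input space

    def error(self, x: Sequence[float]) -> float:
        """Squared reconstruction error, used as the anomaly score."""
        return sum((xk - rk) ** 2 for xk, rk in zip(x, self.reconstruct(x)))

    def fit(self, data: List[Vector], lr: float = 0.05, epochs: int = 1000) -> None:
        """Full-batch gradient descent on the mean squared reconstruction error."""
        n, dim = len(data), len(self.w)
        for _ in range(epochs):
            grad_w, grad_v = [0.0] * dim, [0.0] * dim
            for x in data:
                z = dot(self.w, x)
                residual = [vk * z - xk for vk, xk in zip(self.v, x)]  # x_hat - x
                dz = 2.0 * dot(residual, self.v)                        # dLoss/dz
                for k in range(dim):
                    grad_v[k] += 2.0 * residual[k] * z / n
                    grad_w[k] += dz * x[k] / n
            self.w = [wk - lr * gk for wk, gk in zip(self.w, grad_w)]
            self.v = [vk - lr * gk for vk, gk in zip(self.v, grad_v)]


# HYPOTHETICAL normal operation: two sensors that track the same process state.
data_rng = random.Random(42)
normal = []
for _ in range(100):
    t = data_rng.uniform(-1.0, 1.0)
    normal.append([t + data_rng.uniform(-0.05, 0.05), t + data_rng.uniform(-0.05, 0.05)])

ae = TinyLinearAutoencoder(dim=2)
ae.fit(normal)
threshold = max(ae.error(x) for x in normal)  # HYPOTHETICAL threshold choice

print(ae.error([0.5, 0.5]) > threshold)   # sensors agree    -> False
print(ae.error([1.0, -1.0]) > threshold)  # sensors disagree -> True
```

Note that each coordinate of `[1.0, -1.0]` is an ordinary value for that sensor on its own (normal readings span roughly -1 to 1), so separate per-sensor control limits would not flag it. Only the relationship between the sensors is abnormal, which is the kind of interaction effect that reconstruction-based detectors catch.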

As process complexity and the associated big data keep growing, there is little doubt that conventional statistical modeling (which is based on small-scale sampling of data) will increasingly give way to such advanced ML techniques and models.

These articles provide a good overview:

- Machine learning for anomaly detection and condition monitoring

- Deep Learning for Anomaly Detection: A Survey

Predictive Maintenance with Deep Learning

Deep learning models have already been applied effectively to time-series data in economics and financial modeling. Predictive maintenance is also a time-series problem: data is collected over time to monitor the health of an asset, with the goal of finding patterns that predict failures. Deep learning can therefore be a significant aid for predictive maintenance of complex machinery and connected systems.

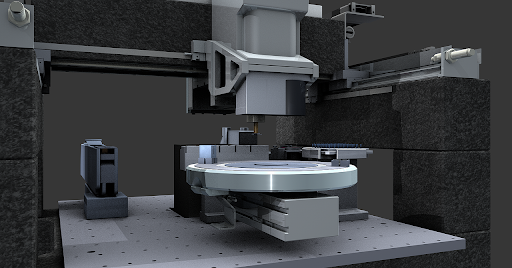

Determining when to conduct maintenance on equipment is an exceptionally difficult task with high financial and managerial stakes. Each time a machine is taken offline for maintenance, the result is reduced production, or even factory downtime. Frequent fixes translate into clear losses, but infrequent maintenance can lead to even more costly breakdowns and catastrophic industrial accidents.

This is why the automated feature learning of neural networks is so important. Traditional ML algorithms for predictive maintenance depend on narrow, domain-specific expertise to hand-craft features that detect machine health issues. A neural network, by contrast, can learn those features automatically, given sufficient high-quality training data, which makes the approach easier to carry across domains and to scale.
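For contrast, "hand-crafted" features typically look like this minimal sketch: summary statistics of a vibration window that a domain expert has chosen because they tend to correlate with wear or impacts. RMS, peak, and crest factor are standard condition-monitoring features; the function name and example signals are hypothetical. Deciding which such features matter for a given machine is exactly the expert judgment that a neural network tries to learn from data instead.

```python
import math
from typing import Dict, Sequence


def vibration_features(window: Sequence[float]) -> Dict[str, float]:
    """Hand-crafted condition-monitoring features for one window of vibration samples."""
    n = len(window)
    rms = math.sqrt(sum(x * x for x in window) / n)  # overall vibration energy
    peak = max(abs(x) for x in window)               # largest excursion
    crest_factor = peak / rms if rms > 0 else 0.0    # "spikiness", e.g. from impacts
    return {"rms": rms, "peak": peak, "crest_factor": crest_factor}


# HYPOTHETICAL windows: a smooth vibration, and the same signal with one impact spike.
smooth = [math.sin(2 * math.pi * k / 20) for k in range(100)]
impacted = list(smooth)
impacted[50] = 5.0

print(round(vibration_features(smooth)["crest_factor"], 2))    # -> 1.41
print(round(vibration_features(impacted)["crest_factor"], 2))  # -> 5.77
```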

In particular, recurrent neural networks (RNNs) built from long short-term memory (LSTM) cells or gated recurrent units (GRUs) can learn from historical time-series data to predict short- to mid-range temporal behavior.
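Before an LSTM or GRU can be trained, the sensor history is usually cut into fixed-length windows, each labeled with what happened next. The following is a minimal sketch of that preparation step, assuming a single sensor, a known failure time, and a binary "fails within `horizon` steps" target. All three are hypothetical choices; regressing the remaining useful life directly is a common alternative.

```python
from typing import List, Sequence, Tuple


def make_windows(
    readings: Sequence[float],
    failure_index: int,
    window: int,
    horizon: int,
) -> List[Tuple[List[float], int]]:
    """Turn one run-to-failure sensor log into (input window, label) pairs.

    label = 1 if the failure at `failure_index` occurs within `horizon`
    steps after the last reading in the window, else 0.
    """
    samples = []
    for end in range(window, failure_index + 1):
        last = end - 1                       # index of the newest reading in the window
        remaining_life = failure_index - last
        label = 1 if remaining_life <= horizon else 0
        samples.append((list(readings[end - window:end]), label))
    return samples


# HYPOTHETICAL bearing temperature (deg C) rising until failure at index 9.
readings = [20, 21, 21, 22, 24, 27, 31, 36, 42, 50]
samples = make_windows(readings, failure_index=9, window=3, horizon=2)
print([label for _, label in samples])  # -> [0, 0, 0, 0, 0, 1, 1]
```

Each window would then be fed to the recurrent network as a sequence of `window` time steps, with the label as the training target.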

Fortunately, a large body of research on RNNs has been driven by natural language processing and text analytics, and that knowledge can be leveraged in industrial applications. For example, compute-efficient RNN variants reduce computational load without sacrificing too much predictive power. Such a model may not be the best performer on an NLP task, yet it can be powerful enough to predict potential issues from machine-health parameters.
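One concrete example of a cheaper recurrent variant is the GRU, which computes three gated transformations per step (update gate, reset gate, candidate state) instead of the LSTM's four (input, forget, and output gates plus the candidate cell state). The back-of-the-envelope count below uses the textbook formulation with one bias vector per transformation; frameworks differ slightly (PyTorch, for instance, keeps two bias vectors per gate). The sensor and hidden-unit counts are hypothetical.

```python
def lstm_params(input_size: int, hidden_size: int) -> int:
    """Textbook LSTM layer: 4 transformations, each with input weights,
    recurrent weights, and one bias vector."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


def gru_params(input_size: int, hidden_size: int) -> int:
    """Textbook GRU layer: 3 transformations with the same structure."""
    return 3 * (hidden_size * (input_size + hidden_size) + hidden_size)


# HYPOTHETICAL machine-health model: 10 sensor channels, 32 hidden units.
print(lstm_params(10, 32))  # -> 5504
print(gru_params(10, 32))   # -> 4128
```

Since per-step multiply-adds scale with the same weight matrices, the GRU saves roughly a quarter of both parameters and compute for the same hidden size.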

Typically, a human expert still reviews the predictions of a deep learning system before making the final maintenance decision. But in a smart, connected factory, using such prediction machines alongside engineers and technicians can save a manufacturing organization money and manpower, ultimately reducing downtime and improving machine utilization.

The adoption of machine learning and analytics in manufacturing will only strengthen predictive maintenance: according to PwC, the use of predictive maintenance is expected to increase by 38% over the next five years. This article from Microsoft provides more information on the topic:

Deep learning for predictive maintenance with Long Short Term Memory Networks

Factory Input Optimization

A manufacturing organization’s profitability depends critically on optimizing the physical resources that go into, and support, the production process. Electrical power and water supply, for example, are two crucial factory inputs that can benefit from optimization.

Complex optimization processes and strategies are often employed to maximize the utilization of these essential resources. As factory size and machine-to-machine interaction grow, the flow of these resources becomes intractably complex to manage with simple predictive algorithms. This is where powerful learning models such as neural networks come into play.

Deep learning systems can track electricity usage as a function of hundreds of plant process parameters and product design variables, and can dynamically recommend operating practices for optimal utilization. If the organization is moving toward renewable energy, predictions from deep learning models can be used to chart the optimal transition path from fossil-fuel dependency to a sustainable energy footprint. This kind of paradigm change is difficult to handle with classical predictive analytics.
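A minimal sketch of the "predict, then recommend" loop, assuming a model has already been trained to predict power draw from process settings. The `predicted_power_kw` function is a hypothetical hand-written stand-in for that trained network, and the settings, ranges, and throughput model are invented for illustration. The point is only the pattern: search candidate settings, discard those that miss production constraints, and recommend the one with the lowest predicted consumption.

```python
from itertools import product
from typing import Optional, Tuple


def predicted_power_kw(line_speed: float, oven_temp_c: float) -> float:
    """HYPOTHETICAL stand-in for a trained network's power-draw prediction."""
    return 40.0 + 1.5 * line_speed + 0.004 * (oven_temp_c - 175.0) ** 2


def throughput_per_hour(line_speed: float) -> float:
    """HYPOTHETICAL production model: output proportional to line speed."""
    return 12.0 * line_speed


def recommend_settings(min_throughput: float) -> Optional[Tuple[float, float, float]]:
    """Return (line_speed, oven_temp_c, predicted_kw) with the lowest predicted
    power among candidate settings that meet the throughput target, or None."""
    speeds = [10.0, 15.0, 20.0, 25.0, 30.0]
    temps = [float(t) for t in range(160, 201, 5)]
    best: Optional[Tuple[float, float, float]] = None
    for speed, temp in product(speeds, temps):
        if throughput_per_hour(speed) < min_throughput:
            continue  # would miss the production target
        power = predicted_power_kw(speed, temp)
        if best is None or power < best[2]:
            best = (speed, temp, power)
    return best


print(recommend_settings(min_throughput=200.0))  # -> (20.0, 175.0, 70.0)
```

With hundreds of settings, exhaustive grid search becomes infeasible and would be replaced by a numerical optimizer or a gradient-based search through the trained network; the structure of the loop stays the same.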

Summary

Information-system-enabled smart manufacturing has increased the productivity and quality of industrial organizations, big and small, for several decades now. In this setting, the use of data analytics, statistical modeling, and predictive algorithms has increased by leaps and bounds as the quality and availability of machine-generated and human-generated data improved. The era of mass production, which began with Henry Ford’s assembly line early in the 20th century, was aided throughout that century by innovations in automation, control systems, electronics, sensors, digital computing, and the internet. The big data revolution of the 21st century is poised to take it to a whole new level by unleashing exponential growth opportunities.

To take full advantage of this data explosion, deep learning and associated AI-assisted techniques must become part of the toolkit of modern manufacturing systems, because they can capture patterns in high-dimensional, unstructured data that classical statistical learning and prediction systems struggle with.

Deep learning fits well with the ambitious goals of Industry 4.0: extreme automation and the digital factory. Industry 4.0 is designed around constant connection to information, with sensors, drives, and valves all working together toward a single common goal: minimizing downtime and increasing efficiency. Deep neural networks, which are flexible enough to handle a variety of data types as they stream in continuously, are well suited to this kind of task.

The resulting increase in productivity and quality is expected to go far beyond the narrow goal of satisfying corporate profitability. Tomorrow’s smart manufacturing will enrich the lives of billions of consumers by providing goods and services with high quality and at an affordable cost. Society, as a whole, should benefit from such paradigm-shifting transformation.

The future is bright and we look forward to it.

Deep Learning for Manufacturing: Overview & Applications

Introduction to Deep Learning for Manufacturing

Before getting into the details of deep learning for manufacturing, it's good to step back and view a brief history. Concepts, original thinking, and physical inventions have been shaping the world economy and manufacturing industry since the beginning of modern era i.e. early 18th century.

Ideas of economies-of-scale by the likes of Adam Smith and John Stuart Mill, the first industrial revolution and steam-powered machines, electrification of factories and the second industrial revolution, and the introduction of the assembly line method by Henry Ford, are just some of the prime examples of how the search for high efficiency and enhanced productivity have always been at the heart of manufacturing.

However, almost all of these inventions centered around extracting the maximum efficiency from men and machines by carefully manipulating the laws of mechanics and thermodynamics. For the past few decades, however, the greatest new gains in manufacturing have come from adding the concept of information or data into the existing mix.

Adding Deep Learning Information Into the Mix

Movement of raw materials, goods, and parts is at the heart of any manufacturing system. After the revolution in computing and information technology, it was realized that such physical movement can only be optimally efficient when that movement is controlled in a precise manner, in conjunction with hundreds of other similar movements, supervised by an information-processing engine. Therefore, innovative combination of hardware and software has ushered the "old industries" into the era of smart manufacturing.

But, today the manufacturing industries worldwide are facing a new problem stemming from those very information-processing systems. It is the dual (and related) problem of deluge of data and information explosion.

As the cost and operational complexity of computing and storage decreased at an exponential pace (Moore’s law), the information content generated by workers, machines, controllers, factories, warehouses, and the logistic machinery exploded in size and complexity in such a manner, that it took traditional manufacturing organizations by surprise.

However, they have not been alone. Even the information-savvy software and IT organizations have had to confront the same issue in the last decade or so. Google’s blogs and publications have admitted that the complexity of their software projects were becoming unwieldy.

The solution?

Innovative ideas in the field of artificial intelligence and machine learning have come to rescue many software organizations from being drowned in the deluge of data and have helped them make sense of the exabytes of data, that they need to process everyday.

While not at the same scale yet, manufacturing organizations around the world are also warming up to the idea of using cutting-edge advances in these fields to aid and enhance their operation and continue delivering the highest value to their customers and shareholders. Lets take a look at a few interesting examples and practical cases.

Potential Applications of Deep learning in Manufacturing

It is to be noted that digital transformation and application of modeling techniques has been going on in the arena of manufacturing industry for quite some time. As inefficiencies plagued global manufacturing in the 60’s and 70’s, almost every big organization streamlined and adopted good practices like Toyota's Manufacturing Technique. This kind of technique relied on continuous measurement and statistical modeling of a multitude of process variables and product features.

As the measurement and storage of such information became digitized, computers were brought in for building those predictive models. This was the precursor to modern digital analytics of today.

However, as the data explosion continues, traditional statistical modeling cannot keep up with such high-dimensional, non-structured data feed. It is here that deep learning shines bright as it is inherently capable of dealing with highly nonlinear data patterns and also allowing you to discover features that are extremely difficult to be spotted by statisticians or data modelers manually.

Quality Control in Machine Learning and Deep Learning

Machine learning, in general, and deep learning, in particular, can significantly improve the quality control tasks in a large assembly line. In fact, analytics and ML-driven process and quality optimization are predicted to grow by 35% and process visualization and automation is slated to grow by 34%, according to Forbes.

Traditionally, machines have only been effective at spotting quality issues with high-level metrics such as weight or length of a product. Without spending a fortune on very sophisticated computer vision systems, it was not possible to detect subtle visual clues on quality issues while the parts whizz by on an assembly line at high speed.

Even then, those computer vision systems were somewhat unreliable and unable to scale effectively across problem-area domains. A particular sub-organization of a large manufacturing plant might have such a system but it could not be ‘trained’ to work with other sections of the plant if that was needed.

Deep learning architectures like convolutional neural nets are particularly poised to take over from human operators to spot and detect visual clues indicative of quality problems in manufactured goods and parts in a large assembly process. They are much more scalable than their older counterparts, which relied on hand-crafted feature engineering, and can be trained and re-deployed in whichever section of the manufacturing plant needs them. All that needs to happen for retraining is to train the system with relevant image data.

Process Monitoring and Anomaly Detection

Process monitoring and anomaly detection is necessary for any continuous quality improvement effort. All the major manufacturing organizations use it extensively. Traditional approaches like SPC (Statistical Process Control) charts have stemmed from simple (sometimes wrong) assumptions about the nature of the statistical distribution of the process variables.

However, as the number of mutually-interacting variables increases and an ever-increasing array of sensors pick up stationary and time-varying data about these variables, the traditional approaches do not scale with high accuracy or reliability.

This is where deep learning models can help in a rather unexpected manner. To detect anomaly or departure from the norm, often dimensionality reduction techniques like PCA (Principal Component Analysis) is used from traditional statistical signal processing domain. However, one can use static or variational Autoencoders, which are deep neural networks with layers consisting of progressively decreasing and increasing convolutional filters (and pooling).

These type of encoder networks look past the noise and usual variance and encode the essential features of a signal or datastream in a small number of high-dimensional bits. It is much easier to track highly encoded bits if they are changing unexpectedly when one is looking for anomalies in a continuously running, high-volume process.

In brief, the central problem of process monitoring is something which can be handled by the branch of machine learning known as unsupervised machine learning. In this respect, deep learning autoencoders are a powerful set of tools you can employ.

As the process complexity and associated Big Data grows without bounds, it is of no doubt that conventional statistical modeling (which is based on small scale sampling of data), will give away to such advanced ML techniques and models.

These articles provide a good overview:

- Machine learning for anomaly detection and condition monitoring

- Deep Learning for Anomaly Detection: A Survey

Predictive Maintenance in Deep Learning

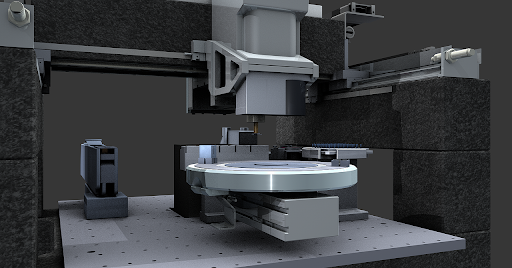

Deep learning models have already proven to be highly effective in the domain of economics and financial modeling, dealing with time-series data. Similarly, in predictive maintenance, the data is collected over time to monitor the health of an asset with the goal of finding patterns to predict failures. Consequently, deep learning can be of significant aid for predictive maintenance of complex machinery and connected systems.

Determining when to conduct maintenance on equipment is an exceptionally difficult task with high financial and managerial stakes. Each time a machine is taken offline for maintenance, the result is reduced production, or even factory downtime. Frequent fixes translate into clear losses, but infrequent maintenance can lead to even more costly breakdowns and catastrophic industrial accidents.

This is why the automated feature engineering of neural networks is of critical importance. Traditional ML algorithms for predictive maintenance depends on narrow, domain-specific expertise to hand-craft features to detect machine health issues. Whereas a neural net can infer those features automatically with sufficiently high-quality training data. It is therefore, cross-domain and scalable.

In particular, recurrent neural networks (RNN) with Long-short-term-memory (LSTM) cells or gated-recurrent-units (GRU) can predict short-range to mid-range temporal behavior based on past training time in the form of time series.

Fortunately, there is a deluge of research activities on RNN with the goal of applying them to the field of natural language processing and text analytics. All the knowledge in this area of research can be leveraged to apply in the setting of an industrial application. For example, compute-optimized RNNs can be used for manufacturing jobs where the computational load is minimized without sacrificing the predictive power too much. It may not be best performing for an NLP task, but can be sufficiently powerful for predicting potential issues with a machine health parameters.

Of course, a human expert will review the predictions of a deep learning system to finally decide about the maintenance work. But in a smart, connected factory, using such prediction machines along with engineers and technicians, can save a manufacturing organization money and manpower ultimately improving downtime and machine utilization.

In fact, the adoption of machine learning and analytics in manufacturing will only improve predictive maintenance. Predictive maintenance is expected to increase by 38% in the next five years according to PwC. This article from Microsoft provides more information on the topic:

Deep learning for predictive maintenance with Long Short Term Memory Networks

Factory Input Optimization

A manufacturing organization’s profitability critically depends on optimizing the physical resources going into the production process as well as supporting those processes. For example, electrical power and water supply are two crucial factory inputs that can benefit from optimization.

Complex optimization processes and strategies are often employed for maximizing the utilization of these essential resources. As the factory size and the machine-to-machine interaction grows, the flow of these resources become intractably complex to manage with simple predictive algorithms. This is when powerful learning machines like neural nets need to be brought into the game.

Deep learning systems can track the pattern of electricity usage as a function of hundreds of plant process parameters and product design variables and can dynamically recommend best practices for the optimum utilization. If the organization is moving toward renewable energy adoption, predictions from deep learning algorithms can be used to chart out the optimum transition trajectory from fossil-fuel dependency to a sustainable energy footprint. This kind of paradigm change is difficult to handle using classical predictive analytics.

Summary

Information-system-enabled smart manufacturing has increased productivity and quality of industrial organizations, big and small, for quite a few decades now. In this smart manufacturing setting, usage of data analytics, statistical modeling, and predictive algorithms have increased by leaps and bounds, as the quality and propensity of machine-generated and human-generated data improved over time. The industrial revolution, which started with Henry Ford’s assembly line at the turn of the past century, was aided throughout the 20th century by innovations in automation, control systems, electronics, sensors, digital computing, and the internet. Big data revolution of the 21st century is poised to finally take it to a whole new level by unleashing exponential growth opportunities.

To take full advantage of this data explosion, deep learning, and associated AI-assisted techniques, must be integrated in the toolkit of modern manufacturing systems, as they are exponentially more powerful than classical statistical learning and prediction systems.

Deep learning is able to integrate seamlessly with the ambitious goals of Industry 4.0 - Extreme automation, and Digital Factory. Industry 4.0 is designed around the constant connection to information—sensors, drives, valves, all working together with a single common goal: minimizing downtime and increasing efficiency. Algorithmic frameworks like a deep neural network, which is flexible enough to work with a variety of data types as they stream in continuously, are the right choice for handling that particular type of task.

The resulting increase in productivity and quality is expected to go far beyond the narrow goal of satisfying corporate profitability. Tomorrow’s smart manufacturing will enrich the lives of billions of consumers by providing goods and services with high quality and at an affordable cost. Society, as a whole, should benefit from such paradigm-shifting transformation.

The future is bright and we look forward to it.