NVIDIA Quadro RTX 8000 Benchmarks

Updated 6/11/2019 with XLA FP32 and XLA FP16 metrics.

For this post, we ran deep learning performance benchmarks for TensorFlow on the new NVIDIA Quadro RTX 8000 GPUs. Our Exxact Valence Workstation was equipped with 4x Quadro RTX 8000s, giving the system 192 GB of total GPU memory (48 GB per GPU). We ran the standard tf_cnn_benchmarks.py benchmark script (found in the official TensorFlow GitHub) on the following networks: ResNet-50, ResNet-152, Inception v3, Inception v4, VGG-16, AlexNet, and NASNet. We compared FP16 to FP32 performance using 'typical' batch sizes (64 in most cases), then repeatedly doubled the batch size until we hit an out-of-memory error. All tests ran on 1, 2, and 4 GPU configurations.
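The sweep described above (doubling the batch size across 1, 2, and 4 GPUs) can be sketched as a small command generator. This is only an illustration, not the script we used: the helper name build_commands and the max_batch cutoff are hypothetical, and max_batch stands in for "keep doubling until out of memory". It uses only flags that appear in the commands later in this post.

```python
def build_commands(model, start_batch=64, max_batch=512,
                   gpu_counts=(1, 2, 4), fp16=False):
    """Return tf_cnn_benchmarks.py command lines for a batch-size/GPU sweep.

    max_batch is a hypothetical stand-in for "double until out of memory".
    """
    commands = []
    batch = start_batch
    while batch <= max_batch:
        for gpus in gpu_counts:
            cmd = (
                "python tf_cnn_benchmarks.py"
                f" --num_gpus={gpus} --batch_size={batch}"
                f" --model={model} --variable_update=parameter_server"
            )
            if fp16:
                # Omit this flag to run in FP32.
                cmd += " --use_fp16=True"
            commands.append(cmd)
        batch *= 2  # double the batch size for the next round
    return commands


if __name__ == "__main__":
    for line in build_commands("resnet50"):
        print(line)
```

In practice each generated command would be run in turn, and the sweep for a model would stop at the first batch size that fails with a memory error.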

Key Points and Observations

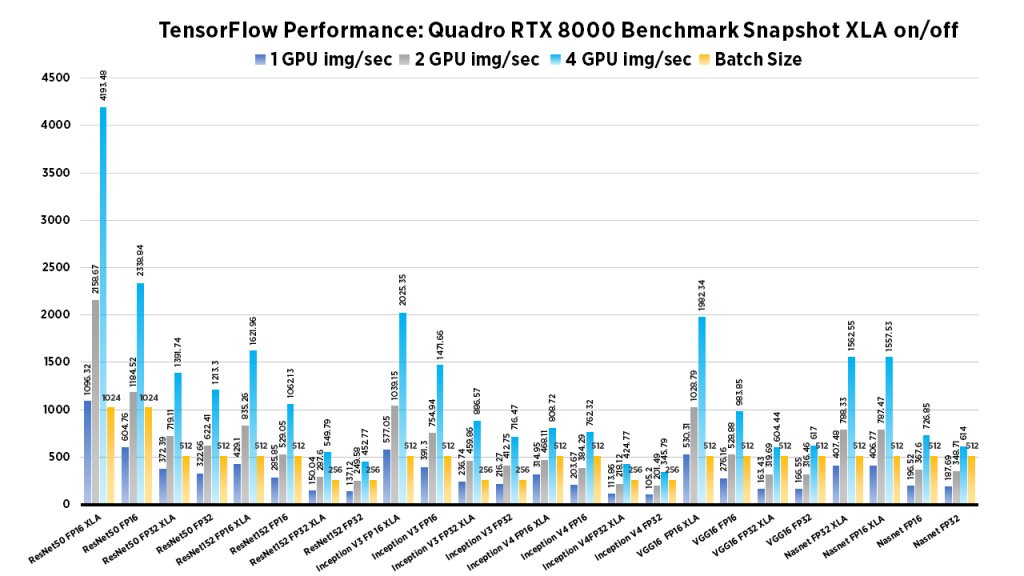

- In most scenarios, training with a large batch size delivered higher images/sec than training with a smaller batch size. This is especially true when scaling to the 4 GPU configuration.

- AlexNet and VGG16 performed better with a smaller batch size on a single GPU, but a larger batch size performed better on these models when scaling up to 4 GPUs.

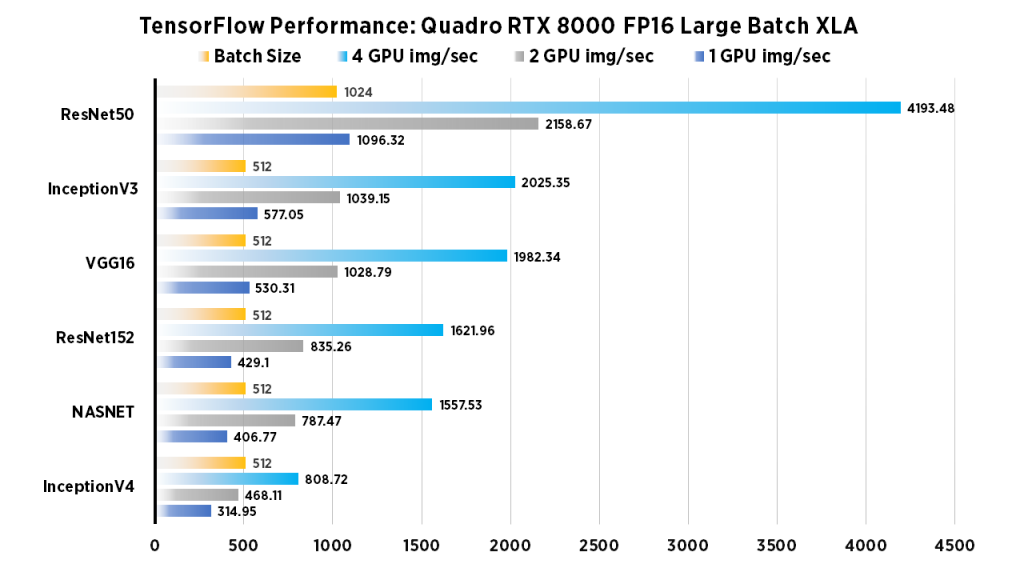

- ResNet-50 and ResNet-152 scaled strongly when going from 1 to 2 to 4 GPUs, reaching 4193.48 images/sec for ResNet-50 and 1621.96 images/sec for ResNet-152 on 4 GPUs with FP16 and XLA.

- Using FP16 showed substantial gains in images/sec across most models when using 4 GPUs (AlexNet being the exception).

- The Quadro RTX 8000 with 48 GB of memory is ideal for training networks that require large batch sizes that would otherwise be limited on lower-end GPUs.

- The Quadro RTX 8000 is an ideal choice for deep learning if you're restricted to a workstation or single-server form factor and want maximum GPU memory.

- Our workstations with Quadro RTX 8000 can also train state-of-the-art NLP Transformer networks that require a large batch size for best performance, a popular application in the fast-growing data science market.

- XLA significantly increases images/sec across most models; the most dramatic gains were seen in FP16.

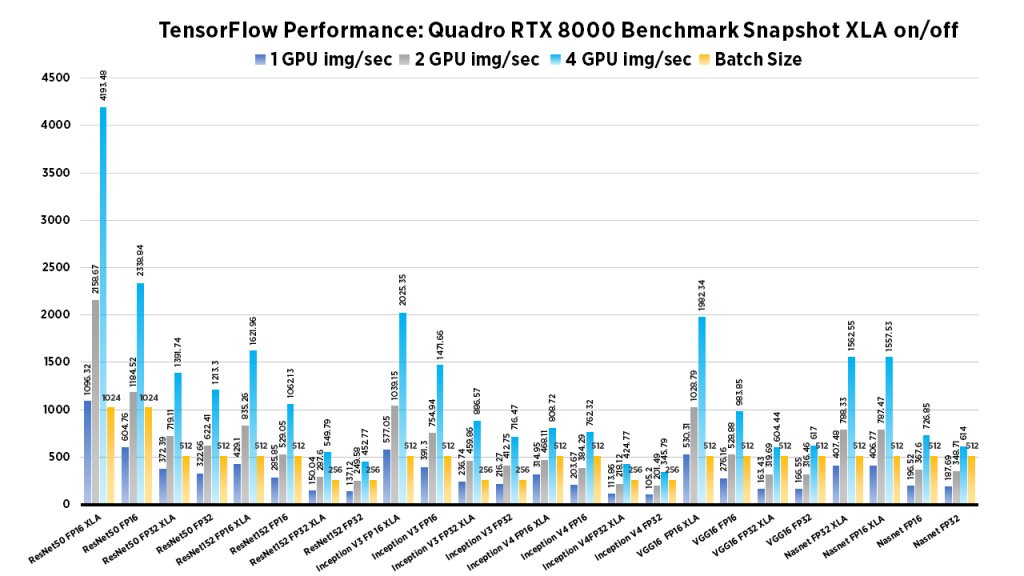

Quadro RTX 8000 Deep Learning Benchmark Snapshot (FP16, FP32, XLA on/off)

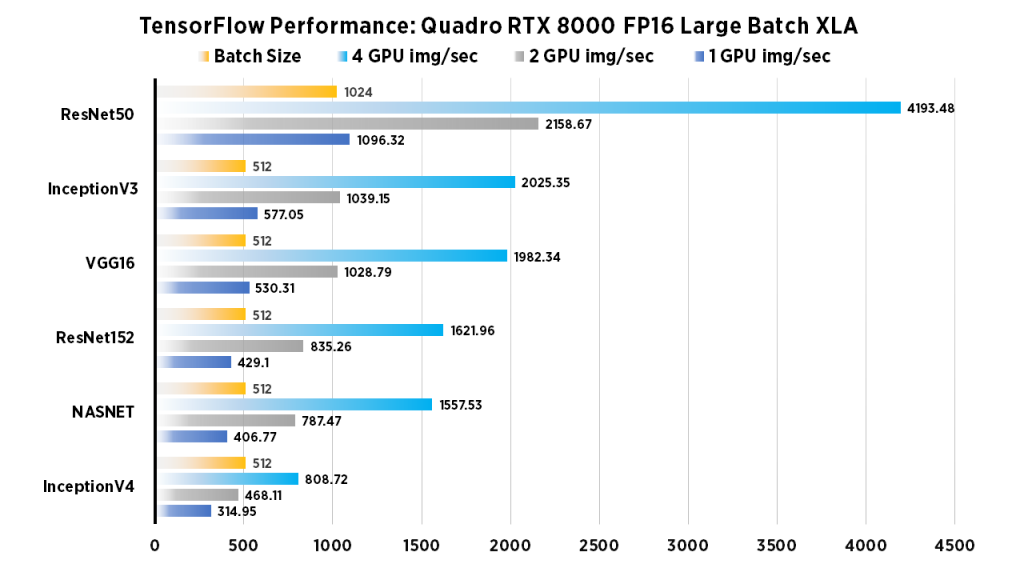

Quadro RTX 8000 Deep Learning Benchmarks: FP16, XLA

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| InceptionV4 | 314.95 | 468.11 | 808.72 | 512 |
| NASNET | 406.77 | 787.47 | 1557.53 | 512 |
| ResNet152 | 429.1 | 835.26 | 1621.96 | 512 |
| VGG16 | 530.31 | 1028.79 | 1982.34 | 512 |
| InceptionV3 | 577.05 | 1039.15 | 2025.35 | 512 |
| ResNet50 | 1096.32 | 2158.67 | 4193.48 | 1024 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, and change model to the desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=512 --num_batches=100 --model=inception4 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --use_fp16=True --nodistortions --gradient_repacking=2 --datasets_use_prefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10
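To put the multi-GPU scaling into numbers, the FP16, XLA results above can be turned into a scaling efficiency: the measured speedup over 1 GPU divided by the ideal linear speedup. The following is a minimal sketch using the throughput values from the table; the function name scaling_efficiency is our own choice for illustration.

```python
# Throughput in images/sec copied from the FP16, XLA table:
# (1 GPU, 2 GPU, 4 GPU).
FP16_XLA = {
    "InceptionV4": (314.95, 468.11, 808.72),
    "NASNET": (406.77, 787.47, 1557.53),
    "ResNet152": (429.1, 835.26, 1621.96),
    "VGG16": (530.31, 1028.79, 1982.34),
    "InceptionV3": (577.05, 1039.15, 2025.35),
    "ResNet50": (1096.32, 2158.67, 4193.48),
}


def scaling_efficiency(one_gpu, n_gpu, n):
    """Measured speedup over 1 GPU divided by the ideal linear speedup n."""
    return (n_gpu / one_gpu) / n


for model, (g1, g2, g4) in FP16_XLA.items():
    print(f"{model:12s} "
          f"2 GPU: {scaling_efficiency(g1, g2, 2):.1%}  "
          f"4 GPU: {scaling_efficiency(g1, g4, 4):.1%}")
```

By this measure ResNet-50 reaches roughly 96% of linear scaling on 4 GPUs, while InceptionV4 comes in at about 64%.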

Quadro RTX 8000 Deep Learning Benchmarks: FP32, XLA

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| InceptionV4 | 113.86 | 218.12 | 424.77 | 256 |
| ResNet152 | 150.04 | 287.6 | 549.79 | 256 |
| VGG16 | 163.43 | 319.69 | 604.44 | 512 |
| InceptionV3 | 236.74 | 459.86 | 886.57 | 256 |
| ResNet50 | 372.39 | 719.11 | 1391.74 | 512 |
| NASNET | 407.48 | 788.33 | 1562.55 | 512 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, and change model to the desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=256 --num_batches=100 --model=resnet50 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --nodistortions --gradient_repacking=2 --datasets_use_prefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

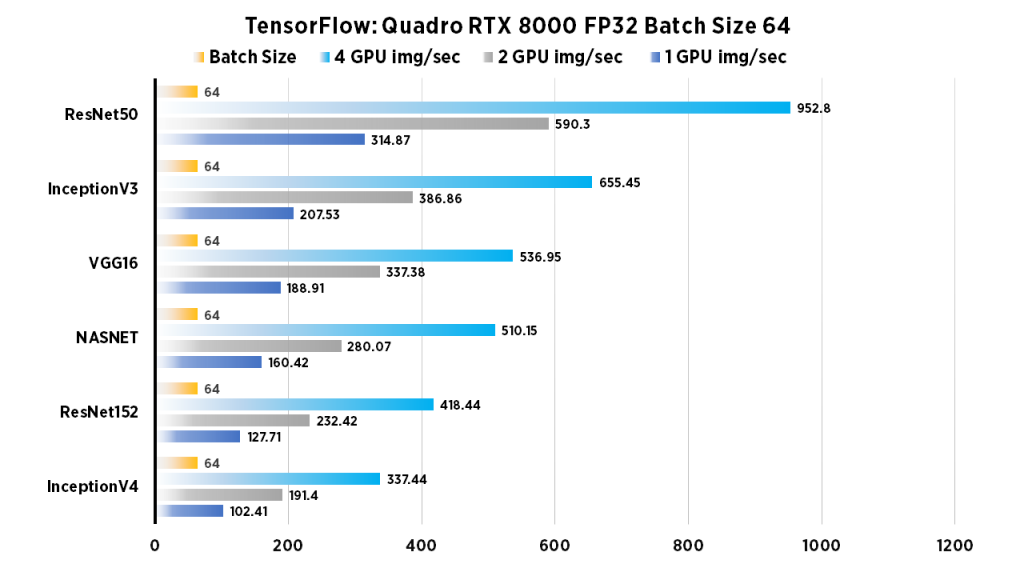

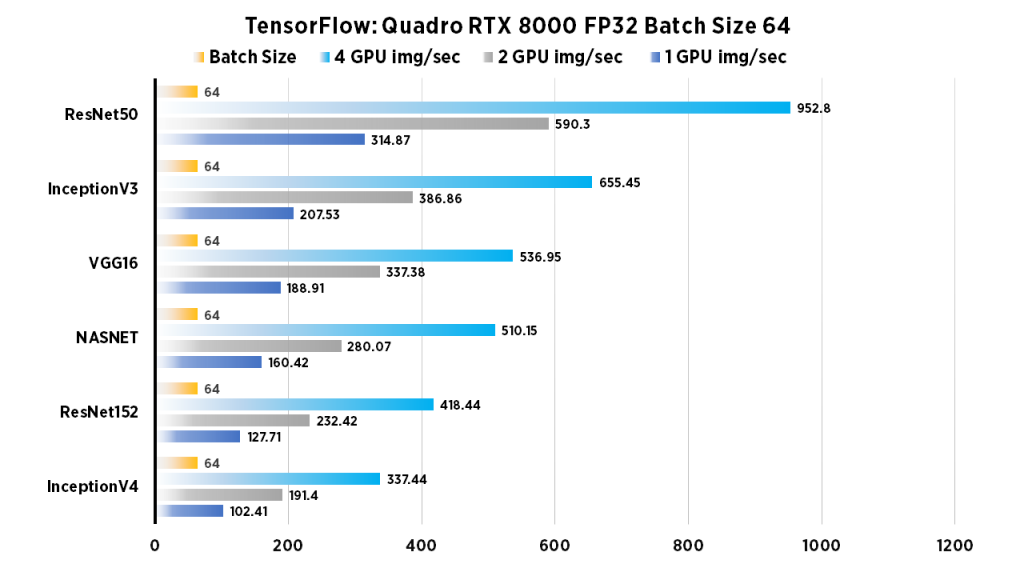

Quadro RTX 8000 Deep Learning Benchmarks: FP32, Batch Size 64

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| ResNet50 | 314.87 | 590.3 | 952.8 | 64 |
| ResNet152 | 127.71 | 232.42 | 418.44 | 64 |
| InceptionV3 | 207.53 | 386.86 | 655.45 | 64 |
| InceptionV4 | 102.41 | 191.4 | 337.44 | 64 |
| VGG16 | 188.91 | 337.38 | 536.95 | 64 |
| NASNET | 160.42 | 280.07 | 510.15 | 64 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, and change model to the desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=64 --model=resnet50 --variable_update=parameter_server

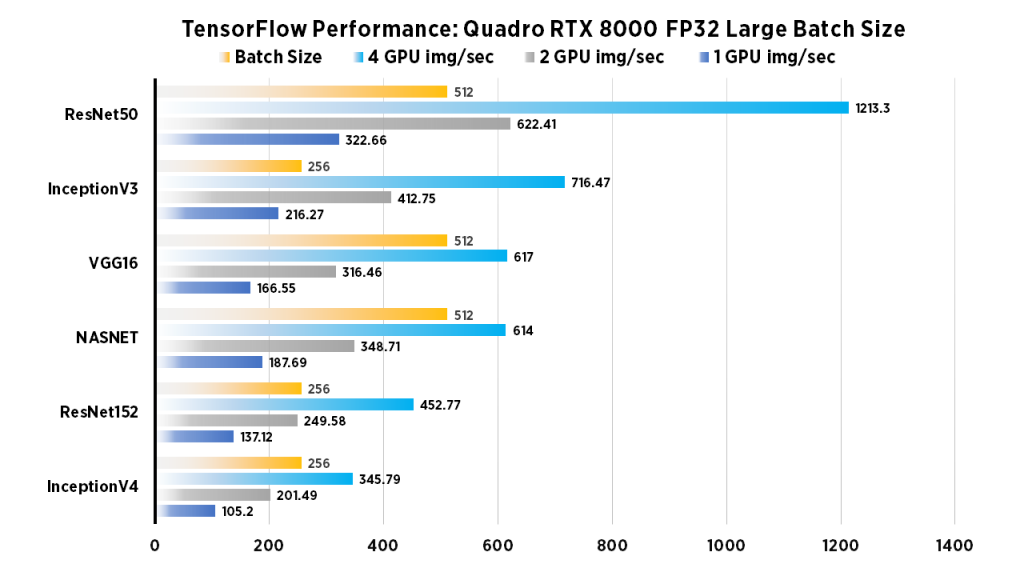

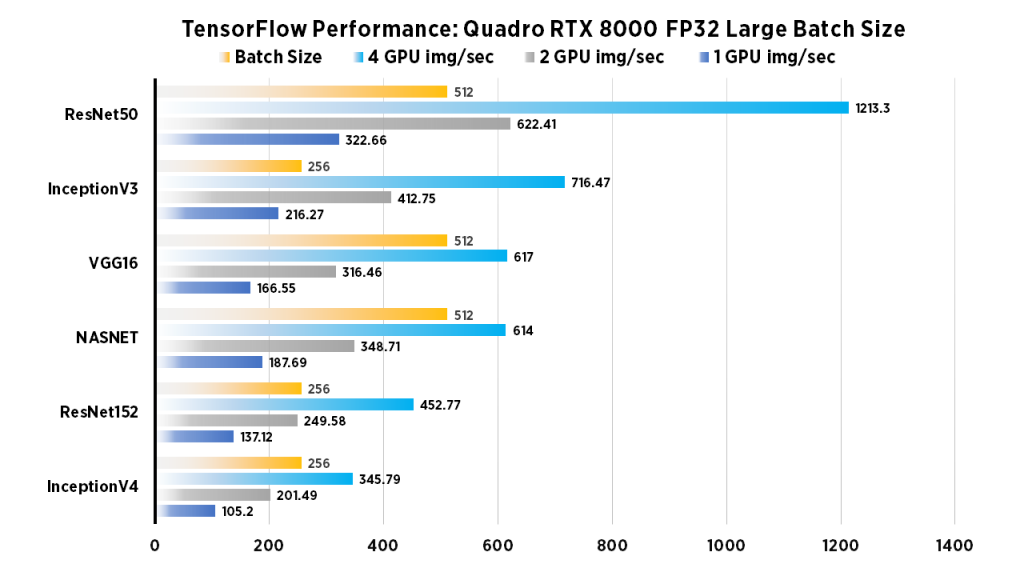

Quadro RTX 8000 Deep Learning Benchmarks: FP32, Large Batch Size

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| ResNet50 | 322.66 | 622.41 | 1213.3 | 512 |
| ResNet152 | 137.12 | 249.58 | 452.77 | 256 |
| InceptionV3 | 216.27 | 412.75 | 716.47 | 256 |
| InceptionV4 | 105.2 | 201.49 | 345.79 | 256 |
| VGG16 | 166.55 | 316.46 | 617 | 512 |
| NASNET | 187.69 | 348.71 | 614 | 512 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, change model to the desired architecture, and change batch_size to the desired mini-batch size.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=512 --model=resnet50 --variable_update=parameter_server
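The effect of batch size on FP32 throughput can be read off by dividing the large-batch numbers by the batch-size-64 numbers. Here is a minimal sketch using the 1 GPU and 4 GPU columns of the two FP32 tables above; the dictionary and function names are our own and purely illustrative.

```python
# FP32 throughput (images/sec) from the two FP32 tables: (1 GPU, 4 GPU).
FP32_BATCH_64 = {
    "ResNet50": (314.87, 952.8),
    "ResNet152": (127.71, 418.44),
    "InceptionV3": (207.53, 655.45),
    "InceptionV4": (102.41, 337.44),
    "VGG16": (188.91, 536.95),
    "NASNET": (160.42, 510.15),
}
FP32_LARGE_BATCH = {
    "ResNet50": (322.66, 1213.3),
    "ResNet152": (137.12, 452.77),
    "InceptionV3": (216.27, 716.47),
    "InceptionV4": (105.2, 345.79),
    "VGG16": (166.55, 617.0),
    "NASNET": (187.69, 614.0),
}


def batch_gain(model, gpu_index):
    """Large-batch throughput divided by batch-size-64 throughput.

    gpu_index 0 selects the 1 GPU column, 1 selects the 4 GPU column.
    """
    return FP32_LARGE_BATCH[model][gpu_index] / FP32_BATCH_64[model][gpu_index]


for model in FP32_BATCH_64:
    print(f"{model:12s} 1 GPU: {batch_gain(model, 0):.2f}x  "
          f"4 GPU: {batch_gain(model, 1):.2f}x")
```

A ratio below 1 means the larger batch was slower. VGG16 shows this on a single GPU but comes out ahead with the larger batch on 4 GPUs, which matches the observation in the key points.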

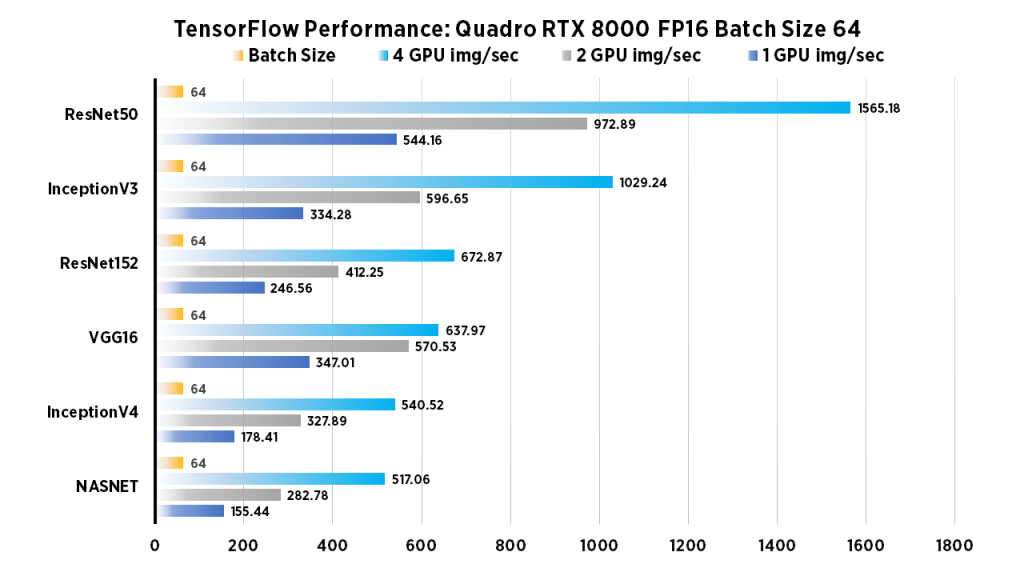

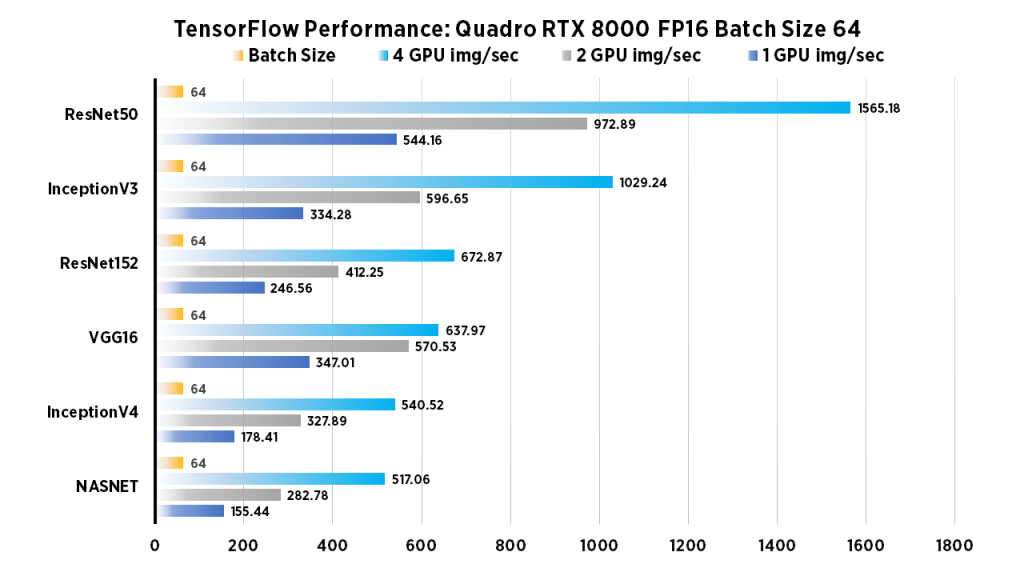

Quadro RTX 8000 Deep Learning Benchmarks: FP16, Batch Size 64

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| ResNet50 | 544.16 | 972.89 | 1565.18 | 64 |
| ResNet152 | 246.56 | 412.25 | 672.87 | 64 |
| InceptionV3 | 334.28 | 596.65 | 1029.24 | 64 |
| InceptionV4 | 178.41 | 327.89 | 540.52 | 64 |
| VGG16 | 347.01 | 570.53 | 637.97 | 64 |
| NASNET | 155.44 | 282.78 | 517.06 | 64 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, and change model to the desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=64 --model=resnet50 --variable_update=parameter_server --use_fp16=True

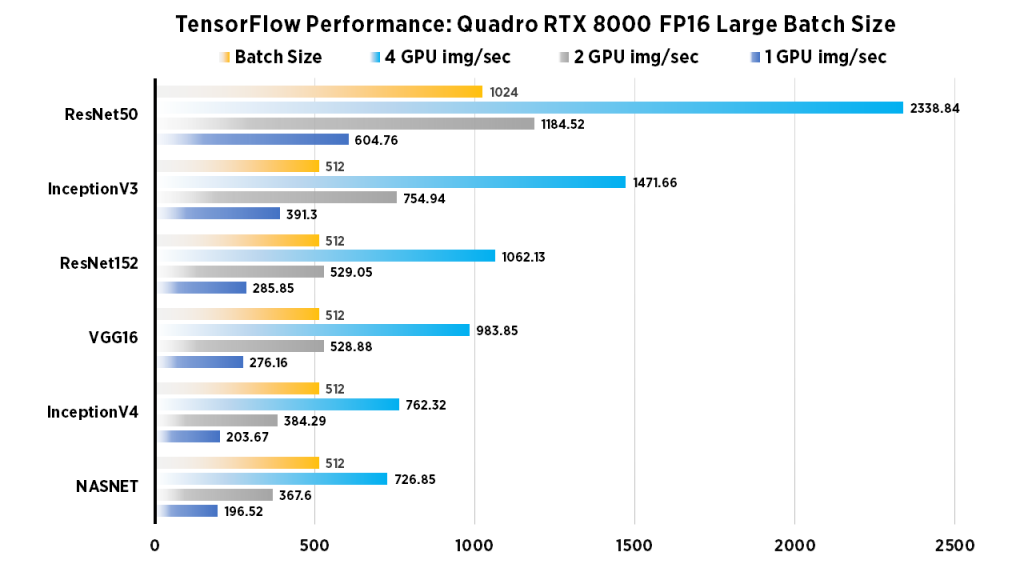

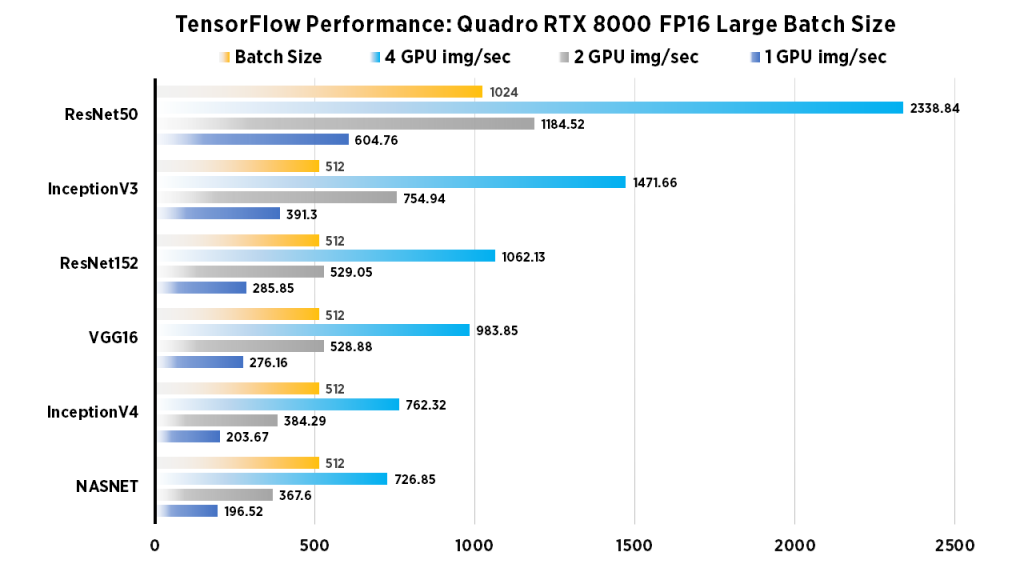

Quadro RTX 8000 Deep Learning Benchmarks: FP16, Large Batch Size

| Model | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| ResNet50 | 604.76 | 1184.52 | 2338.84 | 1024 |
| ResNet152 | 285.85 | 529.05 | 1062.13 | 512 |
| InceptionV3 | 391.3 | 754.94 | 1471.66 | 512 |
| InceptionV4 | 203.67 | 384.29 | 762.32 | 512 |
| VGG16 | 276.16 | 528.88 | 983.85 | 512 |
| NASNET | 196.52 | 367.6 | 726.85 | 512 |

Run these benchmarks

Set num_gpus to the number of GPUs you want to test, change model to the desired architecture, and change batch_size to the desired mini-batch size.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=1024 --model=resnet50 --variable_update=parameter_server --use_fp16=True
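Since the XLA runs and the large-batch runs use the same batch sizes per model, their 4 GPU results can be compared directly. The sketch below computes the ratio of XLA to non-XLA throughput for FP16 and FP32. Note that the XLA runs also used a different optimizer and variable update strategy (momentum, replicated, NCCL all-reduce), so the ratio reflects the whole XLA configuration rather than XLA alone. The names used here are illustrative.

```python
# 4 GPU throughput (images/sec) taken from the tables in this post.
# Each entry is (without XLA, large batch; with XLA), same batch size.
FP16_4GPU = {
    "ResNet50": (2338.84, 4193.48),
    "ResNet152": (1062.13, 1621.96),
    "InceptionV3": (1471.66, 2025.35),
    "InceptionV4": (762.32, 808.72),
    "VGG16": (983.85, 1982.34),
    "NASNET": (726.85, 1557.53),
}
FP32_4GPU = {
    "ResNet50": (1213.3, 1391.74),
    "ResNet152": (452.77, 549.79),
    "InceptionV3": (716.47, 886.57),
    "InceptionV4": (345.79, 424.77),
    "VGG16": (617.0, 604.44),
    "NASNET": (614.0, 1562.55),
}


def xla_gain(results, model):
    """Throughput with the XLA configuration divided by throughput without."""
    baseline, with_xla = results[model]
    return with_xla / baseline


for model in FP16_4GPU:
    print(f"{model:12s} FP16: {xla_gain(FP16_4GPU, model):.2f}x  "
          f"FP32: {xla_gain(FP32_4GPU, model):.2f}x")
```

For ResNet-50, for example, this gives about 1.79x in FP16 versus about 1.15x in FP32.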

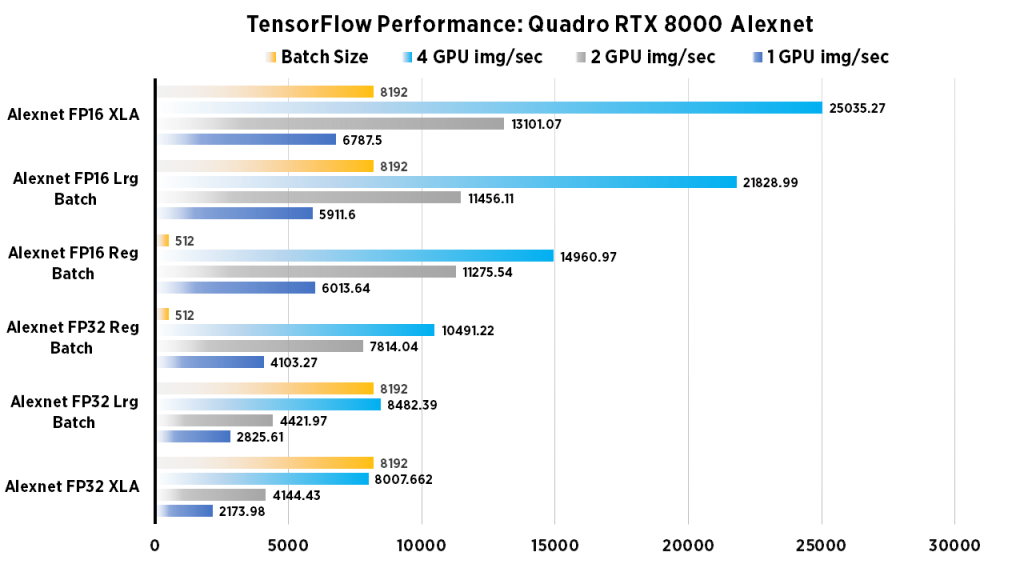

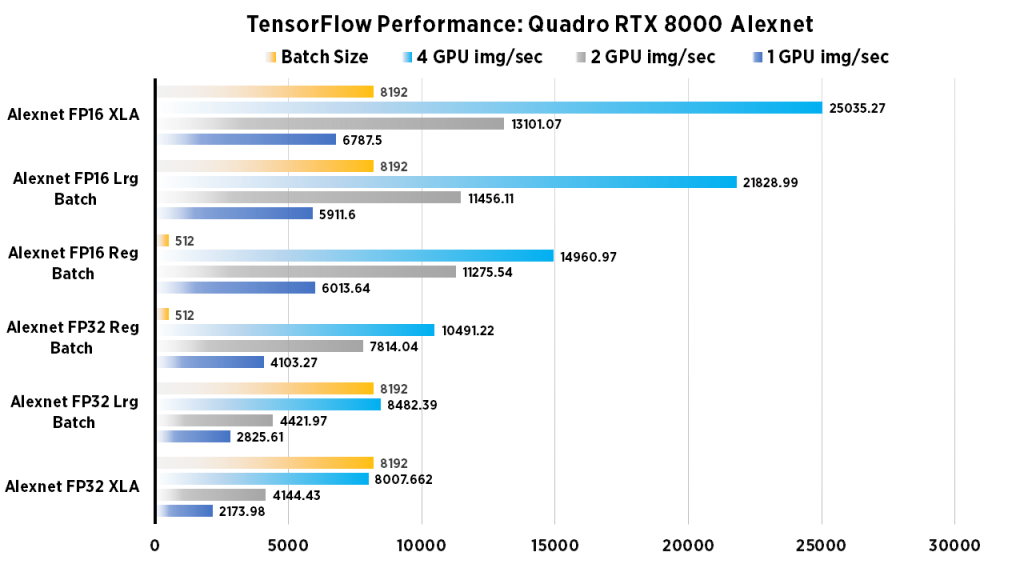

Quadro RTX 8000 Deep Learning Benchmarks: AlexNet (FP32, FP16, XLA on, off)

| Configuration | 1 GPU img/sec | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- | --- |
| AlexNet FP16 (Large Batch) | 5911.6 | 11456.11 | 21828.99 | 8192 |
| AlexNet FP16 (Regular Batch) | 6013.64 | 11275.54 | 14960.97 | 512 |
| AlexNet FP32 (Large Batch) | 2825.61 | 4421.97 | 8482.39 | 8192 |
| AlexNet FP32 (Regular Batch) | 4103.27 | 7814.04 | 10491.22 | 512 |
| AlexNet FP16 XLA | 6787.5 | 13101.07 | 25035.27 | 8192 |
| AlexNet FP32 XLA | 2173.97 | 4144.43 | 8007.66 | 8192 |

Run these deep learning benchmarks

Set num_gpus to the number of GPUs you want to test, and omit the use_fp16 flag to run in FP32. Change batch_size to the desired mini-batch size.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=8192 --model=alexnet --variable_update=parameter_server --use_fp16=True

System Specifications

| Component | Specification |
| --- | --- |
| System | Exxact Valence Workstation |
| GPU | 4 x NVIDIA Quadro RTX 8000 |
| CPU | Intel Core i7-7820X 3.6 GHz |
| RAM | 32 GB DDR4 |
| SSD | 480 GB SSD |
| HDD (data) | 10 TB HDD |
| OS | Ubuntu 18.04 |
| NVIDIA Driver | 410.79 |
| CUDA Version | 10 |
| Python | 2.7 |
| TensorFlow | 1.14 |
| Docker Image | tensorflow/tensorflow:nightly-gpu |

Training Parameters (non XLA)

| Parameter | Value |
| --- | --- |
| Dataset | Imagenet (synthetic) |
| Mode | training |
| SingleSess | False |
| Batch Size | Varied |
| Num Batches | 100 |
| Num Epochs | 0.08 |
| Devices | ['/gpu:0']...(varied) |
| NUMA bind | False |
| Data format | NCHW |
| Optimizer | sgd |
| Variables | parameter_server |

Training Parameters (XLA)

| Parameter | Value |
| --- | --- |
| Dataset | Imagenet (synthetic) |
| Mode | training |
| SingleSess | False |
| Batch Size | Varied |
| Num Batches | 100 |
| Num Epochs | 0.08 |
| Devices | ['/gpu:0']...(varied) |
| NUMA bind | False |
| Data format | NCHW |
| Optimizer | momentum |
| Variables | replicated |
| AllReduce | nccl |
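The small Num Epochs value follows from how short each run is: 100 batches cover only a fraction of an ImageNet-sized dataset. The sketch below shows the arithmetic under two assumptions that are ours rather than stated in the parameters above: that the synthetic dataset is sized like the ILSVRC-2012 training set (1,281,167 images), and that batch_size applies per GPU. The function name epochs_covered is hypothetical.

```python
# Assumption: synthetic data sized like the ILSVRC-2012 training set.
IMAGENET_TRAIN_IMAGES = 1_281_167


def epochs_covered(num_batches, per_gpu_batch, num_gpus=1,
                   dataset_size=IMAGENET_TRAIN_IMAGES):
    """Fraction of one pass over the dataset seen during a benchmark run.

    Assumes the batch size is per GPU, so the images processed per step
    are per_gpu_batch * num_gpus.
    """
    return num_batches * per_gpu_batch * num_gpus / dataset_size


if __name__ == "__main__":
    # 100 batches of 1024 images on 1 GPU.
    print(round(epochs_covered(100, 1024), 2))
    # 100 batches of 64 images on 1 GPU.
    print(round(epochs_covered(100, 64), 3))
```

Under these assumptions, 100 batches of 1024 images come to about 0.08 epochs, in line with the value reported above, while smaller batch sizes cover proportionally less. Because Batch Size is listed as Varied, the reported 0.08 corresponds to only one of the configurations.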

More Deep Learning Benchmarks

- NVIDIA RTX 2080 Ti Deep Learning Benchmarks for TensorFlow: Updated with XLA & FP16

- NVIDIA Quadro RTX 6000 GPU Benchmarks for TensorFlow

- RTX 2080 Ti Deep Learning Performance Benchmarks for TensorFlow

- TITAN RTX Deep Learning Benchmarks 2019

That's it for now! Have any questions? Let us know on social media.

https://www.facebook.com/exxactcorp/

https://twitter.com/Exxactcorp
