Fine Tuning BERT Large on a GPU Workstation

For this post, we measured fine-tuning performance (training and inference) for the BERT implementation of TensorFlow on NVIDIA Quadro RTX 6000 GPUs. For testing we used an Exxact Valence Workstation was fitted with 4x Quadro RTX 6000 GPUs with NVLink, giving us 96 GB of GPU memory for our system.

Benchmark scripts we used for evaluation was the finetune_train_benchmark.sh and finetune_inference_benchmark.sh from NVIDIA NGC Repository BERT for TensorFlow. We made slight modifications to the training benchmark script to get the larger batch size numbers.

The script runs multiple tests on the SQuAD v1.1 dataset using batch sizes 1, 2, 4, 8, 16, and 32, for training, and 1, 2, 4, and 8 for inference and conducted tests using 1, 2, and 4 GPU configurations on BERT Large (We used 1 GPU for inference benchmark). In addition ran all benchmarks using TensorFlow's XLA on across the board. Other training settings can be viewed at the end of this blog in the Appendix section.

Key Points and Observations

- Performance wise, the RTX 6000 Performs well, and in some cases better than the RTX 8000, however resources max out when batch size 32 is reached at sequence size 128.

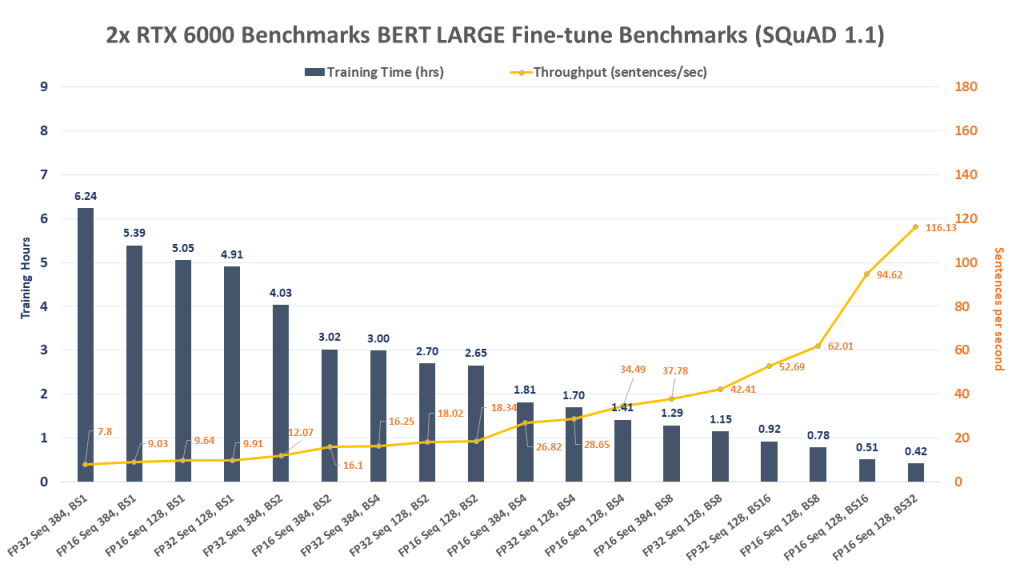

- In terms of Throughput, the 2x and 4x configs really started to shine when the batch size reached 8 and above. The 4x configuration began to break away around batch size 16.

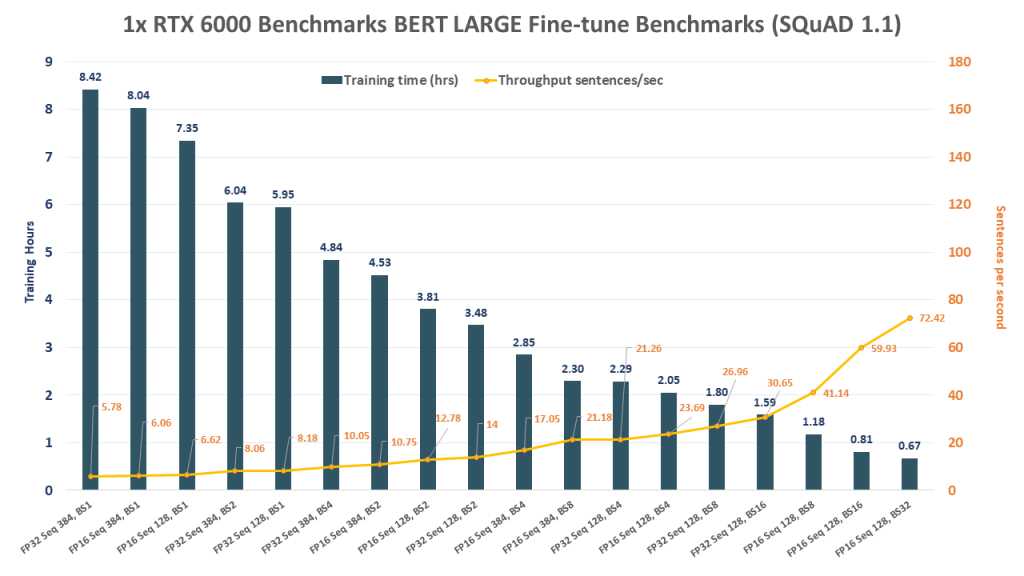

- For those interested in training BERT Large, a 2x Quadro RTX 6000 system is a great choice, with the opportunity to add additional cards later as budget/scaling needs increase. Even a Deep Learning Workstation with a single RTX 6000 can fine tune BERT Large in about 40 minutes!

- NOTE: In order to run these benchmarks, or be able to fine-tune BERT Large with 4x GPUs you'll need a system with at least 64GB RAM.

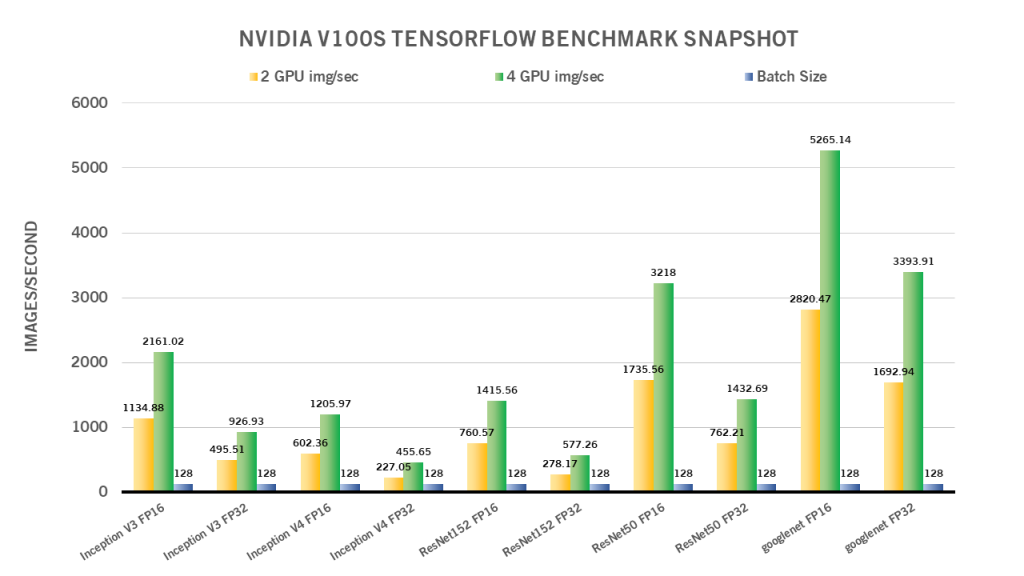

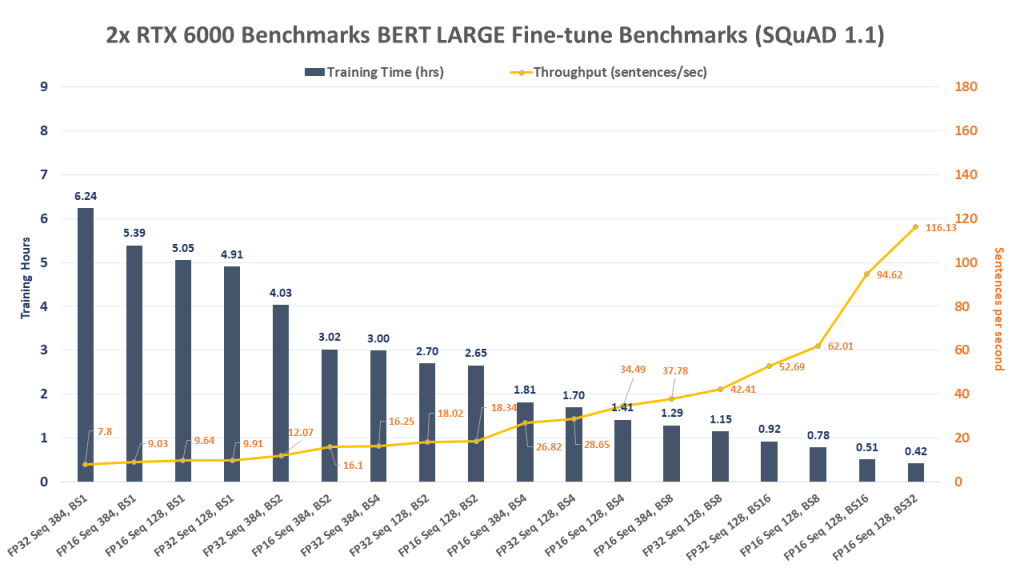

Quadro RTX 6000 BERT Large Fine tuning GPU Benchmark Snapshot

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

4x Quadro RTX 6000 BERT Large GPU Fine-tune Benchmark

fp="Floating" point="" codecision,="" seq="Sequence" length,="" bs="Batch" size<="" strong><="" h3>=""

[supsystic-tables="" id="60]

"run="" these="" benchmarks<="" p>="" <="" <="" strong><="" p>=""

assuming="" you're="" using="" the="" ngc="" bert="" for="" tensorflow="" container,="" run="" following="" command.<=""

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

[supsystic-tables id=61]

Run these benchmarks

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_train_benchmark.sh large true 2 squad

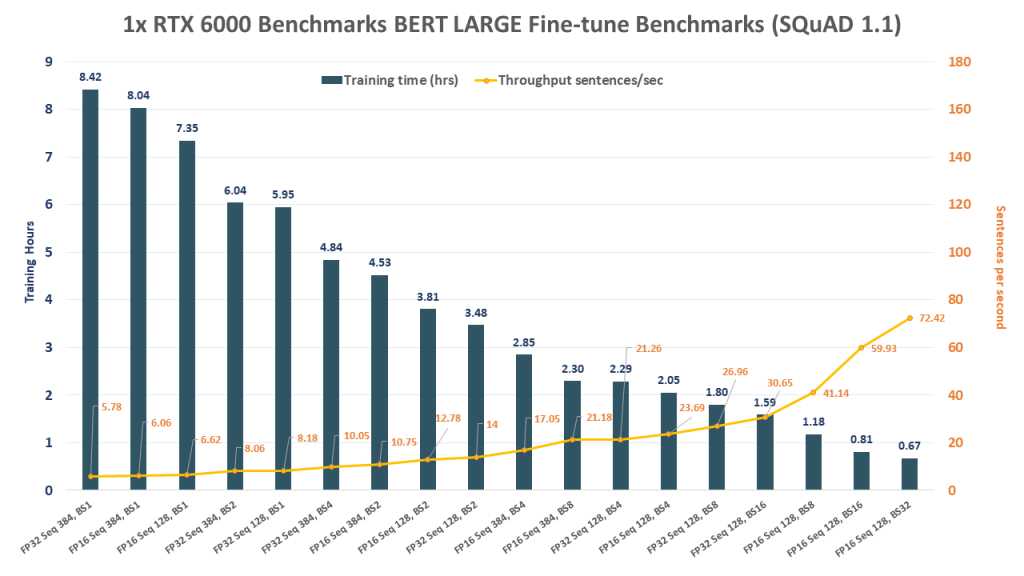

1x Quadro RTX 6000 BERT LARGE GPU Fine-tune Benchmark

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

[supsystic-tables id=62]

Run these benchmarks

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_train_benchmark.sh large true 1 squad1x Quadro RTX 6000 BERT LARGE GPU Inference Benchmark

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch SizeRun these benchmarks

| Settings | Total Inference Time | num of sentences | Latency Confidence Level 50ms | Latency Confidence Level 90 ms | Latency Confidence Level 95 ms | Latency Confidence Level 99 ms | Latency Confidence Level 100 ms | Latency Avg | Throughput (sentences/sec) |

|---|---|---|---|---|---|---|---|---|---|

| FP16 Seq 384, BS1 | 17.43 | 1042 | 16.92 | 17.35 | 17.57 | 18.25 | 20.41 | 16.73 | 59.78 |

| FP16 Seq 384, BS2 | 24.23 | 2068 | 23.13 | 23.67 | 23.81 | 24.51 | 529.93 | 23.43 | 85.36 |

| FP16 Seq 128, BS2 | 24.69 | 3402 | 15.09 | 16.21 | 16.35 | 17.29 | 25.46 | 14.52 | 137.76 |

| FP16 Seq 128, BS1 | 26.4 | 1800 | 15.55 | 16.66 | 16.86 | 17.59 | 25.35 | 14.67 | 68.19 |

| FP16 Seq 128, BS4 | 29.18 | 7128 | 16.12 | 16.68 | 17.02 | 17.7 | 556.13 | 16.37 | 244.31 |

| FP32 Seq 128, BS1 | 34.04 | 1800 | 20.79 | 22.08 | 22.47 | 22.97 | 26.48 | 18.91 | 52.88 |

| FP32 Seq 128, BS2 | 38.72 | 3402 | 22.92 | 23.79 | 23.98 | 24.58 | 27.02 | 22.76 | 87.87 |

| FP16 Seq 384, BS4 | 40.08 | 4128 | 38.55 | 39.09 | 39.32 | 40.93 | 530.08 | 38.84 | 102.99 |

| FP32 Seq 384, BS1 | 41.99 | 1042 | 42.3 | 43.79 | 43.93 | 44.19 | 45.8 | 40.3 | 24.81 |

| FP16 Seq 128, BS8 | 66.94 | 16040 | 23.06 | 23.75 | 24.01 | 24.61 | 543.94 | 23.16 | 345.5 |

| FP32 Seq 384, BS2 | 74.73 | 2068 | 73.25 | 74.51 | 74.71 | 75.09 | 89.2 | 72.27 | 27.67 |

| FP16 Seq 128, BS8 | 76.66 | 8432 | 72.3 | 74.22 | 74.63 | 75.63 | 628.88 | 72.73 | 109.99 |

| FP32 Seq 128, BS4 | 83.22 | 7128 | 47.45 | 48.84 | 48.99 | 49.25 | 207.56 | 46.7 | 86.66 |

| FP32 Seq 384, BS4 | 160.17 | 4128 | 158.24 | 160.38 | 160.38 | 160.82 | 161.59 | 176.45 | 25.77 |

| FP32 Seq 128, BS8 | 171.83 | 16040 | 86.26 | 87.56 | 87.72 | 88.32 | 97.13 | 85.7 | 93.35 |

| FP32 Seq 128, BS8 | 300.44 | 8432 | 287.05 | 288.46 | 288.78 | 290.09 | 493.14 | 285.05 | 28.07 |

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_inference_benchmark.sh large squadSystem Specifications:

| System | Exxact Valence Workstation |

| GPU | 4 x NVIDIA Quadro RTX 6000 |

| CPU | Intel CORE I7-7820X 3.6GHZ |

| RAM | 64GB DDR4 |

| SSD | 480 GB SSD |

| HDD (data) | 10 TB HDD |

| OS | Ubuntu 18.04 |

| NVIDIA DRIVER | 435.21 |

| CUDA Version | 10.1 |

| Python | 2.7/3.6 |

| TensorFlow | 1.14 |

| Container (using NVIDIA Docker) | TensorFlow 19.08-py3+ NGC container |

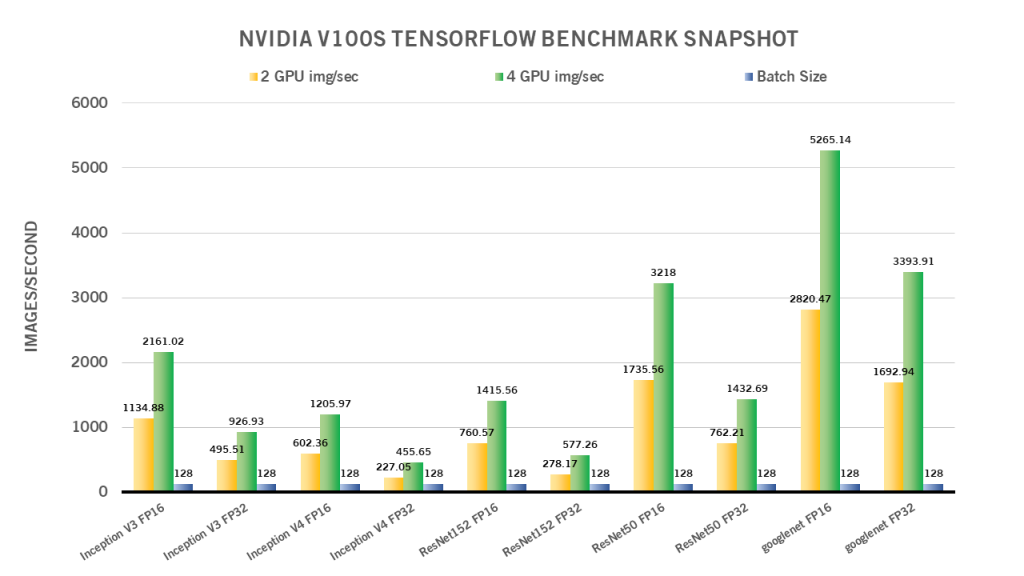

Additional GPU Benchmarks

- NVIDIA Quadro RTX 8000 BERT Large GPU Fine Tuning Benchmarks in TensorFlow (NLP)

- RTX 2080 Ti Deep Learning Benchmarks for TensorFlow (image classification)

- TITAN RTX Deep Learning Benchmarks for Tensorflow (image classification)

- NVIDIA Quadro RTX 6000 GPU Benchmarks for TensorFlow (image classification)

- Quadro RTX 8000 Deep Learning Benchmarks for TensorFlow (image classification)

Appendix/Additional settings

NOTE: these will change with each run depending on batch size, sequence length, etc.

***** Configuaration *****

I1212 17:24:48.136919 139750589261632 run_squad.py:950] logtostderr: False

I1212 17:24:48.136960 139750589261632 run_squad.py:950] alsologtostderr: False

I1212 17:24:48.137000 139750589261632 run_squad.py:950] log_dir:

I1212 17:24:48.137040 139750589261632 run_squad.py:950] v: 0

I1212 17:24:48.137079 139750589261632 run_squad.py:950] verbosity: 0

I1212 17:24:48.137117 139750589261632 run_squad.py:950] stderrthreshold: fatal

I1212 17:24:48.137156 139750589261632 run_squad.py:950] showcodefixforinfo: True

I1212 17:24:48.137195 139750589261632 run_squad.py:950] run_with_pdb: False

I1212 17:24:48.137233 139750589261632 run_squad.py:950] pdb_post_mortem: False

I1212 17:24:48.137271 139750589261632 run_squad.py:950] run_with_profiling: False

I1212 17:24:48.137310 139750589261632 run_squad.py:950] profile_file: None

I1212 17:24:48.137349 139750589261632 run_squad.py:950] use_cprofile_for_profiling: True

I1212 17:24:48.137388 139750589261632 run_squad.py:950] only_check_args: False

I1212 17:24:48.137426 139750589261632 run_squad.py:950] op_conversion_fallback_to_while_loop: False

I1212 17:24:48.137465 139750589261632 run_squad.py:950] test_random_seed: 301

I1212 17:24:48.137504 139750589261632 run_squad.py:950] test_srcdir:

I1212 17:24:48.137542 139750589261632 run_squad.py:950] test_tmpdir: /tmp/absl_testing

I1212 17:24:48.137581 139750589261632 run_squad.py:950] test_randomize_ordering_seed: None

I1212 17:24:48.137620 139750589261632 run_squad.py:950] xml_output_file:

I1212 17:24:48.137658 139750589261632 run_squad.py:950] bert_config_file: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/bert_config.json

I1212 17:24:48.137696 139750589261632 run_squad.py:950] vocab_file: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/vocab.txt

I1212 17:24:48.137734 139750589261632 run_squad.py:950] output_dir: /results/bert_large_gpu_1_sl_128_codec_fp16_bs_1

I1212 17:24:48.137772 139750589261632 run_squad.py:950] train_file: data/download/squad/v1.1/train-v1.1.json

I1212 17:24:48.137810 139750589261632 run_squad.py:950] codedict_file: None

I1212 17:24:48.137849 139750589261632 run_squad.py:950] init_checkpoint: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/bert_model.ckpt

I1212 17:24:48.137887 139750589261632 run_squad.py:950] do_lower_case: True

I1212 17:24:48.137926 139750589261632 run_squad.py:950] max_seq_length: 128

I1212 17:24:48.137964 139750589261632 run_squad.py:950] doc_stride: 64

I1212 17:24:48.138002 139750589261632 run_squad.py:950] max_query_length: 64

I1212 17:24:48.138040 139750589261632 run_squad.py:950] do_train: True

I1212 17:24:48.138079 139750589261632 run_squad.py:950] do_codedict: False

I1212 17:24:48.138117 139750589261632 run_squad.py:950] train_batch_size: 1

I1212 17:24:48.138156 139750589261632 run_squad.py:950] codedict_batch_size: 8

I1212 17:24:48.138199 139750589261632 run_squad.py:950] learning_rate: 5e-06

I1212 17:24:48.138237 139750589261632 run_squad.py:950] use_trt: False

I1212 17:24:48.138276 139750589261632 run_squad.py:950] horovod: False

I1212 17:24:48.138315 139750589261632 run_squad.py:950] num_train_epochs: 2.0

I1212 17:24:48.138354 139750589261632 run_squad.py:950] warmup_proportion: 0.1

I1212 17:24:48.138392 139750589261632 run_squad.py:950] save_checkpoints_steps: 1000

I1212 17:24:48.138430 139750589261632 run_squad.py:950] iterations_per_loop: 1000

I1212 17:24:48.138469 139750589261632 run_squad.py:950] num_accumulation_steps: 1

I1212 17:24:48.138506 139750589261632 run_squad.py:950] n_best_size: 20

I1212 17:24:48.138545 139750589261632 run_squad.py:950] max_answer_length: 30

I1212 17:24:48.138583 139750589261632 run_squad.py:950] verbose_logging: False

I1212 17:24:48.138622 139750589261632 run_squad.py:950] version_2_with_negative: False

I1212 17:24:48.138660 139750589261632 run_squad.py:950] null_score_diff_threshold: 0.0

I1212 17:24:48.138699 139750589261632 run_squad.py:950] use_fp16: True

I1212 17:24:48.138737 139750589261632 run_squad.py:950] use_xla: True

I1212 17:24:48.138775 139750589261632 run_squad.py:950] num_eval_iterations: None

I1212 17:24:48.138813 139750589261632 run_squad.py:950] export_trtis: False

I1212 17:24:48.138851 139750589261632 run_squad.py:950] trtis_model_name: bert

I1212 17:24:48.138890 139750589261632 run_squad.py:950] trtis_model_version: 1

I1212 17:24:48.138928 139750589261632 run_squad.py:950] trtis_server_url: localhost:8001

I1212 17:24:48.138966 139750589261632 run_squad.py:950] trtis_model_overwrite: False

I1212 17:24:48.139004 139750589261632 run_squad.py:950] trtis_max_batch_size: 8

I1212 17:24:48.139043 139750589261632 run_squad.py:950] trtis_dyn_batching_delay: 0.0

I1212 17:24:48.139081 139750589261632 run_squad.py:950] trtis_engine_count: 1

I1212 17:24:48.139120 139750589261632 run_squad.py:950] ?: False

I1212 17:24:48.139158 139750589261632 run_squad.py:950] help: False

I1212 17:24:48.139196 139750589261632 run_squad.py:950] helpshort: False

I1212 17:24:48.139235 139750589261632 run_squad.py:950] helpfull: False

I1212 17:24:48.139273 139750589261632 run_squad.py:950] helpxml: False

I1212 17:24:48.139307 139750589261632 run_squad.py:951] **************************

xtagstartz/h3xtagstartz/h3xtagstartz/h3

NVIDIA Quadro RTX 6000 BERT Large Fine-tune Benchmarks with SQuAD Dataset

Fine Tuning BERT Large on a GPU Workstation

For this post, we measured fine-tuning performance (training and inference) for the BERT implementation of TensorFlow on NVIDIA Quadro RTX 6000 GPUs. For testing we used an Exxact Valence Workstation was fitted with 4x Quadro RTX 6000 GPUs with NVLink, giving us 96 GB of GPU memory for our system.

Benchmark scripts we used for evaluation was the finetune_train_benchmark.sh and finetune_inference_benchmark.sh from NVIDIA NGC Repository BERT for TensorFlow. We made slight modifications to the training benchmark script to get the larger batch size numbers.

The script runs multiple tests on the SQuAD v1.1 dataset using batch sizes 1, 2, 4, 8, 16, and 32, for training, and 1, 2, 4, and 8 for inference and conducted tests using 1, 2, and 4 GPU configurations on BERT Large (We used 1 GPU for inference benchmark). In addition ran all benchmarks using TensorFlow's XLA on across the board. Other training settings can be viewed at the end of this blog in the Appendix section.

Key Points and Observations

- Performance wise, the RTX 6000 Performs well, and in some cases better than the RTX 8000, however resources max out when batch size 32 is reached at sequence size 128.

- In terms of Throughput, the 2x and 4x configs really started to shine when the batch size reached 8 and above. The 4x configuration began to break away around batch size 16.

- For those interested in training BERT Large, a 2x Quadro RTX 6000 system is a great choice, with the opportunity to add additional cards later as budget/scaling needs increase. Even a Deep Learning Workstation with a single RTX 6000 can fine tune BERT Large in about 40 minutes!

- NOTE: In order to run these benchmarks, or be able to fine-tune BERT Large with 4x GPUs you'll need a system with at least 64GB RAM.

Quadro RTX 6000 BERT Large Fine tuning GPU Benchmark Snapshot

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

4x Quadro RTX 6000 BERT Large GPU Fine-tune Benchmark

fp="Floating" point="" codecision,="" seq="Sequence" length,="" bs="Batch" size<="" strong><="" h3>=""

[supsystic-tables="" id="60]

"run="" these="" benchmarks<="" p>="" <="" <="" strong><="" p>=""

assuming="" you're="" using="" the="" ngc="" bert="" for="" tensorflow="" container,="" run="" following="" command.<=""

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

[supsystic-tables id=61]

Run these benchmarks

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_train_benchmark.sh large true 2 squad

1x Quadro RTX 6000 BERT LARGE GPU Fine-tune Benchmark

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch Size

[supsystic-tables id=62]

Run these benchmarks

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_train_benchmark.sh large true 1 squad1x Quadro RTX 6000 BERT LARGE GPU Inference Benchmark

FP = Floating Point Codecision, Seq = Sequence Length, BS = Batch SizeRun these benchmarks

| Settings | Total Inference Time | num of sentences | Latency Confidence Level 50ms | Latency Confidence Level 90 ms | Latency Confidence Level 95 ms | Latency Confidence Level 99 ms | Latency Confidence Level 100 ms | Latency Avg | Throughput (sentences/sec) |

|---|---|---|---|---|---|---|---|---|---|

| FP16 Seq 384, BS1 | 17.43 | 1042 | 16.92 | 17.35 | 17.57 | 18.25 | 20.41 | 16.73 | 59.78 |

| FP16 Seq 384, BS2 | 24.23 | 2068 | 23.13 | 23.67 | 23.81 | 24.51 | 529.93 | 23.43 | 85.36 |

| FP16 Seq 128, BS2 | 24.69 | 3402 | 15.09 | 16.21 | 16.35 | 17.29 | 25.46 | 14.52 | 137.76 |

| FP16 Seq 128, BS1 | 26.4 | 1800 | 15.55 | 16.66 | 16.86 | 17.59 | 25.35 | 14.67 | 68.19 |

| FP16 Seq 128, BS4 | 29.18 | 7128 | 16.12 | 16.68 | 17.02 | 17.7 | 556.13 | 16.37 | 244.31 |

| FP32 Seq 128, BS1 | 34.04 | 1800 | 20.79 | 22.08 | 22.47 | 22.97 | 26.48 | 18.91 | 52.88 |

| FP32 Seq 128, BS2 | 38.72 | 3402 | 22.92 | 23.79 | 23.98 | 24.58 | 27.02 | 22.76 | 87.87 |

| FP16 Seq 384, BS4 | 40.08 | 4128 | 38.55 | 39.09 | 39.32 | 40.93 | 530.08 | 38.84 | 102.99 |

| FP32 Seq 384, BS1 | 41.99 | 1042 | 42.3 | 43.79 | 43.93 | 44.19 | 45.8 | 40.3 | 24.81 |

| FP16 Seq 128, BS8 | 66.94 | 16040 | 23.06 | 23.75 | 24.01 | 24.61 | 543.94 | 23.16 | 345.5 |

| FP32 Seq 384, BS2 | 74.73 | 2068 | 73.25 | 74.51 | 74.71 | 75.09 | 89.2 | 72.27 | 27.67 |

| FP16 Seq 128, BS8 | 76.66 | 8432 | 72.3 | 74.22 | 74.63 | 75.63 | 628.88 | 72.73 | 109.99 |

| FP32 Seq 128, BS4 | 83.22 | 7128 | 47.45 | 48.84 | 48.99 | 49.25 | 207.56 | 46.7 | 86.66 |

| FP32 Seq 384, BS4 | 160.17 | 4128 | 158.24 | 160.38 | 160.38 | 160.82 | 161.59 | 176.45 | 25.77 |

| FP32 Seq 128, BS8 | 171.83 | 16040 | 86.26 | 87.56 | 87.72 | 88.32 | 97.13 | 85.7 | 93.35 |

| FP32 Seq 128, BS8 | 300.44 | 8432 | 287.05 | 288.46 | 288.78 | 290.09 | 493.14 | 285.05 | 28.07 |

Assuming you're using the NGC BERT for TensorFlow container, run the following command.

scripts/finetune_inference_benchmark.sh large squadSystem Specifications:

| System | Exxact Valence Workstation |

| GPU | 4 x NVIDIA Quadro RTX 6000 |

| CPU | Intel CORE I7-7820X 3.6GHZ |

| RAM | 64GB DDR4 |

| SSD | 480 GB SSD |

| HDD (data) | 10 TB HDD |

| OS | Ubuntu 18.04 |

| NVIDIA DRIVER | 435.21 |

| CUDA Version | 10.1 |

| Python | 2.7/3.6 |

| TensorFlow | 1.14 |

| Container (using NVIDIA Docker) | TensorFlow 19.08-py3+ NGC container |

Additional GPU Benchmarks

- NVIDIA Quadro RTX 8000 BERT Large GPU Fine Tuning Benchmarks in TensorFlow (NLP)

- RTX 2080 Ti Deep Learning Benchmarks for TensorFlow (image classification)

- TITAN RTX Deep Learning Benchmarks for Tensorflow (image classification)

- NVIDIA Quadro RTX 6000 GPU Benchmarks for TensorFlow (image classification)

- Quadro RTX 8000 Deep Learning Benchmarks for TensorFlow (image classification)

Appendix/Additional settings

NOTE: these will change with each run depending on batch size, sequence length, etc.

***** Configuaration *****

I1212 17:24:48.136919 139750589261632 run_squad.py:950] logtostderr: False

I1212 17:24:48.136960 139750589261632 run_squad.py:950] alsologtostderr: False

I1212 17:24:48.137000 139750589261632 run_squad.py:950] log_dir:

I1212 17:24:48.137040 139750589261632 run_squad.py:950] v: 0

I1212 17:24:48.137079 139750589261632 run_squad.py:950] verbosity: 0

I1212 17:24:48.137117 139750589261632 run_squad.py:950] stderrthreshold: fatal

I1212 17:24:48.137156 139750589261632 run_squad.py:950] showcodefixforinfo: True

I1212 17:24:48.137195 139750589261632 run_squad.py:950] run_with_pdb: False

I1212 17:24:48.137233 139750589261632 run_squad.py:950] pdb_post_mortem: False

I1212 17:24:48.137271 139750589261632 run_squad.py:950] run_with_profiling: False

I1212 17:24:48.137310 139750589261632 run_squad.py:950] profile_file: None

I1212 17:24:48.137349 139750589261632 run_squad.py:950] use_cprofile_for_profiling: True

I1212 17:24:48.137388 139750589261632 run_squad.py:950] only_check_args: False

I1212 17:24:48.137426 139750589261632 run_squad.py:950] op_conversion_fallback_to_while_loop: False

I1212 17:24:48.137465 139750589261632 run_squad.py:950] test_random_seed: 301

I1212 17:24:48.137504 139750589261632 run_squad.py:950] test_srcdir:

I1212 17:24:48.137542 139750589261632 run_squad.py:950] test_tmpdir: /tmp/absl_testing

I1212 17:24:48.137581 139750589261632 run_squad.py:950] test_randomize_ordering_seed: None

I1212 17:24:48.137620 139750589261632 run_squad.py:950] xml_output_file:

I1212 17:24:48.137658 139750589261632 run_squad.py:950] bert_config_file: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/bert_config.json

I1212 17:24:48.137696 139750589261632 run_squad.py:950] vocab_file: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/vocab.txt

I1212 17:24:48.137734 139750589261632 run_squad.py:950] output_dir: /results/bert_large_gpu_1_sl_128_codec_fp16_bs_1

I1212 17:24:48.137772 139750589261632 run_squad.py:950] train_file: data/download/squad/v1.1/train-v1.1.json

I1212 17:24:48.137810 139750589261632 run_squad.py:950] codedict_file: None

I1212 17:24:48.137849 139750589261632 run_squad.py:950] init_checkpoint: data/download/google_codetrained_weights/uncased_L-24_H-1024_A-16/bert_model.ckpt

I1212 17:24:48.137887 139750589261632 run_squad.py:950] do_lower_case: True

I1212 17:24:48.137926 139750589261632 run_squad.py:950] max_seq_length: 128

I1212 17:24:48.137964 139750589261632 run_squad.py:950] doc_stride: 64

I1212 17:24:48.138002 139750589261632 run_squad.py:950] max_query_length: 64

I1212 17:24:48.138040 139750589261632 run_squad.py:950] do_train: True

I1212 17:24:48.138079 139750589261632 run_squad.py:950] do_codedict: False

I1212 17:24:48.138117 139750589261632 run_squad.py:950] train_batch_size: 1

I1212 17:24:48.138156 139750589261632 run_squad.py:950] codedict_batch_size: 8

I1212 17:24:48.138199 139750589261632 run_squad.py:950] learning_rate: 5e-06

I1212 17:24:48.138237 139750589261632 run_squad.py:950] use_trt: False

I1212 17:24:48.138276 139750589261632 run_squad.py:950] horovod: False

I1212 17:24:48.138315 139750589261632 run_squad.py:950] num_train_epochs: 2.0

I1212 17:24:48.138354 139750589261632 run_squad.py:950] warmup_proportion: 0.1

I1212 17:24:48.138392 139750589261632 run_squad.py:950] save_checkpoints_steps: 1000

I1212 17:24:48.138430 139750589261632 run_squad.py:950] iterations_per_loop: 1000

I1212 17:24:48.138469 139750589261632 run_squad.py:950] num_accumulation_steps: 1

I1212 17:24:48.138506 139750589261632 run_squad.py:950] n_best_size: 20

I1212 17:24:48.138545 139750589261632 run_squad.py:950] max_answer_length: 30

I1212 17:24:48.138583 139750589261632 run_squad.py:950] verbose_logging: False

I1212 17:24:48.138622 139750589261632 run_squad.py:950] version_2_with_negative: False

I1212 17:24:48.138660 139750589261632 run_squad.py:950] null_score_diff_threshold: 0.0

I1212 17:24:48.138699 139750589261632 run_squad.py:950] use_fp16: True

I1212 17:24:48.138737 139750589261632 run_squad.py:950] use_xla: True

I1212 17:24:48.138775 139750589261632 run_squad.py:950] num_eval_iterations: None

I1212 17:24:48.138813 139750589261632 run_squad.py:950] export_trtis: False

I1212 17:24:48.138851 139750589261632 run_squad.py:950] trtis_model_name: bert

I1212 17:24:48.138890 139750589261632 run_squad.py:950] trtis_model_version: 1

I1212 17:24:48.138928 139750589261632 run_squad.py:950] trtis_server_url: localhost:8001

I1212 17:24:48.138966 139750589261632 run_squad.py:950] trtis_model_overwrite: False

I1212 17:24:48.139004 139750589261632 run_squad.py:950] trtis_max_batch_size: 8

I1212 17:24:48.139043 139750589261632 run_squad.py:950] trtis_dyn_batching_delay: 0.0

I1212 17:24:48.139081 139750589261632 run_squad.py:950] trtis_engine_count: 1

I1212 17:24:48.139120 139750589261632 run_squad.py:950] ?: False

I1212 17:24:48.139158 139750589261632 run_squad.py:950] help: False

I1212 17:24:48.139196 139750589261632 run_squad.py:950] helpshort: False

I1212 17:24:48.139235 139750589261632 run_squad.py:950] helpfull: False

I1212 17:24:48.139273 139750589261632 run_squad.py:950] helpxml: False

I1212 17:24:48.139307 139750589261632 run_squad.py:951] **************************

xtagstartz/h3xtagstartz/h3xtagstartz/h3

.jpg?format=webp)