NVIDIA A30 Benchmarks

For this blog article, we conducted deep learning performance benchmarks for TensorFlow on NVIDIA A30 GPUs.

Our Deep Learning Server was fitted with eight A30 GPUs, and we ran the standard “tf_cnn_benchmarks.py” benchmark script found in the official TensorFlow GitHub. We tested the following networks: ResNet50, ResNet152, Inception v3, and GoogLeNet. We ran each test in 1-, 2-, 4-, and 8-GPU configurations, with a batch size of 128 for FP32 and 256 for FP16.
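The article does not list the exact command lines used, so the following is only a sketch of how the test matrix described above could be generated. It assumes the commonly documented `tf_cnn_benchmarks.py` flags (`--model`, `--num_gpus`, `--batch_size`, `--use_fp16`), assumes the script's own model names (`inception3` for Inception v3), and assumes the stated batch sizes are per GPU, which is how the script interprets `--batch_size`. The helper names `build_command` and `all_commands` are hypothetical.

```python
import itertools

# Model names as tf_cnn_benchmarks.py spells them (assumed mapping from the article's names).
MODELS = ["resnet50", "resnet152", "inception3", "googlenet"]
GPU_COUNTS = [1, 2, 4, 8]
# Batch sizes from the article; assumed to be per GPU.
BATCH_SIZE = {"fp32": 128, "fp16": 256}


def build_command(model, num_gpus, precision):
    """Return an argv list for one benchmark run (hypothetical helper)."""
    cmd = [
        "python",
        "tf_cnn_benchmarks.py",
        f"--model={model}",
        f"--num_gpus={num_gpus}",
        f"--batch_size={BATCH_SIZE[precision]}",
    ]
    if precision == "fp16":
        # FP16 runs add the half-precision switch; FP32 runs use the defaults.
        cmd.append("--use_fp16=True")
    return cmd


def all_commands():
    """Every precision x model x GPU-count combination described in the article."""
    return [
        build_command(model, num_gpus, precision)
        for precision, model, num_gpus in itertools.product(BATCH_SIZE, MODELS, GPU_COUNTS)
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so this works without GPUs.
    for cmd in all_commands():
        print(" ".join(cmd))
```

Printing rather than executing keeps the sketch safe to run anywhere; on a real system each list could be passed to `subprocess.run` from the directory that contains the script.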

Key Points and Observations

- The NVIDIA A30 exhibits near-linear scaling up to 8 GPUs: in the tables below, 8-GPU throughput is roughly 7.2x to 8.1x the single-GPU figure.

- The NVIDIA A30 is a well-rounded GPU for most deep learning applications.

- If you do not need the full compute power of the A100, consider the A30 as an option.

- Since the A30 is FP64 capable, it may also be well suited for other HPC applications.

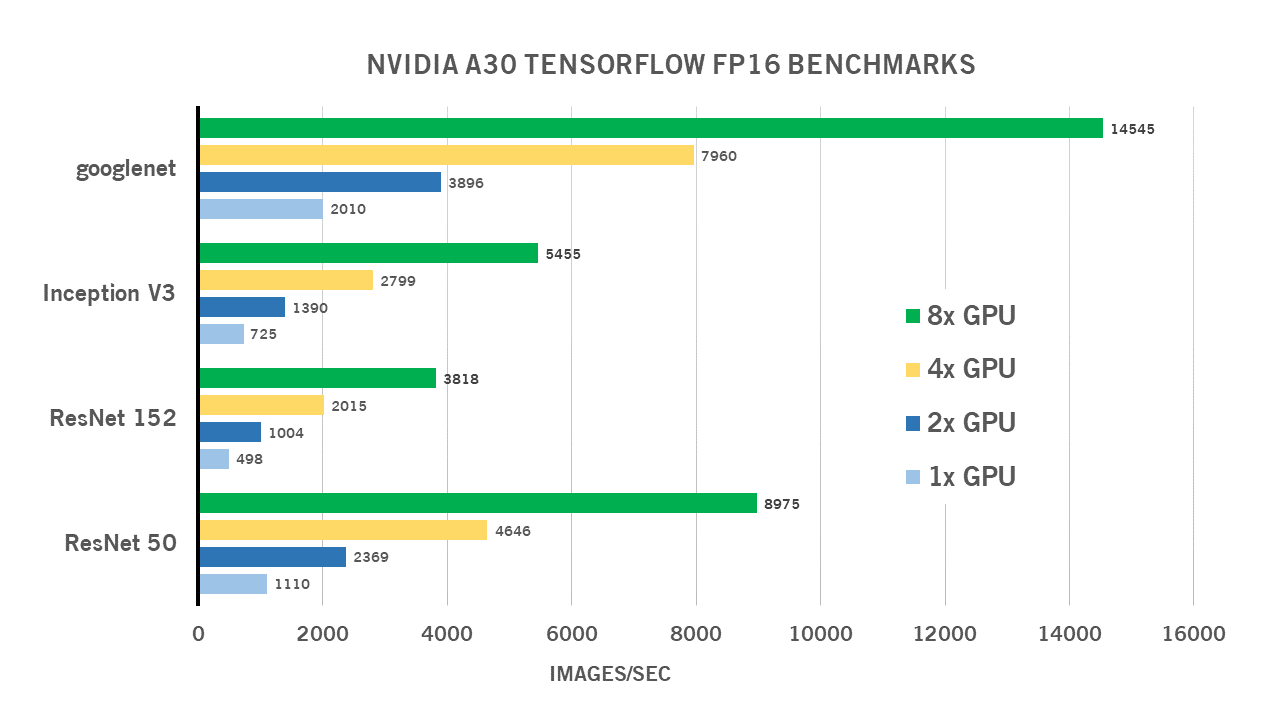

NVIDIA A30 TensorFlow FP16 Benchmarks

| Network | 1x GPU | 2x GPU | 4x GPU | 8x GPU |
|---|---|---|---|---|
| ResNet 50 | 1,110 | 2,369 | 4,646 | 8,975 |
| ResNet 152 | 498 | 1,004 | 2,015 | 3,818 |
| Inception V3 | 725 | 1,390 | 2,799 | 5,455 |
| GoogLeNet | 2,010 | 3,896 | 7,960 | 14,545 |

Batch size: 256 for all FP16 tests. Throughput is in images/sec, the metric tf_cnn_benchmarks.py reports.

NVIDIA A30 TensorFlow FP32 Benchmarks

| Network | 1x GPU | 2x GPU | 4x GPU | 8x GPU |
|---|---|---|---|---|
| ResNet 50 | 463 | 915 | 1,787 | 3,458 |
| ResNet 152 | 206 | 402 | 786 | 1,509 |
| Inception V3 | 319 | 636 | 1,244 | 2,395 |
| GoogLeNet | 1,060 | 2,100 | 3,983 | 7,690 |

Batch size: 128 for all FP32 tests. Throughput is in images/sec.
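To put a number on the near-linear scaling observation, scaling efficiency can be computed from the two tables above as throughput at n GPUs divided by n times the single-GPU throughput. The sketch below does only that arithmetic; the figures are copied from the tables, and the helper name `scaling_efficiency` is hypothetical.

```python
GPU_COUNTS = (1, 2, 4, 8)

# Throughput values copied from the FP16 and FP32 tables above.
FP16 = {
    "ResNet 50": (1110, 2369, 4646, 8975),
    "ResNet 152": (498, 1004, 2015, 3818),
    "Inception V3": (725, 1390, 2799, 5455),
    "GoogLeNet": (2010, 3896, 7960, 14545),
}
FP32 = {
    "ResNet 50": (463, 915, 1787, 3458),
    "ResNet 152": (206, 402, 786, 1509),
    "Inception V3": (319, 636, 1244, 2395),
    "GoogLeNet": (1060, 2100, 3983, 7690),
}


def scaling_efficiency(throughputs, gpu_counts=GPU_COUNTS):
    """Efficiency at n GPUs = throughput(n) / (n * throughput(1))."""
    base = throughputs[0]
    return [t / (n * base) for t, n in zip(throughputs, gpu_counts)]


if __name__ == "__main__":
    for label, table in (("FP16", FP16), ("FP32", FP32)):
        for model, values in table.items():
            row = " ".join(f"{e:.0%}" for e in scaling_efficiency(values))
            print(f"{label} {model}: {row}")
```

Every configuration stays above 90% efficiency at 8 GPUs. A few ResNet 50 FP16 entries come out slightly above 100%, which most likely reflects run-to-run variation in the single-GPU baseline rather than genuinely superlinear scaling.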

More About NVIDIA A30's Features

- NVIDIA A30 Tensor Cores with TensorFloat-32 (TF32) provide up to 10X higher performance over the NVIDIA T4 with zero code changes and an additional 2X boost with automatic mixed precision and FP16, delivering a combined 20X throughput increase. When combined with NVIDIA® NVLink®, PCIe Gen4, NVIDIA networking, and the NVIDIA Magnum IO™ SDK, it’s possible to scale to thousands of GPUs.

- Tensor Cores and Multi-Instance GPU (MIG) enable the A30 to be used for workloads dynamically throughout the day. It can be used for production inference at peak demand, and part of the GPU can be repurposed to rapidly re-train those very same models during off-peak hours.

- The NVIDIA A30 GPU delivers a versatile platform for mainstream enterprise workloads, like AI inference, training, and HPC. With TF32 and FP64 Tensor Core support, as well as an end-to-end software and hardware solution stack, A30 ensures that mainstream AI training and HPC applications can be rapidly addressed.
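As a rough sanity check on the mixed-precision claim in the first bullet, the single-GPU FP16 and FP32 figures from the benchmark tables above can be compared directly. This is not a controlled comparison: the FP16 runs used a batch size of 256 and the FP32 runs 128, and the article does not say whether TF32 was active during the FP32 runs. The helper name `fp16_speedup` is hypothetical.

```python
# Single-GPU throughput from the benchmark tables above: (FP16, FP32).
SINGLE_GPU = {
    "ResNet 50": (1110, 463),
    "ResNet 152": (498, 206),
    "Inception V3": (725, 319),
    "GoogLeNet": (2010, 1060),
}


def fp16_speedup(fp16, fp32):
    """Ratio of FP16 throughput to FP32 throughput for one network."""
    return fp16 / fp32


if __name__ == "__main__":
    for model, (fp16, fp32) in SINGLE_GPU.items():
        print(f"{model}: {fp16_speedup(fp16, fp32):.2f}x")
```

On these numbers the FP16 runs come out roughly 1.9x to 2.4x faster than the FP32 runs on a single GPU, which is in the same range as the 2X figure quoted for automatic mixed precision.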


NVIDIA A30 Deep Learning Benchmarks for TensorFlow

NVIDIA A30 Benchmarks

For this blog article, we conducted deep learning performance benchmarks for TensorFlow on NVIDIA A30 GPUs.

Our Deep Learning Server was fitted with eight A30 GPUs and we ran the standard “tf_cnn_benchmarks.py” benchmark script found in the official TensorFlow github. We tested on the following networks: ResNet50, ResNet152, Inception v3, and Googlenet. Furthermore, we ran the same tests using 1, 2, 4, and 8 GPU configurations with a batch size of 128 for FP32 and 256 for FP16.

Key Points and Observations

- The NVIDIA A30 exhibits near linear scaling up to 8 GPUs

- The NVIDIA A30 is a well rounded GPU for most deep learning applications.

- For those not needing the full compute power of A100, you should consider the A30 as an option.

- Since the A30 is FP64 capable, it may also be well suited for other HPC applications.

Interested in getting faster results?

Learn more about Exxact deep learning workstations starting at $3,700

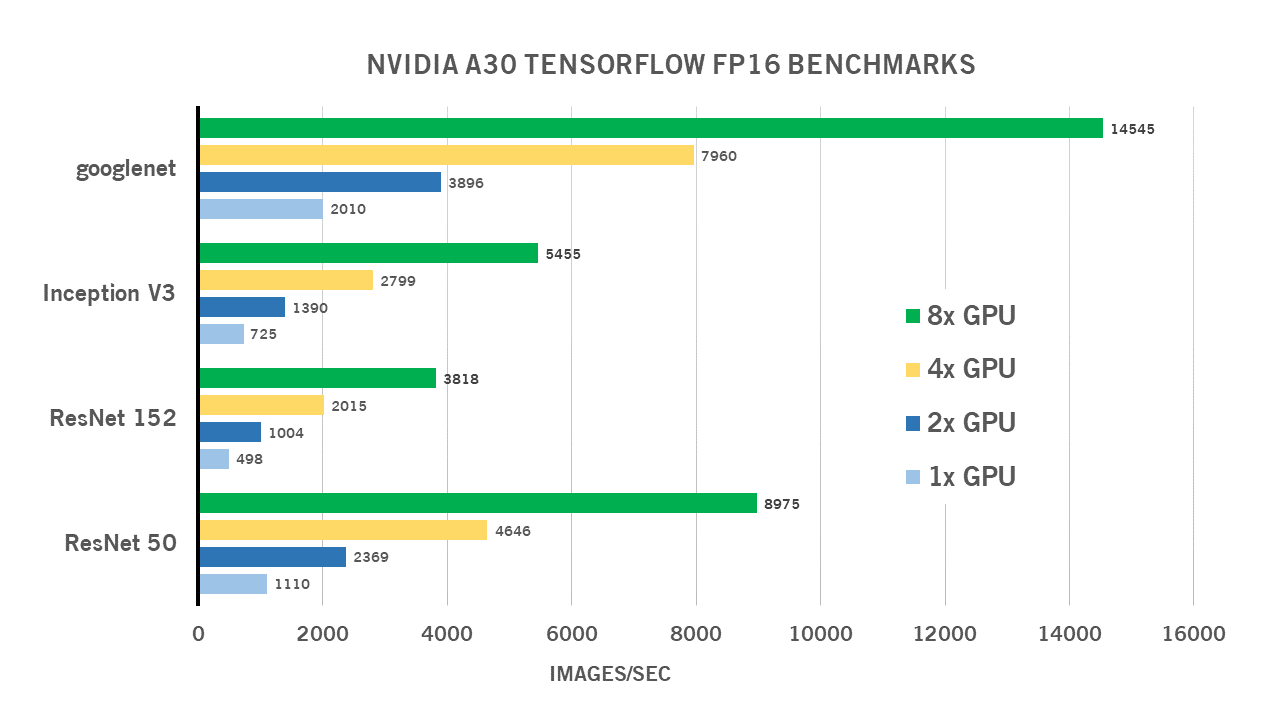

NVIDIA A30 TensorFlow FP 16 Benchmarks

| 1x GPU | 2x GPU | 4x GPU | 8x GPU | |

| ResNet 50 | 1,110 | 2,369 | 4,646 | 8,975 |

| ResNet 152 | 498 | 1,004 | 2,015 | 3,818 |

| Inception V3 | 725 | 1,390 | 2,799 | 5,455 |

| googlenet | 2,010 | 3,896 | 7,960 | 14,545 |

Batch Size 256 for all FP16 tests.

NVIDIA A30 TensorFlow FP 32 Benchmarks

| 1x GPU | 2x GPU | 4x GPU | 8x GPU | |

| ResNet 50 | 463 | 915 | 1,787 | 3,458 |

| ResNet 152 | 206 | 402 | 786 | 1,509 |

| Inception V3 | 319 | 636 | 1,244 | 2,395 |

| googlenet | 1,060 | 2,100 | 3,983 | 7,690 |

Batch Size 128 for all FP32 tests.

More About NVIDIA A30's Features

- NVIDIA A30 Tensor Cores with Tensor Float (TF32) provide up to 10X higher performance over the NVIDIA T4 with zero code changes and an additional 2X boost with automatic mixed precision and FP16, delivering a combined 20X throughput increase. When combined with NVIDIA® NVLink®, PCIe Gen4, NVIDIA networking, and the NVIDIA Magnum IO™ SDK, it’s possible to scale to thousands of GPUs.

- Tensor Cores and MIG enable A30 to be used for workloads dynamically throughout the day. It can be used for production inference at peak demand, and part of the GPU can be repurposed to rapidly re-train those very same models during off-peak hours.

- The NVIDIA A30 GPU delivers a versatile platform for mainstream enterprise workloads, like AI inference, training, and HPC. With TF32 and FP64 Tensor Core support, as well as an end-to-end software and hardware solution stack, A30 ensures that mainstream AI training and HPC applications can be rapidly addressed.

Have any questions about NVIDIA GPUs or AI workstations and servers?

Contact Exxact Today