While all eyes are on the newer NVIDIA GeForce RTX Series graphics cards, we decided to benchmark one of our TITAN Workstations featuring NVIDIA TITAN V graphics cards. The TITAN V, powered by the NVIDIA Volta architecture, is a battle-tested workhorse for Deep Learning and High Performance Computing (HPC) workloads. This blog focuses on TensorFlow benchmarks for Deep Learning, so without further ado, let's dig right into the numbers.

Exxact TITAN Workstation System Specs

| Component | Specification |
|---|---|
| CPU | 2 x Intel Xeon Gold 6148 2.4GHz CPU |
| RAM | 192GB DDR4-2666 |
| SSD | 1x480GB SSD |
| GPU | 1, 2, 4x NVIDIA TITAN V 12GB |
| OS | Ubuntu Server 16.04 |
| Driver | NVIDIA version 396.44 |
| CUDA | CUDA Toolkit 9.2 |
| Python | v 2.7, pip v8, Anaconda |
| TensorFlow | 1.12 |

Titan V Benchmarks Snapshot: Nasnet, Inception V3, ResNet-50

Exxact Titan V Benchmarks: 1, 2, and 4 GPU Configurations

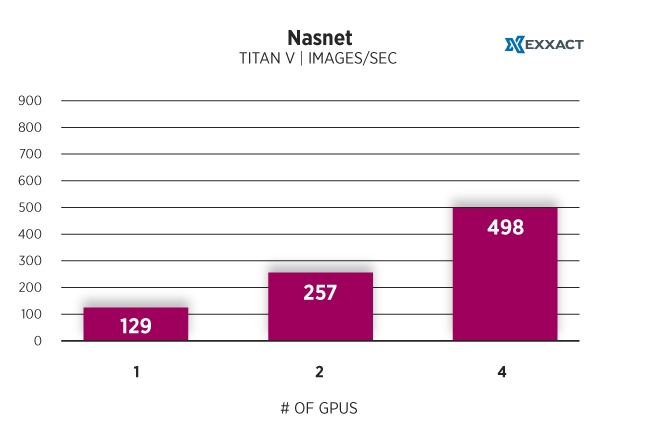

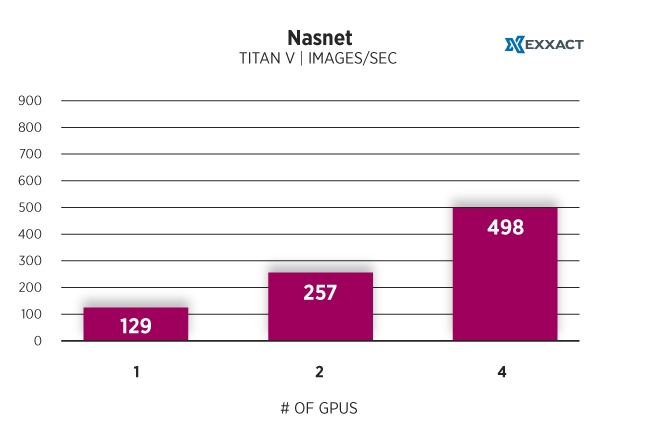

Nasnet Images/Sec (Real Data)

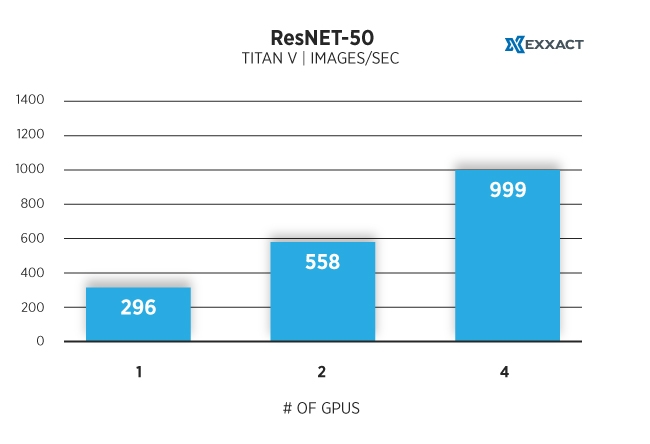

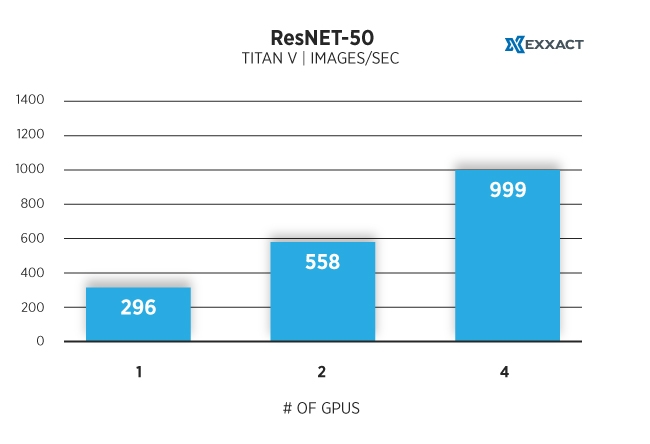

ResNet-50 Images/Sec (Synthetic Data)

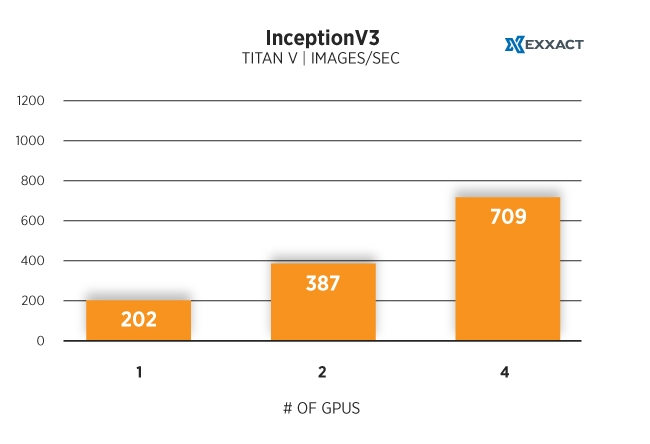

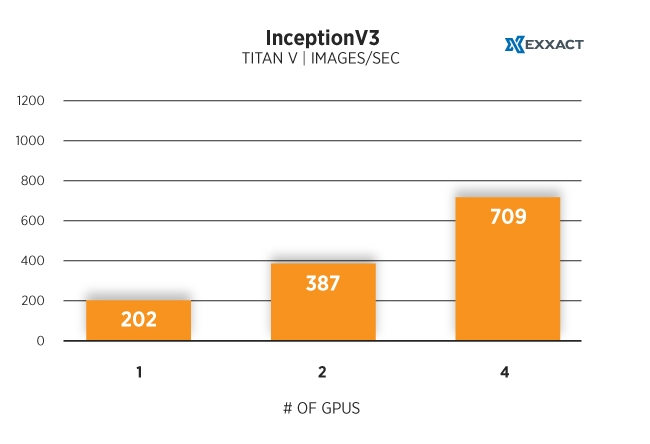

Inception V3 Images/Sec (Synthetic Data)

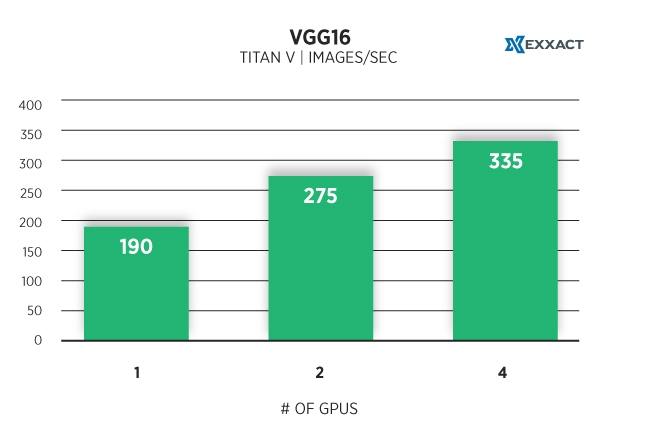

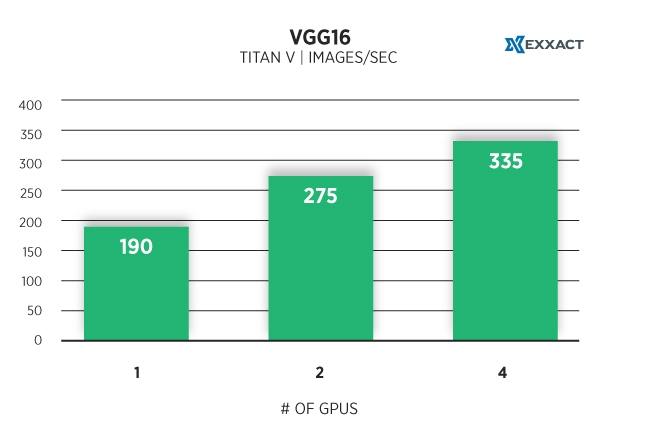

VGG16 Images/Sec (Synthetic Data)

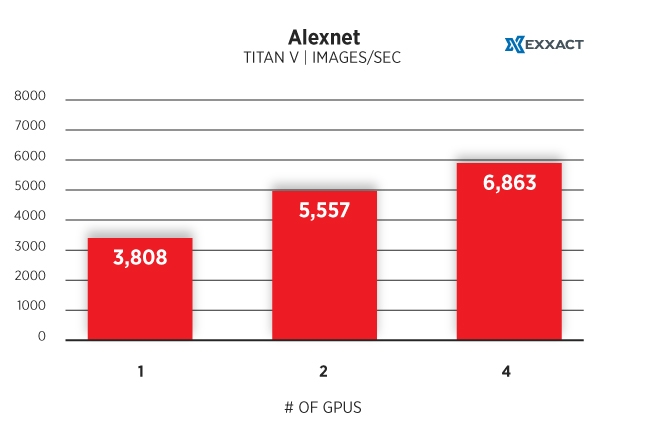

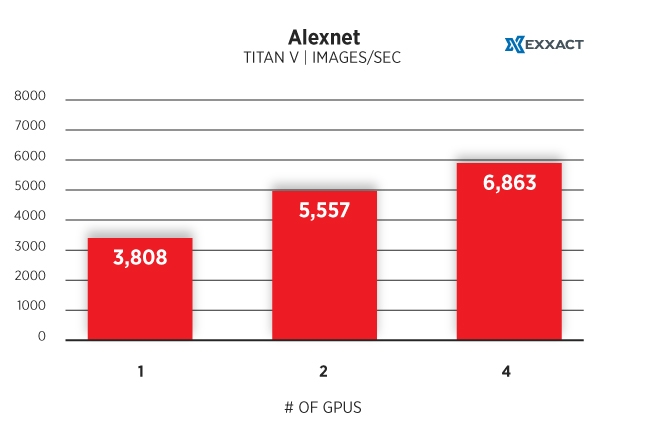

Alexnet Images/Sec (Synthetic Data)

Titan V Benchmarks Methodology

The test configuration was unchanged from beginning to end, except for the number of GPUs used in each benchmark run. All models were measured with synthetic data except Nasnet, which used real data: JPEG images downloaded from the internet. The archive was a 20GB file containing JPEG images of 7 different species of flowers.

The image-retrain function within TensorFlow was used to import the real data into the Nasnet model. The models that used synthetic data were all measured as-is, with no modifications made during the testing cycle. The experiments were run using the python-pip package within the Anaconda runtime, as prescribed in the TensorFlow installation documentation.

We downloaded the benchmark scripts, the pre-constructed models, and their accompanying datasets (except for the Nasnet model, which was populated with custom image data). The specific command for each scenario is listed alongside its benchmark results below. In each case, the only parameters that changed from run to run were --num_gpus and --model. All other parameters were unchanged throughout these experiments. A batch size of 64 was used for all training.
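The run matrix described in this section can be sketched as a small Python helper that builds one command per model and GPU count. This is only an illustration, not the script used for these measurements. The function name `build_commands` is hypothetical, and the model identifiers other than `nasnet` (`resnet50`, `inception3`, `vgg16`, `alexnet`) are assumed spellings that should be checked against the benchmark scripts before use.

```python
from itertools import product

# Script path and flags copied from the commands shown in this post.
SCRIPT = "benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py"
BATCH_SIZE = 64          # fixed for every run
GPU_COUNTS = (1, 2, 4)   # the only hardware variable

# Only "nasnet" appears in this post; the other names are assumptions.
MODELS = ("nasnet", "resnet50", "inception3", "vgg16", "alexnet")


def build_commands(models=MODELS, gpu_counts=GPU_COUNTS, batch_size=BATCH_SIZE):
    """Return one command string per (model, GPU count) combination."""
    template = "python {script} --num_gpus={gpus} --model {model} --batch_size {batch}"
    return [
        template.format(script=SCRIPT, gpus=gpus, model=model, batch=batch_size)
        for model, gpus in product(models, gpu_counts)
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    for command in build_commands():
        print(command)
```

The helper uses `str.format` rather than f-strings so that it also runs under the Python 2.7 environment listed in the system specs.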

Titan V Benchmarks Commands and Output for Nasnet Training

Running warm up

Done warm up

Step Img/sec total_loss

1 images/sec: 129.5 +/- 0.0 (jitter = 0.0) 7.410

10 images/sec: 129.7 +/- 0.3 (jitter = 0.7) 7.582

20 images/sec: 129.4 +/- 0.2 (jitter = 0.9) 7.550

30 images/sec: 129.3 +/- 0.2 (jitter = 0.9) 7.497

40 images/sec: 129.3 +/- 0.2 (jitter = 1.0) 7.563

50 images/sec: 129.3 +/- 0.1 (jitter = 1.1) 7.413

60 images/sec: 129.3 +/- 0.1 (jitter = 1.0) 7.364

70 images/sec: 129.3 +/- 0.1 (jitter = 1.0) 7.574

80 images/sec: 129.3 +/- 0.1 (jitter = 0.9) 7.345

90 images/sec: 129.3 +/- 0.1 (jitter = 0.7) 7.375

100 images/sec: 129.3 +/- 0.1 (jitter = 0.6) 7.428

----------------------------------------------------------------

total images/sec: 129.26

----------------------------------------------------------------

| python benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=1 --model nasnet --batch_size 64 |
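For readers who want to post-process output like the above, here is a minimal Python sketch that extracts the per-step readings and the final total from the log text. The function name `parse_benchmark_log` and the dictionary keys are hypothetical. In particular, calling the `+/-` value "uncertainty" is our own label, not a term taken from the benchmark scripts. The patterns are written against the line format shown in this post and may need adjusting for other versions of the scripts.

```python
import re

# Matches per-step lines such as:
#   "10 images/sec: 129.7 +/- 0.3 (jitter = 0.7) 7.582"
STEP_RE = re.compile(
    r"^(?P<step>\d+)\s+images/sec:\s+(?P<rate>[\d.]+)\s+\+/-\s+(?P<err>[\d.]+)"
    r"\s+\(jitter = (?P<jitter>[\d.]+)\)\s+(?P<loss>[\d.]+)$"
)
# Matches the summary line: "total images/sec: 129.26"
TOTAL_RE = re.compile(r"^total images/sec:\s+(?P<total>[\d.]+)$")


def parse_benchmark_log(text):
    """Return (steps, total) parsed from benchmark output text."""
    steps, total = [], None
    for raw in text.splitlines():
        line = raw.strip()
        match = STEP_RE.match(line)
        if match:
            steps.append({
                "step": int(match.group("step")),
                "images_per_sec": float(match.group("rate")),
                "uncertainty": float(match.group("err")),
                "jitter": float(match.group("jitter")),
                "total_loss": float(match.group("loss")),
            })
            continue
        match = TOTAL_RE.match(line)
        if match:
            total = float(match.group("total"))
    return steps, total


# A shortened excerpt of the 1-GPU output shown above.
SAMPLE = """\
Step Img/sec total_loss
1 images/sec: 129.5 +/- 0.0 (jitter = 0.0) 7.410
10 images/sec: 129.7 +/- 0.3 (jitter = 0.7) 7.582
total images/sec: 129.26
"""

steps, total = parse_benchmark_log(SAMPLE)
print(len(steps), steps[-1]["images_per_sec"], total)  # prints: 2 129.7 129.26
```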

Running warm up

Done warm up

Step Img/sec total_loss

1 images/sec: 254.3 +/- 0.0 (jitter = 0.0) 7.495

10 images/sec: 257.3 +/- 1.1 (jitter = 2.6) 7.499

20 images/sec: 257.6 +/- 0.9 (jitter = 2.6) 7.424

30 images/sec: 257.9 +/- 0.8 (jitter = 3.7) 7.409

40 images/sec: 257.7 +/- 0.6 (jitter = 3.5) 7.368

50 images/sec: 257.9 +/- 0.6 (jitter = 3.9) 7.414

60 images/sec: 257.5 +/- 0.5 (jitter = 3.9) 7.441

70 images/sec: 257.1 +/- 0.5 (jitter = 3.9) 7.475

80 images/sec: 257.3 +/- 0.4 (jitter = 3.9) 7.386

90 images/sec: 257.4 +/- 0.4 (jitter = 4.2) 7.386

100 images/sec: 257.4 +/- 0.4 (jitter = 4.3) 7.472

----------------------------------------------------------------

total images/sec: 257.29

----------------------------------------------------------------

| python benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=2 --model nasnet --batch_size 64 |

Running warm up

Done warm up

Step Img/sec total_loss

1 images/sec: 479.7 +/- 0.0 (jitter = 0.0) 7.459

10 images/sec: 486.9 +/- 4.6 (jitter = 12.1) 7.486

20 images/sec: 489.4 +/- 3.4 (jitter = 19.4) 7.398

30 images/sec: 492.2 +/- 2.8 (jitter = 17.2) 7.420

40 images/sec: 494.0 +/- 2.3 (jitter = 14.0) 7.400

50 images/sec: 496.2 +/- 1.9 (jitter = 10.5) 7.401

60 images/sec: 496.7 +/- 1.7 (jitter = 10.1) 7.364

70 images/sec: 497.1 +/- 1.5 (jitter = 8.8) 7.388

80 images/sec: 497.4 +/- 1.4 (jitter = 7.8) 7.383

90 images/sec: 498.0 +/- 1.2 (jitter = 8.1) 7.407

100 images/sec: 498.4 +/- 1.2 (jitter = 7.6) 7.396

----------------------------------------------------------------

total images/sec: 498.28

----------------------------------------------------------------

| python benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=4 --model nasnet --batch_size 64 |
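To put the three Nasnet totals in context, the short Python sketch below computes the speedup and scaling efficiency relative to the single-GPU run. The throughput values are the "total images/sec" figures reported above. The function name `scaling_table` is hypothetical, and efficiency is defined here simply as speedup divided by GPU count.

```python
# Final "total images/sec" values reported above for the Nasnet runs.
THROUGHPUT = {1: 129.26, 2: 257.29, 4: 498.28}


def scaling_table(throughput):
    """Return (gpus, speedup, efficiency) rows relative to the 1-GPU run."""
    baseline = throughput[1]
    rows = []
    for gpus in sorted(throughput):
        speedup = throughput[gpus] / baseline
        rows.append((gpus, speedup, speedup / gpus))
    return rows


for gpus, speedup, efficiency in scaling_table(THROUGHPUT):
    print("{} GPU(s): {:.2f}x speedup, {:.1%} efficiency".format(gpus, speedup, efficiency))
```

With these figures, two GPUs deliver roughly a 1.99x speedup (about 99.5% efficiency) and four GPUs roughly 3.85x (about 96.4% efficiency).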

TITAN Workstations from Exxact

Powered by NVIDIA TITAN GPUs, Exxact TITAN Workstations deliver strong computational performance for a wide range of workloads, from Deep Learning to HPC.

Do you have any questions regarding our Titan V Benchmarks? Contact us directly here.

For more GPU benchmarks, please see our other blog posts!

- RTX 2080 Ti Deep Learning Benchmarks for TensorFlow

- TITAN RTX Deep Learning Benchmarks for TensorFlow

- NVIDIA Quadro RTX 6000 GPU Benchmarks for TensorFlow

- Quadro RTX 8000 Deep Learning Benchmarks for TensorFlow

Exxact TITAN V Workstation Crushes Deep Learning Performance Benchmarks for TensorFlow
