MPN: DGXA-2530A+P2EDI00

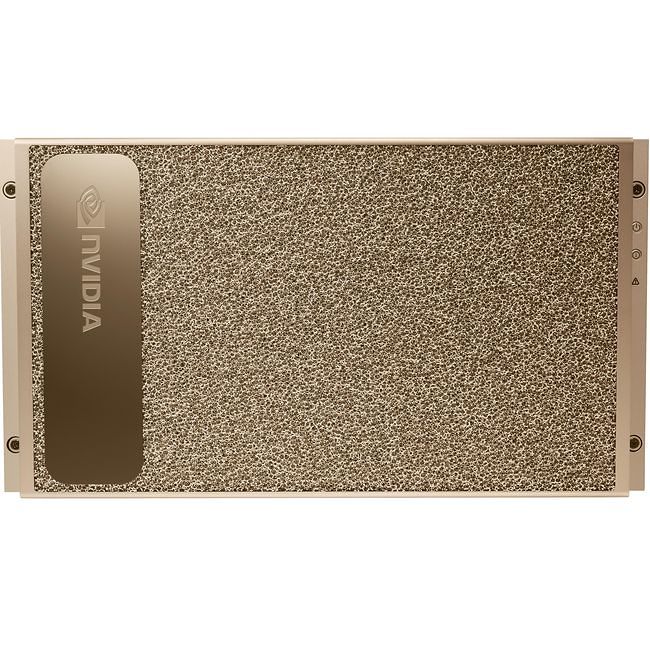

NVIDIA DGX A100 System for EDU (Educational Institutions) - DGXA-2530A+P2EDI00

Highlights

Product Type:

Graphics Computing System

Condition:

New

Category:

Computers and Portables

Subcategory:

Server Systems

Specifications

General Information

Manufacturer

NVIDIA

Manufacturer Part Number

DGXA-2530A+P2EDI00

Manufacturer Website Address

http://www.nvidia.com

Brand Name

NVIDIA

Product Line

DGX

Product Name

DGX A100

Product Type

Graphics Computing System

Processor & Chipset

Number of Processors Installed

2

Processor Manufacturer

AMD

Processor Type

EPYC

Processor Model

7742

Processor Core

64 Core

Processor Speed

2.25 GHz

GPU

Number of GPUs Installed

8

Number of NVIDIA NVSwitches

6

GPU Manufacturer

NVIDIA

Chipset Line

Tesla

GPU Architecture

Ampere

Chipset Model

A100

GPU Cores (per GPU)

- 432 Tensor Cores

- 6912 CUDA Cores

Total GPU Memory

320 GB (8x Ampere A100 40 GB)

GPU Technology

SXM4 NVLINK
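The system-wide GPU figures follow from the per-GPU values by simple multiplication. The sketch below is illustrative only: it assumes the listed core counts apply to each A100 individually, and the function and variable names are hypothetical, not part of any NVIDIA tool.

```python
# Values taken from the GPU section of this listing.
NUM_GPUS = 8
MEMORY_PER_GPU_GB = 40       # each A100 in this configuration has 40 GB
TENSOR_CORES_PER_GPU = 432   # assumption: the listed count is per GPU
CUDA_CORES_PER_GPU = 6912    # assumption: the listed count is per GPU


def system_gpu_totals(num_gpus, memory_gb, tensor_cores, cuda_cores):
    """Multiply per-GPU figures by the GPU count to get system totals."""
    return {
        "memory_gb": num_gpus * memory_gb,
        "tensor_cores": num_gpus * tensor_cores,
        "cuda_cores": num_gpus * cuda_cores,
    }


totals = system_gpu_totals(
    NUM_GPUS, MEMORY_PER_GPU_GB, TENSOR_CORES_PER_GPU, CUDA_CORES_PER_GPU
)
print(totals)
```

The computed memory total matches the 320 GB stated under Total GPU Memory.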

Memory

Standard Memory

1 TB

Memory Technology

DDR4-3200/PC4-25600

Storage

Total Hard Drive Capacity

- OS: 2x 1.92 TB M.2 NVMe

- Internal Storage: 4x 3.84 TB U.2 NVMe (15.36 TB total, listed as 15 TB)

Storage Type

Solid State Drive

Storage Configuration

RAID 0
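As a rough check on the internal storage figure: a RAID 0 array stripes data across its members without redundancy, so its capacity is the member count times the smallest member. The sketch below assumes the RAID 0 configuration refers to the four U.2 data drives, which this listing does not state explicitly. The helper name is hypothetical.

```python
def raid0_capacity_gb(drive_sizes_gb):
    """Capacity of a RAID 0 stripe set: drive count times the smallest drive."""
    if not drive_sizes_gb:
        raise ValueError("at least one drive is required")
    return len(drive_sizes_gb) * min(drive_sizes_gb)


# Assumption: the four 3.84 TB U.2 NVMe drives form the RAID 0 array.
# Sizes are in decimal gigabytes (1 TB = 1000 GB) to keep the arithmetic exact.
data_drives_gb = [3840, 3840, 3840, 3840]

capacity_gb = raid0_capacity_gb(data_drives_gb)
print(f"Raw RAID 0 capacity: {capacity_gb / 1000:.2f} TB")
```

This is raw capacity before filesystem overhead, and RAID 0 offers no protection against a single drive failure.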

Network & Communication

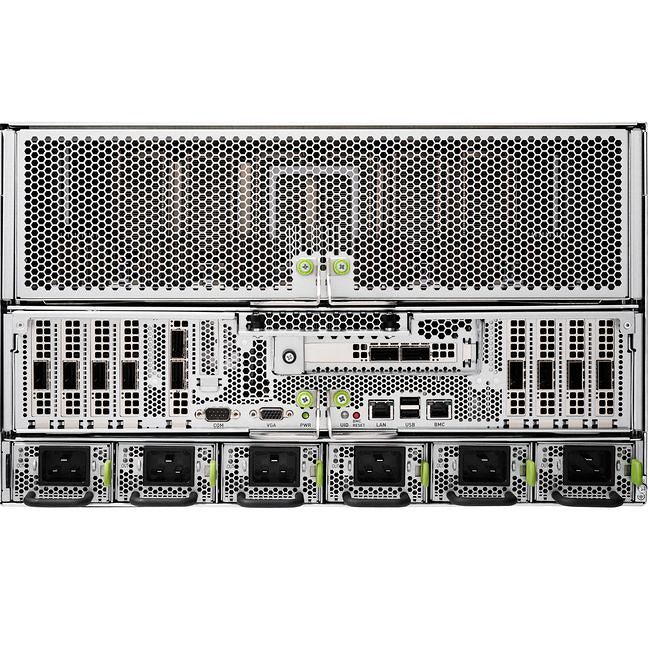

Networking

- 8x Single-Port Mellanox ConnectX-6 VPI 200Gb/s HDR InfiniBand

- 1x Dual-Port Mellanox ConnectX-6 VPI 10/25/50/100/200Gb/s Ethernet

Software

Operating System

Ubuntu Linux OS

Power Description

Maximum Power Consumption

6500 W

Physical Characteristics

Form Factor

Rack

Rack Height

6U

Height

10.4"

Width

19.0"

Depth

35.3"

Weight (Approximate)

271 lbs